Roshan Vijay

Simulation Assessment Guidelines towards Independent Safety Assurance of Autonomous Vehicles

Oct 02, 2023

Abstract: This public guidelines document was developed by the Centre of Excellence for Testing & Research of AVs - NTU (CETRAN) in collaboration with the Land Transport Authority (LTA) of Singapore. It is primarily intended to help developers of Autonomous Vehicles (AVs) in Singapore prepare their software simulations, and it provides recommendations to ensure their readiness for independent assessment of their virtual simulation results under the Milestone-testing framework adopted by the assessor (CETRAN) and the local authority (LTA) in Singapore.

White paper on Selected Environmental Parameters affecting Autonomous Vehicle (AV) Sensors

Sep 06, 2023

Abstract: Autonomous Vehicles (AVs) being developed today rely on various sensor technologies to sense and perceive the world around them. The sensor outputs are then used by the Automated Driving System (ADS) onboard the vehicle to make decisions that affect its trajectory and how it interacts with the physical world. The main sensor technologies used for sensing and perception (S&P) are LiDAR (Light Detection and Ranging), camera, RADAR (Radio Detection and Ranging), and ultrasound. Each environmental parameter affects each sensor's performance differently, thereby affecting the S&P and decision-making (DM) of an AV. In this publication, we explore the effects of different environmental parameters on LiDARs and cameras, and we conduct a study to better understand the impact of several of these parameters on LiDAR performance. Through these experiments, we aim to identify some of the weaknesses and challenges that a LiDAR may face when used on an AV. The findings inform AV regulators in Singapore of the effects of different environmental parameters on AV sensors, so that they can determine testing standards and specifications that more robustly assess the adequacy of LiDAR systems installed for local AV operations. Our approach adopts the LiDAR test methodology first developed in the Urban Mobility Grand Challenge (UMGC-L010) White Paper on LiDAR performance against selected Automotive Paints.

White paper on LiDAR performance against selected Automotive Paints

Sep 04, 2023

Abstract: LiDAR (Light Detection and Ranging) is a useful sensing technique and an important source of data for autonomous vehicles (AVs). In this publication, we present the results of a study undertaken to understand the impact of automotive paint on LiDAR performance, along with the methodology used to conduct it. Our approach evaluates the average reflected intensity output by different LiDAR sensor models when tested against different types of automotive paint. The paints were selected to represent common paints observed on vehicles in Singapore, and the experiments were conducted with LiDAR sensors commonly used by AV developers and OEMs. This stems from a desire to model the real-world performance of actual sensing systems when exposed to the physical world. The goal is to inform AV regulators in Singapore of the impact of automotive paint on LiDAR performance, so that they can determine testing standards and specifications that better reflect real-world performance and better assess the adequacy of LiDAR systems installed for local AV operations. The tests were conducted for combinations of 13 paint panels and 3 LiDAR sensors. In general, darker coloured paints exhibited lower reflected intensity, whereas lighter coloured paints exhibited higher intensity values.
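The averaging step described above can be sketched as follows. This is a minimal illustration, not the study's actual processing pipeline: it assumes each LiDAR return on a paint panel has already been extracted as a hypothetical `(sensor, paint, intensity)` record, and the sensor and paint labels below are placeholders rather than measured data.

```python
from collections import defaultdict
from statistics import mean


def average_intensity(returns):
    """Mean reflected intensity for each (sensor, paint) pair.

    `returns` is an iterable of hypothetical (sensor, paint, intensity)
    records, one per LiDAR point that landed on a paint panel.
    """
    grouped = defaultdict(list)
    for sensor, paint, intensity in returns:
        grouped[(sensor, paint)].append(intensity)
    return {pair: mean(values) for pair, values in grouped.items()}


def rank_paints(averages, sensor):
    """Paints seen by `sensor`, ordered from lowest to highest mean intensity."""
    rows = [(avg, paint) for (s, paint), avg in averages.items() if s == sensor]
    return [paint for avg, paint in sorted(rows)]


# Placeholder records (not measured data): two panels seen by one sensor.
records = [("sensor_A", "black_metallic", 2.0), ("sensor_A", "black_metallic", 4.0),
           ("sensor_A", "white_solid", 10.0)]
averages = average_intensity(records)
print(averages)
print(rank_paints(averages, "sensor_A"))
```

Grouping by the (sensor, paint) pair keeps the per-sensor comparison explicit, since different sensor models may report intensity on different scales.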

CoPEM: Cooperative Perception Error Models for Autonomous Driving

Nov 22, 2022

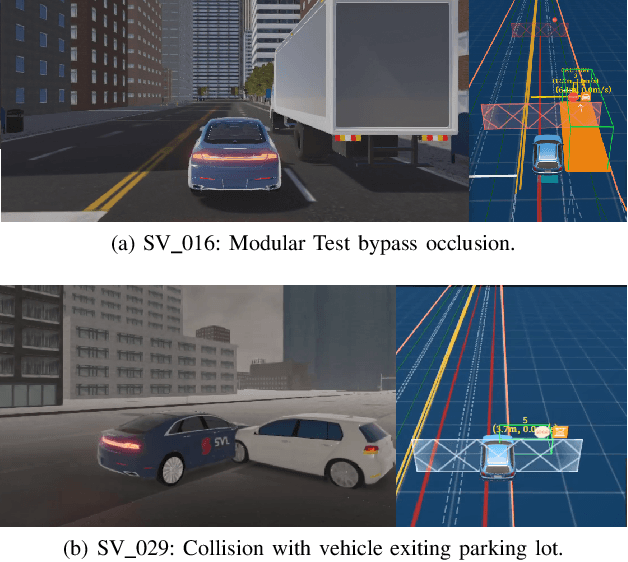

Abstract: In this paper, we introduce the notion of Cooperative Perception Error Models (coPEMs) towards achieving an effective and efficient integration of V2X solutions within a virtual test environment. We focus our analysis on the occlusion problem in the (onboard) perception of Autonomous Vehicles (AV), which can manifest as misdetection errors on occluded objects. Cooperative perception (CP) solutions based on Vehicle-to-Everything (V2X) communications aim to avoid such issues by leveraging additional viewpoints of the world around the AV. This approach usually requires many sensors, mainly cameras and LiDARs, to be deployed simultaneously in the environment, either as part of the road infrastructure or on other traffic vehicles. However, implementing a large number of sensor models in a virtual simulation pipeline is often computationally prohibitive. Therefore, in this paper, we extend Perception Error Models (PEMs) to efficiently implement such cooperative perception solutions along with their associated errors and uncertainties. We demonstrate the approach by comparing the safety achievable by an AV challenged with a traffic scenario in which occlusion is the primary cause of a potential collision.
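To make the PEM idea concrete, here is a minimal sketch of a perception error model and a naive cooperative extension. It is an illustration under simplifying assumptions, not the coPEM formulation from the paper: each perception source (the ego AV or a hypothetical roadside unit) always misses objects it cannot see, detects visible objects with a fixed probability, and adds Gaussian position noise; the cooperative model simply keeps the first detection of each object across sources. All class names, fields and values are hypothetical.

```python
import random
from dataclasses import dataclass


@dataclass
class GroundTruthObject:
    obj_id: int
    x: float
    y: float


@dataclass
class Viewpoint:
    """One perception source: the ego AV or a V2X-connected sensor (hypothetical)."""
    name: str
    p_detect: float          # detection probability for visible objects
    pos_sigma: float         # std. dev. of position error in metres
    occluded_ids: frozenset  # objects this source cannot see


def pem_detect(view, objects, rng):
    """Single-source PEM: sample detections with misdetections and position noise."""
    detections = {}
    for obj in objects:
        if obj.obj_id in view.occluded_ids:
            continue  # occluded objects are always missed
        if rng.random() < view.p_detect:
            detections[obj.obj_id] = (rng.gauss(obj.x, view.pos_sigma),
                                      rng.gauss(obj.y, view.pos_sigma))
    return detections


def copem_detect(views, objects, rng):
    """Naive cooperative PEM: union of per-source detections, first source wins."""
    merged = {}
    for view in views:
        for obj_id, pos in pem_detect(view, objects, rng).items():
            merged.setdefault(obj_id, pos)
    return merged


ego = Viewpoint("ego", p_detect=1.0, pos_sigma=0.0, occluded_ids=frozenset({1}))
rsu = Viewpoint("rsu", p_detect=1.0, pos_sigma=0.0, occluded_ids=frozenset())
objects = [GroundTruthObject(1, 10.0, 2.0), GroundTruthObject(2, 5.0, -1.0)]
rng = random.Random(0)
print(sorted(pem_detect(ego, objects, rng)))          # [2]: ego misses occluded object 1
print(sorted(copem_detect([ego, rsu], objects, rng)))  # [1, 2]: the RSU fills the gap
```

The point of the sketch is cost: sampling detections from a statistical model avoids rendering raw camera or LiDAR data for every extra viewpoint. A realistic model would also include false positives, latency and a proper fusion step.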

Optimal Placement of Roadside Infrastructure Sensors towards Safer Autonomous Vehicle Deployments

Oct 04, 2021

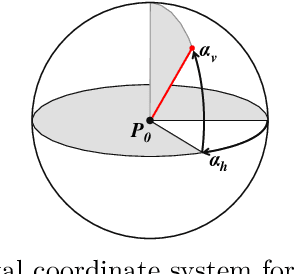

Abstract: Vehicles with driving automation are increasingly being developed for deployment across the world. However, the onboard sensing and perception capabilities of such automated or autonomous vehicles (AV) may not be sufficient to ensure safety under all scenarios and contexts. Infrastructure-augmented environment perception using roadside infrastructure sensors can be considered an effective solution, at least for selected regions of interest such as urban road intersections or curved roads that present occlusions to the AV. However, these sensors incur significant costs for procurement, installation and maintenance. Therefore, they must be placed strategically and optimally to yield maximum benefits in terms of the overall safety of road users. In this paper, we propose a novel methodology for obtaining an optimal placement of V2X (Vehicle-to-everything) infrastructure sensors, which is particularly attractive for urban AV deployments, with various considerations including costs, coverage and redundancy. We combine the latest advances in the raycasting and linear optimization literature to deliver a tool for urban city planners, traffic analysts and AV deployment operators. Through experimental evaluation in representative environments, we demonstrate the benefits and practicality of our approach.
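The combination of raycasting and optimization can be illustrated with a toy sketch. Assumptions: the environment is a hypothetical 2D occupancy grid where `1` marks an obstacle, visibility is approximated by sampling points along each ray, and the placement is solved by exhaustive enumeration of candidate subsets. That enumeration is only feasible for a handful of candidate sites and stands in for the linear optimization used in the paper. The `redundancy` parameter requires each target cell to be seen by at least that many selected sensors.

```python
from itertools import combinations


def visible(grid, src, dst, steps=50):
    """Approximate line of sight on an occupancy grid (1 = obstacle) by ray sampling."""
    (r0, c0), (r1, c1) = src, dst
    for i in range(1, steps):
        t = i / steps
        cell = (round(r0 + t * (r1 - r0)), round(c0 + t * (c1 - c0)))
        if cell not in (src, dst) and grid[cell[0]][cell[1]] == 1:
            return False
    return True


def coverage(grid, candidates, targets, max_range):
    """Map each candidate sensor site to the target cells it can see within range."""
    return {
        site: {t for t in targets
               if (t[0] - site[0]) ** 2 + (t[1] - site[1]) ** 2 <= max_range ** 2
               and visible(grid, site, t)}
        for site in candidates
    }


def min_cost_placement(cov, costs, targets, redundancy=1):
    """Cheapest subset of sites seeing every target at least `redundancy` times.

    Exhaustive search over subsets: exact but exponential, so toy-sized only.
    """
    best, best_cost = None, float("inf")
    sites = list(cov)
    for k in range(1, len(sites) + 1):
        for subset in combinations(sites, k):
            cost = sum(costs[s] for s in subset)
            if cost >= best_cost:
                continue  # cannot improve on the current best
            if all(sum(t in cov[s] for s in subset) >= redundancy for t in targets):
                best, best_cost = subset, cost
    return best, best_cost


grid = [[0, 1, 0, 0]]  # one row; a building occupies column 1
print(coverage(grid, candidates=[(0, 0), (0, 3)], targets=[(0, 2)], max_range=3))

toy_cov = {"A": {1, 2}, "B": {2, 3}, "C": {1, 2, 3}}
toy_costs = {"A": 1, "B": 1, "C": 3}
print(min_cost_placement(toy_cov, toy_costs, targets={1, 2, 3}))
print(min_cost_placement(toy_cov, toy_costs, targets={1, 2, 3}, redundancy=2))
```

In the toy example, two cheap sensors beat one expensive sensor that sees everything, while requiring double coverage forces all three sites to be selected. This is the kind of cost/redundancy trade-off the placement problem has to resolve at scale.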

ViSTA: a Framework for Virtual Scenario-based Testing of Autonomous Vehicles

Sep 07, 2021

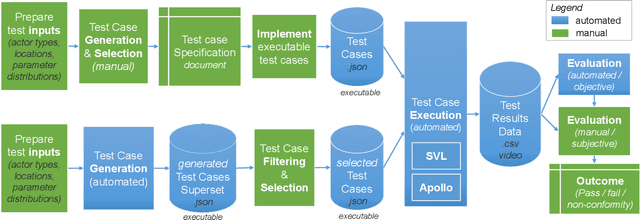

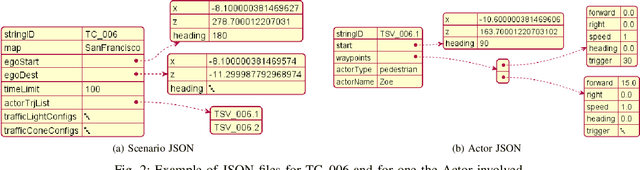

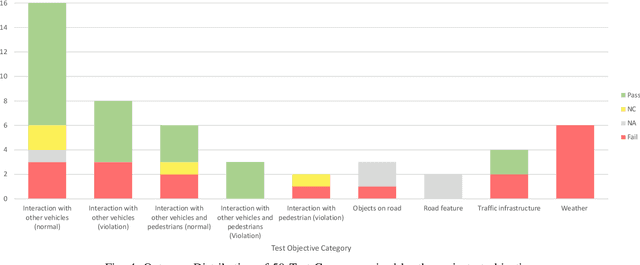

Abstract: In this paper, we present ViSTA, a framework for Virtual Scenario-based Testing of Autonomous Vehicles (AV), developed as part of the 2021 IEEE Autonomous Test Driving AI Test Challenge. Scenario-based virtual testing aims to construct specific challenges for the AV to overcome, albeit in virtual test environments that may not necessarily resemble the real world. This approach aims to identify specific issues that raise safety concerns before the AV is actually deployed on the road. In this paper, we describe a comprehensive test case generation approach that facilitates the design of special-purpose scenarios with meaningful parameters to form test cases, in both automated and manual ways, leveraging the strengths and weaknesses of each. Furthermore, we describe how to automate the execution of test cases and analyze the performance of the AV under them.
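A minimal sketch of the generate-then-execute loop described above. It assumes a scenario is described by a hypothetical dictionary of discrete parameter values, expands it into every combination (the automated route), appends hand-written edge cases (the manual route), and runs each case through a caller-supplied `simulate` function that stands in for the simulator and its pass/fail criterion. None of these names come from ViSTA itself.

```python
from itertools import product


def generate_test_cases(param_space, manual_cases=()):
    """Expand a parameter space into concrete test cases, then append manual ones.

    `param_space` maps a parameter name to the list of values to test.
    """
    names = list(param_space)
    automated = [dict(zip(names, combo))
                 for combo in product(*(param_space[n] for n in names))]
    return automated + [dict(case) for case in manual_cases]


def run_campaign(cases, simulate):
    """Run every case through `simulate(case) -> bool`; return pass rate and failures."""
    failures = [case for case in cases if not simulate(case)]
    return 1 - len(failures) / len(cases), failures


# Hypothetical pedestrian-crossing scenario parameters.
space = {"pedestrian_speed_mps": [1.0, 1.5], "ego_speed_kph": [30, 40]}
cases = generate_test_cases(
    space, manual_cases=[{"pedestrian_speed_mps": 2.0, "ego_speed_kph": 50}])
# Placeholder pass criterion: treat runs at or below 30 km/h as safe.
rate, failed = run_campaign(cases, simulate=lambda case: case["ego_speed_kph"] <= 30)
print(len(cases), rate, len(failed))
```

Exhaustive expansion grows multiplicatively with the number of parameters. This is one reason to complement it with a few targeted manual cases rather than simply refining every range.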
