Jim Cherian

Simulation Assessment Guidelines towards Independent Safety Assurance of Autonomous Vehicles

Oct 02, 2023

Abstract: This Simulation Assessment Guidelines document is a public guidelines document developed by the Centre of Excellence for Testing & Research of AVs - NTU (CETRAN) in collaboration with the Land Transport Authority (LTA) of Singapore. It is primarily intended to help the developers of Autonomous Vehicles (AVs) in Singapore to prepare their software simulations and provide recommendations that can ensure their readiness for independent assessment of their virtual simulation results according to the Milestone-testing framework adopted by the assessor and the local authority in Singapore, namely, CETRAN and LTA respectively.

On the Simulation of Perception Errors in Autonomous Vehicles

Feb 23, 2023

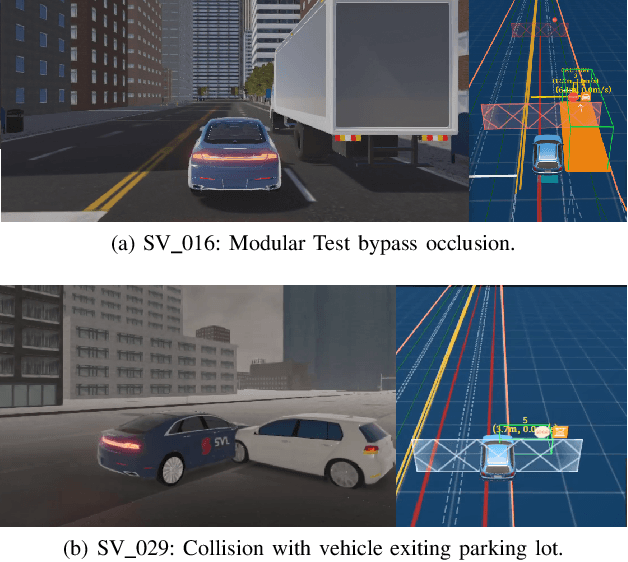

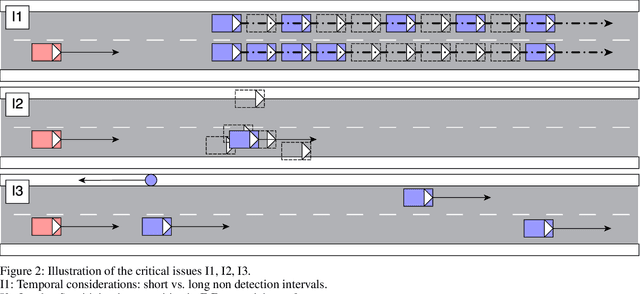

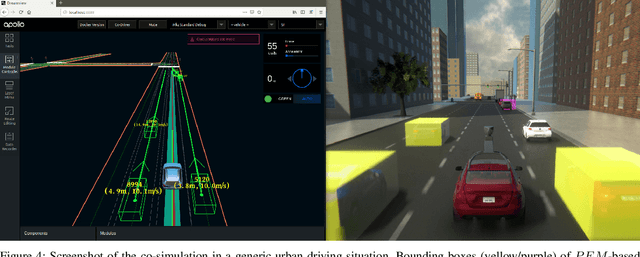

Abstract: Even though virtual testing of Autonomous Vehicles (AVs) has been well recognized as essential for safety assessment, AV simulators are still undergoing active development. One particularly challenging question is to effectively include the Sensing and Perception (S&P) subsystem into the simulation loop. In this article, we define Perception Error Models (PEM), a virtual simulation component that can enable the analysis of the impact of perception errors on AV safety, without the need to model the sensors themselves. We propose a generalized data-driven procedure towards parametric modeling and evaluate it using Apollo, an open-source driving software, and nuScenes, a public AV dataset. Additionally, we implement PEMs in SVL, an open-source vehicle simulator. Furthermore, we demonstrate the usefulness of PEM-based virtual tests, by evaluating camera, LiDAR, and camera-LiDAR setups. Our virtual tests highlight limitations in the current evaluation metrics, and the proposed approach can help study the impact of perception errors on AV safety.

CoPEM: Cooperative Perception Error Models for Autonomous Driving

Nov 22, 2022

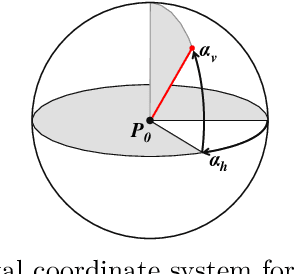

Abstract: In this paper, we introduce the notion of Cooperative Perception Error Models (coPEMs) towards achieving an effective and efficient integration of V2X solutions within a virtual test environment. We focus our analysis on the occlusion problem in the (onboard) perception of Autonomous Vehicles (AV), which can manifest as misdetection errors on the occluded objects. Cooperative perception (CP) solutions based on Vehicle-to-Everything (V2X) communications aim to avoid such issues by cooperatively leveraging additional points of view for the world around the AV. This approach usually requires many sensors, mainly cameras and LiDARs, to be deployed simultaneously in the environment either as part of the road infrastructure or on other traffic vehicles. However, implementing a large number of sensor models in a virtual simulation pipeline is often prohibitively computationally expensive. Therefore, in this paper, we rely on extending Perception Error Models (PEMs) to efficiently implement such cooperative perception solutions along with the errors and uncertainties associated with them. We demonstrate the approach by comparing the safety achievable by an AV challenged with a traffic scenario where occlusion is the primary cause of a potential collision.

Optimal Placement of Roadside Infrastructure Sensors towards Safer Autonomous Vehicle Deployments

Oct 04, 2021

Abstract: Vehicles with driving automation are increasingly being developed for deployment across the world. However, the onboard sensing and perception capabilities of such automated or autonomous vehicles (AV) may not be sufficient to ensure safety under all scenarios and contexts. Infrastructure-augmented environment perception using roadside infrastructure sensors can be considered as an effective solution, at least for selected regions of interest such as urban road intersections or curved roads that present occlusions to the AV. However, they incur significant costs for procurement, installation and maintenance. Therefore, these sensors must be placed strategically and optimally to yield maximum benefits in terms of the overall safety of road users. In this paper, we propose a novel methodology towards obtaining an optimal placement of V2X (Vehicle-to-everything) infrastructure sensors, which is particularly attractive to urban AV deployments, with various considerations including costs, coverage and redundancy. We combine the latest advances made in raycasting and linear optimization literature to deliver a tool for urban city planners, traffic analysis and AV deployment operators. Through experimental evaluation in representative environments, we prove the benefits and practicality of our approach.

ViSTA: a Framework for Virtual Scenario-based Testing of Autonomous Vehicles

Sep 07, 2021

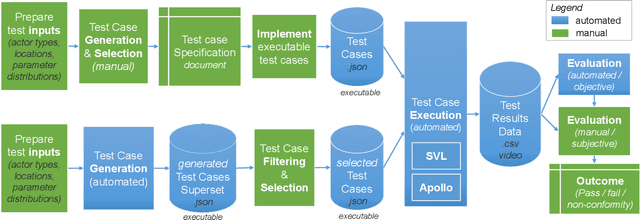

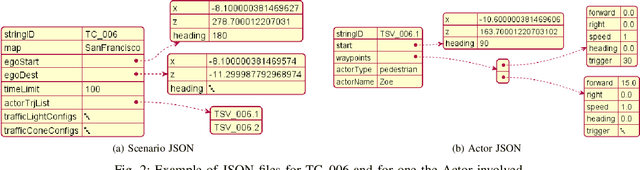

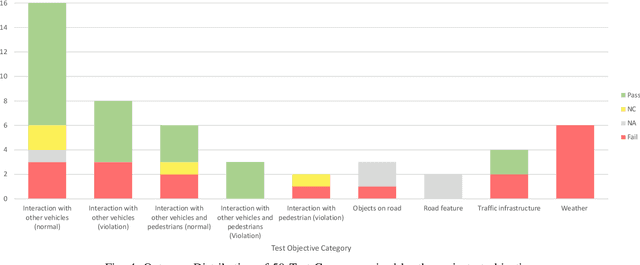

Abstract: In this paper, we present ViSTA, a framework for Virtual Scenario-based Testing of Autonomous Vehicles (AV), developed as part of the 2021 IEEE Autonomous Test Driving AI Test Challenge. Scenario-based virtual testing aims to construct specific challenges posed for the AV to overcome, albeit in virtual test environments that may not necessarily resemble the real world. This approach is aimed at identifying specific issues that raise safety concerns before an actual deployment of the AV on the road. In this paper, we describe a comprehensive test case generation approach that facilitates the design of special-purpose scenarios with meaningful parameters to form test cases, both in automated and manual ways, leveraging the strengths and weaknesses of each. Furthermore, we describe how to automate the execution of test cases, and analyze the performance of the AV under these test cases.

Modeling Sensing and Perception Errors towards Robust Decision Making in Autonomous Vehicles

Jan 31, 2020

Abstract: Sensing and Perception (S&P) is a crucial component of an autonomous system (such as a robot), especially when deployed in highly dynamic environments where it is required to react to unexpected situations. This is particularly true in the case of Autonomous Vehicles (AVs) driving on public roads. However, the current evaluation metrics for perception algorithms are typically designed to measure their accuracy per se and do not account for their impact on the decision-making subsystem(s). This limitation does not help developers and third-party evaluators to answer a critical question: is the performance of a perception subsystem sufficient for the decision-making subsystem to make robust, safe decisions? In this paper, we propose a simulation-based methodology towards answering this question. At the same time, we show how to analyze the impact of different kinds of sensing and perception errors on the behavior of the autonomous system.
