Roman Schutski

When Single Answer Is Not Enough: Rethinking Single-Step Retrosynthesis Benchmarks for LLMs

Feb 03, 2026Abstract:Recent progress has expanded the use of large language models (LLMs) in drug discovery, including synthesis planning. However, objective evaluation of retrosynthesis performance remains limited. Existing benchmarks and metrics typically rely on published synthetic procedures and Top-K accuracy based on single ground-truth, which does not capture the open-ended nature of real-world synthesis planning. We propose a new benchmarking framework for single-step retrosynthesis that evaluates both general-purpose and chemistry-specialized LLMs using ChemCensor, a novel metric for chemical plausibility. By emphasizing plausibility over exact match, this approach better aligns with human synthesis planning practices. We also introduce CREED, a novel dataset comprising millions of ChemCensor-validated reaction records for LLM training, and use it to train a model that improves over the LLM baselines under this benchmark.

BindGPT: A Scalable Framework for 3D Molecular Design via Language Modeling and Reinforcement Learning

Jun 06, 2024

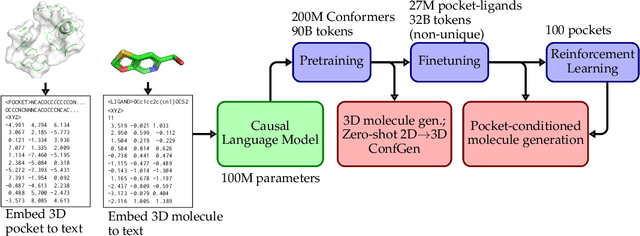

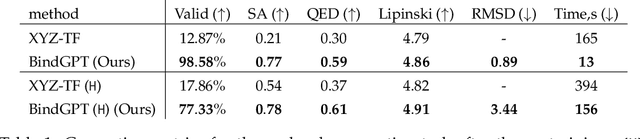

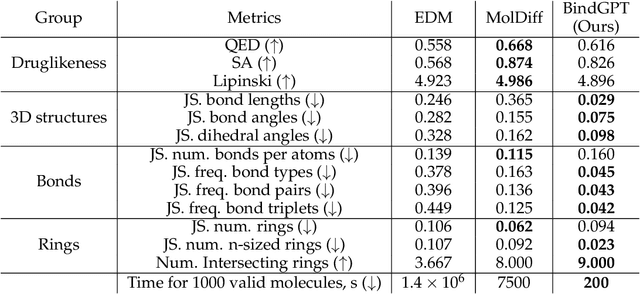

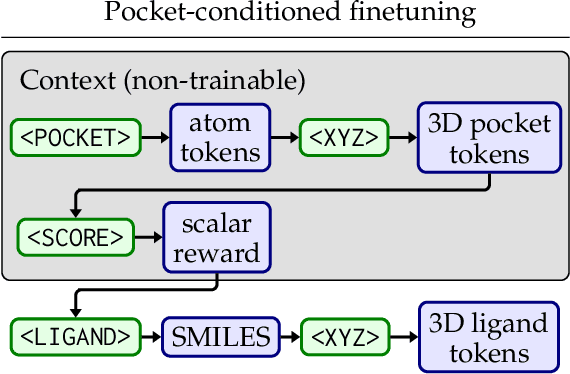

Abstract:Generating novel active molecules for a given protein is an extremely challenging task for generative models that requires an understanding of the complex physical interactions between the molecule and its environment. In this paper, we present a novel generative model, BindGPT which uses a conceptually simple but powerful approach to create 3D molecules within the protein's binding site. Our model produces molecular graphs and conformations jointly, eliminating the need for an extra graph reconstruction step. We pretrain BindGPT on a large-scale dataset and fine-tune it with reinforcement learning using scores from external simulation software. We demonstrate how a single pretrained language model can serve at the same time as a 3D molecular generative model, conformer generator conditioned on the molecular graph, and a pocket-conditioned 3D molecule generator. Notably, the model does not make any representational equivariance assumptions about the domain of generation. We show how such simple conceptual approach combined with pretraining and scaling can perform on par or better than the current best specialized diffusion models, language models, and graph neural networks while being two orders of magnitude cheaper to sample.

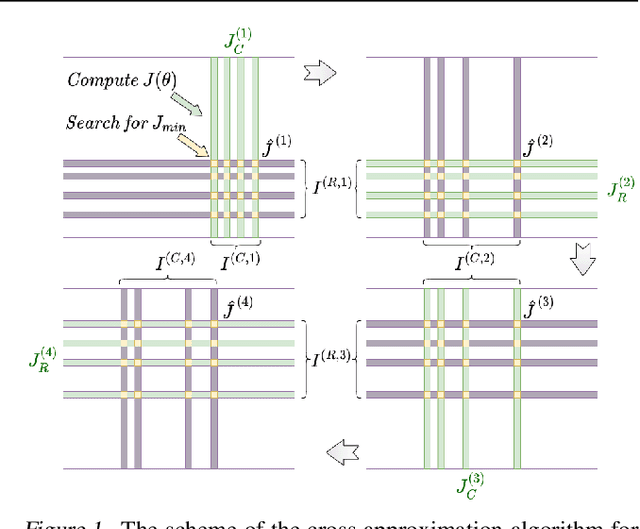

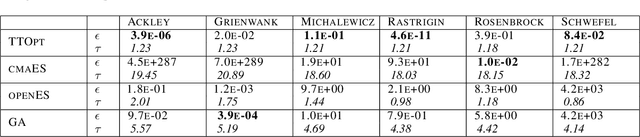

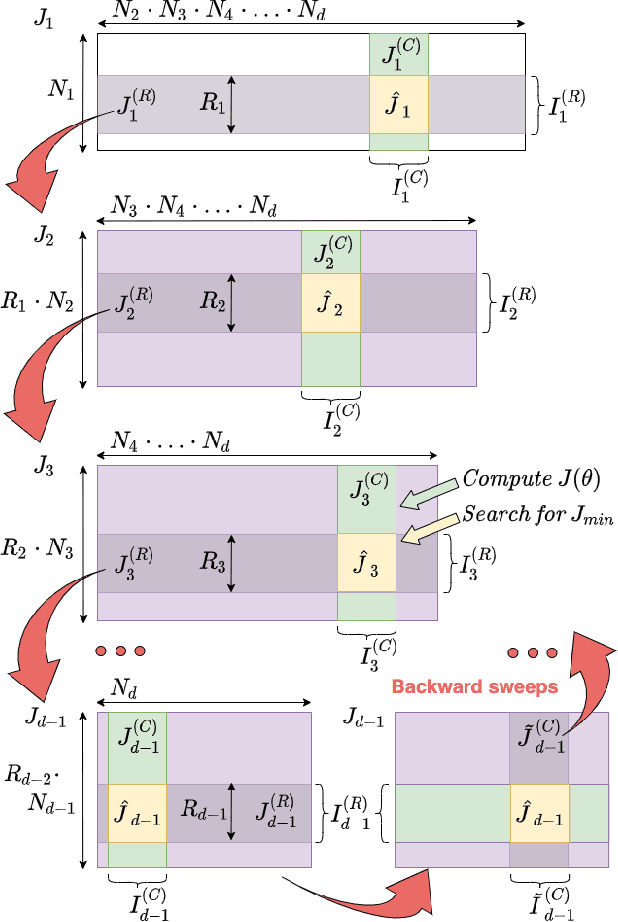

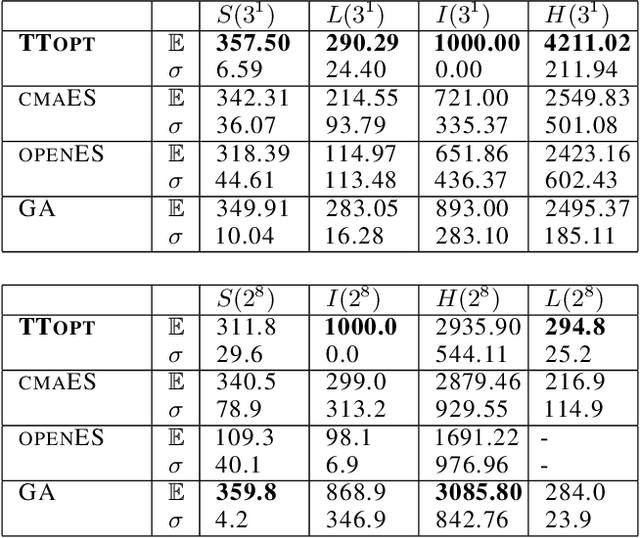

TTOpt: A Maximum Volume Quantized Tensor Train-based Optimization and its Application to Reinforcement Learning

Apr 30, 2022

Abstract:We present a novel procedure for optimization based on the combination of efficient quantized tensor train representation and a generalized maximum matrix volume principle. We demonstrate the applicability of the new Tensor Train Optimizer (TTOpt) method for various tasks, ranging from minimization of multidimensional functions to reinforcement learning. Our algorithm compares favorably to popular evolutionary-based methods and outperforms them by the number of function evaluations or execution time, often by a significant margin.

Graph Convolutional Policy for Solving Tree Decomposition via Reinforcement Learning Heuristics

Oct 18, 2019

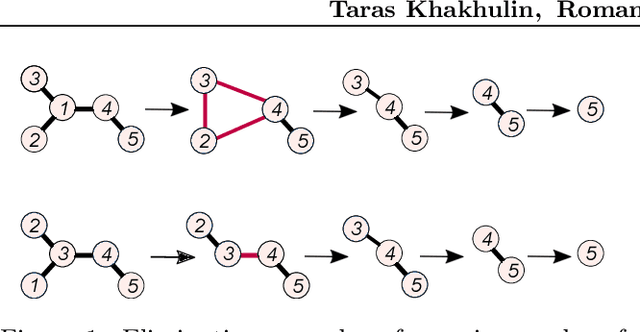

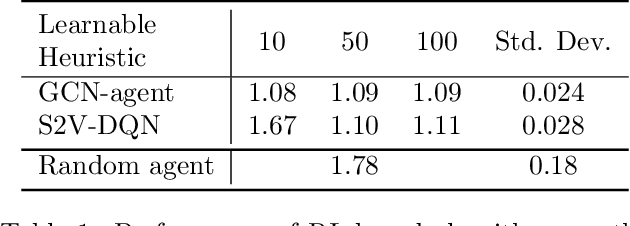

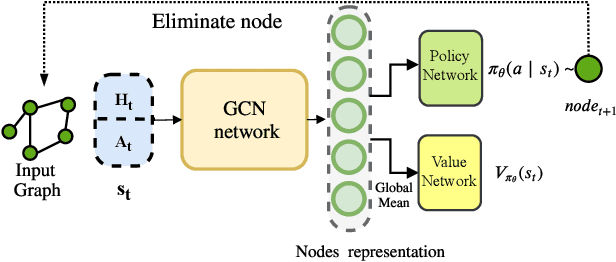

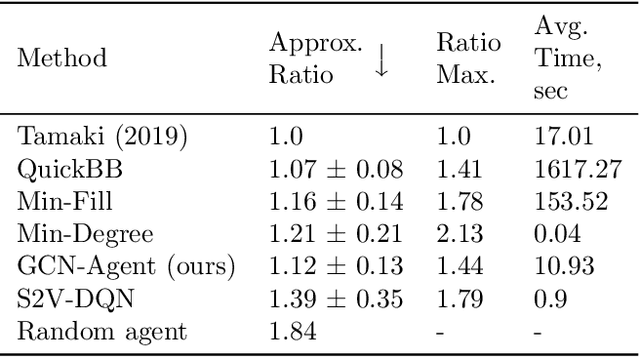

Abstract:We propose a Reinforcement Learning based approach to approximately solve the Tree Decomposition (TD)problem. TD is a combinatorial problem, which is central to the analysis of graph minor structure and computational complexity, as well as in the algorithms of probabilistic inference, register allocation, and other practical tasks. Recently, it has been shown that combinatorial problems can be successively solved by learned heuristics. However, the majority of existing works do not address the question of the generalization of learning-based solutions. Our model is based on the graph convolution neural network (GCN) for learning graph representations. We show that the agent builton GCN and trained on a single graph using an Actor-Critic method can efficiently generalize to real-world TD problem instances. We establish that our method successfully generalizes from small graphs, where TD can be found by exact algorithms, to large instances of practical interest, while still having very low time-to-solution. On the other hand, the agent-based approach surpasses all greedy heuristics by the quality of the solution.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge