Graph Convolutional Policy for Solving Tree Decomposition via Reinforcement Learning Heuristics

Paper and Code

Oct 18, 2019

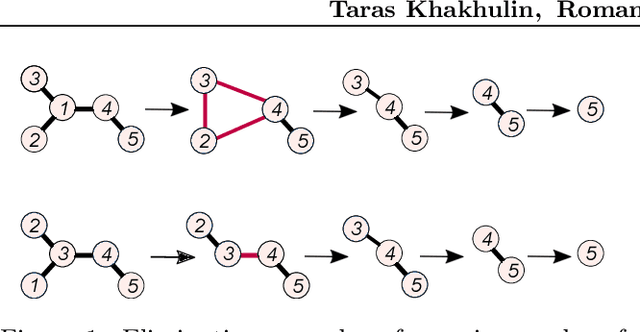

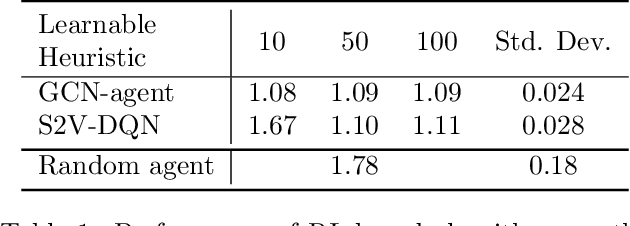

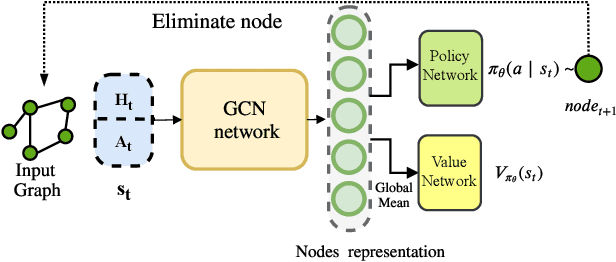

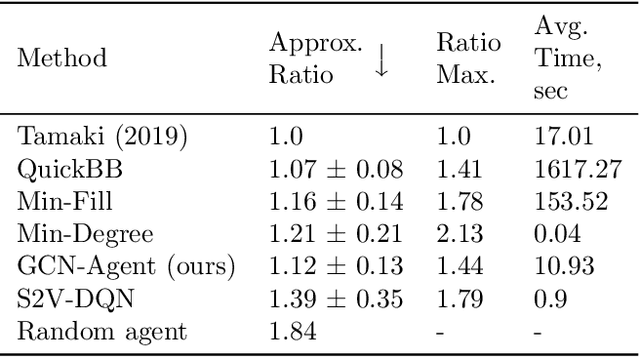

We propose a Reinforcement Learning based approach to approximately solve the Tree Decomposition (TD) problem. TD is a combinatorial problem that is central to the analysis of graph minor structure and computational complexity, as well as to algorithms for probabilistic inference, register allocation, and other practical tasks. Recently, it has been shown that combinatorial problems can be successfully solved by learned heuristics. However, the majority of existing works do not address the question of the generalization of learning-based solutions. Our model is based on a graph convolutional network (GCN) for learning graph representations. We show that an agent built on a GCN and trained on a single graph using an Actor-Critic method can efficiently generalize to real-world TD problem instances. We establish that our method successfully generalizes from small graphs, where TD can be found by exact algorithms, to large instances of practical interest, while still having very low time-to-solution. Moreover, the agent-based approach surpasses all greedy heuristics in solution quality.
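To make the comparison with greedy heuristics concrete, the following is a minimal sketch of one standard greedy baseline, the min-degree elimination heuristic. The abstract does not say which greedy heuristics were used or how the agent selects actions, so the choice of min-degree, the elimination-ordering framing, and the names `Graph` and `min_degree_elimination` are assumptions made for illustration only, not the authors' code.

```python
from typing import Dict, Hashable, List, Set, Tuple

# Hypothetical graph type for this sketch: vertex -> set of neighbours (undirected).
Graph = Dict[Hashable, Set[Hashable]]


def min_degree_elimination(graph: Graph) -> Tuple[List[Hashable], int]:
    """Greedy min-degree heuristic (an assumed baseline, not the paper's agent).

    Returns an elimination order and the width of the tree decomposition
    that this order induces, which is an upper bound on the treewidth.
    """
    # Work on a copy so the caller's graph is not modified.
    adj = {v: set(nbrs) for v, nbrs in graph.items()}
    order: List[Hashable] = []
    width = 0
    while adj:
        # Greedy step: pick the vertex with the fewest remaining neighbours.
        # Ties are broken by repr() only to keep the result deterministic.
        v = min(adj, key=lambda u: (len(adj[u]), repr(u)))
        nbrs = adj.pop(v)
        # The bag for v is {v} plus its neighbours, so its size minus one
        # equals the number of neighbours at elimination time.
        width = max(width, len(nbrs))
        # Eliminating v turns its neighbourhood into a clique.
        for a in nbrs:
            adj[a].discard(v)
            adj[a] |= nbrs - {a}
        order.append(v)
    return order, width


if __name__ == "__main__":
    # A 4-cycle: 0 - 1 - 2 - 3 - 0.
    cycle = {0: {1, 3}, 1: {0, 2}, 2: {1, 3}, 3: {0, 2}}
    print(min_degree_elimination(cycle))
```

Under this framing, a learned policy would replace only the greedy `min(...)` selection with a choice scored by the network, while the elimination step and the width computation stay the same. Whether the paper's agent is structured this way is not stated in the abstract.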
