Roi Livni

CARLoS: Retrieval via Concise Assessment Representation of LoRAs at Scale

Dec 09, 2025

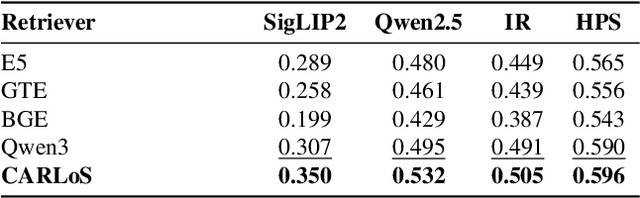

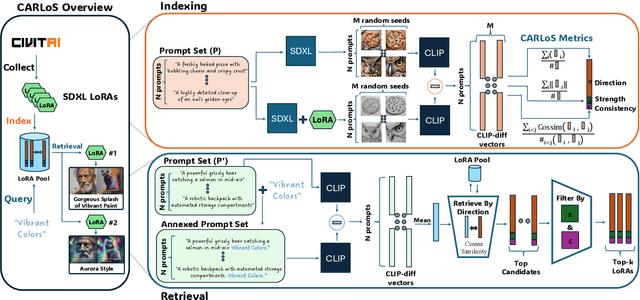

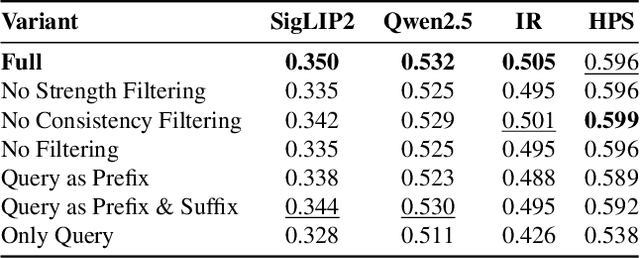

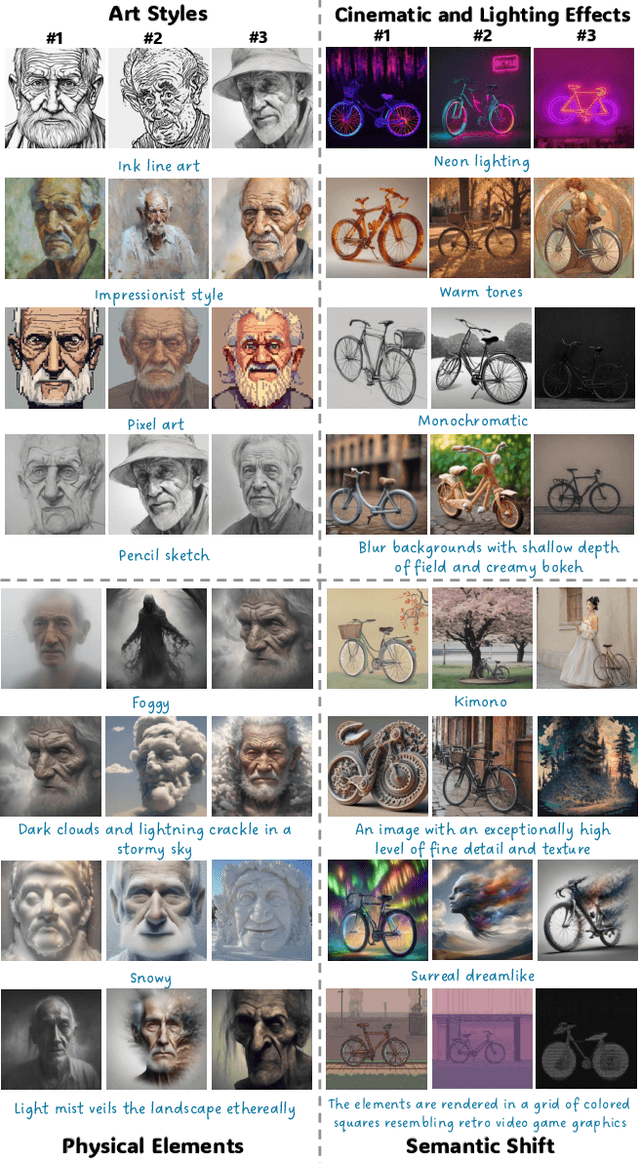

Abstract: The rapid proliferation of generative components, such as LoRAs, has created a vast but unstructured ecosystem. Existing discovery methods depend on unreliable user descriptions or biased popularity metrics, hindering usability. We present CARLoS, a large-scale framework for characterizing LoRAs without requiring additional metadata. Analyzing over 650 LoRAs, we employ them in image generation over a variety of prompts and seeds, as a credible way to assess their behavior. Using CLIP embeddings and their difference to a base-model generation, we concisely define a three-part representation: Directions, defining semantic shift; Strength, quantifying the significance of the effect; and Consistency, quantifying how stable the effect is. Using these representations, we develop an efficient retrieval framework that semantically matches textual queries to relevant LoRAs while filtering overly strong or unstable ones, outperforming textual baselines in automated and human evaluations. While retrieval is our primary focus, the same representation also supports analyses linking Strength and Consistency to legal notions of substantiality and volition, key considerations in copyright, positioning CARLoS as a practical system with broader relevance for LoRA analysis.
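The three-part representation can be illustrated with a minimal sketch. This is not the authors' implementation: the abstract does not give formulas, so the definitions below (mean embedding shift as a single direction, mean shift norm as strength, mean cosine agreement as consistency) are assumptions chosen for illustration, as are the function names `lora_representation` and `retrieve` and the threshold parameters. Embeddings are taken as precomputed NumPy arrays, so no CLIP model is loaded here.

```python
import numpy as np

def _unit(v):
    """Normalize vectors along the last axis (assumes non-zero vectors)."""
    return v / np.linalg.norm(v, axis=-1, keepdims=True)

def lora_representation(lora_emb, base_emb):
    """Summarize a LoRA from paired image embeddings.

    lora_emb, base_emb: arrays of shape (n_samples, dim), embeddings of images
    generated with and without the LoRA for the same prompts and seeds.
    All three definitions are illustrative assumptions, not the paper's.
    """
    diffs = lora_emb - base_emb                       # per-sample shift
    direction = _unit(diffs.mean(axis=0))             # average semantic shift
    strength = float(np.linalg.norm(diffs, axis=1).mean())   # size of the shift
    consistency = float((_unit(diffs) @ direction).mean())   # agreement with the mean
    return direction, strength, consistency

def retrieve(query_emb, reps, max_strength, min_consistency):
    """Rank LoRAs by cosine similarity to a query embedding, dropping
    overly strong or unstable ones (hypothetical thresholds)."""
    q = _unit(query_emb)
    scored = [
        (name, float(d @ q))
        for name, (d, s, c) in reps.items()
        if s <= max_strength and c >= min_consistency
    ]
    return sorted(scored, key=lambda item: item[1], reverse=True)

# Toy usage with hand-made 3-dimensional "embeddings".
base = np.zeros((4, 3))
steady = base + np.array([2.0, 0.0, 0.0])             # same shift every time
noisy = np.array([[0.0, 3.0, 1.0], [0.0, 3.0, -1.0],
                  [0.0, 3.0, 1.0], [0.0, 3.0, -1.0]])  # shift varies per sample
reps = {
    "steady": lora_representation(steady, base),
    "noisy": lora_representation(noisy, base),
}
ranking = retrieve(np.array([1.0, 0.0, 0.0]), reps,
                   max_strength=2.5, min_consistency=0.9)
```

In this toy setup the "noisy" LoRA is filtered out because its strength exceeds the assumed threshold, which mirrors the abstract's idea of excluding overly strong components before ranking.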

Low Resource Reconstruction Attacks Through Benign Prompts

Jul 10, 2025

Abstract: The recent advances in generative models such as diffusion models have raised several risks and concerns related to privacy, copyright infringement and data stewardship. To better understand and control the risks, various researchers have created techniques, experiments and attacks that reconstruct images, or parts of images, from the training set. While these techniques already establish that data from the training set can be reconstructed, they often rely on substantial resources, access to the training set, and carefully engineered prompts. In this work, we devise a new attack that requires low resources, assumes little to no access to the actual training set, and identifies seemingly benign prompts that lead to potentially risky image reconstruction. This highlights the risk that images might be reconstructed unintentionally, even by an uninformed user. For example, we identified that, with regard to one existing model, the prompt "blue Unisex T-Shirt" can generate the face of a real-life human model. Our method builds on an intuition from previous work that leverages domain knowledge, and it identifies a fundamental vulnerability that stems from the use of scraped data from e-commerce platforms, where templated layouts and images are tied to pattern-like prompts.

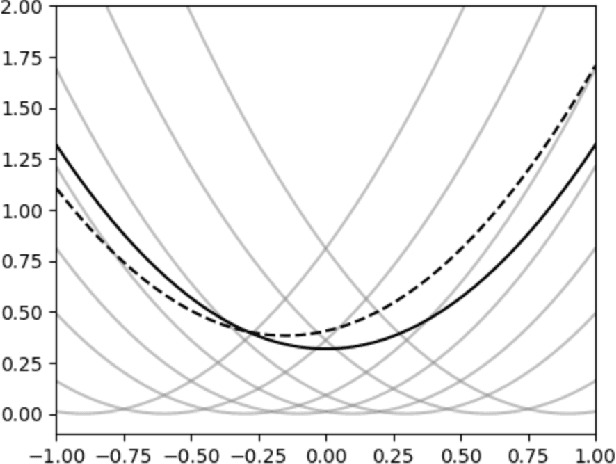

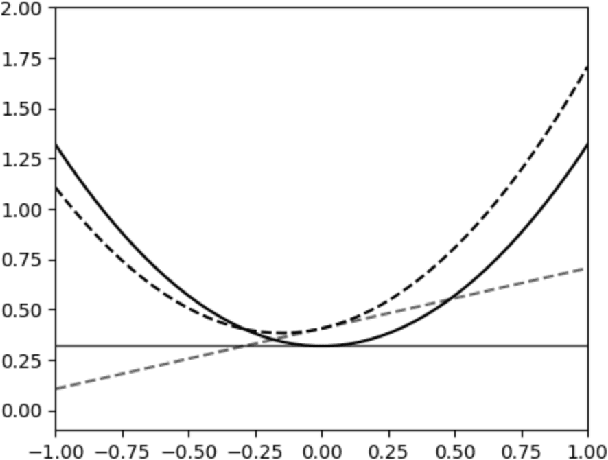

Rapid Overfitting of Multi-Pass Stochastic Gradient Descent in Stochastic Convex Optimization

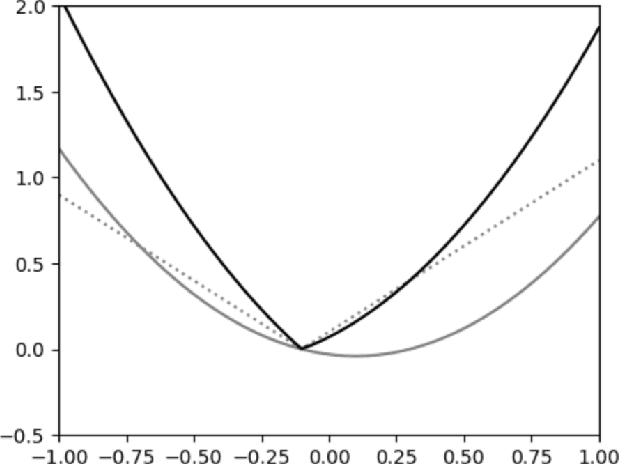

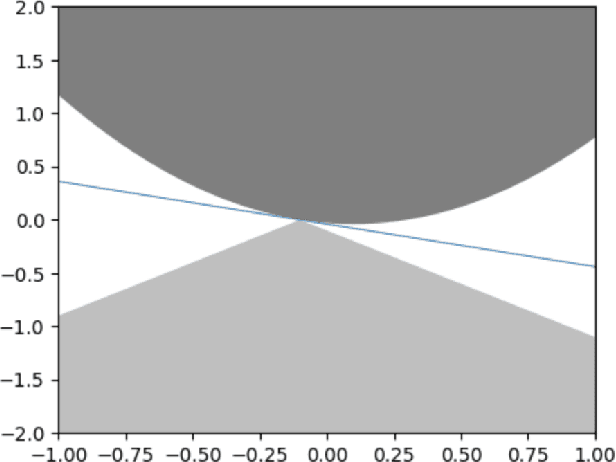

May 13, 2025

Abstract: We study the out-of-sample performance of multi-pass stochastic gradient descent (SGD) in the fundamental stochastic convex optimization (SCO) model. While one-pass SGD is known to achieve an optimal $\Theta(1/\sqrt{n})$ excess population loss given a sample of size $n$, much less is understood about the multi-pass version of the algorithm which is widely used in practice. Somewhat surprisingly, we show that in the general non-smooth case of SCO, just a few epochs of SGD can already hurt its out-of-sample performance significantly and lead to overfitting. In particular, using a step size $\eta = \Theta(1/\sqrt{n})$, which gives the optimal rate after one pass, can lead to population loss as large as $\Omega(1)$ after just one additional pass. More generally, we show that the population loss from the second pass onward is of the order $\Theta(1/(\eta T) + \eta \sqrt{T})$, where $T$ is the total number of steps. These results reveal a certain phase-transition in the out-of-sample behavior of SGD after the first epoch, as well as a sharp separation between the rates of overfitting in the smooth and non-smooth cases of SCO. Additionally, we extend our results to with-replacement SGD, proving that the same asymptotic bounds hold after $O(n \log n)$ steps. Finally, we also prove a lower bound of $\Omega(\eta \sqrt{n})$ on the generalization gap of one-pass SGD in dimension $d = \widetilde{O}(n)$, improving on recent results of Koren et al. (2022) and Schliserman et al. (2024).
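As a quick check that the general rate is consistent with the $\Omega(1)$ claim, one can substitute the one-pass step size into it. This is a worked substitution rather than part of the paper's proof; the constant $c > 0$ is introduced here for illustration. Taking $\eta = c/\sqrt{n}$ and two full passes, $T = 2n$:

$$\frac{1}{\eta T} + \eta\sqrt{T} = \frac{\sqrt{n}}{2cn} + \frac{c\sqrt{2n}}{\sqrt{n}} = \frac{1}{2c\sqrt{n}} + c\sqrt{2} = \Theta(1).$$

The first term vanishes as $n$ grows, while the second stays constant, so the step size that is optimal for one pass no longer yields a vanishing population loss. Reading the stated bound at face value, the two terms balance at $\eta = T^{-3/4}$, where both equal $T^{-1/4}$.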

On the Dichotomy Between Privacy and Traceability in $\ell_p$ Stochastic Convex Optimization

Feb 24, 2025

Abstract: In this paper, we investigate the necessity of memorization in stochastic convex optimization (SCO) under $\ell_p$ geometries. Informally, we say a learning algorithm memorizes $m$ samples (or is $m$-traceable) if, by analyzing its output, it is possible to identify at least $m$ of its training samples. Our main results uncover a fundamental tradeoff between traceability and excess risk in SCO. For every $p\in [1,\infty)$, we establish the existence of a risk threshold below which any sample-efficient learner must memorize a \emph{constant fraction} of its sample. For $p\in [1,2]$, this threshold coincides with the best risk of differentially private (DP) algorithms, i.e., above this threshold, there are algorithms that do not memorize even a single sample. This establishes a sharp dichotomy between privacy and traceability for $p \in [1,2]$. For $p \in (2,\infty)$, this threshold instead gives novel lower bounds for DP learning, partially closing an open problem in this setup. En route to proving these results, we introduce a complexity notion we term the \emph{trace value} of a problem, which unifies privacy lower bounds and traceability results, and prove a sparse variant of the fingerprinting lemma.

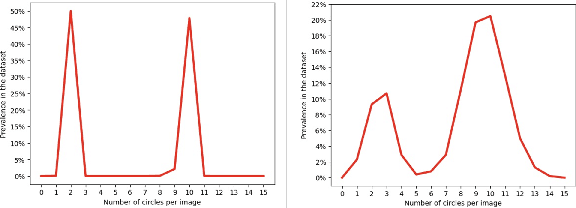

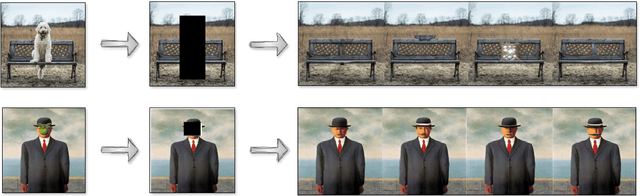

Not Every Image is Worth a Thousand Words: Quantifying Originality in Stable Diffusion

Aug 15, 2024

Abstract: This work addresses the challenge of quantifying originality in text-to-image (T2I) generative diffusion models, with a focus on copyright originality. We begin by evaluating T2I models' ability to innovate and generalize through controlled experiments, revealing that stable diffusion models can effectively recreate unseen elements with sufficiently diverse training data. Then, our key insight is that concepts and combinations of image elements the model is familiar with, and saw more during training, are more concisely represented in the model's latent space. We hence propose a method that leverages textual inversion to measure the originality of an image based on the number of tokens required for its reconstruction by the model. Our approach is inspired by legal definitions of originality and aims to assess whether a model can produce original content without relying on specific prompts or having the training data of the model. We demonstrate our method using both a pre-trained stable diffusion model and a synthetic dataset, showing a correlation between the number of tokens and image originality. This work contributes to the understanding of originality in generative models and has implications for copyright infringement cases.

Credit Attribution and Stable Compression

Jun 22, 2024

Abstract: Credit attribution is crucial across various fields. In academic research, proper citation acknowledges prior work and establishes original contributions. Similarly, in generative models, such as those trained on existing artworks or music, it is important to ensure that any generated content influenced by these works appropriately credits the original creators. We study credit attribution by machine learning algorithms. We propose new definitions--relaxations of Differential Privacy--that weaken the stability guarantees for a designated subset of $k$ datapoints. These $k$ datapoints can be used non-stably with permission from their owners, potentially in exchange for compensation. Meanwhile, the remaining datapoints are guaranteed to have no significant influence on the algorithm's output. Our framework extends well-studied notions of stability, including Differential Privacy ($k = 0$), differentially private learning with public data (where the $k$ public datapoints are fixed in advance), and stable sample compression (where the $k$ datapoints are selected adaptively by the algorithm). We examine the expressive power of these stability notions within the PAC learning framework, provide a comprehensive characterization of learnability for algorithms adhering to these principles, and propose directions and questions for future research.

The Sample Complexity of Gradient Descent in Stochastic Convex Optimization

Apr 11, 2024

Abstract: We analyze the sample complexity of full-batch Gradient Descent (GD) in the setup of non-smooth Stochastic Convex Optimization. We show that the generalization error of GD, with a common choice of hyperparameters, can be $\tilde \Theta(d/m + 1/\sqrt{m})$, where $d$ is the dimension and $m$ is the sample size. This matches the sample complexity of \emph{worst-case} empirical risk minimizers. That means that, in contrast with other algorithms, GD has no advantage over naive ERMs. Our bound follows from a new generalization bound that depends on both the dimension as well as the learning rate and number of iterations. Our bound also shows that, for general hyperparameters, when the dimension is strictly larger than the number of samples, $T=\Omega(1/\epsilon^4)$ iterations are necessary to avoid overfitting. This resolves an open problem by Schliserman et al. '23 and Amir et al. '21, and improves over previous lower bounds that demonstrated that the sample size must be at least the square root of the dimension.
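To see how an error rate of order $d/m + 1/\sqrt{m}$ translates into a sample complexity of order $d/\epsilon + 1/\epsilon^2$, one can solve for $m$ term by term. This is a short worked inversion that ignores constants and logarithmic factors; splitting the target accuracy $\epsilon$ evenly between the two terms is a choice made here for illustration:

$$\frac{d}{m} \le \frac{\epsilon}{2} \iff m \ge \frac{2d}{\epsilon}, \qquad \frac{1}{\sqrt{m}} \le \frac{\epsilon}{2} \iff m \ge \frac{4}{\epsilon^2}.$$

Hence $m \ge \max(2d/\epsilon,\, 4/\epsilon^2)$ guarantees $d/m + 1/\sqrt{m} \le \epsilon$. Conversely, $d/m + 1/\sqrt{m} \le \epsilon$ forces both $m \ge d/\epsilon$ and $m \ge 1/\epsilon^2$, so the two descriptions agree up to constant factors.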

Not All Similarities Are Created Equal: Leveraging Data-Driven Biases to Inform GenAI Copyright Disputes

Mar 26, 2024

Abstract: The advent of Generative Artificial Intelligence (GenAI) models, including GitHub Copilot, OpenAI GPT, and Stable Diffusion, has revolutionized content creation, enabling non-professionals to produce high-quality content across various domains. This transformative technology has led to a surge of synthetic content and sparked legal disputes over copyright infringement. To address these challenges, this paper introduces a novel approach that leverages the learning capacity of GenAI models for copyright legal analysis, demonstrated with GPT2 and Stable Diffusion models. Copyright law distinguishes between original expressions and generic ones (Scènes à faire), protecting the former and permitting reproduction of the latter. However, this distinction has historically been challenging to make consistently, leading to over-protection of copyrighted works. GenAI offers an unprecedented opportunity to enhance this legal analysis by revealing shared patterns in preexisting works. We propose a data-driven approach to identify the genericity of works created by GenAI, employing "data-driven bias" to assess the genericity of expressive compositions. This approach aids in copyright scope determination by utilizing the capabilities of GenAI to identify and prioritize expressive elements and rank them according to their frequency in the model's dataset. The potential implications of measuring expressive genericity for copyright law are profound. Such scoring could assist courts in determining copyright scope during litigation, inform the registration practices of Copyright Offices, allowing registration of only highly original synthetic works, and help copyright owners signal the value of their works and facilitate fairer licensing deals. More generally, this approach offers valuable insights to policymakers grappling with adapting copyright law to the challenges posed by the era of GenAI.

Information Complexity of Stochastic Convex Optimization: Applications to Generalization and Memorization

Feb 14, 2024

Abstract: In this work, we investigate the interplay between memorization and learning in the context of \emph{stochastic convex optimization} (SCO). We define memorization via the information a learning algorithm reveals about its training data points. We then quantify this information using the framework of conditional mutual information (CMI) proposed by Steinke and Zakynthinou (2020). Our main result is a precise characterization of the tradeoff between the accuracy of a learning algorithm and its CMI, answering an open question posed by Livni (2023). We show that, in the $L^2$ Lipschitz--bounded setting and under strong convexity, every learner with an excess error $\varepsilon$ has CMI bounded below by $\Omega(1/\varepsilon^2)$ and $\Omega(1/\varepsilon)$, respectively. We further demonstrate the essential role of memorization in learning problems in SCO by designing an adversary capable of accurately identifying a significant fraction of the training samples in specific SCO problems. Finally, we enumerate several implications of our results, such as a limitation of generalization bounds based on CMI and the incompressibility of samples in SCO problems.

The Sample Complexity Of ERMs In Stochastic Convex Optimization

Nov 09, 2023

Abstract: Stochastic convex optimization is one of the most well-studied models for learning in modern machine learning. Nevertheless, a central fundamental question in this setup remained unresolved: "How many data points must be observed so that any empirical risk minimizer (ERM) shows good performance on the true population?" This question was proposed by Feldman (2016), who proved that $\Omega(\frac{d}{\epsilon}+\frac{1}{\epsilon^2})$ data points are necessary (where $d$ is the dimension and $\epsilon>0$ is the accuracy parameter). Proving an $\omega(\frac{d}{\epsilon}+\frac{1}{\epsilon^2})$ lower bound was left as an open problem. In this work we show that in fact $\tilde{O}(\frac{d}{\epsilon}+\frac{1}{\epsilon^2})$ data points are also sufficient. This settles the question and yields a new separation between ERMs and uniform convergence. This sample complexity holds for the classical setup of learning bounded convex Lipschitz functions over the Euclidean unit ball. We further generalize the result and show that a similar upper bound holds for all symmetric convex bodies. The general bound is composed of two terms: (i) a term of the form $\tilde{O}(\frac{d}{\epsilon})$ with an inverse-linear dependence on the accuracy parameter, and (ii) a term that depends on the statistical complexity of the class of $\textit{linear}$ functions (captured by the Rademacher complexity). The proof builds a mechanism for controlling the behavior of stochastic convex optimization problems.
