Robin R. Murphy

Fellow, IEEE

A Benchmark Dataset for Spatially Aligned Road Damage Assessment in Small Uncrewed Aerial Systems Disaster Imagery

Dec 13, 2025

Abstract:This paper presents the largest known benchmark dataset for road damage assessment and road alignment, and provides 18 baseline models trained on the CRASAR-U-DRIODs dataset's post-disaster small uncrewed aerial systems (sUAS) imagery from 10 federally declared disasters, addressing three challenges within prior post-disaster road damage assessment datasets. While prior disaster road damage assessment datasets exist, there is no current state of practice, as prior public datasets have either been small-scale or reliant on low-resolution imagery insufficient for detecting phenomena of interest to emergency managers. Further, while machine learning (ML) systems have been developed for this task previously, none are known to have been operationally validated. These limitations are overcome in this work through the labeling of 657.25km of roads according to a 10-class labeling schema, followed by training and deploying ML models during the operational response to Hurricanes Debby and Helene in 2024. Motivated by observed road line misalignment in practice, 9,184 road line adjustments were provided for spatial alignment of a priori road lines, as it was found that when the 18 baseline models are deployed against real-world misaligned road lines, model performance degraded on average by 5.596% Macro IoU. If spatial alignment is not considered, approximately 8% (11km) of adverse conditions on road lines will be labeled incorrectly, with approximately 9% (59km) of road lines misaligned off the actual road. These dynamics are gaps that should be addressed by the ML, CV, and robotics communities to enable more effective and informed decision-making during disasters.
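
The Macro IoU figure quoted in this abstract can be read as the unweighted mean of per-class intersection-over-union scores. The sketch below illustrates that general metric only. The function name `macro_iou`, the flat integer label encoding, and the choice to skip classes absent from both label sets are assumptions for illustration, not the paper's evaluation protocol.

```python
from typing import Sequence


def macro_iou(y_true: Sequence[int], y_pred: Sequence[int], num_classes: int) -> float:
    """Unweighted mean of per-class IoU over flat label sequences.

    Assumption: classes that appear in neither y_true nor y_pred are
    skipped rather than counted as 0 or 1.
    """
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")

    ious = []
    for c in range(num_classes):
        # Intersection: positions where both label sets say class c.
        inter = sum(1 for t, p in zip(y_true, y_pred) if t == c and p == c)
        # Union: positions where either label set says class c.
        union = sum(1 for t, p in zip(y_true, y_pred) if t == c or p == c)
        if union > 0:
            ious.append(inter / union)
    return sum(ious) / len(ious) if ious else 0.0


# Hypothetical toy labels: a prediction shifted by one position,
# loosely mimicking the effect of a misaligned road line.
truth = [0, 0, 1, 1, 2, 2]
aligned = [0, 0, 1, 1, 2, 2]
shifted = [0, 1, 1, 2, 2, 2]
print(macro_iou(truth, aligned, 3), macro_iou(truth, shifted, 3))
```

Because every class contributes equally regardless of how many samples it has, errors on rare classes move a macro-averaged score as much as errors on common ones.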

An Analysis of International Use of Robots for COVID-19

Feb 04, 2021

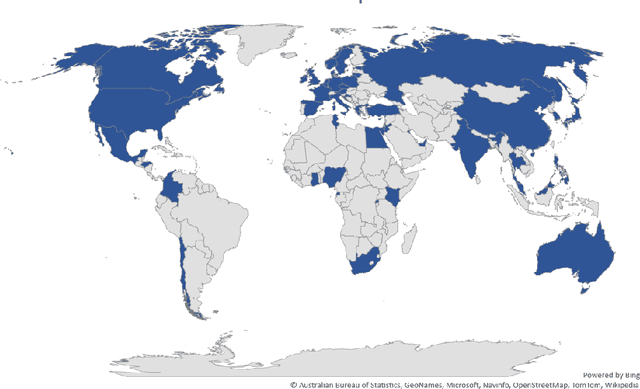

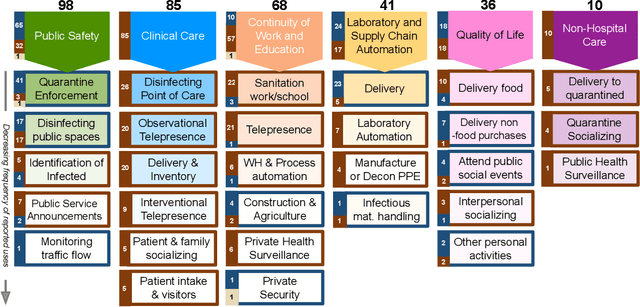

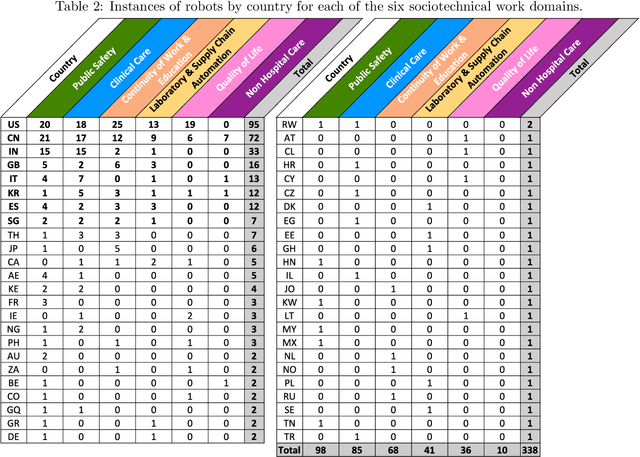

Abstract:This article analyses data collected on 338 instances of robots used explicitly in response to COVID-19 from 24 Jan, 2020, to 23 Jan, 2021, in 48 countries. The analysis was guided by four overarching questions: 1) What were robots used for in the COVID-19 response? 2) When were they used? 3) How did different countries innovate? and 4) Did having a national policy on robotics influence a country's innovation and insertion of robotics for COVID-19? The findings indicate that robots were used for six different sociotechnical work domains and 29 discrete use cases. When robots were used varied greatly by country, although many countries did report an increase at the beginning of their first surge. To understand the findings of how innovation occurred, the data was examined through the lens of the technology's maturity according to NASA's Technical Readiness Assessment metrics. Through this lens, findings note that existing robots were used for more than 78% of the instances; slightly modified robots made up 10%; and truly novel robots or novel use cases constituted 12% of the instances. The findings clearly indicate that countries with a national robotics initiative were more likely to use robotics more often and for broader purposes. Finally, the dataset and analysis produce a broad set of implications that warrant further study and investigation. The results from this analysis are expected to be of value to the robotics and robotics policy community in preparing robots for rapid insertion into future disasters.

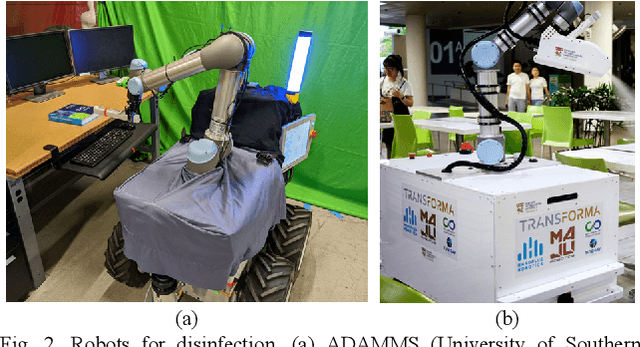

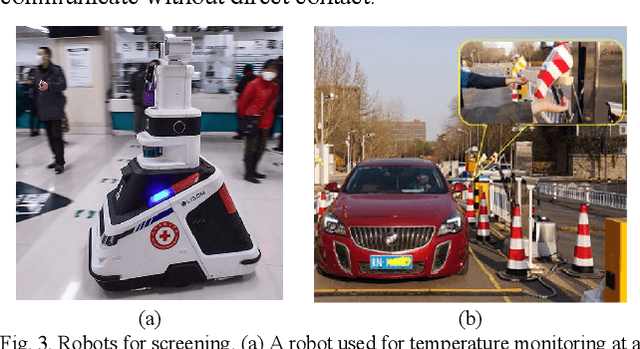

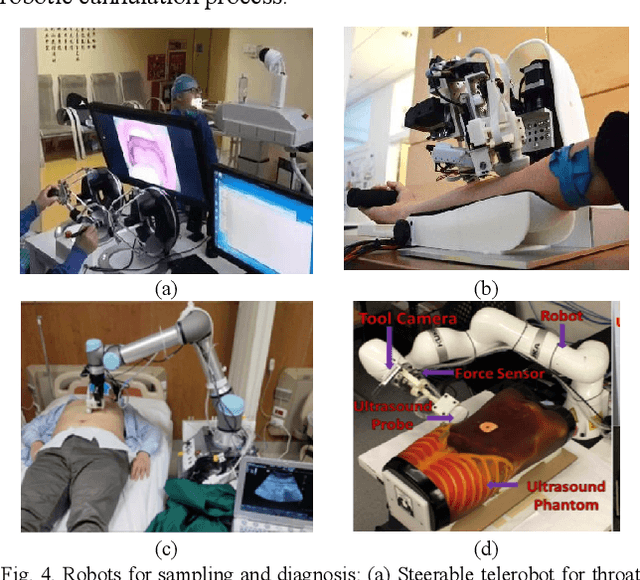

Medical Robots for Infectious Diseases: Lessons and Challenges from the COVID-19 Pandemic

Dec 14, 2020

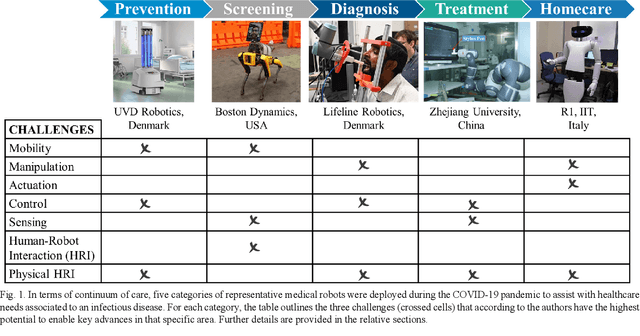

Abstract:Medical robots can play an important role in mitigating the spread of infectious diseases and delivering quality care to patients during the COVID-19 pandemic. Methods and procedures involving medical robots in the continuum of care, ranging from disease prevention, screening, diagnosis, and treatment to homecare, have been extensively deployed and also present incredible opportunities for future development. This paper provides an overview of the current state-of-the-art, highlighting the enabling technologies and unmet needs for prospective technological advances within the next 5-10 years. We also identify key research and knowledge barriers that need to be addressed in developing effective and flexible solutions to ensure preparedness for rapid and scalable deployment to combat infectious diseases.

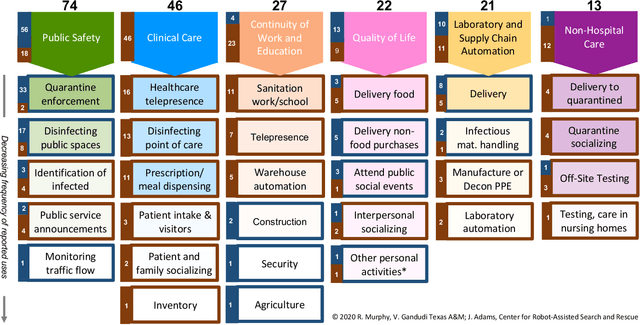

Applications of Robots for COVID-19 Response

Aug 16, 2020

Abstract:This paper reviews 262 reports appearing between March 27 and July 4, 2020, in the press, social media, and scientific literature describing 203 instances of actual use of 104 different models of ground and aerial robots for the COVID-19 response. The reports are organized by stakeholders and work domain into a novel taxonomy of six application categories, reflecting major differences in work envelope, adoption strategy, and human-robot interaction constraints. Each application category is further divided into a total of 30 subcategories based on differences in mission. The largest number of reported instances were for public safety (74 out of 203) and clinical care (46), though robots for quality of life (27), continuity of work and education (22), laboratory and supply chain automation (21), and non-clinical care (13) were notable. Ground robots were used more frequently (119) than aerial systems (84), but unlike ground robots, aerial applications appeared to take advantage of existing general purpose platforms that were used for multiple applications and missions. Of the 104 models of robots, 82 were determined to be commercially available or already existed as a prototype, 11 were modifications to existing robots, and 11 were built from scratch. Teleoperation dominated the control style (105 instances), with the majority of those applications intentionally providing remote presence and thus not amenable to full autonomy in the future. Automation accounted for 74 instances and taskable agency forms of autonomy, 24. The data suggests areas for further research in autonomy, human-robot interaction, and adaptability.

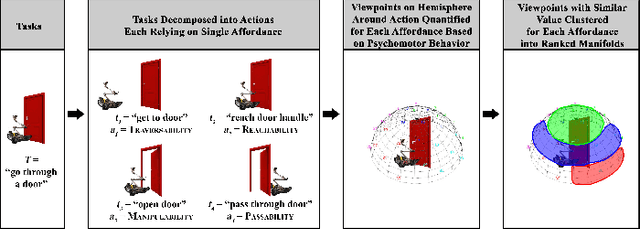

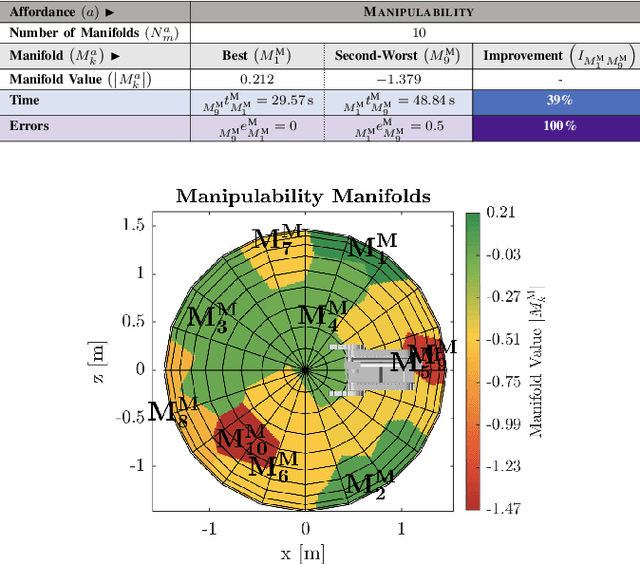

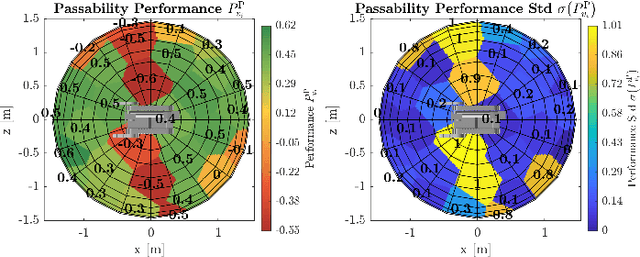

Best Viewpoints for External Robots or Sensors Assisting Other Robots

Jul 20, 2020

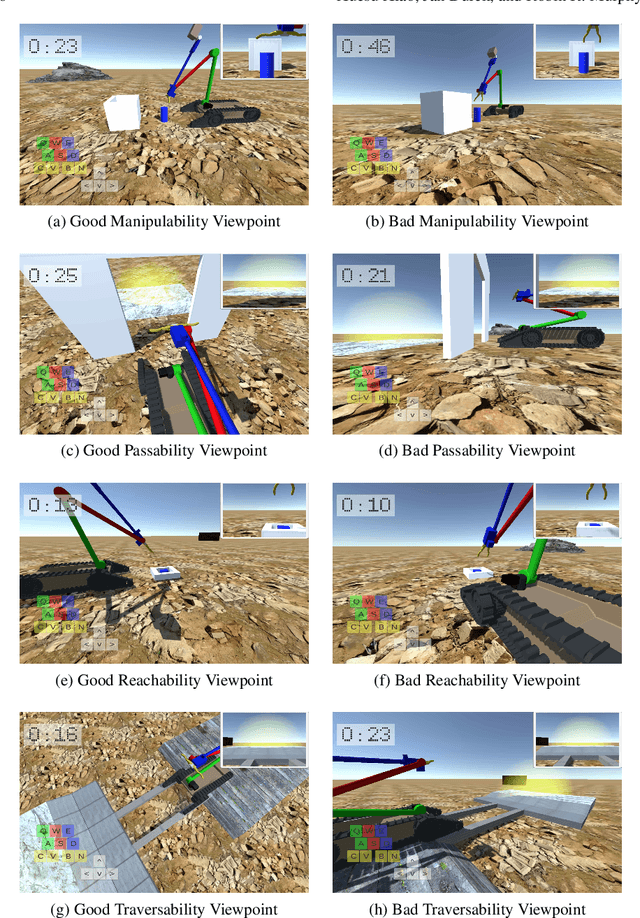

Abstract:This work creates a model of the value of different external viewpoints of a robot performing tasks. The current state of the practice is to use a teleoperated assistant robot to provide a view of a task being performed by a primary robot; however, the choice of viewpoints is ad hoc and does not always lead to improved performance. This research applies a psychomotor approach to develop a model of the relative quality of external viewpoints using Gibsonian affordances. In this approach, viewpoints for the affordances are rated based on the psychomotor behavior of human operators and clustered into manifolds of viewpoints with the equivalent value. The value of 30 viewpoints is quantified in a study with 31 expert robot operators for 4 affordances (Reachability, Passability, Manipulability, and Traversability) using a computer-based simulator of two robots. The adjacent viewpoints with similar values are clustered into ranked manifolds using agglomerative hierarchical clustering. The results show the validity of the affordance-based approach by confirming that there are manifolds of statistically significantly different viewpoint values, viewpoint values are statistically significantly dependent on the affordances, and viewpoint values are independent of a robot. Furthermore, the best manifold for each affordance provides a statistically significant improvement with a large Cohen's d effect size (1.1-2.3) in performance (improving time by 14%-59% and reducing errors by 87%-100%) and improvement in performance variation over the worst manifold. This model will enable autonomous selection of the best possible viewpoint and path planning for the assistant robot.

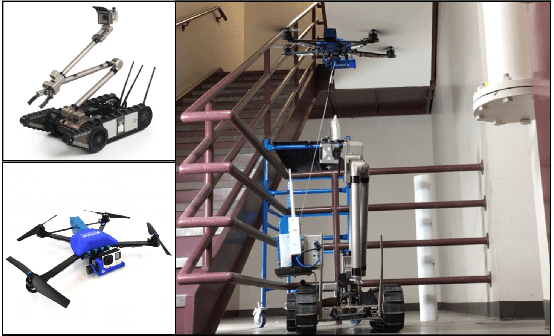

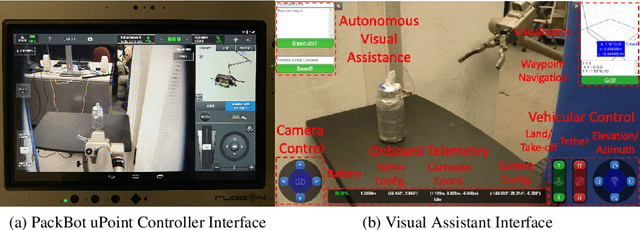

Tethered Aerial Visual Assistance

Jan 15, 2020

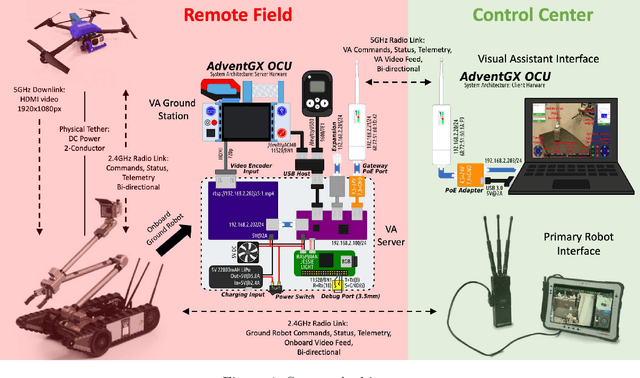

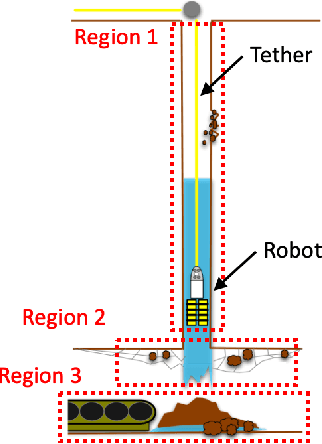

Abstract:In this paper, an autonomous tethered Unmanned Aerial Vehicle (UAV) is developed into a visual assistant in a marsupial co-robots team, collaborating with a tele-operated Unmanned Ground Vehicle (UGV) for robot operations in unstructured or confined environments. These environments pose extreme challenges to the remote tele-operator due to the lack of sufficient situational awareness, mostly caused by the unstructuredness and confinement, stationary and limited field-of-view and lack of depth perception from the robot's onboard cameras. To overcome these problems, a secondary tele-operated robot is used in current practice, which acts as a visual assistant and provides external viewpoints to overcome the perceptual limitations of the primary robot's onboard sensors. However, a second tele-operated robot requires extra manpower and teamwork demand between primary and secondary operators. The manually chosen viewpoints tend to be subjective and sub-optimal. Considering these intricacies, we develop an autonomous tethered aerial visual assistant in place of the secondary tele-operated robot and operator, to reduce the human-robot ratio from 2:2 to 1:2. Using a fundamental viewpoint quality theory, a formal risk reasoning framework, and a newly developed tethered motion suite, our visual assistant is able to autonomously navigate to good-quality viewpoints in a risk-aware manner through unstructured or confined spaces with a tether. The developed marsupial co-robots team could improve tele-operation efficiency in nuclear operations, bomb squad, disaster robots, and other domains with novel tasks or highly occluded environments, by reducing manpower and teamwork demand, and achieving better visual assistance quality with trustworthy risk-aware motion.

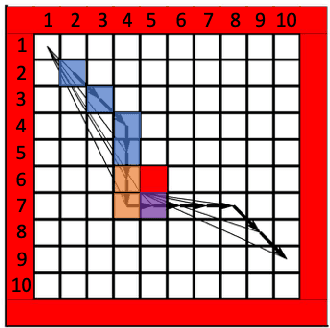

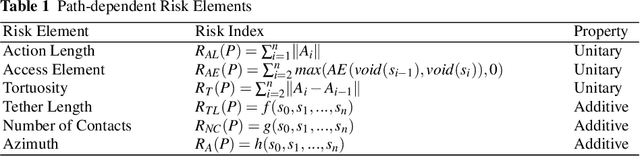

Robot Motion Risk Reasoning Framework

Sep 05, 2019

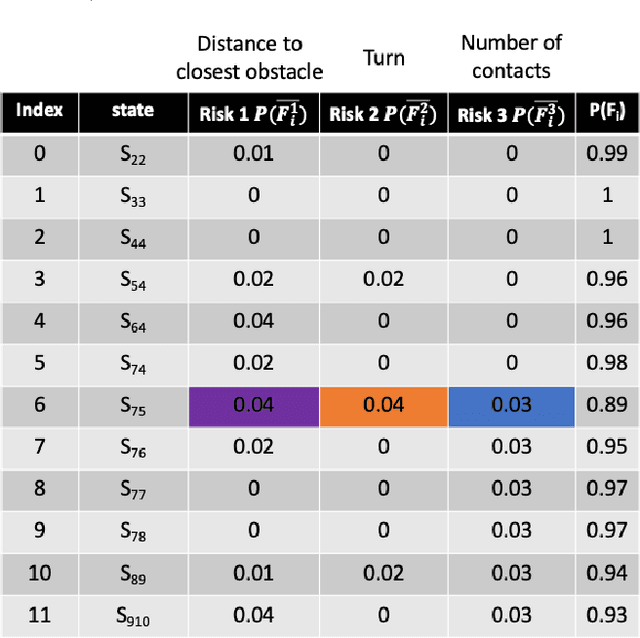

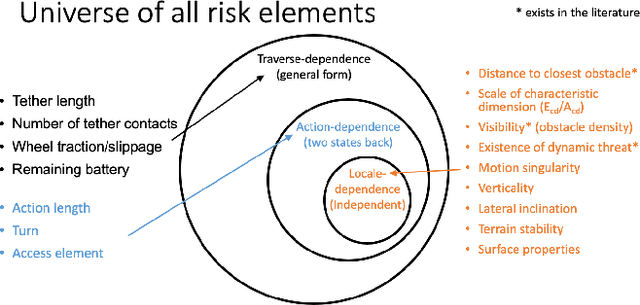

Abstract:This paper presents a formal and comprehensive reasoning framework for robot motion risk, with a focus on locomotion in challenging unstructured or confined environments. The risk that locomoting robots face in physical spaces was not formally defined in the robotics literature. Safety or risk concerns were addressed in an ad hoc fashion, depending only on the specific application of interest. Without a formal definition, certain properties of risk were simply assumed but ill-supported, such as additivity or being Markovian. The only contributing adverse effect being considered is related to obstacles. This work proposes a formal definition of robot motion risk using propositional logic and probability theory. It presents a universe of risk elements within three major risk categories and unifies them into one single metric. True properties of risk are revealed with formal reasoning, such as non-additivity or history-dependency. A risk representation that encompasses risk effects from both the temporal and spatial domains is presented. The resulting risk framework provides a formal approach to reason about robot motion risk. Safety of robot locomotion could be explicitly reasoned about, quantified, and compared. It could be used for risk-aware planning and reasoning by both human and robotic agents.

Autonomous Visual Assistance for Robot Operations Using a Tethered UAV

Mar 29, 2019

Abstract:This paper develops an autonomous tethered aerial visual assistant for robot operations in unstructured or confined environments. Robotic tele-operation in remote environments is difficult due to lack of sufficient situational awareness, mostly caused by the stationary and limited field-of-view and lack of depth perception from the robot's onboard camera. The emerging state of the practice is to use two robots, a primary and a secondary that acts as a visual assistant to overcome the perceptual limitations of the onboard sensors by providing an external viewpoint. However, problems exist when using a tele-operated visual assistant: extra manpower, manually chosen suboptimal viewpoints, and extra teamwork demand between primary and secondary operators. In this work, we use an autonomous tethered aerial visual assistant to replace the secondary robot and operator, reducing the human-robot ratio from 2:2 to 1:2. This visual assistant is able to autonomously navigate through unstructured or confined spaces in a risk-aware manner, while continuously maintaining good viewpoint quality to increase the primary operator's situational awareness. With the proposed co-robots team, tele-operation missions in nuclear operations, bomb squad, disaster robots, and other domains with novel tasks or highly occluded environments could benefit from reduced manpower and teamwork demand, along with improved visual assistance quality based on trustworthy risk-aware motion in cluttered environments.

Use of Dempster-Shafer Conflict Metric to Detect Interpretation Inconsistency

Jul 04, 2012

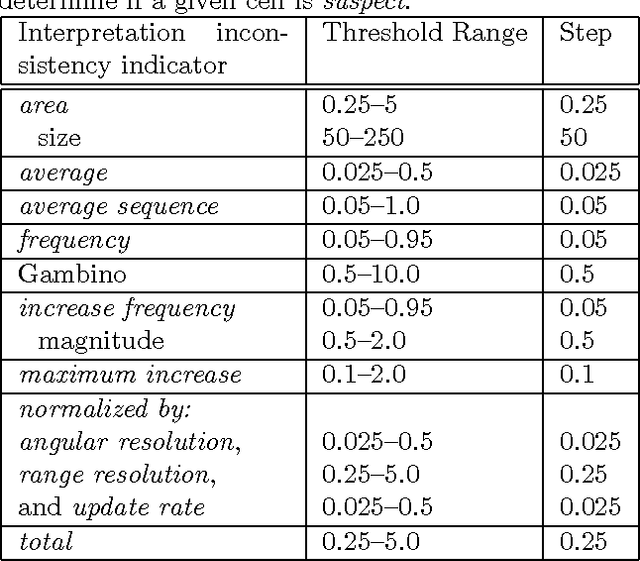

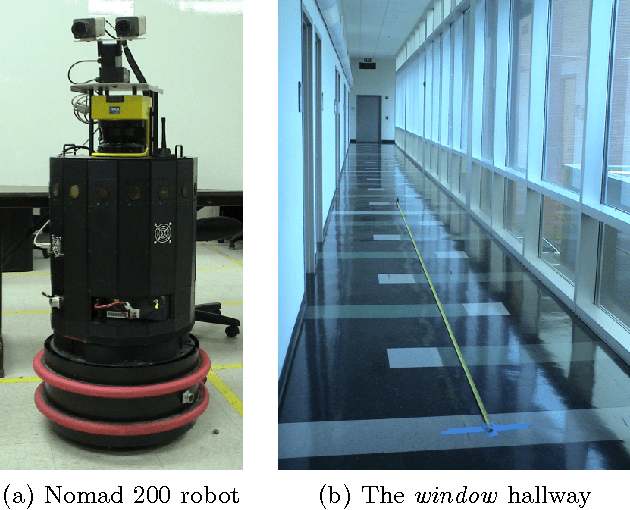

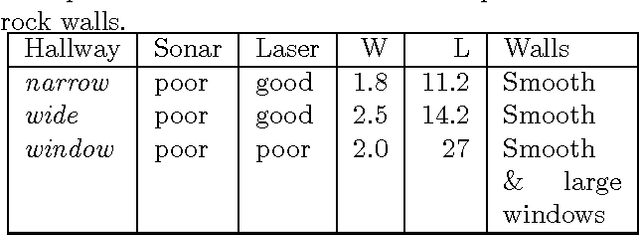

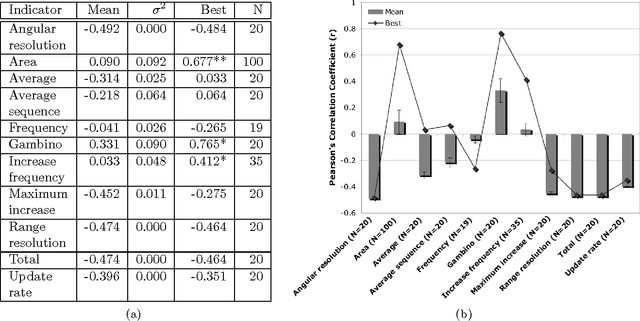

Abstract:A model of the world built from sensor data may be incorrect even if the sensors are functioning correctly. Possible causes include the use of inappropriate sensors (e.g. a laser looking through glass walls), accumulated sensor inaccuracies (e.g. localization errors), incorrect a priori models, or an internal representation that does not match the world (e.g. a static occupancy grid used with dynamically moving objects). We are interested in the case where the constructed model of the world is flawed, but there is no access to the ground truth that would allow the system to see the discrepancy, such as a robot entering an unknown environment. This paper considers the problem of determining when something is wrong using only the sensor data used to construct the world model. It proposes 11 interpretation inconsistency indicators based on the Dempster-Shafer conflict metric, Con, and evaluates these indicators according to three criteria: ability to distinguish true inconsistency from sensor noise (classification), estimate the magnitude of discrepancies (estimation), and determine the source(s) (if any) of sensing problems in the environment (isolation). The evaluation is conducted using data from a mobile robot with sonar and laser range sensors navigating indoor environments under controlled conditions. The evaluation shows that the Gambino indicator performed best in terms of estimation (at best 0.77 correlation), isolation, and classification of the sensing situation as degraded (7% false negative rate) or normal (0% false positive rate).
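
For readers unfamiliar with the conflict metric named in this abstract, the sketch below computes Shafer's weight of conflict, Con = log(1 / (1 - k)), for two belief mass assignments, where k is the total mass that Dempster's rule would assign to contradictory pairs. The two-hypothesis frame (`occupied`, `empty`, plus `unknown` for the whole frame), the dictionary layout, and the natural logarithm are assumptions chosen for illustration. The paper's 11 indicators, including the Gambino indicator, are not reproduced here.

```python
import math
from typing import Dict


def weight_of_conflict(m1: Dict[str, float], m2: Dict[str, float]) -> float:
    """Shafer's weight of conflict between two mass assignments.

    Assumption: each assignment has keys 'occupied', 'empty', and
    'unknown' (mass on the whole frame), with values summing to 1.
    """
    # Mass falling on the empty set: one source says 'occupied'
    # while the other says 'empty'. 'unknown' never contradicts.
    k = m1["occupied"] * m2["empty"] + m1["empty"] * m2["occupied"]
    if k >= 1.0:
        return math.inf  # total contradiction
    return math.log(1.0 / (1.0 - k))


# Hypothetical readings for one grid cell from two sensors.
sonar = {"occupied": 0.8, "empty": 0.0, "unknown": 0.2}
laser = {"occupied": 0.0, "empty": 0.5, "unknown": 0.5}
print(weight_of_conflict(sonar, laser))
```

Con is 0 when the two sources never contradict each other and grows without bound as k approaches 1, so a large value signals disagreement between sources without requiring ground truth.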
