Jennifer Carlson

Use of Dempster-Shafer Conflict Metric to Detect Interpretation Inconsistency

Jul 04, 2012

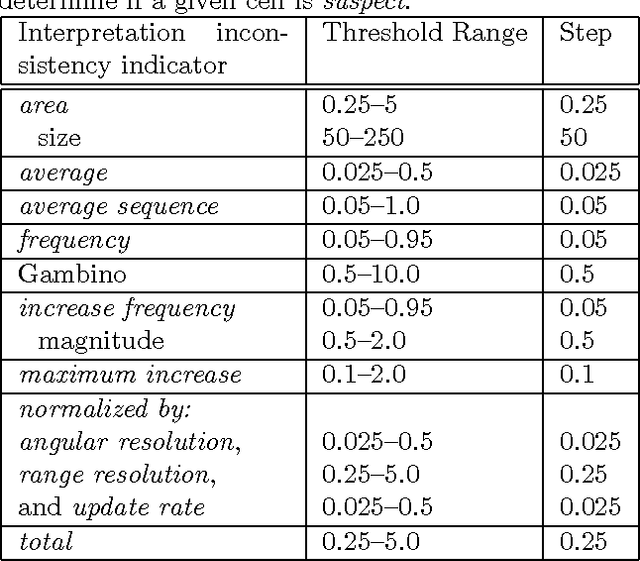

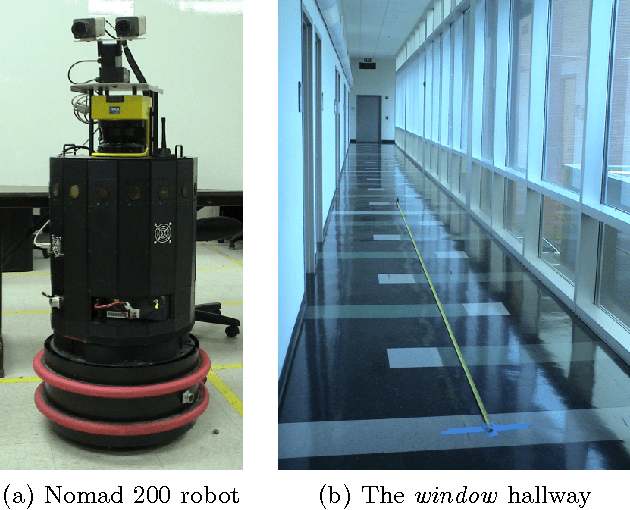

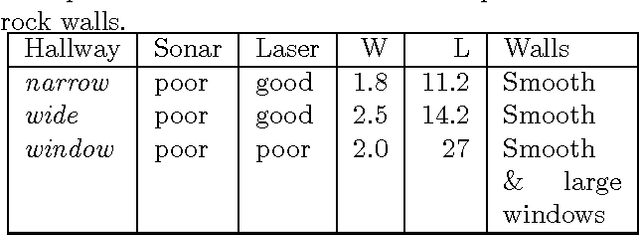

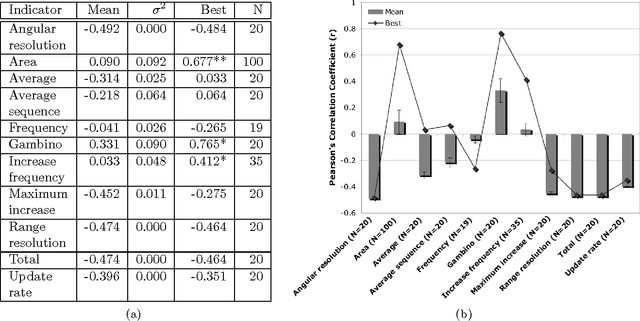

Abstract: A model of the world built from sensor data may be incorrect even if the sensors are functioning correctly. Possible causes include the use of inappropriate sensors (e.g. a laser looking through glass walls), accumulated sensor inaccuracies (e.g. localization errors), incorrect a priori models, or an internal representation that does not match the world (e.g. a static occupancy grid used with dynamically moving objects). We are interested in the case where the constructed model of the world is flawed but the system has no access to the ground truth that would reveal the discrepancy, as when a robot enters an unknown environment. This paper considers the problem of determining when something is wrong using only the sensor data used to construct the world model. It proposes 11 interpretation inconsistency indicators based on the Dempster-Shafer conflict metric, Con, and evaluates these indicators according to three criteria: the ability to distinguish true inconsistency from sensor noise (classification), to estimate the magnitude of discrepancies (estimation), and to determine the source(s), if any, of sensing problems in the environment (isolation). The evaluation uses data from a mobile robot with sonar and laser range sensors navigating indoor environments under controlled conditions. The Gambino indicator performed best in terms of estimation (a best-case correlation of 0.77), isolation, and classification of the sensing situation as degraded (7% false negative rate) or normal (0% false positive rate).
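The abstract does not state the formula for Con, so the following is only a minimal sketch assuming Shafer's standard weight of conflict, Con = log(1 / (1 - K)), where K is the total mass that Dempster's rule would assign to pairs of disjoint focal elements. The two-element occupancy frame ("occupied", "empty"), the example mass values, and the use of the natural logarithm are all illustrative assumptions; the paper's 11 indicators (including the Gambino indicator) are not reproduced here.

```python
import math
from itertools import product

# Assumed frame of discernment for a single occupancy-grid cell (illustrative only).
OCC = frozenset({"occupied"})
EMP = frozenset({"empty"})
UNK = OCC | EMP  # mass on the whole frame represents ignorance


def conflict_mass(m1, m2):
    """Return K: the summed product mass over pairs of disjoint focal elements."""
    return sum(
        w1 * w2
        for (a, w1), (b, w2) in product(m1.items(), m2.items())
        if not (a & b)  # empty intersection: the two bodies of evidence disagree
    )


def con(m1, m2):
    """Shafer's weight of conflict, Con = log(1 / (1 - K)), using the natural log."""
    k = conflict_mass(m1, m2)
    if k >= 1.0:
        return math.inf  # totally conflicting evidence cannot be combined
    return -math.log(1.0 - k)


if __name__ == "__main__":
    # Hypothetical masses: the belief already stored in a cell vs. a new reading.
    cell = {OCC: 0.6, EMP: 0.1, UNK: 0.3}
    reading = {OCC: 0.2, EMP: 0.7, UNK: 0.1}
    print(round(conflict_mass(cell, reading), 4))  # 0.44
    print(round(con(cell, reading), 4))            # 0.5798
```

Con is 0 when the two bodies of evidence never disagree and grows without bound as K approaches 1, which is why a rising Con can serve as a raw signal of interpretation inconsistency before any indicator is built on top of it.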