Roberto Verdone

Multi-Agent Reinforcement Learning for Power Control in Wireless Networks via Adaptive Graphs

Nov 27, 2023

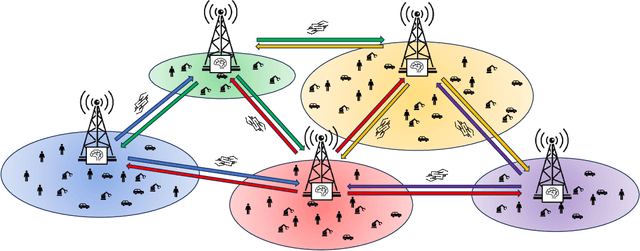

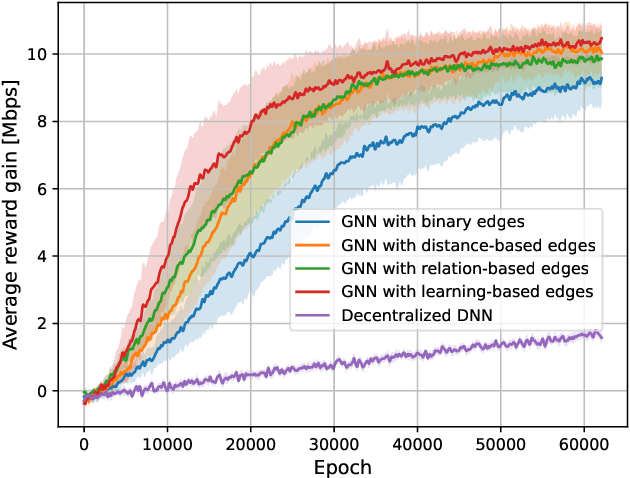

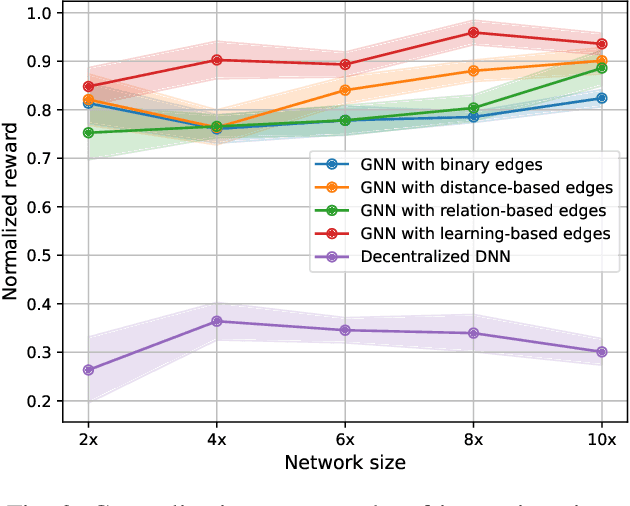

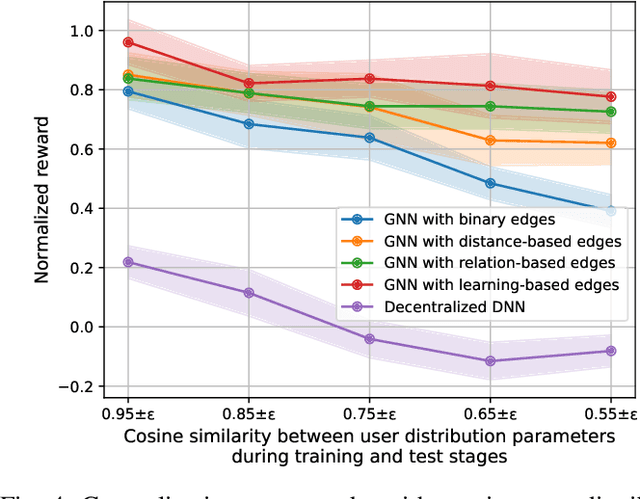

Abstract: The ever-increasing demand for high-quality and heterogeneous wireless communication services has driven extensive research on dynamic optimization strategies in wireless networks. Among several possible approaches, multi-agent deep reinforcement learning (MADRL) has emerged as a promising method to address a wide range of complex optimization problems like power control. However, the seamless application of MADRL to a variety of network optimization problems faces several challenges related to convergence. In this paper, we present the use of graphs as communication-inducing structures among distributed agents as an effective means to mitigate these challenges. Specifically, we harness graph neural networks (GNNs) as neural architectures for policy parameterization to introduce a relational inductive bias in the collective decision-making process. Most importantly, we focus on modeling the dynamic interactions among sets of neighboring agents through the introduction of innovative methods for defining a graph-induced framework for integrated communication and learning. Finally, the superior generalization capabilities of the proposed methodology to larger networks and to networks with different user categories are verified through simulations.

Data-driven Predictive Latency for 5G: A Theoretical and Experimental Analysis Using Network Measurements

Jul 10, 2023

Abstract: The advent of novel 5G services and applications with binding latency requirements and guaranteed Quality of Service (QoS) has hastened the need to incorporate autonomous and proactive decision-making in network management procedures. The objective of our study is to provide a thorough analysis of predictive latency within 5G networks by utilizing real-world network data that is accessible to mobile network operators (MNOs). In particular, (i) we present an analytical formulation of the user-plane latency as a Hypoexponential distribution, which is validated by means of a comparative analysis with empirical measurements, and (ii) we report experimental results on probabilistic regression, anomaly detection, and predictive forecasting, leveraging emerging domains in Machine Learning (ML) such as Bayesian Learning (BL) and Machine Learning on Graphs (GML). We test our predictive framework using data gathered from scenarios of vehicular mobility, dense-urban traffic, and social gathering events. Our results provide valuable insights into the efficacy of predictive algorithms in practical applications.
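The hypoexponential model mentioned in this abstract can be illustrated with a minimal sketch: a hypoexponential random variable is the sum of independent exponential stages with distinct rates. The code below is not the authors' implementation. The stage rates in `STAGE_RATES` and all function names are hypothetical, chosen only to show how the analytical mean compares with a simulated one.

```python
import random

# Hypothetical per-stage rates (in 1/ms); these values are NOT from the paper.
STAGE_RATES = [2.0, 0.5, 1.0]


def sample_hypoexponential(rates, rng):
    """Draw one latency sample as the sum of independent exponential stage delays."""
    return sum(rng.expovariate(rate) for rate in rates)


def hypoexponential_mean(rates):
    """Analytical mean: the sum of the per-stage mean delays 1/rate."""
    return sum(1.0 / rate for rate in rates)


def hypoexponential_variance(rates):
    """Analytical variance: the sum of the per-stage variances 1/rate^2."""
    return sum(1.0 / rate ** 2 for rate in rates)


def empirical_mean(rates, n_samples=100_000, seed=0):
    """Monte Carlo estimate of the mean latency, seeded for reproducibility."""
    rng = random.Random(seed)
    total = sum(sample_hypoexponential(rates, rng) for _ in range(n_samples))
    return total / n_samples


if __name__ == "__main__":
    print("analytical mean:", hypoexponential_mean(STAGE_RATES))
    print("empirical mean: ", empirical_mean(STAGE_RATES))
```

Under these assumed rates, the simulated mean should approach the analytical value as the number of samples grows, which mirrors in miniature the kind of comparison between a formulation and measurements that the abstract describes.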

An End-To-End Analysis of Deep Learning-Based Remaining Useful Life Algorithms for Safety-Critical 5G-Enabled IIoT Networks

Jul 10, 2023

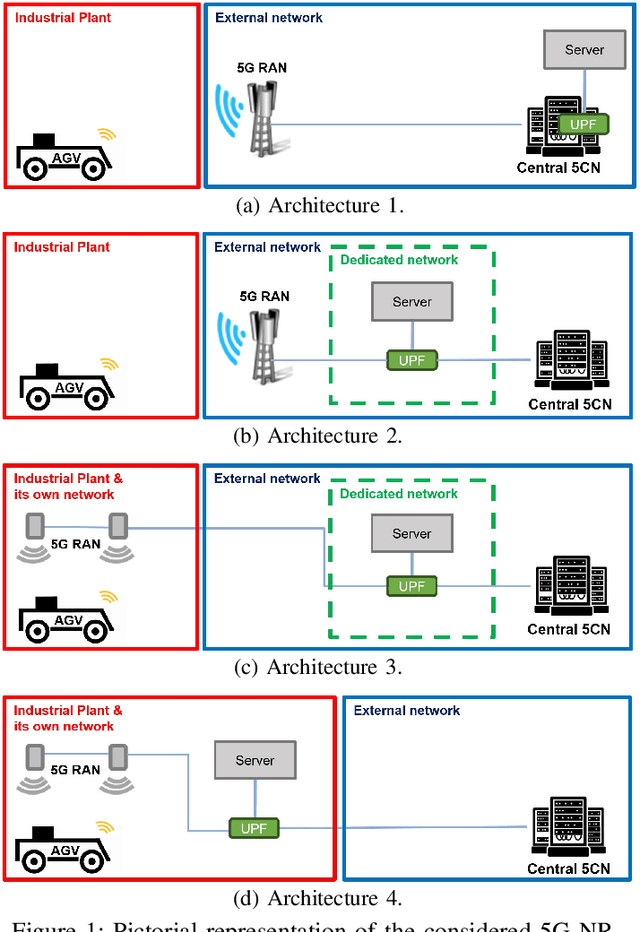

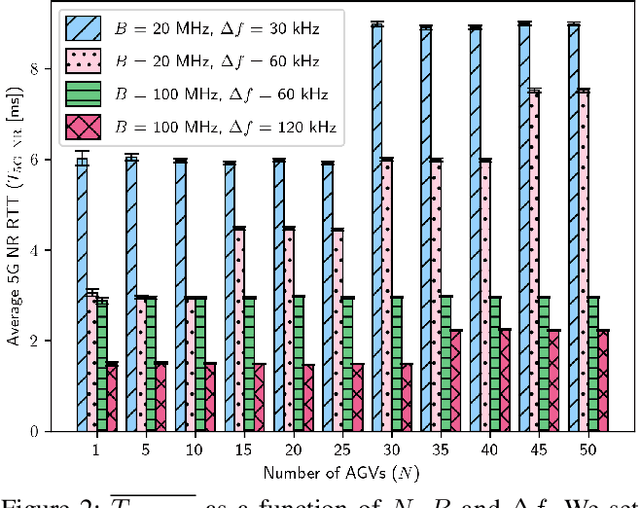

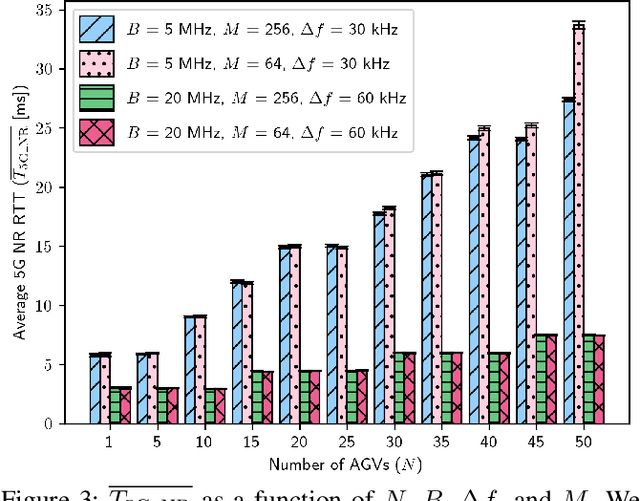

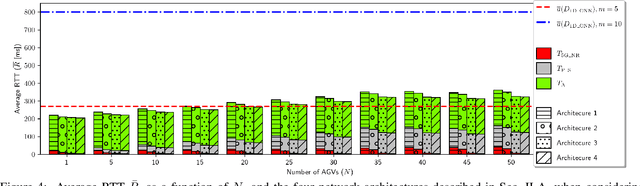

Abstract: Remaining Useful Life (RUL) prediction is a critical task that aims to estimate the amount of time until a system fails. Such a system is formed by three main components: the application, the communication network, and the RUL logic. In this paper, we provide an end-to-end analysis of an entire RUL-based chain. Specifically, we consider a factory floor where Automated Guided Vehicles (AGVs) transport dangerous liquids whose fall may cause injuries to workers. Regarding the communication infrastructure, the AGVs are equipped with 5G User Equipments (UEs) that collect real-time data on their movements and send them to an application server. The RUL logic consists of a Deep Learning (DL)-based pipeline that assesses whether a liquid fall is about to occur by analyzing the collected data and, if necessary, sends commands to the AGVs to avoid the danger. For this scenario, we performed End-to-End 5G NR-compliant network simulations to study the Round-Trip Time (RTT) as a function of the overall system bandwidth, subcarrier spacing, and modulation order. Then, via real-world experiments, we collect data to train, test, and compare 7 DL models and 1 baseline threshold-based algorithm in terms of cost and average advance. Finally, we assess whether the RTT provided by four different 5G NR network architectures is compatible with the average advance provided by the best-performing one-Dimensional Convolutional Neural Network (1D-CNN). Numerical results show under which conditions the DL-based approach for RUL estimation is compatible with the RTT performance provided by different 5G NR network architectures.

Uplink Scheduling in Federated Learning: an Importance-Aware Approach via Graph Representation Learning

Jan 27, 2023

Abstract: Federated Learning (FL) has emerged as a promising framework for distributed training of AI-based services, applications, and network procedures in 6G. One of the major challenges affecting the performance and efficiency of 6G wireless FL systems is the massive scheduling of user devices over resource-constrained channels. In this work, we argue that the uplink scheduling of FL client devices is a problem with a rich relational structure. To address this challenge, we propose a novel, energy-efficient, and importance-aware metric for client scheduling in FL applications by leveraging Unsupervised Graph Representation Learning (UGRL). Our proposed approach introduces a relational inductive bias in the scheduling process and does not require the collection of training feedback information from client devices, unlike state-of-the-art importance-aware mechanisms. We evaluate our proposed solution against baseline scheduling algorithms based on recently proposed metrics in the literature. Results show that, when considering scenarios of nodes exhibiting spatial relations, our approach can achieve an average gain of up to 10% in model accuracy and up to 17 times in energy efficiency compared to state-of-the-art importance-aware policies.

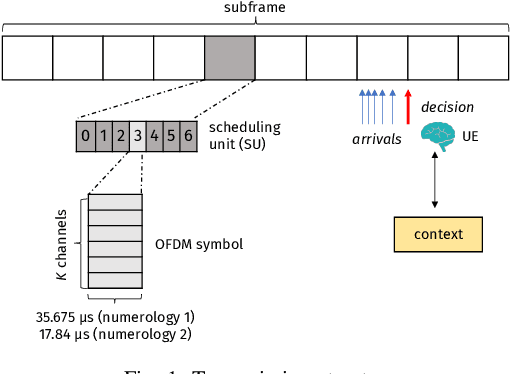

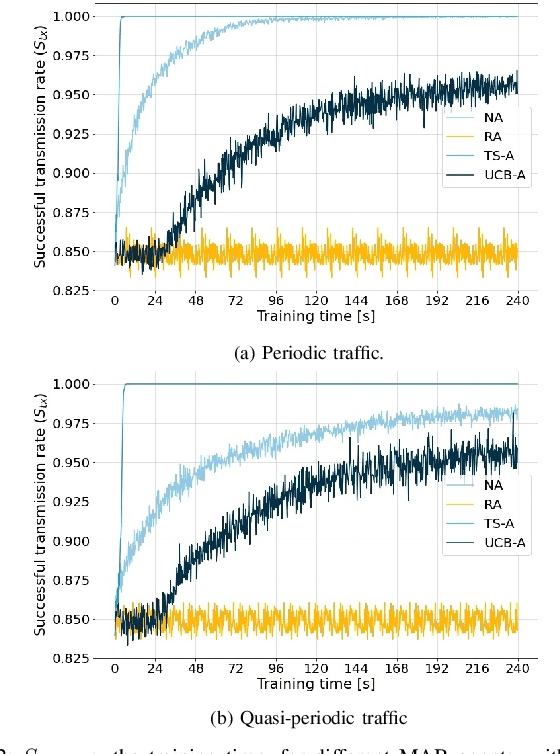

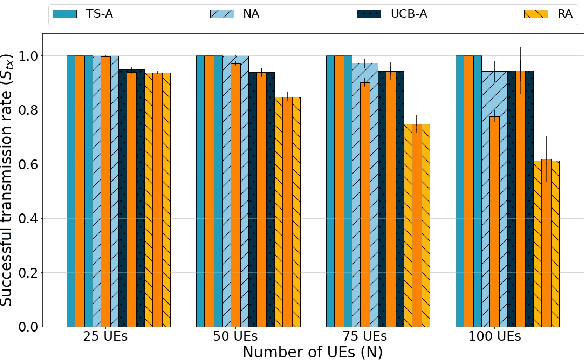

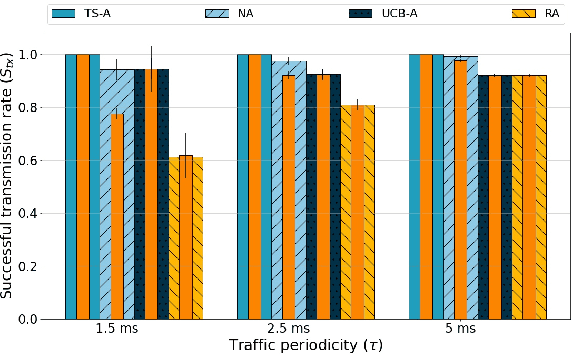

Distributed Resource Allocation for URLLC in IIoT Scenarios: A Multi-Armed Bandit Approach

Nov 22, 2022

Abstract: This paper addresses the problem of enabling inter-machine Ultra-Reliable Low-Latency Communication (URLLC) in future 6G Industrial Internet of Things (IIoT) networks. As far as the Radio Access Network (RAN) is concerned, centralized pre-configured resource allocation requires scheduling grants to be disseminated to the User Equipments (UEs) before uplink transmissions, which is not efficient for URLLC, especially in the case of flexible or unpredictable traffic. To alleviate this burden, we study a distributed, user-centric scheme based on machine learning in which UEs autonomously select their uplink radio resources without waiting for scheduling grants or for connections to be preconfigured. Using simulation, we demonstrate that a Multi-Armed Bandit (MAB) approach represents a desirable solution for allocating resources with URLLC in mind in an IIoT environment, for both periodic and aperiodic traffic, even in highly populated networks with aggressive traffic.
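To make the idea of a UE autonomously selecting its uplink resources more concrete, here is a minimal single-agent sketch. The abstract does not state which bandit algorithm is used, so epsilon-greedy is an assumption made purely for illustration, as are the class name `EpsilonGreedyUE`, the per-resource success probabilities, and the binary reward (1 for a successful transmission, 0 otherwise).

```python
import random


class EpsilonGreedyUE:
    """A UE that learns which uplink resource to use (illustrative, not the paper's scheme)."""

    def __init__(self, n_resources, epsilon=0.1, seed=0):
        self.epsilon = epsilon
        self.rng = random.Random(seed)
        self.counts = [0] * n_resources    # times each resource was selected
        self.values = [0.0] * n_resources  # running mean reward per resource

    def select(self):
        # Explore a random resource with probability epsilon, else exploit the best estimate.
        if self.rng.random() < self.epsilon:
            return self.rng.randrange(len(self.counts))
        return max(range(len(self.values)), key=self.values.__getitem__)

    def update(self, resource, reward):
        # Incremental update of the sample-mean reward for the chosen resource.
        self.counts[resource] += 1
        self.values[resource] += (reward - self.values[resource]) / self.counts[resource]


def run(success_probs, n_slots=5000, seed=0):
    """Simulate one UE; success_probs are hypothetical per-resource success rates."""
    env_rng = random.Random(seed + 1)
    ue = EpsilonGreedyUE(len(success_probs), seed=seed)
    for _ in range(n_slots):
        resource = ue.select()
        reward = 1.0 if env_rng.random() < success_probs[resource] else 0.0
        ue.update(resource, reward)
    return ue


if __name__ == "__main__":
    ue = run([0.2, 0.9, 0.5])
    print("selections per resource:", ue.counts)
    print("estimated success rates:", [round(v, 3) for v in ue.values])
```

In this toy setting the UE needs no scheduling grant: it relies only on its own transmission outcomes. A multi-UE version would replace the fixed success probabilities with collisions among the UEs' simultaneous choices.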
