Multi-Agent Reinforcement Learning for Power Control in Wireless Networks via Adaptive Graphs


Nov 27, 2023

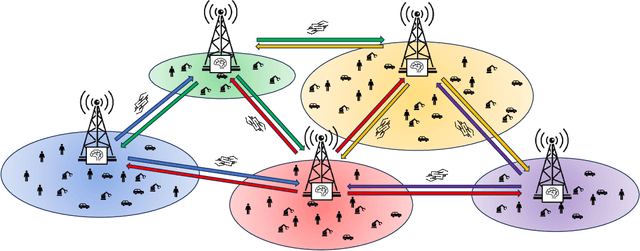

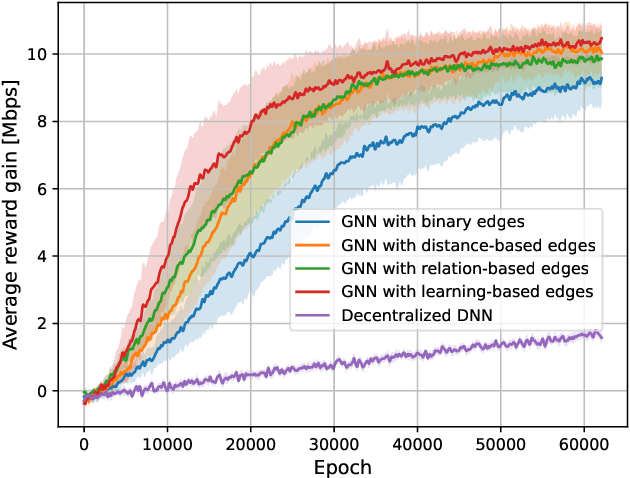

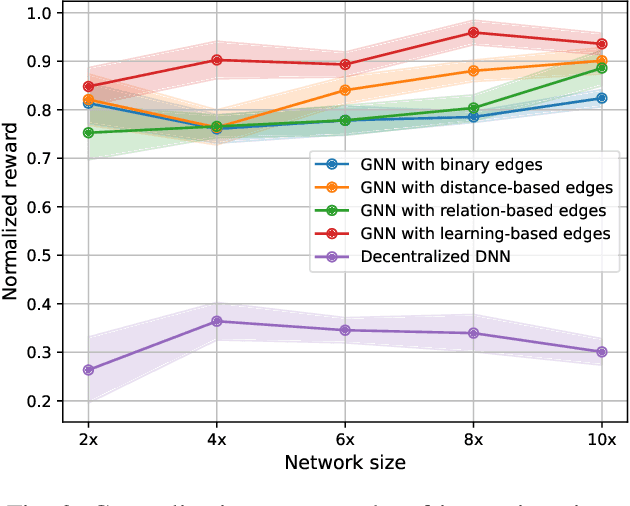

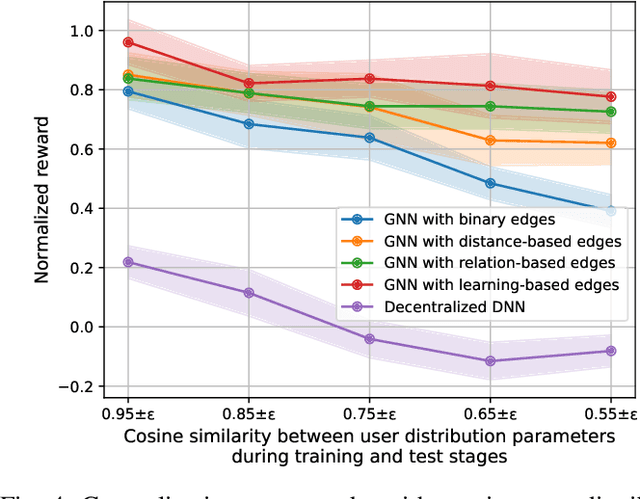

The ever-increasing demand for high-quality and heterogeneous wireless communication services has driven extensive research on dynamic optimization strategies in wireless networks. Among the possible approaches, multi-agent deep reinforcement learning (MADRL) has emerged as a promising method for a wide range of complex optimization problems, such as power control. However, applying MADRL across a variety of network optimization problems faces several challenges related to convergence. In this paper, we present the use of graphs as communication-inducing structures among distributed agents as an effective means to mitigate these challenges. Specifically, we use graph neural networks (GNNs) as neural architectures for policy parameterization, which introduces a relational inductive bias into the collective decision-making process. Most importantly, we focus on modeling the dynamic interactions among sets of neighboring agents by introducing new methods for defining a graph-induced framework for integrated communication and learning. Finally, simulations verify that the proposed methodology generalizes better to larger networks and to networks with different user categories.
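To make the idea of a GNN-parameterized policy concrete, the following is a minimal sketch and not the architecture from the paper. It assumes one round of mean-aggregation message passing, a graph built by thresholding cross-link gains, two hand-picked features per agent, and a sigmoid that maps the result to a transmit power in (0, p_max). The names `build_neighbors`, `gnn_policy`, and `random_network`, and all weight values, are hypothetical. Because the same weights are shared by every agent, the policy can be evaluated on a network of any size.

```python
import math
import random


def build_neighbors(gains, threshold):
    """Adjacency list: j is a neighbor of i if the gain from j to i exceeds threshold.

    gains[i][j] is assumed to be the channel gain from transmitter j to receiver i.
    """
    n = len(gains)
    return [[j for j in range(n) if j != i and gains[i][j] > threshold]
            for i in range(n)]


def gnn_policy(features, neighbors, w_self, w_neigh, bias, p_max=1.0):
    """One message-passing round with weights shared across all agents.

    Each agent combines its own features with the mean of its neighbors'
    features, then squashes the result into a power level in (0, p_max).
    """
    powers = []
    for i, x_i in enumerate(features):
        nbrs = neighbors[i]
        dim = len(x_i)
        if nbrs:
            agg = [sum(features[j][k] for j in nbrs) / len(nbrs) for k in range(dim)]
        else:
            agg = [0.0] * dim  # isolated agent: no incoming messages
        z = bias
        z += sum(w * x for w, x in zip(w_self, x_i))
        z += sum(w * a for w, a in zip(w_neigh, agg))
        powers.append(p_max / (1.0 + math.exp(-z)))
    return powers


def random_network(n, rng):
    """Toy network (assumption): uniform random gains, two features per agent."""
    gains = [[rng.random() for _ in range(n)] for _ in range(n)]
    features = [
        [gains[i][i],  # direct-link gain
         sum(gains[i][j] for j in range(n) if j != i) / max(n - 1, 1)]  # mean cross gain
        for i in range(n)
    ]
    return gains, features


# The same (untrained, illustrative) weights are applied to two network sizes.
rng = random.Random(0)
w_self, w_neigh, bias = [1.5, -0.5], [-1.0, 0.3], 0.1
for n in (4, 10):
    gains, features = random_network(n, rng)
    neighbors = build_neighbors(gains, threshold=0.5)
    powers = gnn_policy(features, neighbors, w_self, w_neigh, bias)
    print(n, [round(p, 3) for p in powers])
```

In this sketch the weights are fixed by hand; in a MADRL setting they would be the trainable policy parameters. Relabeling the agents only reorders the output powers, which is the relational inductive bias that a graph-based parameterization provides.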
