Giampaolo Cuozzo

An End-To-End Analysis of Deep Learning-Based Remaining Useful Life Algorithms for Safety-Critical 5G-Enabled IIoT Networks

Jul 10, 2023

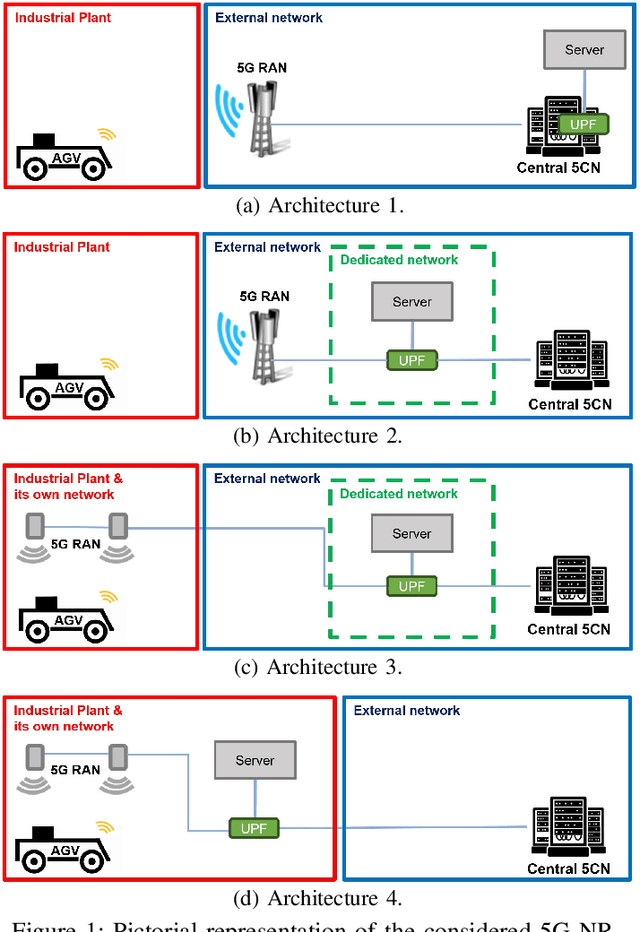

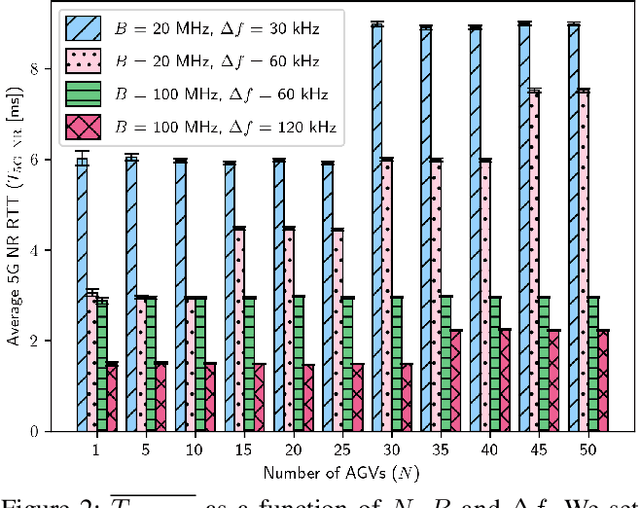

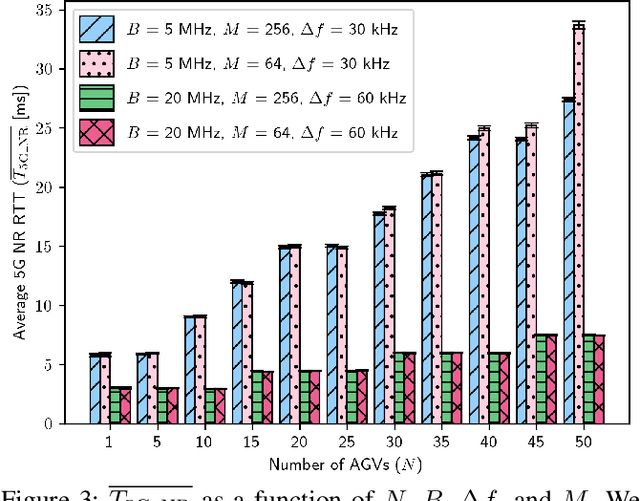

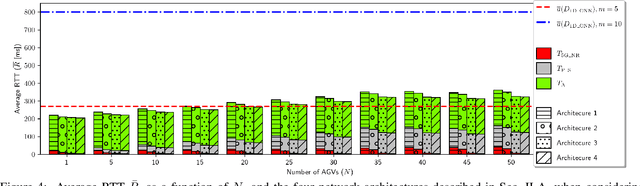

Abstract: Remaining Useful Life (RUL) prediction is a critical task that aims to estimate the amount of time until a system fails, where the system is formed by three main components: the application, the communication network, and the RUL logic. In this paper, we provide an end-to-end analysis of an entire RUL-based chain. Specifically, we consider a factory floor where Automated Guided Vehicles (AGVs) transport dangerous liquids whose fall may cause injuries to workers. Regarding the communication infrastructure, the AGVs are equipped with 5G User Equipments (UEs) that collect real-time data on their movements and send them to an application server. The RUL logic consists of a Deep Learning (DL)-based pipeline that analyzes the collected data to assess whether a liquid fall is about to occur and, if so, sends commands to the AGVs to avoid such a danger. For this scenario, we performed end-to-end 5G NR-compliant network simulations to study the Round-Trip Time (RTT) as a function of the overall system bandwidth, subcarrier spacing, and modulation order. Then, via real-world experiments, we collect data to train, test, and compare 7 DL models and 1 baseline threshold-based algorithm in terms of cost and average advance. Finally, we assess whether the RTT provided by four different 5G NR network architectures is compatible with the average advance provided by the best-performing model, a one-dimensional Convolutional Neural Network (1D-CNN). Numerical results show under which conditions the DL-based approach for RUL estimation is compatible with the RTT performance provided by different 5G NR network architectures.
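The final compatibility assessment described in the abstract can be illustrated with a minimal sketch. It assumes that an architecture is "compatible" when its RTT, plus an optional reaction time on the AGV, is shorter than the average advance of the predictor. The function name, the `reaction_ms` parameter, the architecture labels, and all numeric values are hypothetical and are not taken from the paper.

```python
def is_compatible(avg_advance_ms: float, rtt_ms: float, reaction_ms: float = 0.0) -> bool:
    """Return True if a command can reach the AGV before the predicted event.

    Assumption (not from the paper): the usable time budget is the average
    advance, and it must cover the network RTT plus any AGV reaction time.
    """
    return rtt_ms + reaction_ms < avg_advance_ms


# Hypothetical RTTs for placeholder architectures; purely illustrative numbers.
rtts_ms = {"arch_A": 8.0, "arch_B": 25.0, "arch_C": 60.0}
avg_advance_ms = 40.0  # hypothetical average advance of the predictor

feasible = {name: is_compatible(avg_advance_ms, rtt) for name, rtt in rtts_ms.items()}
print(feasible)
```

In this toy setting only the architectures whose RTT stays below the advance leave time for the avoidance command to take effect.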

Distributed Resource Allocation for URLLC in IIoT Scenarios: A Multi-Armed Bandit Approach

Nov 22, 2022

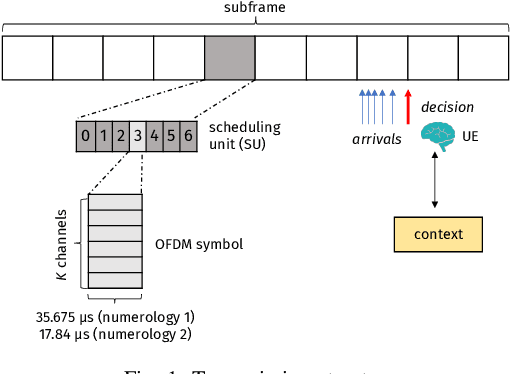

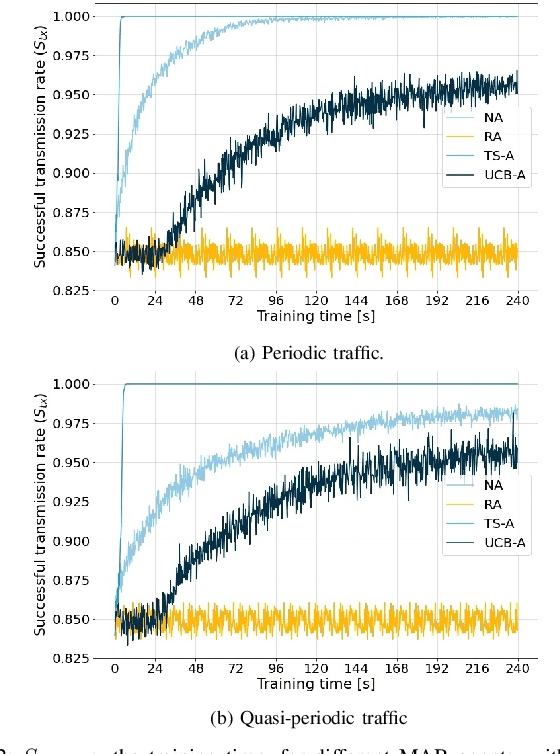

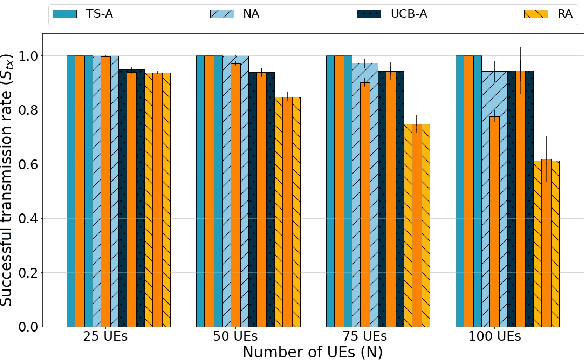

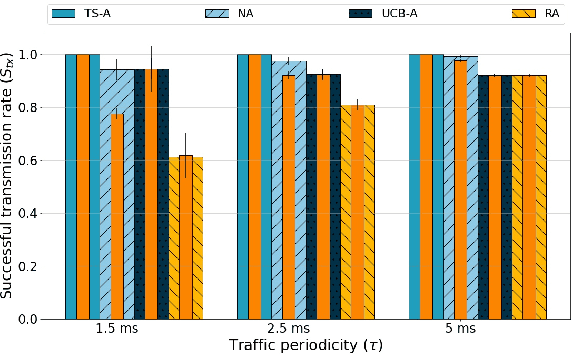

Abstract: This paper addresses the problem of enabling inter-machine Ultra-Reliable Low-Latency Communication (URLLC) in future 6G Industrial Internet of Things (IIoT) networks. As far as the Radio Access Network (RAN) is concerned, centralized pre-configured resource allocation requires scheduling grants to be disseminated to the User Equipments (UEs) before uplink transmissions, which is not efficient for URLLC, especially in case of flexible/unpredictable traffic. To alleviate this burden, we study a distributed, user-centric scheme based on machine learning in which UEs autonomously select their uplink radio resources without the need to wait for scheduling grants or preconfiguration of connections. Using simulation, we demonstrate that a Multi-Armed Bandit (MAB) approach represents a desirable solution to allocate resources with URLLC in mind in an IIoT environment, in case of both periodic and aperiodic traffic, even considering highly populated networks and aggressive traffic.
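To make the idea of grant-free, user-centric resource selection concrete, here is a minimal sketch in which each UE runs an independent epsilon-greedy bandit over a shared pool of uplink resources. The abstract does not say which MAB algorithm the paper uses, so epsilon-greedy is a stand-in, and the collision-based reward (1 if the UE was alone on the resource, 0 otherwise), the class and function names, and all parameter values are assumptions for illustration only.

```python
import random


class EpsilonGreedyUE:
    """One UE that learns which uplink resource to use (hypothetical model)."""

    def __init__(self, n_resources, epsilon=0.1, rng=None):
        self.n = n_resources
        self.epsilon = epsilon
        self.rng = rng or random.Random()
        self.counts = [0] * n_resources    # times each resource was tried
        self.values = [0.0] * n_resources  # running mean reward per resource

    def select(self):
        # Explore with probability epsilon, otherwise exploit the best estimate.
        if self.rng.random() < self.epsilon:
            return self.rng.randrange(self.n)
        best = max(self.values)
        candidates = [i for i, v in enumerate(self.values) if v == best]
        return self.rng.choice(candidates)  # break ties at random

    def update(self, arm, reward):
        # Incremental update of the sample mean for the chosen resource.
        self.counts[arm] += 1
        self.values[arm] += (reward - self.values[arm]) / self.counts[arm]


def simulate(n_ues=4, n_resources=8, n_slots=2000, epsilon=0.1, seed=0):
    """Return the fraction of collision-free transmissions in each slot."""
    master = random.Random(seed)
    ues = [
        EpsilonGreedyUE(n_resources, epsilon, random.Random(master.randrange(2**32)))
        for _ in range(n_ues)
    ]
    success_per_slot = []
    for _ in range(n_slots):
        choices = [ue.select() for ue in ues]
        successes = 0
        for ue, arm in zip(ues, choices):
            # Assumed reward: 1 if no other UE picked the same resource.
            reward = 1.0 if choices.count(arm) == 1 else 0.0
            ue.update(arm, reward)
            successes += int(reward)
        success_per_slot.append(successes / n_ues)
    return success_per_slot


if __name__ == "__main__":
    history = simulate()
    print("mean success, first 100 slots:", sum(history[:100]) / 100)
    print("mean success, last 100 slots: ", sum(history[-100:]) / 100)
```

No scheduling grant is exchanged in this sketch: each UE observes only the outcome of its own transmissions, which is the property the abstract highlights for the distributed scheme.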
