Robert J. Piechocki

FROST: Towards Energy-efficient AI-on-5G Platforms -- A GPU Power Capping Evaluation

Oct 17, 2023

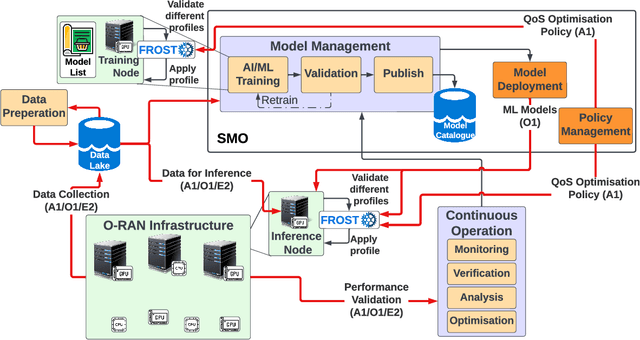

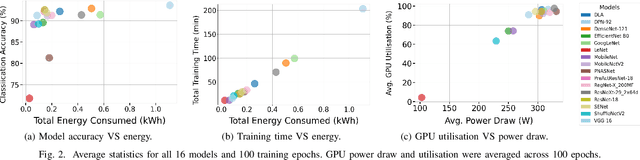

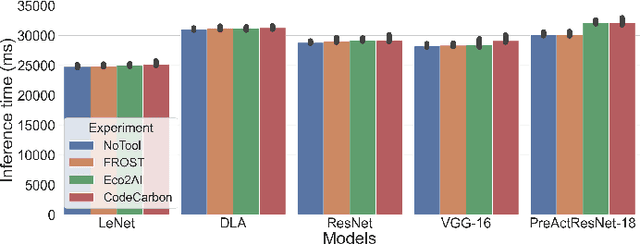

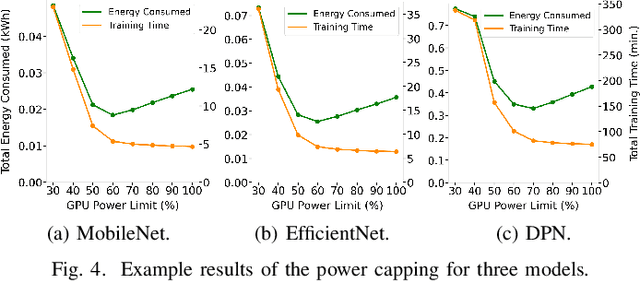

Abstract: The Open Radio Access Network (O-RAN) is a burgeoning market with projected growth in the upcoming years. The RAN has the highest CAPEX impact on the network and, most importantly, consumes 73% of its total energy. That makes it an ideal target for optimisation through the integration of Machine Learning (ML). However, the energy consumption of ML itself is frequently overlooked in such ecosystems. Our work addresses this critical aspect by presenting FROST (Flexible Reconfiguration method with Online System Tuning), a solution for energy-aware ML pipelines that adhere to O-RAN's specifications and principles. FROST is capable of profiling the energy consumption of an ML pipeline and optimising the hardware accordingly, thereby limiting the power draw. Our findings indicate that FROST can achieve energy savings of up to 26.4% without compromising the model's accuracy or introducing significant time delays.

Privacy in Multimodal Federated Human Activity Recognition

May 20, 2023

Abstract: Human Activity Recognition (HAR) training data is often privacy-sensitive or held by non-cooperative entities. Federated Learning (FL) addresses such concerns by training ML models on edge clients. This work studies the impact of privacy in federated HAR at the user, environment, and sensor levels. We show that the performance of FL for HAR depends on the assumed privacy level of the FL system and primarily upon the colocation of data from different sensors. By avoiding data sharing and assuming privacy at the human or environment level, as prior works have done, the accuracy decreases by 5-7%. However, extending this to the modality level and strictly separating sensor data between multiple clients may decrease the accuracy by 19-42%. As this form of privacy is necessary for the ethical utilisation of passive sensing methods in HAR, we implement a system where clients mutually train both a general FL model and a group-level one per modality. Our evaluation shows that this method leads to only a 7-13% decrease in accuracy, making it possible to build HAR systems with diverse hardware.

Multimodal sensor fusion in the latent representation space

Aug 03, 2022

Abstract: A new method for multimodal sensor fusion is introduced. The technique relies on a two-stage process. In the first stage, a multimodal generative model is constructed from unlabelled training data. In the second stage, the generative model serves as a reconstruction prior and the search manifold for the sensor fusion tasks. The method also handles cases where observations are accessed only via subsampling, i.e., compressed sensing. We demonstrate its effectiveness and excellent performance on a range of multimodal fusion experiments such as multisensory classification, denoising, and recovery from subsampled observations.

Self-Supervised WiFi-Based Activity Recognition

Apr 19, 2021

Abstract: Traditional approaches to activity recognition rely on wearable sensors or cameras to recognise human activities. In this work, we extract fine-grained physical layer information from WiFi devices for the purpose of passive activity recognition in indoor environments. While such data is ubiquitous, few approaches are designed to utilise large amounts of unlabelled WiFi data. We propose the use of self-supervised contrastive learning to improve activity recognition performance when using multiple views of the transmitted WiFi signal captured by different synchronised receivers. We conduct experiments where the transmitters and receivers are arranged in different physical layouts so as to cover both Line-of-Sight (LoS) and non-LoS (NLoS) conditions. We compare the proposed contrastive learning system with non-contrastive systems and observe a 17.7% increase in macro-averaged F1 score on the task of WiFi-based activity recognition, as well as significant improvements in one- and few-shot learning scenarios.

Location Anomalies Detection for Connected and Autonomous Vehicles

Jul 01, 2019

Abstract: Future Connected and Automated Vehicles (CAVs), and more generally ITS, will form a highly interconnected system. Such a paradigm is referred to as the Internet of Vehicles (herein Internet of CAVs) and is a prerequisite to orchestrate traffic flows in cities. For optimal decision making and supervision, traffic centres will have access to suitably anonymized CAV mobility information. Safe and secure operations will then be contingent on early detection of anomalies. In this paper, a novel unsupervised learning model based on a deep autoencoder is proposed to detect self-reported location anomalies in CAVs, using vehicle locations and the Received Signal Strength Indicator (RSSI) as features. Quantitative experiments on simulation datasets show that the proposed approach is effective and robust in detecting self-reported location anomalies.
