Reza Samavi

Cascading Robustness Verification: Toward Efficient Model-Agnostic Certification

Feb 04, 2026

Abstract: Certifying neural network robustness against adversarial examples is challenging, as formal guarantees often require solving non-convex problems. Hence, incomplete verifiers are widely used because they scale efficiently and substantially reduce the cost of robustness verification compared to complete methods. However, relying on a single verifier can underestimate robustness because of loose approximations or misalignment with training methods. In this work, we propose Cascading Robustness Verification (CRV), which goes beyond an engineering improvement by exposing fundamental limitations of existing robustness metrics and introducing a framework that enhances both reliability and efficiency. CRV is a model-agnostic verifier, meaning that its robustness guarantees are independent of the model's training process. The key insight behind the CRV framework is that, when using multiple verification methods, an input is certifiably robust if at least one method certifies it as robust. Rather than relying solely on a single verifier with a fixed constraint set, CRV progressively applies multiple verifiers to balance the tightness of the bound and computational cost. Starting with the least expensive method, CRV halts as soon as an input is certified as robust; otherwise, it proceeds to more expensive methods. For computationally expensive methods, we introduce a Stepwise Relaxation Algorithm (SR) that incrementally adds constraints and checks for certification at each step, thereby avoiding unnecessary computation. Our theoretical analysis demonstrates that CRV achieves equal or higher verified accuracy compared to powerful but computationally expensive incomplete verifiers in the cascade, while significantly reducing verification overhead. Empirical results confirm that CRV certifies at least as many inputs as benchmark approaches, while improving runtime efficiency by up to ~90%.
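The cascade described in this abstract (try the cheapest verifier first, stop at the first certification) can be illustrated with a minimal sketch. Everything here is a hypothetical stand-in: `cascade_verify`, `make_toy_verifier`, and the margin thresholds are assumptions for illustration, not the paper's verifiers or its Stepwise Relaxation Algorithm.

```python
from typing import Callable, Optional, Sequence, Tuple

# A verifier takes an input and returns True only if it certifies robustness.
Verifier = Callable[[float], bool]


def cascade_verify(x: float, verifiers: Sequence[Tuple[str, Verifier]]) -> Optional[str]:
    """Run verifiers in order (cheapest first) and stop at the first success.

    Returns the name of the certifying verifier, or None if none certifies x.
    """
    for name, verify in verifiers:
        if verify(x):
            return name  # certified: skip all more expensive verifiers
    return None


def make_toy_verifier(required_margin: float) -> Verifier:
    """Hypothetical stand-in: a looser (cheaper) bound needs a larger margin."""
    return lambda margin: margin > required_margin


# Ordered from cheapest/loosest to most expensive/tightest (toy thresholds).
verifiers = [
    ("cheap", make_toy_verifier(0.5)),
    ("medium", make_toy_verifier(0.2)),
    ("expensive", make_toy_verifier(0.05)),
]

margins = [0.9, 0.3, 0.1, 0.01]
results = [cascade_verify(m, verifiers) for m in margins]
print(results)
```

In this toy setup an input certified by an early verifier never reaches the later ones, which is where the runtime saving would come from; the set of certified inputs is the union of what the individual verifiers certify.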

Superposition as Lossy Compression: Measure with Sparse Autoencoders and Connect to Adversarial Vulnerability

Dec 15, 2025

Abstract: Neural networks achieve remarkable performance through superposition: encoding multiple features as overlapping directions in activation space rather than dedicating individual neurons to each feature. This challenges interpretability, yet we lack principled methods to measure superposition. We present an information-theoretic framework measuring a neural representation's effective degrees of freedom. We apply Shannon entropy to sparse autoencoder activations to compute the number of effective features as the minimum neurons needed for interference-free encoding. Equivalently, this measures how many "virtual neurons" the network simulates through superposition. When networks encode more effective features than actual neurons, they must accept interference as the price of compression. Our metric strongly correlates with ground truth in toy models, detects minimal superposition in algorithmic tasks, and reveals systematic reduction under dropout. Layer-wise patterns mirror intrinsic dimensionality studies on Pythia-70M. The metric also captures developmental dynamics, detecting sharp feature consolidation during grokking. Surprisingly, adversarial training can increase effective features while improving robustness, contradicting the hypothesis that superposition causes vulnerability. Instead, the effect depends on task complexity and network capacity: simple tasks with ample capacity allow feature expansion (abundance regime), while complex tasks or limited capacity force reduction (scarcity regime). By defining superposition as lossy compression, this work enables principled measurement of how neural networks organize information under computational constraints, connecting superposition to adversarial robustness.

* Accepted to TMLR, view HTML here: https://leonardbereska.github.io/blog/2025/superposition/
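One common way to turn Shannon entropy into a "number of effective features" is to exponentiate the entropy of the normalized activation mass. The sketch below assumes that reading; the abstract does not give the exact normalization, so `effective_features` and its input (a list of non-negative per-feature activation totals) are illustrative assumptions rather than the paper's metric.

```python
import math


def effective_features(activations):
    """Exponentiated Shannon entropy of normalized, non-negative activations.

    Assumption: each entry is the total activation mass of one sparse
    autoencoder feature. Returns a value between 1 and len(activations)
    whenever some mass is present.
    """
    total = sum(activations)
    if total <= 0:
        return 0.0
    probs = [a / total for a in activations if a > 0]
    entropy = -sum(p * math.log(p) for p in probs)
    return math.exp(entropy)


uniform = effective_features([1.0, 1.0, 1.0, 1.0])  # mass spread evenly
peaked = effective_features([5.0, 0.0, 0.0])        # mass on one feature
print(uniform, peaked)
```

Under this assumption, evenly used features count fully, while rarely used features contribute only fractionally to the total.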

Estimating Quality in Therapeutic Conversations: A Multi-Dimensional Natural Language Processing Framework

May 09, 2025

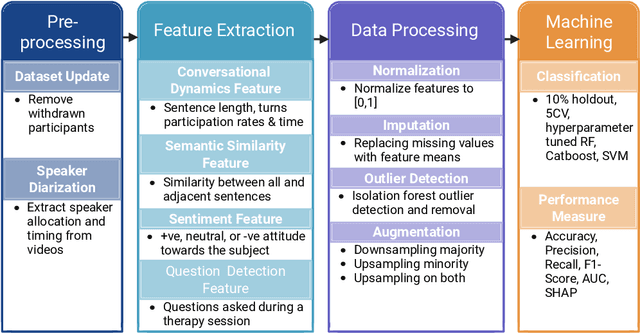

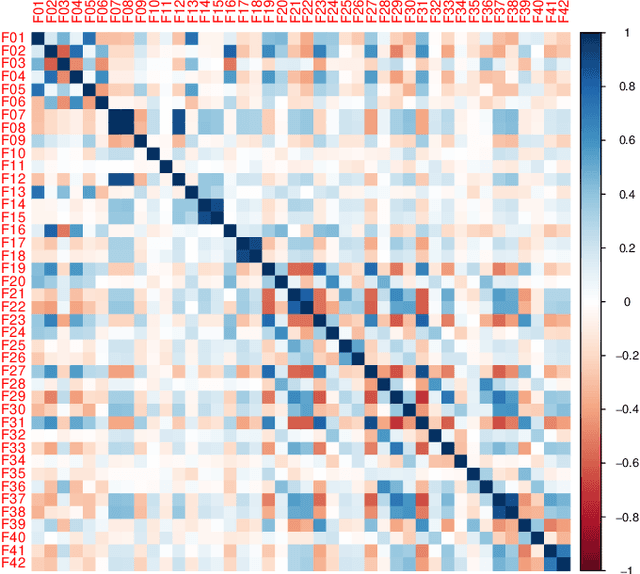

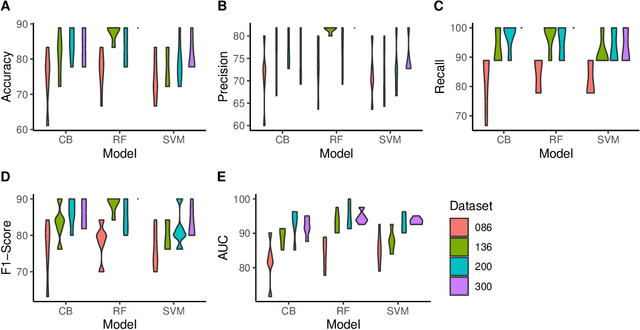

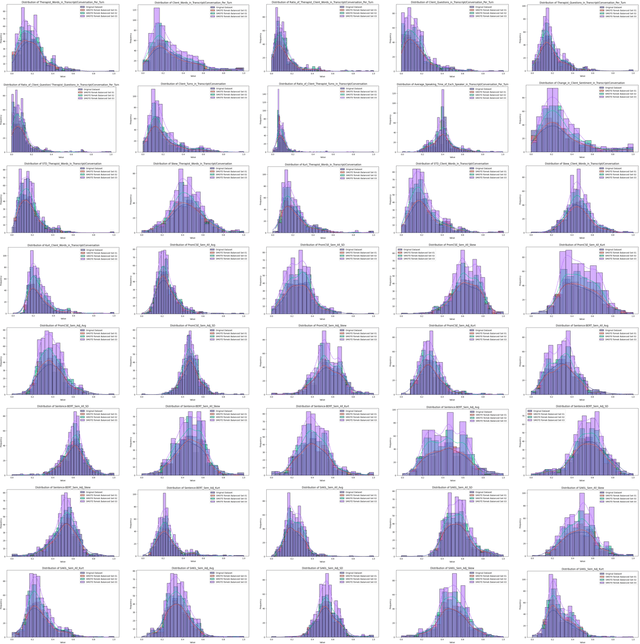

Abstract: Engagement between client and therapist is a critical determinant of therapeutic success. We propose a multi-dimensional natural language processing (NLP) framework that objectively classifies engagement quality in counseling sessions based on textual transcripts. Using 253 motivational interviewing transcripts (150 high-quality, 103 low-quality), we extracted 42 features across four domains: conversational dynamics, semantic similarity as topic alignment, sentiment classification, and question detection. Classifiers, including Random Forest (RF), CatBoost, and Support Vector Machines (SVM), were hyperparameter-tuned and trained using stratified 5-fold cross-validation and evaluated on a holdout test set. On balanced (non-augmented) data, RF achieved the highest classification accuracy (76.7%), and SVM achieved the highest AUC (85.4%). After SMOTE-Tomek augmentation, performance improved significantly: RF achieved up to 88.9% accuracy, 90.0% F1-score, and 94.6% AUC, while SVM reached 81.1% accuracy, 83.1% F1-score, and 93.6% AUC. The augmented data results reflect the potential of the framework in future larger-scale applications. Feature contribution analysis revealed that conversational dynamics and semantic similarity between clients and therapists were among the top contributors, led by words uttered by the client (mean and standard deviation). The framework was robust across the original and augmented datasets and demonstrated consistent improvements in F1 scores and recall. While currently text-based, the framework supports future multimodal extensions (e.g., vocal tone, facial affect) for more holistic assessments. This work introduces a scalable, data-driven method for evaluating the engagement quality of a therapy session, offering clinicians real-time feedback to enhance the quality of both virtual and in-person therapeutic interactions.
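The top-ranked feature named in this abstract, words uttered by the client (mean and standard deviation), could be computed along the following lines. This is a sketch under assumptions: the `(speaker, text)` transcript format, whitespace tokenization, and the use of the population standard deviation are all illustrative choices not stated in the abstract.

```python
from statistics import mean, pstdev


def client_word_stats(turns):
    """Mean and population std of words per client turn.

    `turns` is a list of (speaker, text) pairs; words are split on whitespace.
    """
    counts = [len(text.split()) for speaker, text in turns if speaker == "client"]
    if not counts:
        return 0.0, 0.0
    return mean(counts), pstdev(counts)


# Hypothetical toy transcript, not drawn from the study's data.
transcript = [
    ("therapist", "How are you feeling today?"),
    ("client", "I feel tired"),
    ("therapist", "Tell me more"),
    ("client", "Work has been really stressful this week"),
]

avg_words, std_words = client_word_stats(transcript)
print(avg_words, std_words)
```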

Understanding LLM Scientific Reasoning through Promptings and Model's Explanation on the Answers

May 02, 2025

Abstract: Large language models (LLMs) have demonstrated remarkable capabilities in natural language understanding, reasoning, and problem-solving across various domains. However, their ability to perform complex, multi-step reasoning tasks, which are essential for applications in science, medicine, and law, remains an area of active investigation. This paper examines the reasoning capabilities of contemporary LLMs, analyzing their strengths, limitations, and potential for improvement. The study uses prompt engineering techniques on the Graduate-Level Google-Proof Q&A (GPQA) dataset to assess the scientific reasoning of GPT-4o. Five popular prompt engineering techniques and two tailored prompting strategies were tested: baseline direct answer (zero-shot), chain-of-thought (CoT), zero-shot CoT, self-ask, self-consistency, decomposition, and multipath prompting. Our findings indicate that while LLMs exhibit emergent reasoning abilities, they often rely on pattern recognition rather than true logical inference, leading to inconsistencies in complex problem-solving. Self-consistency outperformed the other prompt engineering techniques with an accuracy of 52.99%, followed by direct answer (52.23%). Zero-shot CoT (50%) outperformed multipath (48.44%), decomposition (47.77%), self-ask (46.88%), and CoT (43.75%). However, self-consistency performed second worst in explaining its answers. Simple techniques such as direct answer, CoT, and zero-shot CoT showed the best scientific reasoning. We propose a research agenda aimed at bridging these gaps by integrating structured reasoning frameworks, hybrid AI approaches, and human-in-the-loop methodologies. By critically evaluating the reasoning mechanisms of LLMs, this paper contributes to the ongoing discourse on the future of artificial general intelligence and the development of more robust, trustworthy AI systems.
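Self-consistency, the best-scoring technique in this abstract, is usually implemented as a majority vote over several independently sampled answers. The sketch below shows only that voting step; `sample_answer` is a hypothetical callable standing in for a model call, and the canned answers are made up for illustration.

```python
from collections import Counter


def self_consistency(sample_answer, question, n_samples=5):
    """Sample several answers and return the most frequent one.

    Returns (winning_answer, fraction_of_samples_that_agree).
    """
    answers = [sample_answer(question) for _ in range(n_samples)]
    winner, count = Counter(answers).most_common(1)[0]
    return winner, count / n_samples


# Hypothetical stand-in for a stochastic model: replays canned answers.
canned = iter(["B", "A", "B", "C", "B"])
answer, agreement = self_consistency(lambda q: next(canned), "toy question", n_samples=5)
print(answer, agreement)
```

The agreement fraction is a by-product of the vote and gives a rough sense of how stable the sampled answers are.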

Evidential Uncertainty Sets in Deep Classifiers Using Conformal Prediction

Jun 16, 2024

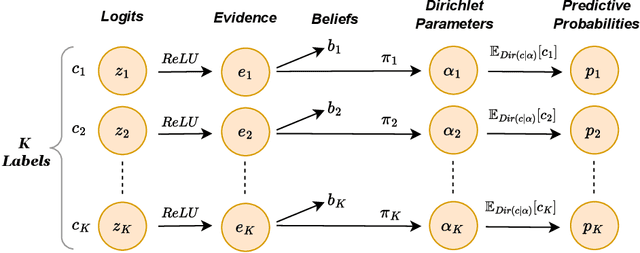

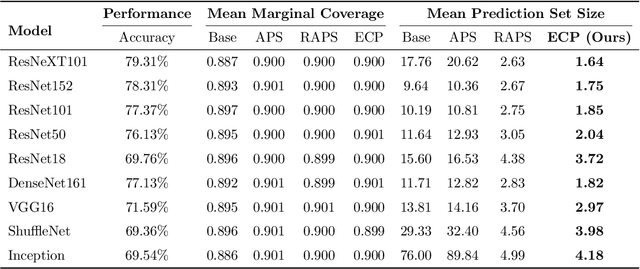

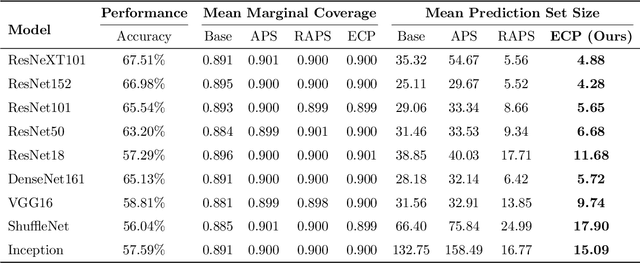

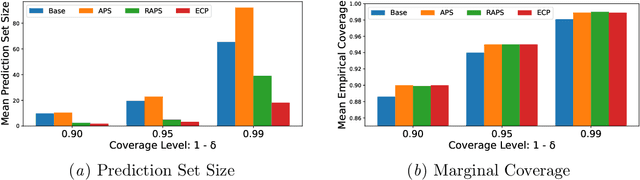

Abstract: In this paper, we propose the Evidential Conformal Prediction (ECP) method for image classifiers to generate conformal prediction sets. Our method is based on a non-conformity score function that has its roots in Evidential Deep Learning (EDL) as a method of quantifying model (epistemic) uncertainty in DNN classifiers. We use evidence derived from the logit values of target labels to compute the components of our non-conformity score function: the heuristic notion of uncertainty in CP, uncertainty surprisal, and expected utility. Our extensive experimental evaluation demonstrates that ECP outperforms three state-of-the-art methods for generating CP sets in terms of set size and adaptivity, while maintaining the coverage of true labels.
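For readers unfamiliar with how conformal prediction sets are built, here is a minimal split-conformal sketch. It deliberately uses the common "one minus the probability of the true class" score, not the evidential score proposed in the paper; the function names and toy probabilities are assumptions for illustration.

```python
import math


def conformal_threshold(cal_probs, cal_labels, alpha):
    """Conformal quantile of the scores 1 - p(true class) on calibration data."""
    scores = sorted(1.0 - probs[label] for probs, label in zip(cal_probs, cal_labels))
    n = len(scores)
    k = math.ceil((n + 1) * (1 - alpha))  # rank of the finite-sample quantile
    if k > n:
        return float("inf")  # too few calibration points: include every class
    return scores[k - 1]


def prediction_set(probs, threshold):
    """All classes whose non-conformity score does not exceed the threshold."""
    return [c for c, p in enumerate(probs) if 1.0 - p <= threshold]


# Toy calibration data (hypothetical class probabilities and true labels).
cal_probs = [[0.9, 0.05, 0.05], [0.2, 0.6, 0.2], [0.5, 0.2, 0.3], [0.1, 0.1, 0.8]]
cal_labels = [0, 1, 2, 2]

threshold = conformal_threshold(cal_probs, cal_labels, alpha=0.25)
pred_set = prediction_set([0.5, 0.35, 0.15], threshold)
print(threshold, pred_set)
```

A different non-conformity score changes only the two places where `1.0 - p` appears; the calibration and set-construction steps stay the same.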

CycleGAN Models for MRI Image Translation

Jan 05, 2024

Abstract: Image-to-image translation has gained popularity in the medical field to transform images from one domain to another. Medical image synthesis via domain transformation is advantageous in its ability to augment an image dataset where images for a given class are limited. From the learning perspective, this process contributes to the data-oriented robustness of the model by inherently broadening the model's exposure to more diverse visual data and enabling it to learn more generalized features. In the case of generating additional neuroimages, it is advantageous to obtain unidentifiable medical data and augment smaller annotated datasets. This study proposes the development of a CycleGAN model for translating neuroimages from one field strength to another (e.g., 3 Tesla to 1.5 Tesla). This model was compared to a model based on the DCGAN architecture. CycleGAN was able to generate the synthetic and reconstructed images with reasonable accuracy. The mapping function from the source (3 Tesla) to the target domain (1.5 Tesla) performed optimally with an average PSNR value of 25.69 $\pm$ 2.49 dB and an MAE value of 2106.27 $\pm$ 1218.37.
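The two reported metrics have standard definitions: MAE is the mean absolute intensity difference, and PSNR is $10 \log_{10}(\text{MAX}^2 / \text{MSE})$ in dB. The sketch below computes both on flattened pixel lists. The 8-bit range (`max_value=255`) and the toy pixel values are assumptions; the abstract does not state the intensity range used for the MRI data.

```python
import math


def mae(reference, generated):
    """Mean absolute error between two equally sized pixel sequences."""
    return sum(abs(r - g) for r, g in zip(reference, generated)) / len(reference)


def psnr(reference, generated, max_value):
    """Peak signal-to-noise ratio in dB; max_value is the peak intensity."""
    mse = sum((r - g) ** 2 for r, g in zip(reference, generated)) / len(reference)
    if mse == 0:
        return float("inf")  # identical images
    return 10 * math.log10(max_value ** 2 / mse)


# Hypothetical flattened 2x2 images with an assumed 8-bit intensity range.
reference = [0, 100, 200, 255]
generated = [0, 110, 190, 255]

image_mae = mae(reference, generated)
image_psnr = psnr(reference, generated, max_value=255)
print(image_mae, round(image_psnr, 2))
```

Because PSNR depends on the chosen peak value and MAE on the raw intensity scale, both numbers are only comparable across studies that use the same intensity range.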

Quantifying Deep Learning Model Uncertainty in Conformal Prediction

Jun 01, 2023

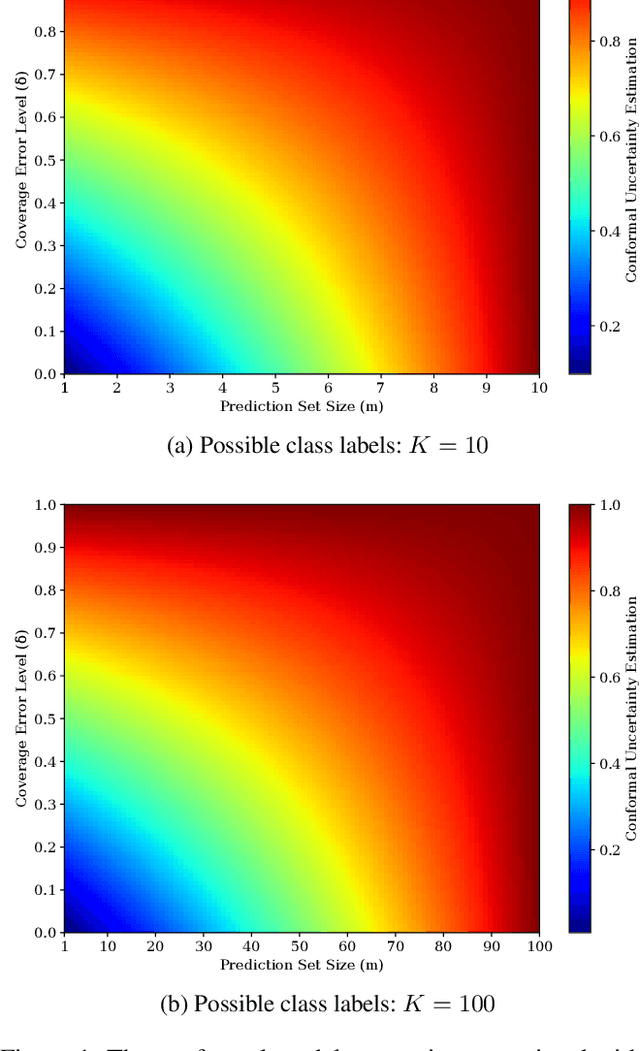

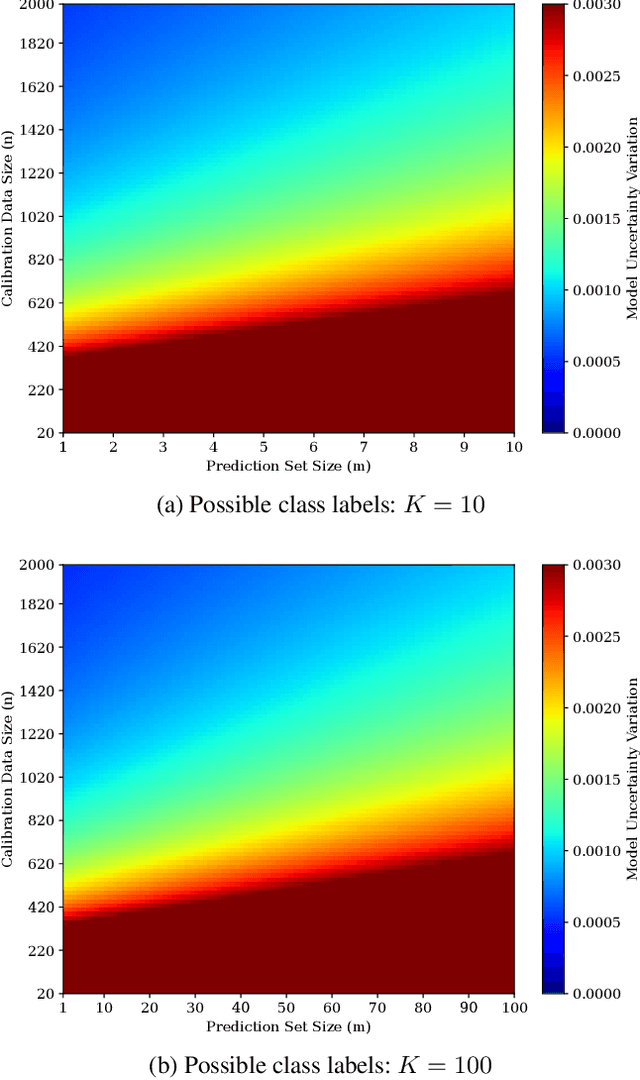

Abstract: Precise estimation of predictive uncertainty in deep neural networks is a critical requirement for reliable decision-making in machine learning and statistical modeling, particularly in the context of medical AI. Conformal Prediction (CP) has emerged as a promising framework for representing model uncertainty by providing well-calibrated confidence levels for individual predictions. However, the quantification of model uncertainty in conformal prediction remains an active research area that has yet to be fully addressed. In this paper, we explore state-of-the-art CP methodologies and their theoretical foundations. We propose a probabilistic approach to quantifying the model uncertainty derived from the prediction sets produced in conformal prediction and provide certified boundaries for the computed uncertainty. By doing so, we allow model uncertainty measured by CP to be compared with other uncertainty quantification methods such as Bayesian (e.g., MC-Dropout and DeepEnsemble) and Evidential approaches.

Artificial Intelligence Nomenclature Identified From Delphi Study on Key Issues Related to Trust and Barriers to Adoption for Autonomous Systems

Oct 14, 2022

Abstract: The rapid integration of artificial intelligence across traditional research domains has generated an amalgamation of nomenclature. As cross-discipline teams work together on complex machine learning challenges, finding a consensus of basic definitions in the literature is a more fundamental problem. As a step in the Delphi process to define issues with trust and barriers to the adoption of autonomous systems, our study first collected and ranked the top concerns from a panel of international experts from the fields of engineering, computer science, medicine, aerospace, and defence, with experience working with artificial intelligence. This document presents a summary of the literature definitions for nomenclature derived from expert feedback.

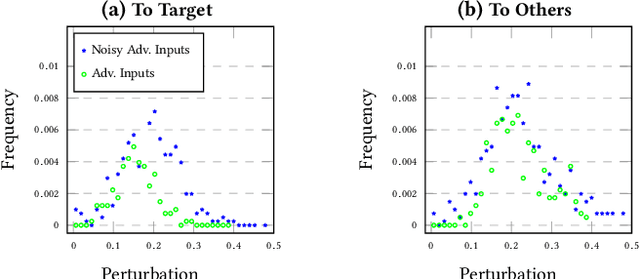

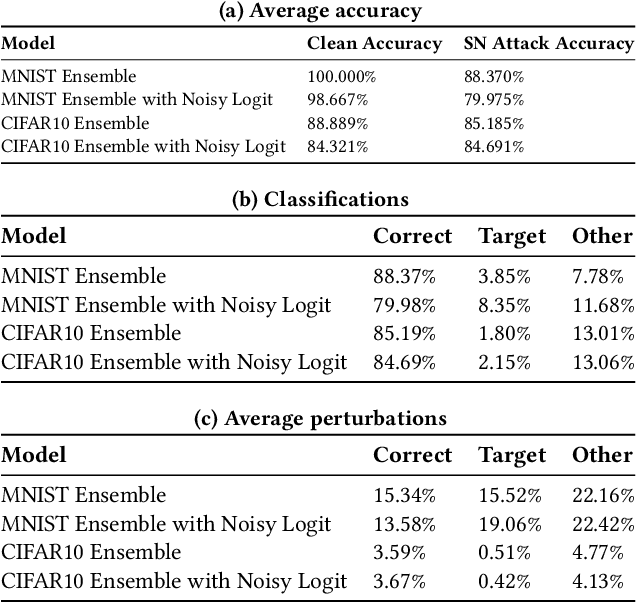

Towards Robust Deep Learning with Ensemble Networks and Noisy Layers

Jul 03, 2020

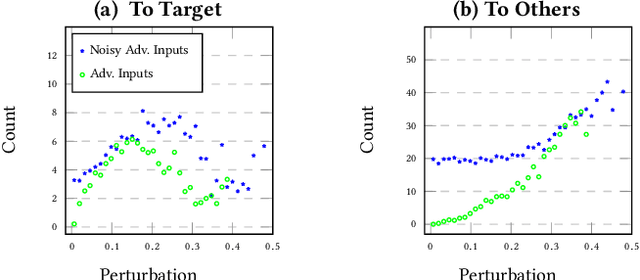

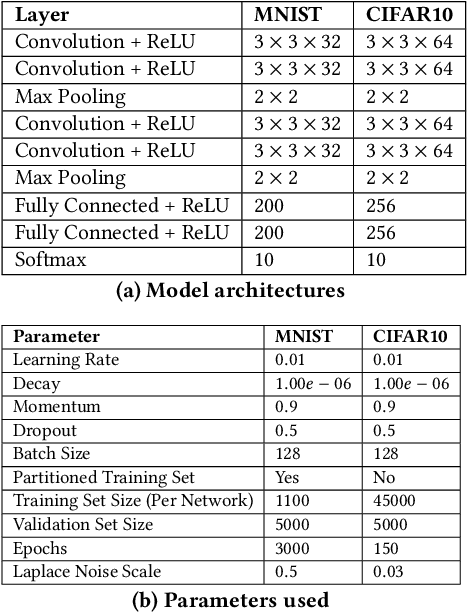

Abstract: In this paper we provide an approach for deep learning that protects against adversarial examples in image classification-type networks. The approach relies on two mechanisms: 1) a mechanism that increases robustness at the expense of accuracy, and 2) a mechanism that improves accuracy but does not always increase robustness. We show that an approach combining the two mechanisms can provide protection against adversarial examples while retaining accuracy. We formulate potential attacks on our approach and provide experimental results to demonstrate the effectiveness of our approach.

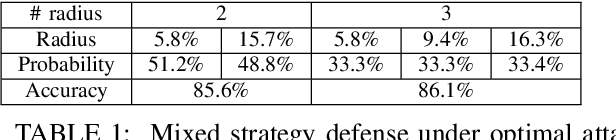

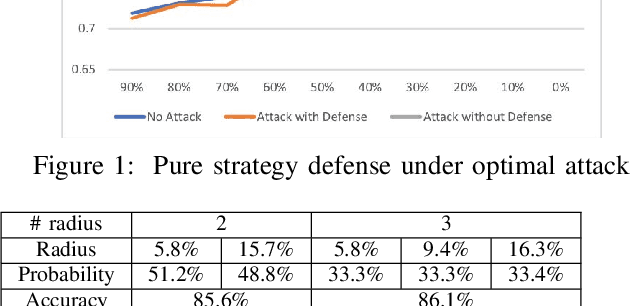

Mixed Strategy Game Model Against Data Poisoning Attacks

Jun 07, 2019

Abstract: In this paper we use game theory to model poisoning attack scenarios. We prove the non-existence of a pure strategy Nash Equilibrium in the attacker and defender game. We then propose a mixed extension of our game model and an algorithm to approximate the Nash Equilibrium strategy for the defender. We then demonstrate the effectiveness of the mixed defence strategy generated by the algorithm in an experiment.
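The distinction between pure and mixed equilibria can be seen in a tiny two-player zero-sum game. The sketch below enumerates pure Nash equilibria of a 2x2 game and, when none exists, uses the textbook indifference condition to get the row player's mixed strategy. The payoff matrix is a hypothetical matching-pennies example with the defender as the row player; it is not the paper's poisoning game or its approximation algorithm.

```python
def pure_nash_equilibria(row_payoff, col_payoff):
    """All (i, j) cells where neither player gains by deviating alone."""
    n_rows, n_cols = len(row_payoff), len(row_payoff[0])
    equilibria = []
    for i in range(n_rows):
        for j in range(n_cols):
            row_best = row_payoff[i][j] >= max(row_payoff[k][j] for k in range(n_rows))
            col_best = col_payoff[i][j] >= max(col_payoff[i][l] for l in range(n_cols))
            if row_best and col_best:
                equilibria.append((i, j))
    return equilibria


def mixed_row_strategy_2x2(row_payoff):
    """Probability of playing row 0 that makes the column player indifferent.

    Valid for 2x2 zero-sum games that have no pure equilibrium.
    """
    (a, b), (c, d) = row_payoff
    return (d - c) / (a - b - c + d)


# Hypothetical zero-sum game: defender (rows) vs attacker (columns).
defender = [[1, -1], [-1, 1]]
attacker = [[-v for v in row] for row in defender]

pure = pure_nash_equilibria(defender, attacker)
p_row0 = mixed_row_strategy_2x2(defender)
print(pure, p_row0)
```

In this toy game every pure choice can be exploited by the opponent, so the only equilibrium is for the defender to randomize evenly between the two rows.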
