Ranjitha Prasad

Nishpaksh: TEC Standard-Compliant Framework for Fairness Auditing and Certification of AI Models

Jan 23, 2026

Abstract: The growing reliance on Artificial Intelligence (AI) models in high-stakes decision-making systems, particularly within emerging telecom and 6G applications, underscores the urgent need for transparent and standardized fairness assessment frameworks. While global toolkits such as IBM AI Fairness 360 and Microsoft Fairlearn have advanced bias detection, they often lack alignment with region-specific regulatory requirements and national priorities. To address this gap, we propose Nishpaksh, an indigenous fairness evaluation tool that operationalizes the Telecommunication Engineering Centre (TEC) Standard for the Evaluation and Rating of Artificial Intelligence Systems. Nishpaksh integrates survey-based risk quantification, contextual threshold determination, and quantitative fairness evaluation into a unified, web-based dashboard. The tool employs vectorized computation, reactive state management, and certification-ready reporting to enable reproducible, audit-grade assessments, thereby addressing a critical post-standardization implementation need. Experimental validation on the COMPAS dataset demonstrates Nishpaksh's effectiveness in identifying attribute-specific bias and generating standardized fairness scores compliant with the TEC framework. The system bridges the gap between research-oriented fairness methodologies and regulatory AI governance in India, marking a significant step toward responsible and auditable AI deployment within critical infrastructure like telecommunications.
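
To make the idea of attribute-specific bias concrete, here is a minimal sketch of two widely used group-fairness quantities, the statistical parity difference and the disparate impact ratio. It is illustrative only: the function name `group_fairness`, the toy records, and the choice of metrics are assumptions and are not taken from Nishpaksh or the TEC standard.

```python
from collections import defaultdict


def group_fairness(predictions, groups, privileged):
    """Return (statistical parity difference, disparate impact ratio).

    predictions: iterable of 0/1 model decisions (1 = favourable outcome).
    groups: iterable of group labels aligned with predictions.
    privileged: label treated as the privileged group (hypothetical choice).
    """
    favourable = defaultdict(int)
    total = defaultdict(int)
    for y_hat, g in zip(predictions, groups):
        key = "privileged" if g == privileged else "unprivileged"
        favourable[key] += y_hat
        total[key] += 1
    if total["privileged"] == 0 or total["unprivileged"] == 0:
        raise ValueError("both groups must be non-empty")
    rate_priv = favourable["privileged"] / total["privileged"]
    rate_unpriv = favourable["unprivileged"] / total["unprivileged"]
    spd = rate_unpriv - rate_priv
    # The ratio is undefined if the privileged group never gets the favourable outcome.
    di = rate_unpriv / rate_priv if rate_priv > 0 else float("nan")
    return spd, di


# Toy data, made up for illustration (not COMPAS).
preds = [1, 1, 1, 0, 1, 0, 0, 0]
attrs = ["a", "a", "a", "a", "b", "b", "b", "b"]
spd, di = group_fairness(preds, attrs, privileged="a")
print(f"SPD={spd:.2f}, DI={di:.2f}")
```

A value of SPD near 0 and DI near 1 indicates similar favourable-outcome rates across the two groups; an audit would repeat the computation for each protected attribute.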

Representation Learning Preserving Ignorability and Covariate Matching for Treatment Effects

Apr 29, 2025

Abstract: Estimating treatment effects from observational data is challenging for two main reasons: (a) hidden confounding, and (b) covariate mismatch (the control and treatment groups do not have identical distributions). Long lines of work exist that address only one of these issues. To address the former, conventional techniques that require detailed knowledge in the form of causal graphs have been proposed. For the latter, covariate matching and importance weighting methods have been used. Recently, there has been progress in combining testable independencies with partial side information for tackling hidden confounding. A common framework to address both hidden confounding and selection bias is missing. We propose neural architectures that aim to learn a representation of pre-treatment covariates that is a valid adjustment and also satisfies covariate matching constraints. We combine two different neural architectures: one based on gradient matching across domains created by subsampling a suitable anchor variable that assumes causal side information, followed by a covariate matching transformation. We prove that approximately invariant representations yield approximately valid adjustment sets, which in turn yield an interval around the true causal effect. In contrast to usual sensitivity analysis, where an unknown nuisance parameter is varied, we have a testable approximation yielding a bound on the effect estimate. We also outperform various baselines with respect to ATE and PEHE errors on causal benchmarks that include IHDP, Jobs, Cattaneo, and an image-based Crowd Management dataset.
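
For readers unfamiliar with the two evaluation metrics named above, the following sketch computes the ATE error and the PEHE from per-unit effect estimates, using their standard definitions. The function names and toy numbers are assumptions for illustration; the root form of PEHE is used here, whereas some papers report the squared quantity.

```python
import math


def ate_error(tau_hat, tau_true):
    """Absolute error between the estimated and true average treatment effect."""
    n = len(tau_true)
    return abs(sum(tau_hat) / n - sum(tau_true) / n)


def pehe(tau_hat, tau_true):
    """Root PEHE: root-mean-square error of the individual effect estimates."""
    n = len(tau_true)
    return math.sqrt(sum((h - t) ** 2 for h, t in zip(tau_hat, tau_true)) / n)


# Toy individual treatment effects (made up; real benchmarks supply tau_true
# only because their outcomes are simulated or semi-synthetic).
tau_true = [1.0, 2.0, 3.0, 4.0]
tau_hat = [1.5, 1.5, 3.5, 3.5]
print(ate_error(tau_hat, tau_true), pehe(tau_hat, tau_true))
```

In this toy case the per-unit errors cancel in the average, so the ATE error is zero while the PEHE is not, which is why the two metrics are usually reported together.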

Noise Resilient Over-The-Air Federated Learning In Heterogeneous Wireless Networks

Mar 25, 2025

Abstract: In 6G wireless networks, Artificial Intelligence (AI)-driven applications demand the adoption of Federated Learning (FL) to enable efficient and privacy-preserving model training across distributed devices. Over-The-Air Federated Learning (OTA-FL) exploits the superposition property of multiple access channels, allowing edge users in 6G networks to efficiently share spectral resources and perform low-latency global model aggregation. However, these advantages come with challenges, as traditional OTA-FL techniques suffer from the joint effects of Additive White Gaussian Noise (AWGN) at the server, fading, and both data and system heterogeneity at the participating edge devices. In this work, we propose the novel Noise Resilient Over-the-Air Federated Learning (NoROTA-FL) framework to jointly tackle these challenges in federated wireless networks. In NoROTA-FL, the local optimization problems are solved inexactly in a controlled manner, which manifests as an additional proximal constraint at the clients. This approach provides robustness against straggler-induced partial work, heterogeneity, noise, and fading. From a theoretical perspective, we leverage the zeroth- and first-order inexactness and establish convergence guarantees for non-convex optimization problems in the presence of heterogeneous data and varying system capabilities. Experimentally, we validate NoROTA-FL on real-world datasets, including FEMNIST, CIFAR10, and CIFAR100, demonstrating its robustness in noisy and heterogeneous environments. Compared to state-of-the-art baselines such as COTAF and FedProx, NoROTA-FL achieves significantly more stable convergence and higher accuracy, particularly in the presence of stragglers.
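
The interplay between a proximal term at the clients and noisy over-the-air aggregation can be illustrated with a small toy loop. This is a FedProx-style sketch under strong assumptions, not the NoROTA-FL algorithm itself: each client has a quadratic loss, the channel adds Gaussian noise only (no fading or power control), and the names `local_proximal_update` and `ota_aggregate` are hypothetical.

```python
import random


def local_proximal_update(w_global, target, lam, lr, steps):
    """Gradient steps on 0.5*||w - target||^2 + (lam/2)*||w - w_global||^2."""
    w = list(w_global)
    for _ in range(steps):
        w = [
            wi - lr * ((wi - ti) + lam * (wi - gi))
            for wi, ti, gi in zip(w, target, w_global)
        ]
    return w


def ota_aggregate(client_models, noise_std, rng):
    """Average models as if summed on a multiple access channel with AWGN."""
    k = len(client_models)
    dim = len(client_models[0])
    return [
        (sum(m[j] for m in client_models) + rng.gauss(0.0, noise_std)) / k
        for j in range(dim)
    ]


rng = random.Random(0)
w_global = [0.0, 0.0]
targets = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # heterogeneous client optima (toy)
steps_per_client = [1, 5, 20]  # stragglers complete fewer local steps
for _ in range(50):
    local_models = [
        local_proximal_update(w_global, t, lam=0.5, lr=0.1, steps=s)
        for t, s in zip(targets, steps_per_client)
    ]
    w_global = ota_aggregate(local_models, noise_std=0.05, rng=rng)
print(w_global)
```

The proximal weight `lam` keeps each local model close to the last global model, which limits how far clients with many local steps can drift from clients that did only partial work.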

On the Convergence of Continual Federated Learning Using Incrementally Aggregated Gradients

Nov 12, 2024

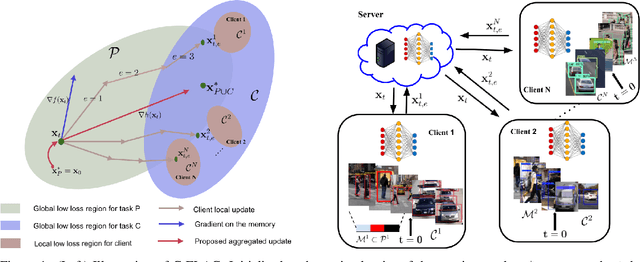

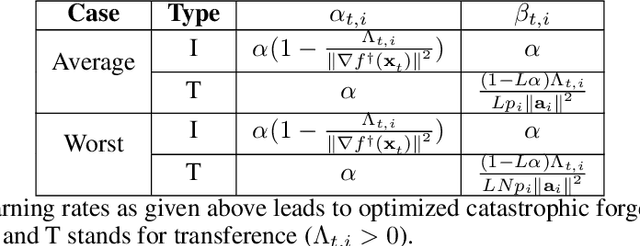

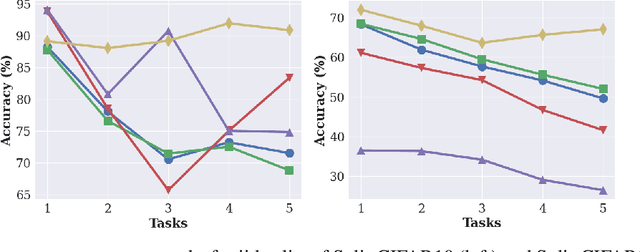

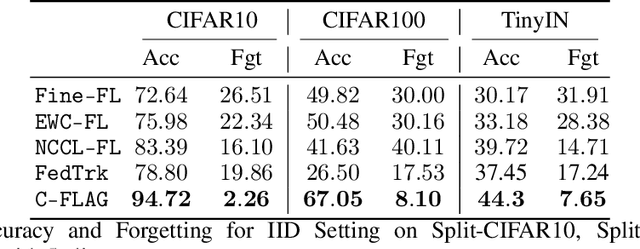

Abstract: The holy grail of machine learning is to enable Continual Federated Learning (CFL) to enhance the efficiency, privacy, and scalability of AI systems while learning from streaming data. The primary challenge of a CFL system is to overcome global catastrophic forgetting, wherein the accuracy of the global model trained on new tasks declines on the old tasks. In this work, we propose Continual Federated Learning with Aggregated Gradients (C-FLAG), a novel replay-memory-based federated strategy consisting of edge-based gradient updates on memory and aggregated gradients on the current data. We provide a convergence analysis of the C-FLAG approach, which addresses forgetting and bias while converging at a rate of $O(1/\sqrt{T})$ over $T$ communication rounds. We formulate an optimization sub-problem that minimizes catastrophic forgetting, translating CFL into an iterative algorithm with adaptive learning rates that ensure seamless learning across tasks. We empirically show that C-FLAG outperforms several state-of-the-art baselines in both task-incremental and class-incremental settings with respect to metrics such as accuracy and forgetting.

On Homomorphic Encryption Based Strategies for Class Imbalance in Federated Learning

Oct 28, 2024

Abstract: Class imbalance in training datasets can lead to bias and poor generalization in machine learning models. While pre-processing of training datasets can efficiently address both these issues in centralized learning environments, it is challenging to detect and address them in a distributed learning environment such as federated learning. In this paper, we propose FLICKER, a privacy-preserving framework to address issues related to global class imbalance in federated learning. At the heart of our contribution lies the popular CKKS homomorphic encryption scheme, which is used by the clients to privately share their data attributes and subsequently balance their datasets before implementing the FL scheme. Extensive experimental results show that our proposed method significantly improves FL accuracy when used with popular datasets and relevant baselines.

CLIMAX: An exploration of Classifier-Based Contrastive Explanations

Jul 02, 2023

Abstract: Explainable AI is an evolving area that deals with understanding the decision making of machine learning models so that these models are more transparent, accountable, and understandable for humans. In particular, post-hoc model-agnostic interpretable AI techniques explain the decisions of a black-box ML model for a single instance locally, without knowledge of the intrinsic nature of the ML model. Despite their simplicity and capability in providing valuable insights, existing approaches fail to deliver consistent and reliable explanations. Moreover, in the context of black-box classifiers, existing approaches justify the predicted class, but they do not ensure that the explanation scores differ strongly from those of another class. In this work, we propose a novel post-hoc model-agnostic XAI technique that provides contrastive explanations justifying the classification of a black-box classifier, along with reasoning as to why another class was not predicted. Our method, which we refer to as CLIMAX (short for Contrastive Label-aware Influence-based Model Agnostic XAI), is based on local classifiers. In order to ensure model fidelity of the explainer, we require the perturbations to be such that they lead to a class-balanced surrogate dataset. Towards this, we employ a label-aware surrogate data generation method based on random oversampling and Gaussian Mixture Model sampling. Further, we propose influence subsampling in order to retain effective samples and hence limit sample complexity. We show that we achieve better consistency as compared to baselines such as LIME, BayLIME, and SLIME. We also depict results on textual and image-based datasets, where we generate contrastive explanations for any black-box classification model where one is able to only query the class probabilities for an instance of interest.
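
The class-balancing requirement on the surrogate dataset can be sketched with plain random oversampling. This covers only one ingredient mentioned in the abstract; the Gaussian Mixture Model sampling and influence subsampling steps are not shown, and the function name `random_oversample` and the toy neighbourhood are assumptions.

```python
import random
from collections import Counter


def random_oversample(samples, labels, rng):
    """Duplicate minority-class samples at random until all classes are equally frequent."""
    by_class = {}
    for x, y in zip(samples, labels):
        by_class.setdefault(y, []).append(x)
    target = max(len(xs) for xs in by_class.values())
    out_x, out_y = [], []
    for y, xs in by_class.items():
        extra = [rng.choice(xs) for _ in range(target - len(xs))]
        out_x.extend(xs + extra)
        out_y.extend([y] * target)
    return out_x, out_y


rng = random.Random(0)
# Hypothetical perturbed neighbours of an instance and the labels a black box gave them.
neighbours = [[0.1, 0.2], [0.0, 0.3], [0.2, 0.1], [0.9, 0.8]]
black_box_labels = [0, 0, 0, 1]
bal_x, bal_y = random_oversample(neighbours, black_box_labels, rng)
print(Counter(bal_y))
```

A local classifier fitted on the balanced set sees both the predicted class and the contrast class equally often, which is the property the surrogate data generation is meant to provide.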

Over-The-Air Clustered Wireless Federated Learning

Nov 08, 2022

Abstract: Privacy, security, and bandwidth constraints have led to federated learning (FL) in wireless systems, where training a machine learning (ML) model is accomplished collaboratively without sharing raw data. Often, such collaborative FL strategies necessitate model aggregation at a server. On the other hand, decentralized FL necessitates that participating clients reach a consensus ML model by exchanging parameter updates. In this work, we propose the over-the-air clustered wireless FL (CWFL) strategy, which eliminates the need for a strong central server and yet achieves an accuracy similar to the server-based strategy while using fewer channel uses as compared to decentralized FL. We theoretically show that the convergence rate of CWFL per cluster is $O(1/T)$ while mitigating the impact of noise. Using the MNIST and CIFAR datasets, we demonstrate the accuracy of CWFL for different numbers of clusters across communication rounds.

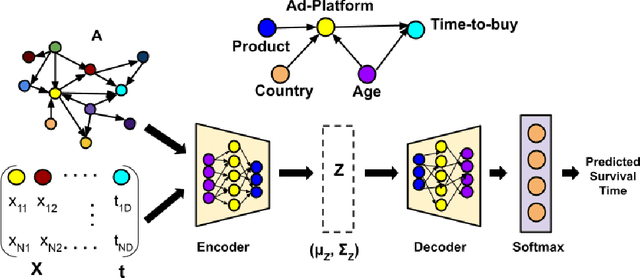

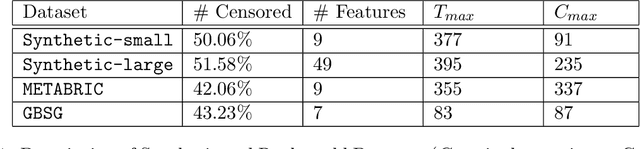

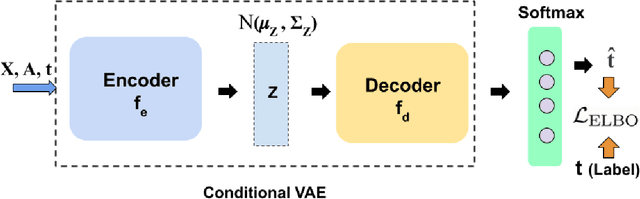

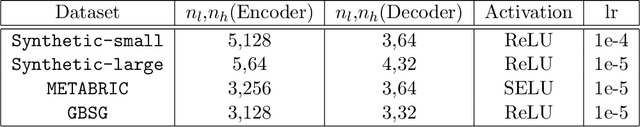

DAGSurv: Directed Acyclic Graph Based Survival Analysis Using Deep Neural Networks

Nov 02, 2021

Abstract: Causal structures for observational survival data provide crucial information regarding the relationships between covariates and time-to-event. We derive motivation from the information-theoretic source coding argument, and show that incorporating the knowledge of the directed acyclic graph (DAG) can be beneficial if suitable source encoders are employed. As a possible source encoder in this context, we derive a variational inference based conditional variational autoencoder for causal structured survival prediction, which we refer to as DAGSurv. We illustrate the performance of DAGSurv on low- and high-dimensional synthetic datasets, and real-world datasets such as METABRIC and GBSG. We demonstrate that the proposed method outperforms other survival analysis baselines such as Cox Proportional Hazards, DeepSurv, and DeepHit, which are oblivious to the underlying causal relationship between data entities.

Locally Interpretable Model Agnostic Explanations using Gaussian Processes

Aug 16, 2021

Abstract: Owing to tremendous performance improvements in data-intensive domains, machine learning (ML) has garnered immense interest in the research community. However, these ML models turn out to be black boxes, which are hard to interpret, resulting in a direct decrease in productivity. Local Interpretable Model-Agnostic Explanations (LIME) is a popular technique for explaining the prediction of a single instance. Although LIME is simple and versatile, it suffers from instability in the generated explanations. In this paper, we propose a Gaussian Process (GP) based variation of locally interpretable models. We employ a smart sampling strategy based on the acquisition functions in Bayesian optimization. Further, we employ the automatic relevance determination based covariance function in GP, with separate length-scale parameters for each feature, where the reciprocals of the length-scale parameters serve as feature explanations. We illustrate the performance of the proposed technique on two real-world datasets, and demonstrate the superior stability of the proposed technique. Furthermore, we demonstrate that the proposed technique is able to generate faithful explanations using far fewer samples as compared to LIME.
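
The role of per-feature length-scales can be seen in a small sketch of an automatic relevance determination (ARD) squared-exponential kernel. The length-scale values below are assumed for illustration; in a GP they would be learned, typically by maximising the marginal likelihood, and the normalisation in `relevance_scores` is a presentational choice rather than something stated in the abstract.

```python
import math


def ard_rbf(x, z, lengthscales, variance=1.0):
    """ARD squared-exponential kernel with one length-scale per feature."""
    sq = sum(((a - b) / l) ** 2 for a, b, l in zip(x, z, lengthscales))
    return variance * math.exp(-0.5 * sq)


def relevance_scores(lengthscales):
    """Normalised reciprocal length-scales, read as feature importances."""
    inv = [1.0 / l for l in lengthscales]
    total = sum(inv)
    return [v / total for v in inv]


lengthscales = [0.5, 2.0, 10.0]  # assumed values, not learned here
x = [0.0, 0.0, 0.0]
move_f0 = [1.0, 0.0, 0.0]  # unit change in the short length-scale feature
move_f2 = [0.0, 0.0, 1.0]  # unit change in the long length-scale feature
print(ard_rbf(x, move_f0, lengthscales), ard_rbf(x, move_f2, lengthscales))
print(relevance_scores(lengthscales))
```

A short length-scale means the kernel value drops quickly when that feature changes, so the surrogate's output is sensitive to it and its reciprocal length-scale is large.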

B-SMALL: A Bayesian Neural Network approach to Sparse Model-Agnostic Meta-Learning

Jan 01, 2021

Abstract: There is a growing interest in the learning-to-learn paradigm, also known as meta-learning, where models perform inference on new tasks using a few training examples. Recently, meta-learning based methods have been widely used in few-shot classification, regression, reinforcement learning, and domain adaptation. The model-agnostic meta-learning (MAML) algorithm is a well-known algorithm that obtains a model parameter initialization in the meta-training phase. In the meta-test phase, this initialization is rapidly adapted to new tasks by using gradient descent. However, meta-learning models are prone to overfitting since there are insufficient training tasks, resulting in over-parameterized models with poor generalization performance for unseen tasks. In this paper, we propose a Bayesian neural network based MAML algorithm, which we refer to as the B-SMALL algorithm. The proposed framework incorporates a sparse variational loss term alongside the loss function of MAML, which uses a sparsifying approximated KL divergence as a regularizer. We demonstrate the performance of B-SMALL using classification and regression tasks, and highlight that training a sparsifying BNN using MAML indeed improves the parameter footprint of the model while performing on par with or even outperforming the MAML approach. We also illustrate the applicability of our approach in distributed sensor networks, where sparsity and meta-learning can be beneficial.
