Aditya Saini

FaIRCoP: Facial Image Retrieval using Contrastive Personalization

May 28, 2022

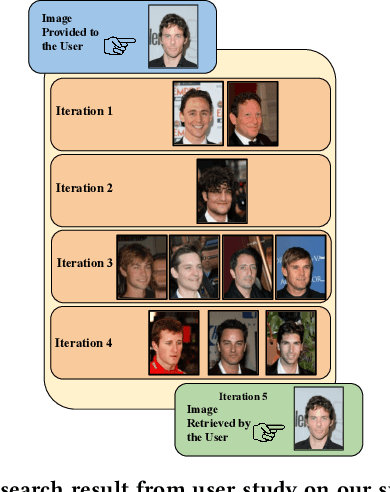

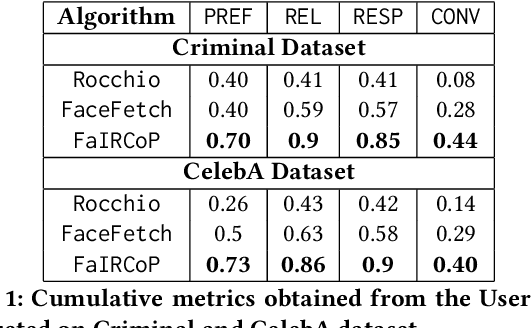

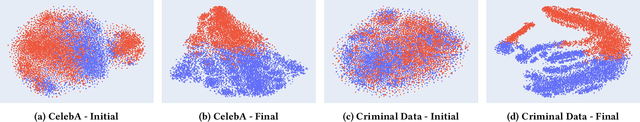

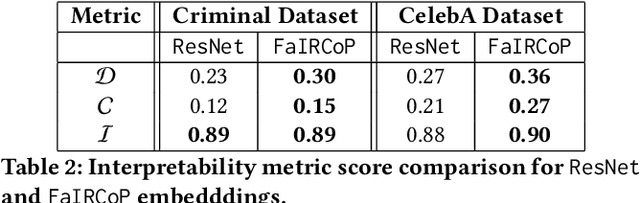

Abstract: Retrieving facial images from attributes plays a vital role in systems such as face recognition and suspect identification. Compared to other image retrieval tasks, facial image retrieval is more challenging because describing a person's facial features is highly subjective. Existing methods address this by comparing specific characteristics of the user's mental image against the suggested images via high-level supervision, such as natural language. In contrast, we propose a method that uses a simpler form of binary supervision: the user's feedback labels each image as either similar or dissimilar to the target image. Such supervision enables us to exploit the contrastive learning paradigm to capture each user's personalized notion of similarity. For this, we propose a novel loss function that is optimized online via user feedback. We validate the efficacy of our approach using a carefully designed testbed that simulates user feedback and a large-scale user study. Our experiments demonstrate that our method iteratively improves personalization, leading to faster convergence and more relevant recommendations, thereby improving user satisfaction. Our framework also includes a user-friendly web interface that provides a real-time experience for facial image retrieval.
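To make the binary-feedback idea concrete, the following is a minimal sketch of a standard margin-based pairwise contrastive loss. It is not the novel loss function the abstract refers to, which is not specified here. The embeddings, the `margin` value, and the function names are all hypothetical and chosen only for illustration.

```python
import math


def euclidean(a, b):
    """Euclidean distance between two equal-length embedding vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def pairwise_contrastive_loss(target, candidate, is_similar, margin=1.0):
    """Standard contrastive loss for one (target, candidate) pair.

    Assumption: this is the classic margin-based formulation, used here
    as a stand-in; it is not the loss proposed in the paper.
    """
    d = euclidean(target, candidate)
    if is_similar:
        # Pull images the user marked as similar toward the target.
        return d ** 2
    # Push dissimilar images away until they are at least `margin` apart.
    return max(0.0, margin - d) ** 2


# Hypothetical 2-D embeddings paired with simulated user feedback.
target = [0.0, 0.0]
feedback = [
    ([0.1, 0.0], True),   # marked similar, already close
    ([0.2, 0.1], False),  # marked dissimilar but close: penalized
    ([2.0, 2.0], False),  # marked dissimilar and far: no penalty
]
losses = [pairwise_contrastive_loss(target, emb, sim) for emb, sim in feedback]
```

In an online setting, each round of feedback would contribute terms like these to an update of the embedding model; that update step is omitted here.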

Locally Interpretable Model Agnostic Explanations using Gaussian Processes

Aug 16, 2021

Abstract: Owing to tremendous performance improvements in data-intensive domains, machine learning (ML) has garnered immense interest in the research community. However, these ML models often turn out to be black boxes that are hard to interpret, which directly reduces productivity. Local Interpretable Model-Agnostic Explanations (LIME) is a popular technique for explaining the prediction of a single instance. Although LIME is simple and versatile, the explanations it generates are unstable. In this paper, we propose a Gaussian Process (GP) based variation of locally interpretable models. We employ a smart sampling strategy based on the acquisition functions used in Bayesian optimization. Further, we employ the automatic relevance determination (ARD) based covariance function in the GP, with a separate length-scale parameter for each feature, where the reciprocals of the length-scale parameters serve as feature explanations. We illustrate the performance of the proposed technique on two real-world datasets and demonstrate its superior stability. Furthermore, we demonstrate that the proposed technique generates faithful explanations using far fewer samples than LIME.
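The role of the per-feature length-scales can be illustrated with a small sketch of a squared-exponential kernel with automatic relevance determination. The length-scale values below are hypothetical rather than fitted, and normalizing the reciprocals so they sum to one is an assumption made for readability, not something the abstract states.

```python
import math


def ard_rbf_kernel(x, y, length_scales, variance=1.0):
    """Squared-exponential kernel with one length-scale per feature."""
    sq = sum(((a - b) / l) ** 2 for a, b, l in zip(x, y, length_scales))
    return variance * math.exp(-0.5 * sq)


def feature_relevance(length_scales):
    """Reciprocal length-scales as relevance scores.

    Assumption: scores are normalized to sum to 1 for easier comparison.
    """
    inv = [1.0 / l for l in length_scales]
    total = sum(inv)
    return [v / total for v in inv]


# Hypothetical length-scales, as if obtained from fitting a GP locally.
length_scales = [0.5, 2.0, 10.0]
relevance = feature_relevance(length_scales)

# A unit change in a short-length-scale feature reduces the covariance far
# more than the same change in a long-length-scale feature.
origin = [0.0, 0.0, 0.0]
k_feature0 = ard_rbf_kernel(origin, [1.0, 0.0, 0.0], length_scales)
k_feature2 = ard_rbf_kernel(origin, [0.0, 0.0, 1.0], length_scales)
```

A short length-scale means the modelled function varies quickly along that feature, so its reciprocal is large and the feature is ranked as more relevant to the local prediction.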
