Ramyad Hadidi

Mustafar: Promoting Unstructured Sparsity for KV Cache Pruning in LLM Inference

May 28, 2025

Abstract: We demonstrate that unstructured sparsity significantly improves KV cache compression for LLMs, enabling sparsity levels up to 70% without compromising accuracy or requiring fine-tuning. We conduct a systematic exploration of pruning strategies and find per-token magnitude-based pruning to be highly effective for both Key and Value caches under unstructured sparsity, surpassing prior structured pruning schemes. The Key cache benefits from prominent outlier elements, while the Value cache surprisingly benefits from simple magnitude-based pruning despite its uniform distribution. KV cache size is the major bottleneck in decode performance due to high memory overhead for large context lengths. To address this, we use a bitmap-based sparse format and a custom attention kernel capable of compressing and directly computing over compressed caches pruned to arbitrary sparsity patterns, significantly accelerating memory-bound operations in decode computations and thereby compensating for the overhead of runtime pruning and compression. Our custom attention kernel coupled with the bitmap-based format delivers substantial compression of the KV cache, up to 45% of dense inference, and thereby enables longer context lengths and increased tokens/sec throughput of up to 2.23x compared to dense inference. Our pruning mechanism and sparse attention kernel are available at https://github.com/dhjoo98/mustafar.
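As an illustration of what per-token magnitude-based pruning means, here is a minimal sketch in plain Python. It is not the authors' implementation (their GPU kernels are in the linked repository); the function name `prune_per_token`, the list-of-lists cache layout, and the rounding of the drop count are assumptions made for this example.

```python
def prune_per_token(cache, sparsity):
    """Zero the smallest-magnitude entries of each token vector independently.

    cache    -- list of token vectors (each a list of floats); hypothetical layout
    sparsity -- fraction of entries to zero per token, e.g. 0.7 for 70%
    """
    pruned = []
    for token in cache:
        n_drop = int(len(token) * sparsity)  # entries to zero in this token
        # Indices ordered by ascending magnitude; the first n_drop are pruned.
        order = sorted(range(len(token)), key=lambda i: abs(token[i]))
        drop = set(order[:n_drop])
        pruned.append([0.0 if i in drop else v for i, v in enumerate(token)])
    return pruned


# Two toy "tokens" with four channels each, pruned to 50% sparsity.
cache = [
    [0.1, -2.0, 0.3, 4.0],
    [-0.5, 0.2, 3.0, -0.1],
]
pruned = prune_per_token(cache, 0.5)
print(pruned)
```

Because each token is pruned on its own, the surviving positions differ from token to token, which is what makes the resulting pattern unstructured.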

Endor: Hardware-Friendly Sparse Format for Offloaded LLM Inference

Jun 17, 2024

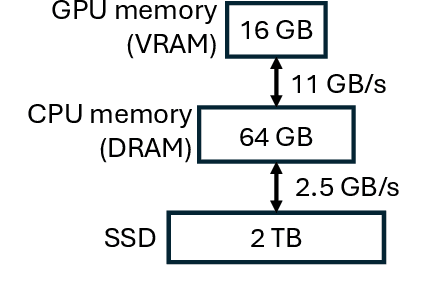

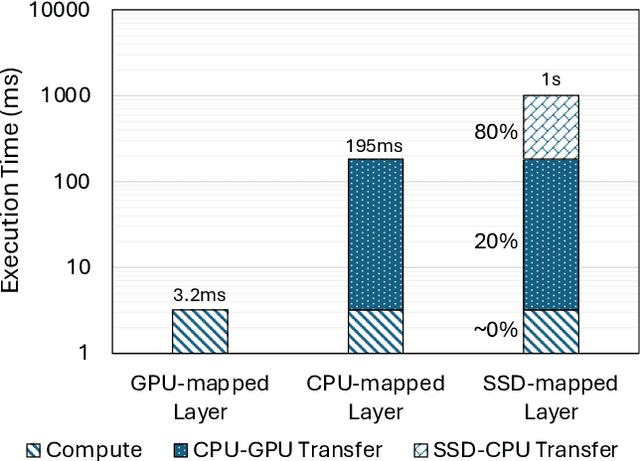

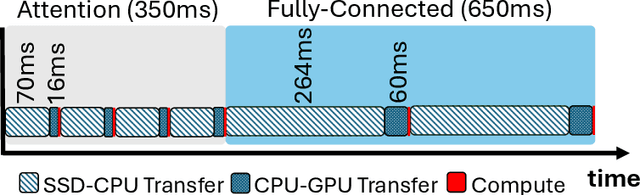

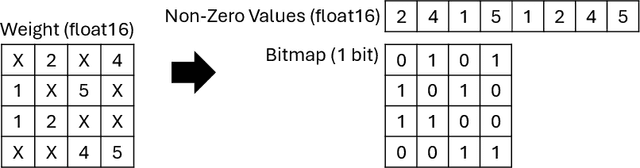

Abstract: The increasing size of large language models (LLMs) challenges their usage on resource-constrained platforms. For example, memory on modern GPUs is insufficient to hold LLMs that are hundreds of gigabytes in size. Offloading is a popular method to escape this constraint by storing the weights of an LLM in host CPU memory and SSD, then loading each weight to the GPU before every use. In our case study of offloaded inference, we found that due to the low bandwidth between storage devices and the GPU, the latency of transferring large model weights from their offloaded location to GPU memory becomes the critical bottleneck, with actual compute taking nearly 0% of runtime. To effectively reduce the weight transfer latency, we propose a novel sparse format that compresses the unstructured sparse pattern of pruned LLM weights to non-zero values with a high compression ratio and low decompression overhead. Endor achieves this by expressing the positions of non-zero elements with a bitmap. Compared to offloaded inference using the popular Hugging Face Accelerate, applying Endor accelerates OPT-66B by 1.70x and Llama2-70B by 1.78x. When direct weight transfer from SSD to GPU is leveraged, Endor achieves 2.25x speedup on OPT-66B and 2.37x speedup on Llama2-70B.
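The idea of expressing non-zero positions with a bitmap can be sketched as follows. This is a generic illustration in plain Python, not Endor's actual on-disk layout or decompression kernel; the function names, the use of a Python integer as the bitmap, and the 16-bit value width in the size estimate are all assumptions.

```python
def bitmap_encode(values):
    """Return (bitmap, nonzeros); bit i of bitmap is set when values[i] != 0."""
    bitmap = 0
    nonzeros = []
    for i, v in enumerate(values):
        if v != 0:
            bitmap |= 1 << i
            nonzeros.append(v)
    return bitmap, nonzeros


def bitmap_decode(bitmap, nonzeros, length):
    """Rebuild the dense row by walking the bitmap and consuming non-zeros in order."""
    it = iter(nonzeros)
    return [next(it) if (bitmap >> i) & 1 else 0.0 for i in range(length)]


def compressed_fraction(sparsity, bits_per_value=16):
    """Compressed size relative to dense: 1 bitmap bit per element plus the
    stored non-zeros. Ignores padding and alignment (an idealized estimate)."""
    return (1 + (1 - sparsity) * bits_per_value) / bits_per_value


row = [0.0, 1.5, 0.0, 0.0, -2.0, 0.0, 0.0, 3.0]
bitmap, nonzeros = bitmap_encode(row)
restored = bitmap_decode(bitmap, nonzeros, len(row))
print(bin(bitmap), nonzeros, restored == row)
```

Under these assumptions the position metadata costs one bit per element regardless of sparsity, so the format stays compact even when the non-zero pattern is irregular.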

Network architecture search of X-ray based scientific applications

Apr 16, 2024

Abstract: X-ray and electron diffraction-based microscopy use Bragg peak detection and ptychography to perform 3-D imaging at atomic resolution. Typically, these techniques are implemented using computationally complex tasks such as fitting a Pseudo-Voigt function or solving a complex inverse problem. Recently, the use of deep neural networks has improved on the existing state-of-the-art approaches. However, the design and development of the neural network models depend on time- and labor-intensive tuning of the model by application experts. To that end, we propose a hyperparameter search (HPS) and neural architecture search (NAS) approach to automate the design and optimization of the neural network models for model size, energy consumption, and throughput. We demonstrate the improved performance of the auto-tuned models when compared to the manually tuned BraggNN and PtychoNN benchmarks. We study and demonstrate the importance of exploring the search space of tunable hyperparameters in enhancing the performance of Bragg peak detection and ptychographic reconstruction. Our NAS and HPS of (1) BraggNN achieves a 31.03% improvement in Bragg peak detection accuracy with an 87.57% reduction in model size, and (2) PtychoNN achieves a 16.77% improvement in model accuracy and a 12.82% reduction in model size when compared to the baseline PtychoNN model. When run for inference on the Orin-AGX edge platform, the optimized BraggNN and PtychoNN models demonstrate a 10.51% and 9.47% reduction in inference latency and a 44.18% and 15.34% reduction in energy consumption, respectively, when compared to their baselines.

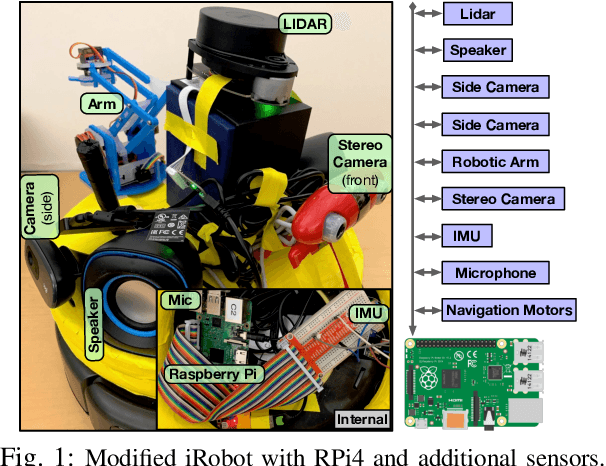

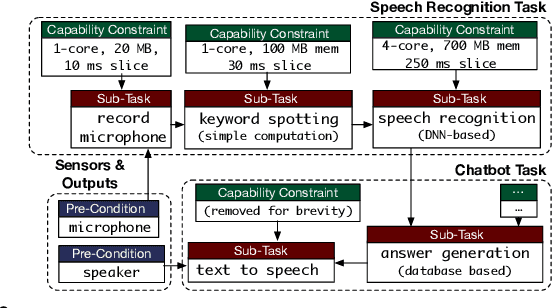

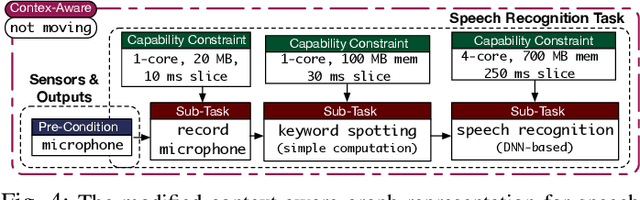

Context-Aware Task Handling in Resource-Constrained Robots with Virtualization

Apr 09, 2021

Abstract: Intelligent mobile robots are critical in several scenarios. However, as their computational resources are limited, mobile robots struggle to handle several tasks concurrently while still guaranteeing real-timeliness. To address this challenge and improve the real-timeliness of critical tasks under resource constraints, we propose a fast context-aware task handling technique. To handle tasks effectively in real time, our proposed context-aware technique comprises three main ingredients: (i) a dynamic time-sharing mechanism, coupled with (ii) event-driven task scheduling using the reactive programming paradigm to mindfully use the limited resources; and (iii) lightweight virtualized execution to easily integrate functionalities and their dependencies. We showcase our technique on a Raspberry-Pi-based robot with a variety of tasks such as simultaneous localization and mapping (SLAM), sign detection, and speech recognition, with a 42% speedup in total execution time compared to the common Linux scheduler.

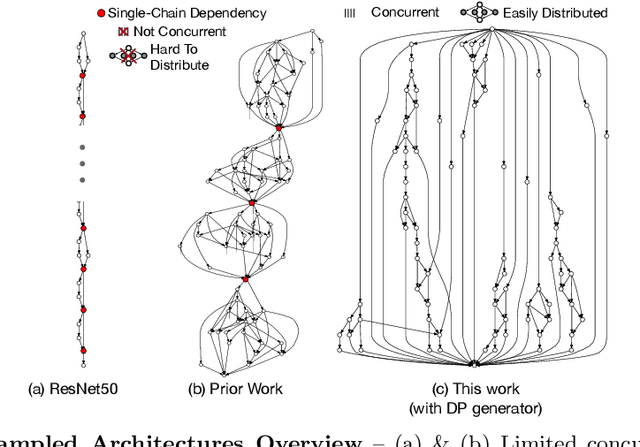

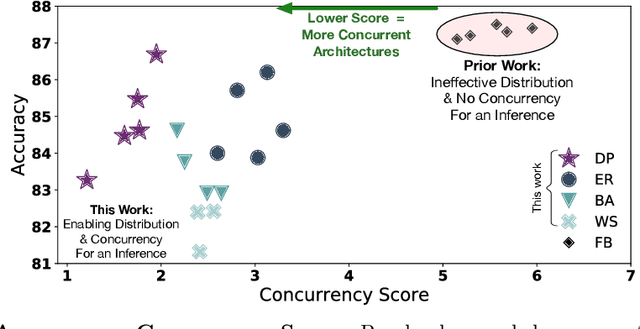

Reducing Inference Latency with Concurrent Architectures for Image Recognition

Nov 13, 2020

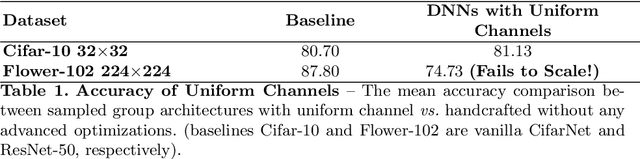

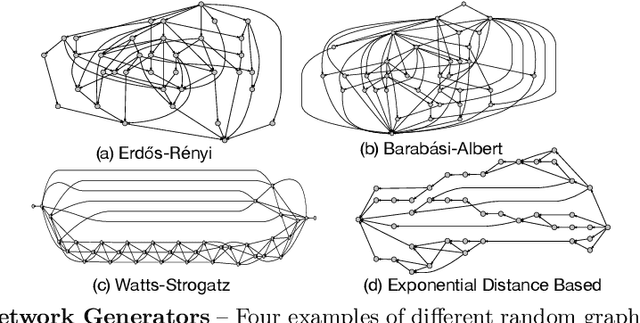

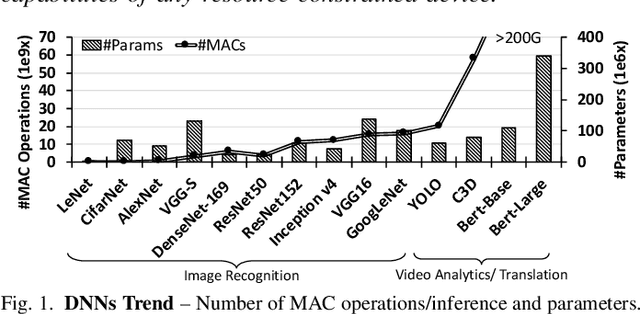

Abstract: Satisfying the high computation demand of modern deep learning architectures is challenging for achieving low inference latency. Current approaches to decreasing latency only increase parallelism within a layer. This is because architectures typically capture a single-chain dependency pattern that prevents efficient distribution with higher concurrency (i.e., simultaneous execution of one inference among devices). Such single-chain dependencies are so widespread that they even implicitly bias recent neural architecture search (NAS) studies. In this visionary paper, we draw attention to an entirely new space of NAS that relaxes the single-chain dependency to provide higher concurrency and distribution opportunities. To quantitatively compare these architectures, we propose a score that encapsulates crucial metrics such as communication, concurrency, and load balancing. Additionally, we propose a new generator and transformation block that consistently deliver superior architectures compared to current state-of-the-art methods. Finally, our preliminary results show that these new architectures reduce the inference latency and deserve more attention.

Edge-Tailored Perception: Fast Inferencing in-the-Edge with Efficient Model Distribution

Mar 13, 2020

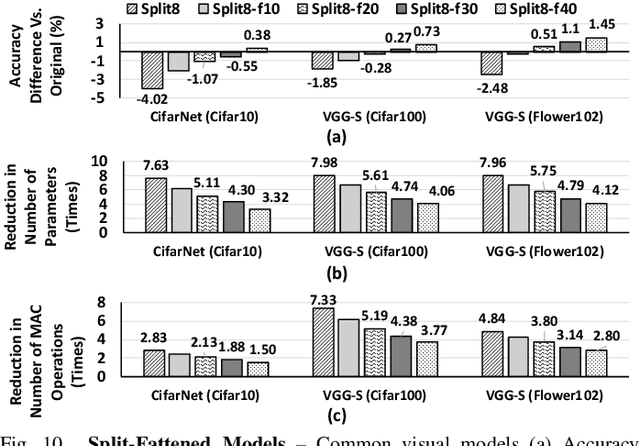

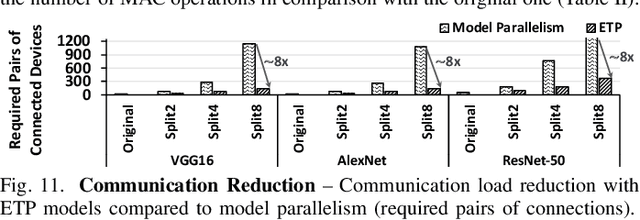

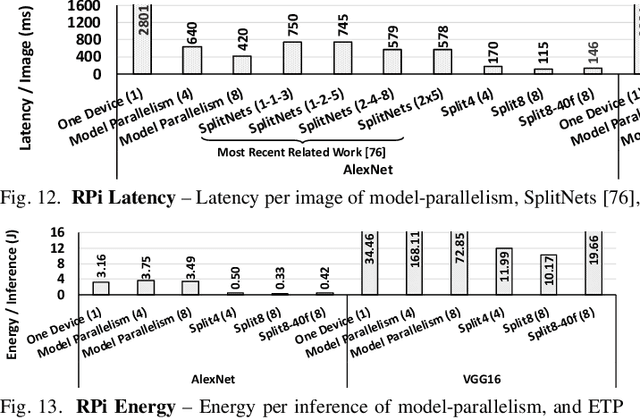

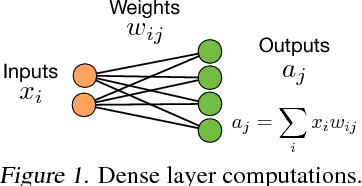

Abstract: The rise of deep neural networks (DNNs) is inspiring new studies in a myriad of edge use cases with robots, autonomous agents, and Internet-of-Things (IoT) devices. However, in-the-edge inferencing of DNNs is still a severe challenge, mainly because of the contradiction between the inherently intensive resource requirements and the tight resource availability in several edge domains. Further, as communication is costly, taking advantage of other available edge devices is not an effective solution in edge domains. Therefore, to benefit from available compute resources with low communication overhead, we propose new edge-tailored perception (ETP) models that consist of several almost-independent and narrow branches. ETP models offer close-to-minimum communication overheads with better distribution opportunities while significantly reducing memory and computation footprints, all with a trivial accuracy loss for tasks that are not accuracy-critical. To show the benefits, we deploy ETP models on two real systems, Raspberry Pis and edge-level PYNQ FPGAs. Additionally, we share our insights about tailoring a systolic-based architecture for edge computing with FPGA implementations. ETP models created based on LeNet, CifarNet, VGG-S/16, AlexNet, and ResNets and trained on MNIST, CIFAR10/100, Flower102, and ImageNet achieve maximum and average speedups of 56x and 7x, respectively, compared to the originals. ETP is an addition to existing single-device optimizations for embedded devices, enabling the exploitation of multiple devices. As an example, we show that applying pruning and quantization to ETP models improves the average speedup to 33x.

Collaborative Execution of Deep Neural Networks on Internet of Things Devices

Jan 08, 2019

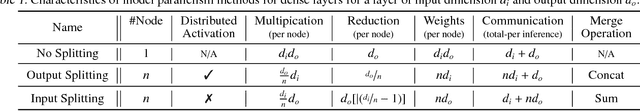

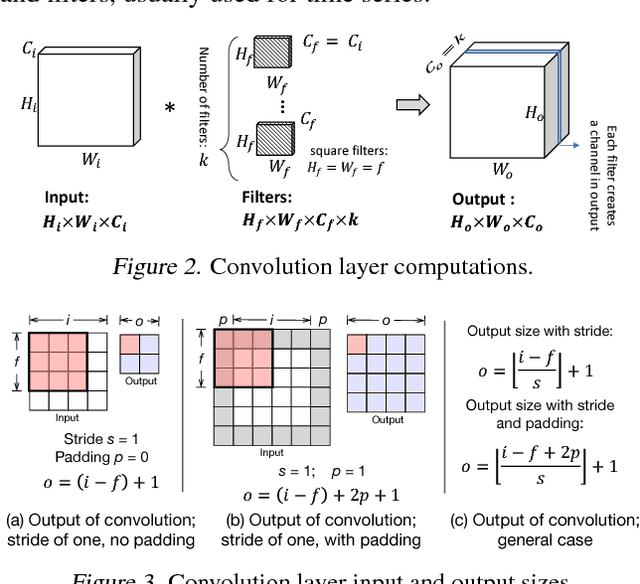

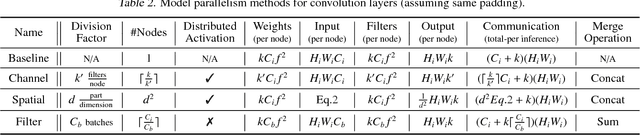

Abstract: With recent advancements in deep neural networks (DNNs), we are able to solve traditionally challenging problems. Since DNNs are compute intensive, consumers who want to deploy a service need to rely on expensive and scarce compute resources in the cloud. This approach, in addition to its dependence on high-quality network infrastructure and data centers, raises new privacy concerns. These challenges may limit DNN-based applications, so many researchers have tried to optimize DNNs for local and in-edge execution. However, the inadequate power and computing resources of edge devices, along with a small number of requests, limit the applicability of current optimizations such as batch processing. In this paper, we propose an approach that utilizes the aggregated existing computing power of Internet of Things (IoT) devices surrounding an environment by creating a collaborative network. In this approach, IoT devices cooperate to conduct single-batch inferencing in real time. While exploiting several new model-parallelism methods and their distribution characteristics, our approach enhances the collaborative network by creating a balanced and distributed processing pipeline. We illustrate our work on many Raspberry Pis, studying DNN models such as AlexNet, VGG16, Xception, and C3D.

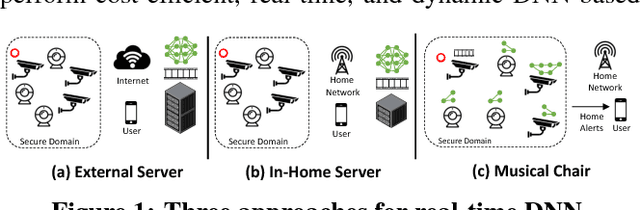

Musical Chair: Efficient Real-Time Recognition Using Collaborative IoT Devices

Mar 21, 2018

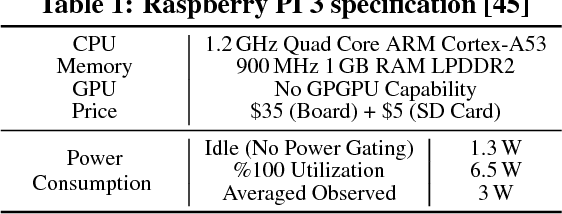

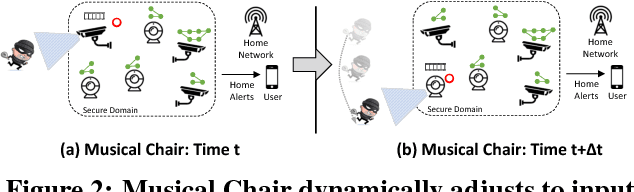

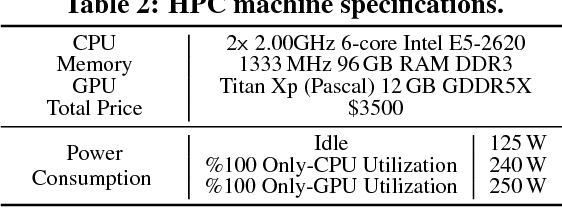

Abstract: The prevalence of Internet of Things (IoT) devices and the abundance of sensor data have created an increase in real-time data processing such as recognition of speech, image, and video. While such processes are currently offloaded to computationally powerful cloud systems, a localized and distributed approach is desirable because (i) it preserves the privacy of users and (ii) it removes the dependency on cloud services. However, IoT networks are usually composed of resource-constrained devices, and a single device is not powerful enough to process real-time data. To overcome this challenge, we examine data and model parallelism for such devices in the context of deep neural networks. We propose Musical Chair to enable efficient, localized, and dynamic real-time recognition by harvesting the aggregated computational power of the resource-constrained devices in the same IoT network as the input sensors. Musical Chair adapts to the availability of computing devices at runtime and adjusts to the inherent dynamics of IoT networks. To demonstrate Musical Chair, on a network of Raspberry Pis (up to 12), each connected to a camera, we implement a state-of-the-art action recognition model for videos and two recognition models for images. Compared to the Tegra TX2, an embedded low-power platform with a six-core CPU and a GPU, our distributed action recognition system achieves not only similar energy consumption but also twice the performance of the TX2. Furthermore, in image recognition, Musical Chair achieves similar performance and saves dynamic energy.
