Jiashen Cao

Hydro: Adaptive Query Processing of ML Queries

Mar 22, 2024

Abstract: Query optimization in relational database management systems (DBMSs) is critical for fast query processing. The query optimizer relies on precise selectivity and cost estimates to effectively optimize queries prior to execution. While this strategy is effective for relational DBMSs, it is not sufficient for DBMSs tailored for processing machine learning (ML) queries. In ML-centric DBMSs, query optimization is challenging for two reasons. First, the performance bottleneck of the queries shifts to user-defined functions (UDFs) that often wrap around deep learning models, making it difficult to accurately estimate UDF statistics without profiling the query. This leads to inaccurate statistics and sub-optimal query plans. Second, the optimal query plan for ML queries is data-dependent, necessitating DBMSs to adapt the query plan on the fly during execution. So, a static query plan is not sufficient for such queries. In this paper, we present Hydro, an ML-centric DBMS that utilizes adaptive query processing (AQP) for efficiently processing ML queries. Hydro is designed to quickly evaluate UDF-based query predicates by ensuring optimal predicate evaluation order and improving the scalability of UDF execution. By integrating AQP, Hydro continuously monitors UDF statistics, routes data to predicates in an optimal order, and dynamically allocates resources for evaluating predicates. We demonstrate Hydro's efficacy through four illustrative use cases, delivering up to 11.52x speedup over a baseline system.
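
The idea of monitoring predicate statistics at run time and re-ordering predicates accordingly can be illustrated with a small sketch. This is not Hydro's implementation: the names `PredicateStats` and `AdaptiveRouter` are hypothetical, and the ordering rule (ascending observed cost divided by observed drop rate) is the classic rank heuristic for independent predicates, used here as an assumption. The statistics are gathered only on rows that reach each predicate, so they are conditional estimates.

```python
import time
from dataclasses import dataclass
from typing import Any, Callable, Iterable, Iterator, List, Tuple


@dataclass
class PredicateStats:
    """Running statistics for one (possibly UDF-backed) predicate."""
    name: str
    fn: Callable[[Any], bool]
    seen: int = 0
    passed: int = 0
    total_cost: float = 0.0

    def rank(self) -> float:
        """Lower rank means 'evaluate earlier': average cost per fraction of rows dropped."""
        if self.seen == 0:
            return 0.0  # no observations yet, so try this predicate first
        drop_rate = 1.0 - self.passed / self.seen
        if drop_rate == 0.0:
            return float("inf")  # has not filtered out any row so far
        return (self.total_cost / self.seen) / drop_rate


class AdaptiveRouter:
    """Hypothetical router: re-sorts predicates by observed rank before every row."""

    def __init__(self,
                 predicates: List[Tuple[str, Callable[[Any], bool]]],
                 clock: Callable[[], float] = time.perf_counter) -> None:
        self.stats = [PredicateStats(name, fn) for name, fn in predicates]
        self.clock = clock  # injectable so that costs can be simulated

    def order(self) -> List[str]:
        """Current evaluation order, cheapest-per-dropped-row first."""
        return [s.name for s in sorted(self.stats, key=PredicateStats.rank)]

    def filter(self, rows: Iterable[Any]) -> Iterator[Any]:
        """Yield rows that satisfy every predicate, updating statistics as it goes."""
        for row in rows:
            keep = True
            for s in sorted(self.stats, key=PredicateStats.rank):
                start = self.clock()
                ok = bool(s.fn(row))
                s.total_cost += self.clock() - start
                s.seen += 1
                s.passed += int(ok)
                if not ok:
                    keep = False  # short-circuit: later predicates are skipped
                    break
            if keep:
                yield row
```

A caller would construct `AdaptiveRouter([("name", predicate_fn), ...])` and iterate over `router.filter(rows)`; the set of rows returned does not depend on the evaluation order, only the amount of work does.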

Reducing Inference Latency with Concurrent Architectures for Image Recognition

Nov 13, 2020

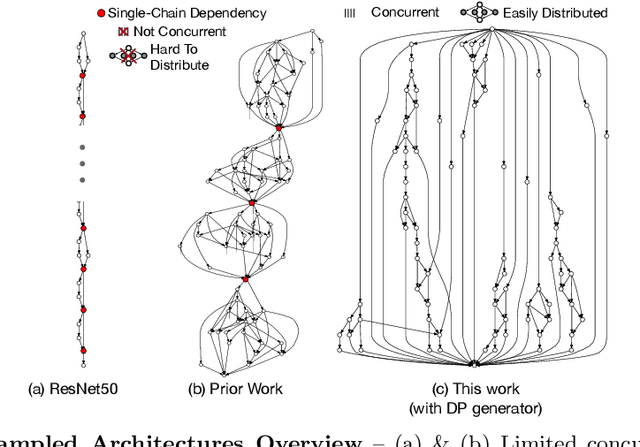

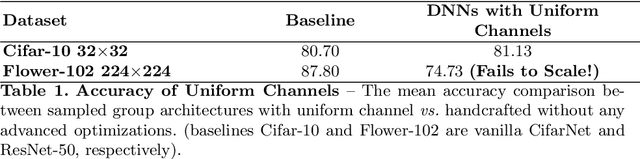

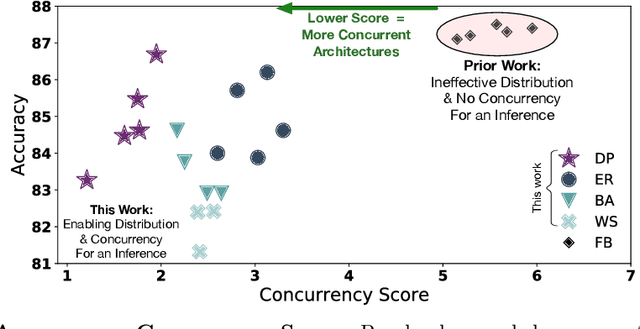

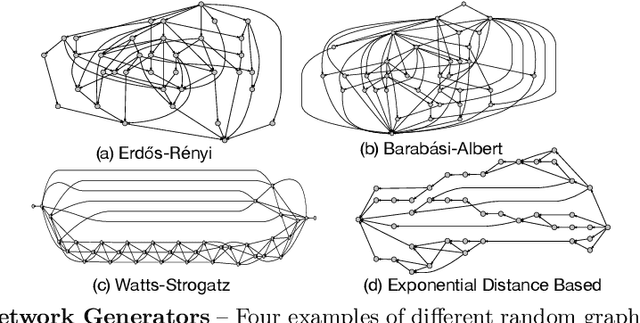

Abstract: Satisfying the high computation demand of modern deep learning architectures while achieving low inference latency is challenging. Current approaches to decreasing latency only increase parallelism within a layer. This is because architectures typically capture a single-chain dependency pattern that prevents efficient distribution with higher concurrency (i.e., simultaneous execution of one inference among devices). Such single-chain dependencies are so widespread that they even implicitly bias recent neural architecture search (NAS) studies. In this visionary paper, we draw attention to an entirely new space of NAS that relaxes the single-chain dependency to provide higher concurrency and distribution opportunities. To quantitatively compare these architectures, we propose a score that encapsulates crucial metrics such as communication, concurrency, and load balancing. Additionally, we propose a new generator and transformation block that consistently deliver superior architectures compared to current state-of-the-art methods. Finally, our preliminary results show that these new architectures reduce the inference latency and deserve more attention.

Edge-Tailored Perception: Fast Inferencing in-the-Edge with Efficient Model Distribution

Mar 13, 2020

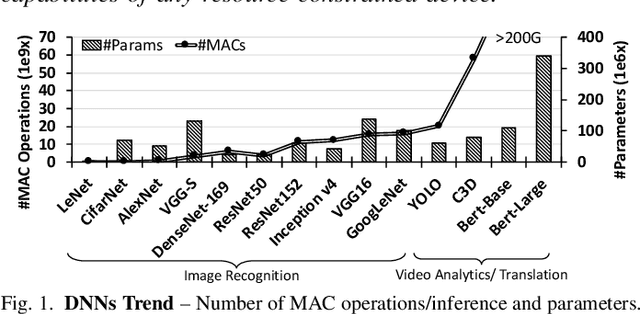

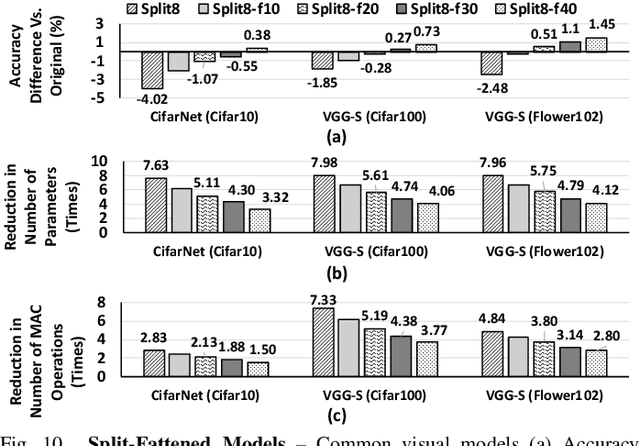

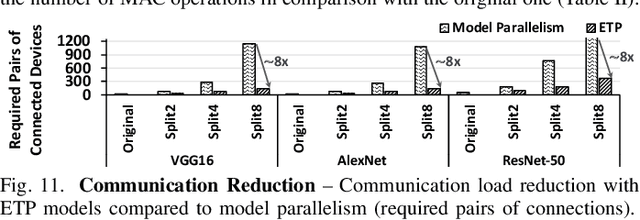

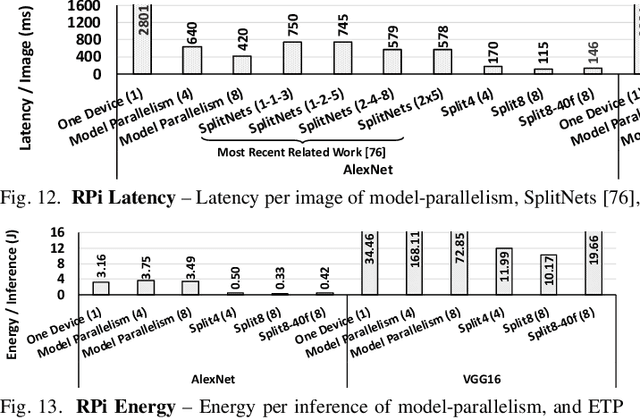

Abstract: The rise of deep neural networks (DNNs) is inspiring new studies in a myriad of edge use cases with robots, autonomous agents, and Internet-of-things (IoT) devices. However, in-the-edge inferencing of DNNs is still a severe challenge, mainly because of the contradiction between the inherently intensive resource requirements and the tight resource availability in several edge domains. Further, as communication is costly, taking advantage of other available edge devices is not an effective solution in edge domains. Therefore, to benefit from available compute resources with low communication overhead, we propose new edge-tailored perception (ETP) models that consist of several almost-independent and narrow branches. ETP models offer close-to-minimum communication overheads with better distribution opportunities while significantly reducing memory and computation footprints, all with a trivial accuracy loss for tasks that are not accuracy-critical. To show the benefits, we deploy ETP models on two real systems, Raspberry Pis and edge-level PYNQ FPGAs. Additionally, we share our insights about tailoring a systolic-based architecture for edge computing with FPGA implementations. ETP models created based on LeNet, CifarNet, VGG-S/16, AlexNet, and ResNets and trained on MNIST, CIFAR10/100, Flower102, and ImageNet achieve maximum and average speedups of 56x and 7x, respectively, compared to the originals. ETP is an addition to existing single-device optimizations for embedded devices, enabling the exploitation of multiple devices. As an example, we show that applying pruning and quantization on ETP models improves the average speedup to 33x.

Collaborative Execution of Deep Neural Networks on Internet of Things Devices

Jan 08, 2019

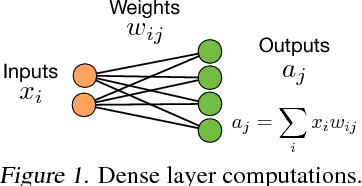

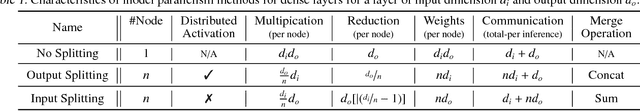

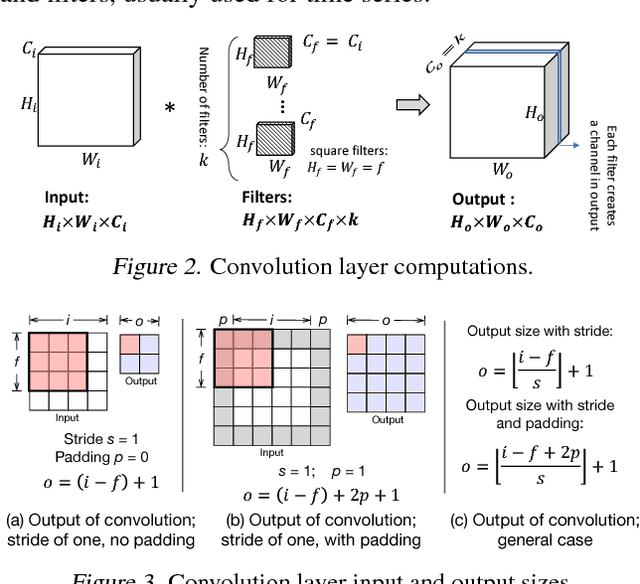

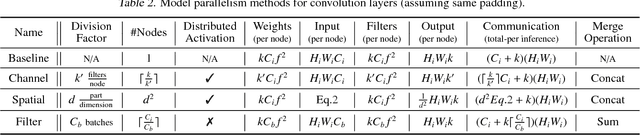

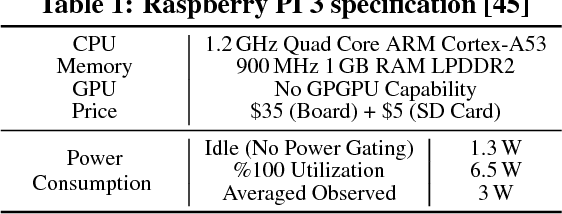

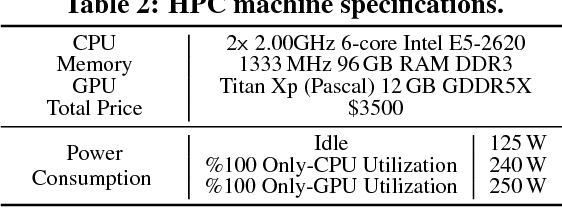

Abstract: With recent advancements in deep neural networks (DNNs), we are able to solve traditionally challenging problems. Since DNNs are compute intensive, consumers who want to deploy a service need to rely on expensive and scarce compute resources in the cloud. This approach, in addition to its dependence on high-quality network infrastructure and data centers, raises new privacy concerns. These challenges may limit DNN-based applications, so many researchers have tried to optimize DNNs for local and in-edge execution. However, the inadequate power and computing resources of edge devices, along with the small number of requests, limit the applicability of current optimizations such as batch processing. In this paper, we propose an approach that utilizes the aggregated existing computing power of Internet of Things (IoT) devices surrounding an environment by creating a collaborative network. In this approach, IoT devices cooperate to conduct single-batch inferencing in real time. While exploiting several new model-parallelism methods and their distribution characteristics, our approach enhances the collaborative network by creating a balanced and distributed processing pipeline. We illustrate our work using multiple Raspberry Pis and by studying DNN models such as AlexNet, VGG16, Xception, and C3D.
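
What a "balanced" processing pipeline means can be made concrete with a small sketch. The following is not the paper's method; it is one generic way to split a chain of layers into contiguous stages, one per device, so that the slowest stage is as fast as possible. The function name `balance_pipeline` and the per-layer cost list are assumptions for illustration, and communication cost between devices is ignored.

```python
from typing import List, Sequence


def balance_pipeline(layer_costs: Sequence[float], num_devices: int) -> List[List[int]]:
    """Split layers into contiguous stages, minimizing the cost of the slowest stage.

    Returns one list of layer indices per stage. At most `num_devices` stages
    are used, and never more stages than there are layers.
    """
    n = len(layer_costs)
    if n == 0 or num_devices <= 0:
        raise ValueError("need at least one layer and one device")
    k = min(num_devices, n)

    # prefix[i] is the total cost of layers 0 .. i-1
    prefix = [0.0]
    for cost in layer_costs:
        prefix.append(prefix[-1] + cost)

    inf = float("inf")
    # best[j][i]: smallest achievable bottleneck for the first i layers in j stages
    best = [[inf] * (n + 1) for _ in range(k + 1)]
    # cut[j][i]: index where the last of the j stages starts in that solution
    cut = [[0] * (n + 1) for _ in range(k + 1)]
    best[0][0] = 0.0

    for j in range(1, k + 1):
        for i in range(j, n + 1):
            for s in range(j - 1, i):
                candidate = max(best[j - 1][s], prefix[i] - prefix[s])
                if candidate < best[j][i]:
                    best[j][i] = candidate
                    cut[j][i] = s

    # Walk the cut table backwards to recover the stages.
    stages: List[List[int]] = []
    i = n
    for j in range(k, 0, -1):
        s = cut[j][i]
        stages.append(list(range(s, i)))
        i = s
    stages.reverse()
    return stages
```

In a pipeline, throughput is limited by the slowest stage, which is why the sketch minimizes the maximum stage cost rather than the total.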

Musical Chair: Efficient Real-Time Recognition Using Collaborative IoT Devices

Mar 21, 2018

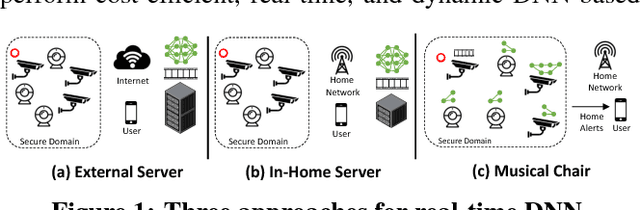

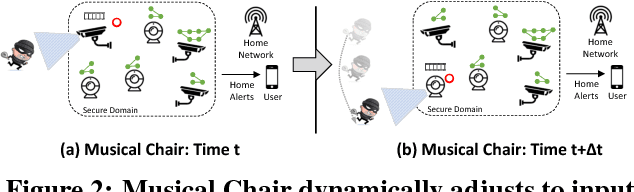

Abstract: The prevalence of Internet of things (IoT) devices and the abundance of sensor data have created an increase in real-time data processing, such as recognition of speech, image, and video. While such processes are currently offloaded to the computationally powerful cloud system, a localized and distributed approach is desirable because (i) it preserves the privacy of users and (ii) it removes the dependency on cloud services. However, IoT networks are usually composed of resource-constrained devices, and a single device is not powerful enough to process real-time data. To overcome this challenge, we examine data and model parallelism for such devices in the context of deep neural networks. We propose Musical Chair to enable efficient, localized, and dynamic real-time recognition by harvesting the aggregated computational power from the resource-constrained devices in the same IoT network as input sensors. Musical Chair adapts to the availability of computing devices at runtime and adjusts to the inherent dynamics of IoT networks. To demonstrate Musical Chair, on a network of Raspberry Pis (up to 12), each connected to a camera, we implement a state-of-the-art action recognition model for videos and two recognition models for images. Compared to the Tegra TX2, an embedded low-power platform with a six-core CPU and a GPU, our distributed action recognition system achieves not only similar energy consumption but also twice the performance of the TX2. Furthermore, in image recognition, Musical Chair achieves similar performance and saves dynamic energy.
