Rajmonda S. Caceres

Reducing the Sensitivity of Neural Physics Simulators to Mesh Topology via Pretraining

Jan 16, 2025

Abstract: Meshes are used to represent complex objects in high-fidelity physics simulators across a variety of domains, such as radar sensing and aerodynamics. There is growing interest in using neural networks to accelerate physics simulations, and also a growing body of work on applying neural networks directly to irregular mesh data. Since multiple mesh topologies can represent the same object, mesh augmentation is typically required to handle topological variation when training neural networks. Due to the sensitivity of physics simulators to small changes in mesh shape, it is challenging to use these augmentations when training neural network-based physics simulators. In this work, we show that variations in mesh topology can significantly reduce the performance of neural network simulators. We evaluate whether pretraining can be used to address this issue, and find that employing an established autoencoder pretraining technique with graph embedding models reduces the sensitivity of neural network simulators to variations in mesh topology. Finally, we highlight future research directions that may further reduce neural simulator sensitivity to mesh topology.

GRASP: Accelerating Shortest Path Attacks via Graph Attention

Oct 23, 2023

Abstract: Recent advances in machine learning (ML) have shown promise in aiding and accelerating classical combinatorial optimization algorithms. ML-based speedups that aim to learn in an end-to-end manner (i.e., directly output the solution) tend to trade off run time against solution quality. Therefore, solutions that accelerate existing solvers while maintaining their performance guarantees are of great interest. We consider an APX-hard problem, where an adversary aims to attack shortest paths in a graph by removing the minimum number of edges. We propose the GRASP algorithm: Graph Attention Accelerated Shortest Path Attack, an ML-aided optimization algorithm that achieves run times up to 10x faster while maintaining the quality of the solution generated. GRASP uses a graph attention network to identify a smaller subgraph containing the combinatorial solution, thus effectively reducing the input problem size. Additionally, we demonstrate how careful representation of the input graph, including node features that correlate well with the optimization task, can highlight important structure in the optimization solution.

Graph-SCP: Accelerating Set Cover Problems with Graph Neural Networks

Oct 12, 2023

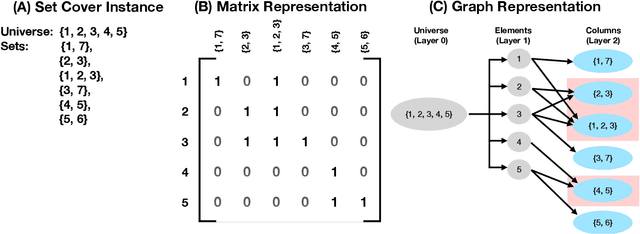

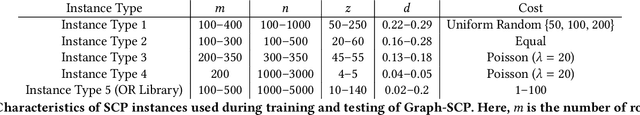

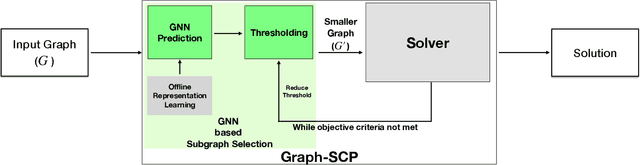

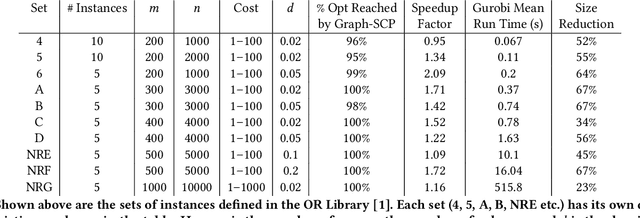

Abstract: Machine learning (ML) approaches are increasingly being used to accelerate combinatorial optimization (CO) problems. We look specifically at the Set Cover Problem (SCP) and propose Graph-SCP, a graph neural network method that can augment existing optimization solvers by learning to identify a much smaller sub-problem that contains the solution space. We evaluate the performance of Graph-SCP on synthetic weighted and unweighted SCP instances with diverse problem characteristics and complexities, and on instances from the OR Library, a canonical benchmark for SCP. We show that Graph-SCP reduces the problem size by 30-70% and achieves run time speedups up to 25x when compared to commercial solvers (Gurobi). Given a desired optimality threshold, Graph-SCP will improve upon it or even achieve 100% optimality. This is in contrast to fast greedy solutions that significantly compromise solution quality to achieve guaranteed polynomial run time. Graph-SCP can generalize to larger problem sizes and can be used with other conventional or ML-augmented CO solvers to lead to potential additional run time improvement.
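To make the contrast with "fast greedy solutions" concrete, here is a minimal sketch of the textbook greedy heuristic for unweighted set cover. It is not Graph-SCP and is not taken from the paper; the function name `greedy_set_cover` and the toy instance are assumptions chosen for illustration. On this instance the heuristic picks three subsets although two suffice.

```python
def greedy_set_cover(universe, subsets):
    """Textbook greedy heuristic for unweighted set cover (illustrative only).

    Repeatedly picks the subset that covers the most still-uncovered
    elements. Returns the indices of the chosen subsets.
    """
    uncovered = set(universe)
    chosen = []
    while uncovered:
        # Index of the subset with the largest overlap with what is left.
        best = max(range(len(subsets)), key=lambda i: len(subsets[i] & uncovered))
        if not subsets[best] & uncovered:
            raise ValueError("universe cannot be covered by the given subsets")
        chosen.append(best)
        uncovered -= subsets[best]
    return chosen


# Hypothetical toy instance: subsets 1 and 2 alone cover the universe,
# but the greedy rule starts with the largest subset (index 0).
universe = {1, 2, 3, 4, 5, 6}
subsets = [{1, 2, 3, 4}, {1, 2, 5}, {3, 4, 6}]
cover = greedy_set_cover(universe, subsets)
print(cover)
```

The heuristic runs in polynomial time, which is the guarantee the abstract refers to, at the cost of returning a cover that may be larger than the optimum.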

System Analysis for Responsible Design of Modern AI/ML Systems

Apr 19, 2022

Abstract: The irresponsible use of ML algorithms in practical settings has received a lot of deserved attention in recent years. We posit that the traditional system analysis perspective is needed when designing and implementing ML algorithms and systems. Such a perspective can provide a formal way of evaluating and enabling responsible ML practices. In this paper, we review components of the System Analysis methodology and highlight how they connect and enable responsible practices of ML design.

A supervised approach to time scale detection in dynamic networks

Feb 24, 2017

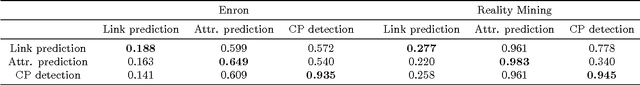

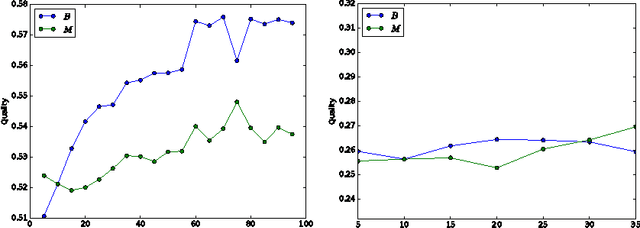

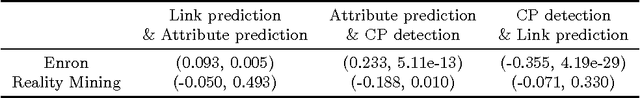

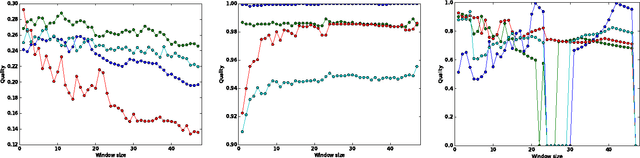

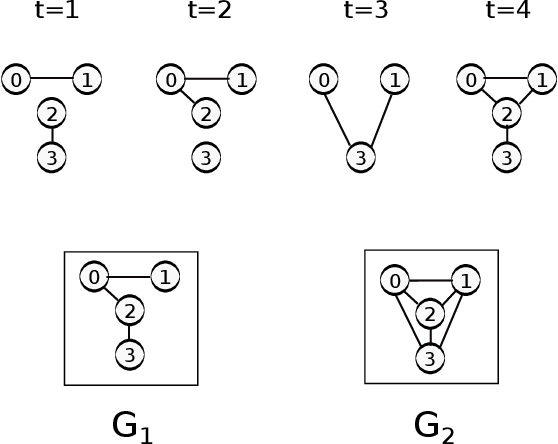

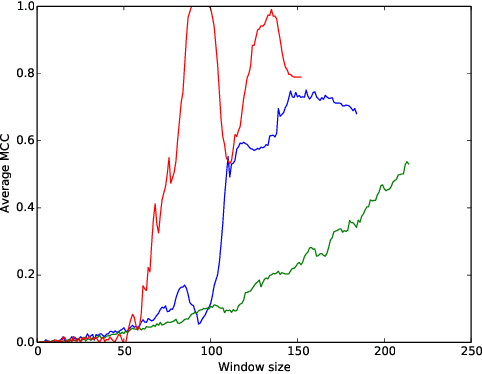

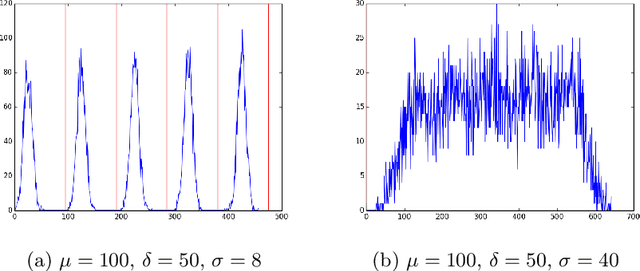

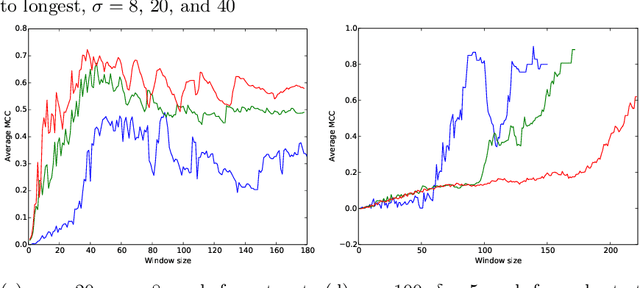

Abstract: For any stream of time-stamped edges that form a dynamic network, an important choice is the aggregation granularity that an analyst uses to bin the data. Picking such a windowing of the data is often done by hand, or left up to the technology that is collecting the data. However, the choice can make a big difference in the properties of the dynamic network. This is the time scale detection problem. In previous work, this problem is often solved with a heuristic as an unsupervised task. As an unsupervised problem, it is difficult to measure how well a given algorithm performs. In addition, we show that the quality of the windowing depends on which task an analyst wants to perform on the network after windowing. Therefore, the time scale detection problem should not be handled independently from the rest of the analysis of the network. We introduce a framework that tackles both of these issues: by measuring the performance of the time scale detection algorithm based on how well a given task is accomplished on the resulting network, we are for the first time able to directly compare different time scale detection algorithms to each other. Using this framework, we introduce time scale detection algorithms that take a supervised approach: they leverage ground truth on training data to find a good windowing of the test data. We compare the supervised approach to previous approaches and several baselines on real data.
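The effect of aggregation granularity can be illustrated with a small sketch that bins a stream of time-stamped edges into fixed-width windows. This shows only the windowing step, not the paper's detection framework; the function name `window_edges`, the `(u, v, t)` edge format, and the sample stream are assumptions made for illustration.

```python
from collections import defaultdict


def window_edges(edges, width):
    """Group (u, v, t) edges into snapshots of a fixed time width.

    Returns a dict mapping window index -> set of (u, v) edges whose
    timestamp falls in [index * width, (index + 1) * width).
    """
    if width <= 0:
        raise ValueError("width must be positive")
    snapshots = defaultdict(set)
    for u, v, t in edges:
        # Integer index of the window that contains timestamp t.
        snapshots[int(t // width)].add((u, v))
    return dict(snapshots)


# Hypothetical edge stream: the same data under two window widths.
stream = [("a", "b", 0), ("b", "c", 4), ("a", "c", 5), ("a", "b", 11)]
fine = window_edges(stream, 5)     # three sparse snapshots
coarse = window_edges(stream, 10)  # two snapshots, the first one denser
print(len(fine), len(coarse))
```

With a width of 5 the triangle a-b-c never appears within a single snapshot, while with a width of 10 it does, which is the kind of property change the abstract describes.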

Consistent Alignment of Word Embedding Models

Feb 24, 2017

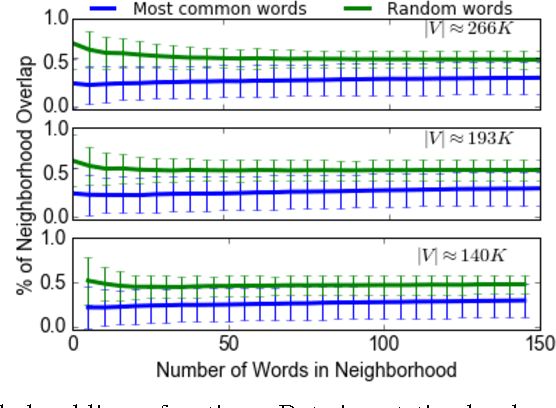

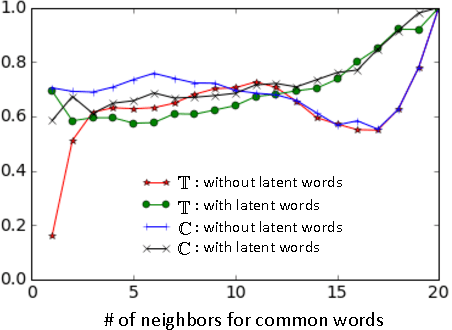

Abstract: Word embedding models offer continuous vector representations that can capture rich contextual semantics based on their word co-occurrence patterns. While these word vectors can provide very effective features used in many NLP tasks such as clustering similar words and inferring learning relationships, many challenges and open research questions remain. In this paper, we propose a solution that aligns variations of the same model (or different models) in a joint low-dimensional latent space leveraging carefully generated synthetic data points. This generative process is inspired by the observation that a variety of linguistic relationships is captured by simple linear operations in embedded space. We demonstrate that our approach can lead to substantial improvements in recovering embeddings of local neighborhoods.

Handling oversampling in dynamic networks using link prediction

Aug 11, 2015

Abstract: Oversampling is a common characteristic of data representing dynamic networks. It introduces noise into representations of dynamic networks, but there has been little work so far to compensate for it. Oversampling can affect the quality of many important algorithmic problems on dynamic networks, including link prediction. Link prediction seeks to predict edges that will be added to the network given previous snapshots. We show that not only does oversampling affect the quality of link prediction, but that we can use link prediction to recover from the effects of oversampling. We also introduce a novel generative model of noise in dynamic networks that represents oversampling. We demonstrate the results of our approach on both synthetic and real-world data.
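As a rough illustration of what a link predictor computes from a snapshot, the sketch below scores each non-adjacent node pair by its number of common neighbors, a standard baseline. It is not the method or the noise model from the paper; the function name `common_neighbor_scores` and the sample snapshot are assumptions made for illustration.

```python
from itertools import combinations


def common_neighbor_scores(edges):
    """Score non-adjacent node pairs by their number of shared neighbors.

    `edges` is an iterable of undirected (u, v) pairs from one snapshot.
    Returns a dict mapping (u, v) -> score for pairs with score > 0.
    """
    neighbors = {}
    for u, v in edges:
        neighbors.setdefault(u, set()).add(v)
        neighbors.setdefault(v, set()).add(u)

    scores = {}
    for u, v in combinations(sorted(neighbors), 2):
        if v in neighbors[u]:
            continue  # already an edge, nothing to predict
        shared = len(neighbors[u] & neighbors[v])
        if shared:
            scores[(u, v)] = shared
    return scores


# Hypothetical snapshot: a 4-cycle a-b-d-c-a with both diagonals missing.
snapshot = [("a", "b"), ("a", "c"), ("b", "d"), ("c", "d")]
scores = common_neighbor_scores(snapshot)
print(scores)
```

Higher-scoring pairs are the candidates a baseline of this kind would predict as future edges.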
