Rahul Ragesh

A Piece-wise Polynomial Filtering Approach for Graph Neural Networks

Dec 07, 2021

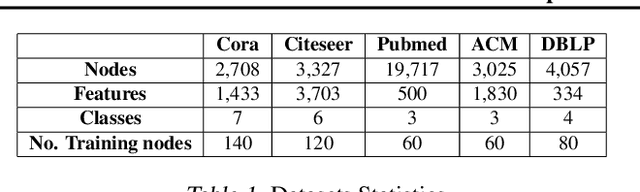

Abstract: Graph Neural Networks (GNNs) exploit signals from node features and the input graph topology to improve node classification task performance. However, these models tend to perform poorly on heterophilic graphs, where connected nodes have different labels. Recently proposed GNNs work across graphs having varying levels of homophily. Among these, models relying on polynomial graph filters have shown promise. We observe that solutions to these polynomial graph filter models are also solutions to an overdetermined system of equations. This suggests that in some instances, the model needs to learn a reasonably high-order polynomial. On investigation, we find the proposed models ineffective at learning such polynomials due to their designs. To mitigate this issue, we perform an eigendecomposition of the graph and propose to learn multiple adaptive polynomial filters acting on different subsets of the spectrum. We theoretically and empirically show that our proposed model learns a better filter, thereby improving classification accuracy. We study various aspects of our proposed model, including the dependency on the number of eigencomponents utilized, the latent polynomial filters learned, and the performance of the individual polynomials on the node classification task. We further show that our model is scalable by evaluating over large graphs. Our model achieves performance gains of up to 5% over the state-of-the-art models and outperforms existing polynomial filter-based approaches in general.
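For readers unfamiliar with the term, a polynomial graph filter applies a polynomial in a graph matrix to a node signal, y = sum_k c_k A^k x. The sketch below illustrates only this generic idea, not the piece-wise model proposed in the paper; the function names, the use of a raw adjacency matrix, and the fixed coefficients are assumptions made for illustration (in a GNN the coefficients would be learned).

```python
def matvec(A, x):
    """Multiply a dense matrix (list of rows) by a vector."""
    return [sum(a * v for a, v in zip(row, x)) for row in A]


def polynomial_filter(A, x, coeffs):
    """Return sum_k coeffs[k] * A^k x without forming any matrix power.

    A      : dense graph matrix as a list of rows (hypothetical input format)
    x      : node signal, one value per node
    coeffs : polynomial coefficients c_0, c_1, ..., c_K
    """
    out = [0.0] * len(x)
    power = list(x)  # holds A^k x, starting with k = 0
    for c in coeffs:
        out = [o + c * p for o, p in zip(out, power)]
        power = matvec(A, power)  # advance to A^(k+1) x
    return out


# Path graph on three nodes: 0 - 1 - 2.
A = [[0.0, 1.0, 0.0],
     [1.0, 0.0, 1.0],
     [0.0, 1.0, 0.0]]
x = [1.0, 0.0, 0.0]          # signal concentrated on node 0
y = polynomial_filter(A, x, [0.5, 0.25, 0.125])
print(y)
```

The order of the polynomial bounds how many hops the signal can travel, which is one way to see why some graphs may call for a reasonably high-order filter.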

Effective Eigendecomposition based Graph Adaptation for Heterophilic Networks

Jul 28, 2021

Abstract: Graph Neural Networks (GNNs) exhibit excellent performance when graphs have a strong homophily property, i.e., connected nodes have the same labels. However, they perform poorly on heterophilic graphs. Several approaches address the issue of heterophily by proposing models that adapt the graph by optimizing a task-specific loss function using labelled data. These adaptations are made either via attention or by attenuating or enhancing various low-frequency/high-frequency signals, as needed for the task at hand. More recent approaches adapt the eigenvalues of the graph. One important interpretation of this adaptation is that these models select/weigh the eigenvectors of the graph. Based on this interpretation, we present an eigendecomposition-based approach and propose EigenNetwork models that improve the performance of GNNs on heterophilic graphs. Performance improvement is achieved by learning flexible graph adaptation functions that modulate the eigenvalues of the graph. Regularization of these functions via parameter sharing helps to improve the performance even more. Our approach achieves up to 11% improvement in performance over the state-of-the-art methods on heterophilic graphs.

Simple Truncated SVD based Model for Node Classification on Heterophilic Graphs

Jun 24, 2021

Abstract: Graph Neural Networks (GNNs) have shown excellent performance on graphs that exhibit strong homophily with respect to the node labels, i.e., connected nodes have the same labels. However, they perform poorly on heterophilic graphs. Recent approaches have typically modified aggregation schemes, designed adaptive graph filters, etc. to address this limitation. In spite of this, the performance on heterophilic graphs can still be poor. We propose a simple alternative method that exploits Truncated Singular Value Decomposition (TSVD) of topological structure and node features. Our approach achieves up to ~30% improvement in performance over state-of-the-art methods on heterophilic graphs. This work is an early investigation into methods that differ from aggregation-based approaches. Our experimental results suggest that it might be important to explore other alternatives to aggregation methods for the heterophilic setting.

GLAM: Graph Learning by Modeling Affinity to Labeled Nodes for Graph Neural Networks

Feb 20, 2021

Abstract: Graph Neural Networks have shown excellent performance on semi-supervised classification tasks. However, they assume access to a graph that may often not be available in practice. In the absence of any graph, constructing k-Nearest Neighbor (kNN) graphs from the given data has been shown to give improvements when used with GNNs over other semi-supervised methods. This paper proposes a semi-supervised graph learning method for cases when there are no graphs available. This method learns a graph as a convex combination of the unsupervised kNN graph and a supervised label-affinity graph. The label-affinity graph directly captures all the nodes' label-affinity with the labeled nodes, i.e., how likely a node is to have the same label as the labeled nodes. This affinity measure contrasts with the kNN graph where the metric measures closeness in the feature space. Our experiments suggest that this approach gives close to or better performance (up to 1.5%), while being simpler and faster (up to 70x) to train, than state-of-the-art graph learning methods. We also conduct several experiments to highlight the importance of individual components and contrast them with state-of-the-art methods.
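The convex combination described in this abstract can be pictured with a minimal sketch. It assumes dense adjacency matrices, squared Euclidean distance for the kNN graph, a fixed mixing weight `alpha`, and a label-affinity matrix supplied by hand; in the paper the label-affinity graph is learned, so every name and value below is hypothetical.

```python
def knn_graph(points, k):
    """Directed kNN adjacency matrix using squared Euclidean distance."""
    n = len(points)
    A = [[0.0] * n for _ in range(n)]
    for i in range(n):
        # Distance from node i to every other node, nearest first.
        dists = sorted(
            (sum((a - b) ** 2 for a, b in zip(points[i], points[j])), j)
            for j in range(n) if j != i
        )
        for _, j in dists[:k]:
            A[i][j] = 1.0
    return A


def convex_combine(A_knn, A_label, alpha):
    """Return alpha * A_knn + (1 - alpha) * A_label, with alpha in [0, 1]."""
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must lie in [0, 1] for a convex combination")
    return [[alpha * a + (1.0 - alpha) * b for a, b in zip(row_k, row_l)]
            for row_k, row_l in zip(A_knn, A_label)]


# Three nodes on a line; node 2 is the only labeled node (an assumption).
points = [(0.0, 0.0), (1.0, 0.0), (5.0, 0.0)]
A_knn = knn_graph(points, k=1)

# Hand-written stand-in for a label-affinity graph: nodes 0 and 1 are both
# assumed to share the label of node 2.
A_label = [[0.0, 0.0, 1.0],
           [0.0, 0.0, 1.0],
           [0.0, 0.0, 0.0]]

A_mixed = convex_combine(A_knn, A_label, alpha=0.5)
print(A_mixed)
```

Setting `alpha` to 1 recovers the purely unsupervised kNN graph, and setting it to 0 keeps only the supervised label-affinity edges.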

User Embedding based Neighborhood Aggregation Method for Inductive Recommendation

Feb 16, 2021

Abstract: We consider the problem of learning latent features (aka embeddings) for users and items in a recommendation setting. Given only a user-item interaction graph, the goal is to recommend items for each user. Traditional approaches employ matrix factorization-based collaborative filtering methods. Recent methods using graph convolutional networks (e.g., LightGCN) achieve state-of-the-art performance. They learn both user and item embeddings. One major drawback of most existing methods is that they are not inductive; they do not generalize to users and items unseen during training. Besides, existing network models are quite complex and difficult to train and scale. Motivated by LightGCN, we propose a graph convolutional network modeling approach for collaborative filtering, CF-GCN. We solely learn user embeddings and derive item embeddings using a light variant, CF-LGCN-U, that performs neighborhood aggregation, making it scalable due to reduced model complexity. CF-LGCN-U models naturally possess the inductive capability for new items, and we propose a simple solution to generalize to new users. We show how the proposed models are related to LightGCN. As a by-product, we suggest a simple solution to make LightGCN inductive. We perform comprehensive experiments on several benchmark datasets and demonstrate the capabilities of the proposed approach. Experimental results show that generalization performance similar to or better than that of state-of-the-art methods is achievable in both transductive and inductive settings.
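The idea of deriving item embeddings from user embeddings by neighborhood aggregation can be sketched as follows. This is a simplified illustration, not the CF-LGCN-U model: it assumes a single aggregation step with plain mean normalization, whereas LightGCN-style models use a degree-based normalization and multiple layers. The function name and data layout are hypothetical.

```python
def derive_item_embeddings(interactions, user_emb, num_items):
    """Average the embeddings of the users who interacted with each item.

    interactions : iterable of (user_index, item_index) pairs
    user_emb     : list of user embedding vectors (the only stored parameters)
    num_items    : total number of items, including ones with no interactions
    """
    dim = len(user_emb[0])
    sums = [[0.0] * dim for _ in range(num_items)]
    counts = [0] * num_items
    for u, i in interactions:
        for d in range(dim):
            sums[i][d] += user_emb[u][d]
        counts[i] += 1
    # Items with no interactions keep a zero vector.
    return [[s / c for s in row] if c else row
            for row, c in zip(sums, counts)]


user_emb = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
interactions = [(0, 0), (1, 0), (2, 1)]   # (user, item) pairs
item_emb = derive_item_embeddings(interactions, user_emb, num_items=3)
print(item_emb)
```

Because an item's embedding depends only on the users connected to it, an item that appears after training can be embedded as soon as it has interactions, which is the intuition behind the inductive capability for new items mentioned in the abstract.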

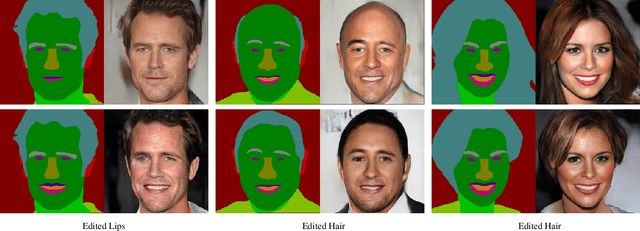

S2cGAN: Semi-Supervised Training of Conditional GANs with Fewer Labels

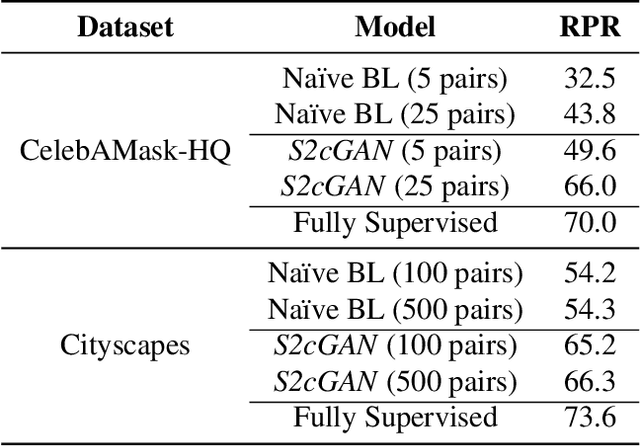

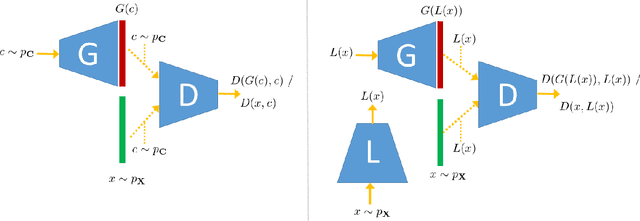

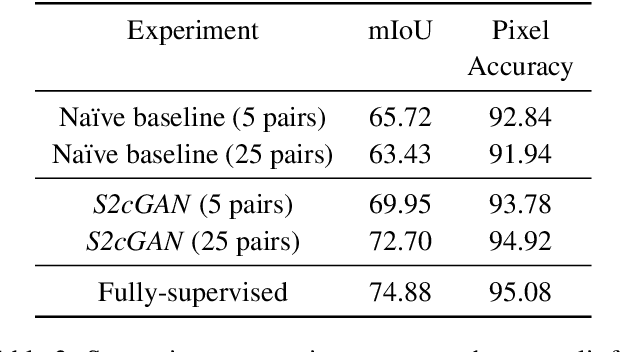

Oct 23, 2020

Abstract: Generative adversarial networks (GANs) have been remarkably successful in learning complex high-dimensional real-world distributions and generating realistic samples. However, they provide limited control over the generation process. Conditional GANs (cGANs) provide a mechanism to control the generation process by conditioning the output on a user-defined input. Although training GANs requires only unsupervised data, training cGANs requires labelled data, which can be very expensive to obtain. We propose a framework for semi-supervised training of cGANs which utilizes sparse labels to learn the conditional mapping, and at the same time leverages a large amount of unsupervised data to learn the unconditional distribution. We demonstrate the effectiveness of our method on multiple datasets and different conditional tasks.

HeteGCN: Heterogeneous Graph Convolutional Networks for Text Classification

Aug 19, 2020

Abstract: We consider the problem of learning efficient and inductive graph convolutional networks for text classification with a large number of examples and features. Existing state-of-the-art graph embedding based methods such as predictive text embedding (PTE) and TextGCN have shortcomings in terms of predictive performance, scalability and inductive capability. To address these limitations, we propose a heterogeneous graph convolutional network (HeteGCN) modeling approach that unites the best aspects of PTE and TextGCN. The main idea is to learn feature embeddings and derive document embeddings using a HeteGCN architecture with different graphs used across layers. We simplify TextGCN by dissecting it into several HeteGCN models, which (a) helps to study the usefulness of individual models and (b) offers flexibility in fusing learned embeddings from different models. In effect, the number of model parameters is reduced significantly, enabling faster training and improving performance in the small labeled training set scenario. Our detailed experimental studies demonstrate the efficacy of the proposed approach.

A Graph Convolutional Network Composition Framework for Semi-supervised Classification

Apr 08, 2020

Abstract: Graph convolutional networks (GCNs) have gained popularity due to the high performance achievable on several downstream tasks, including node classification. Several architectural variants of these networks have been proposed and investigated with experimental studies in the literature. Motivated by a recent work on simplifying GCNs, we study the problem of designing other variants and propose a framework to compose networks using building blocks of GCN. The framework offers flexibility to compose and evaluate different networks using feature and/or label propagation networks, linear or non-linear networks, with each composition having different computational complexity. We conduct a detailed experimental study on several benchmark datasets with many variants and present observations from our evaluation. Our experimental results suggest that several newly composed variants are useful alternatives to consider because they are as competitive as, or better than, the original GCN.
