Raghava Modhugu

Ego4D: Around the World in 3,000 Hours of Egocentric Video

Oct 13, 2021

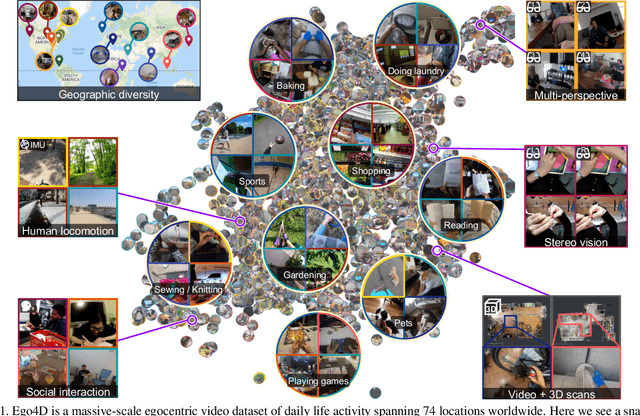

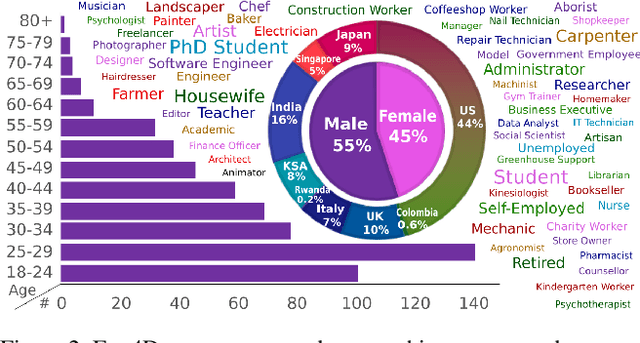

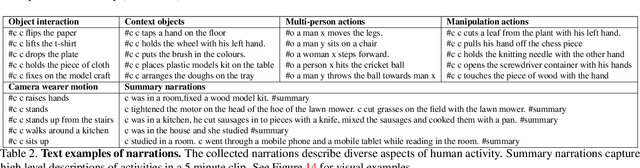

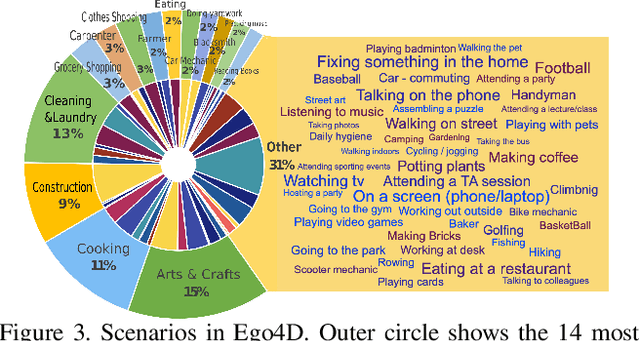

Abstract: We introduce Ego4D, a massive-scale egocentric video dataset and benchmark suite. It offers 3,025 hours of daily-life activity video spanning hundreds of scenarios (household, outdoor, workplace, leisure, etc.) captured by 855 unique camera wearers from 74 worldwide locations and 9 different countries. The approach to collection is designed to uphold rigorous privacy and ethics standards with consenting participants and robust de-identification procedures where relevant. Ego4D dramatically expands the volume of diverse egocentric video footage publicly available to the research community. Portions of the video are accompanied by audio, 3D meshes of the environment, eye gaze, stereo, and/or synchronized videos from multiple egocentric cameras at the same event. Furthermore, we present a host of new benchmark challenges centered around understanding the first-person visual experience in the past (querying an episodic memory), present (analyzing hand-object manipulation, audio-visual conversation, and social interactions), and future (forecasting activities). By publicly sharing this massive annotated dataset and benchmark suite, we aim to push the frontier of first-person perception. Project page: https://ego4d-data.org/

Evaluating Computer Vision Techniques for Urban Mobility on Large-Scale, Unconstrained Roads

Sep 11, 2021

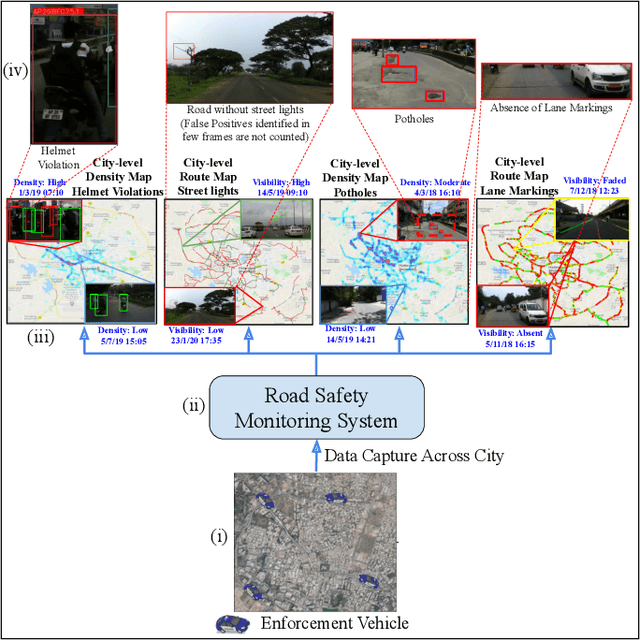

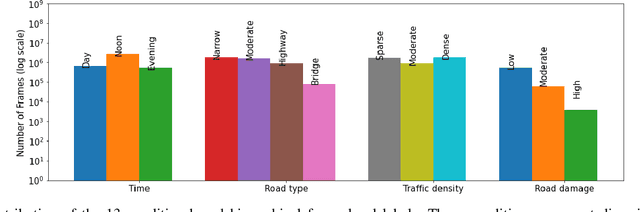

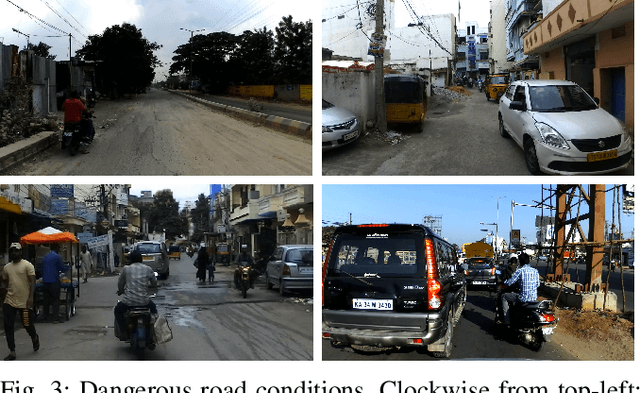

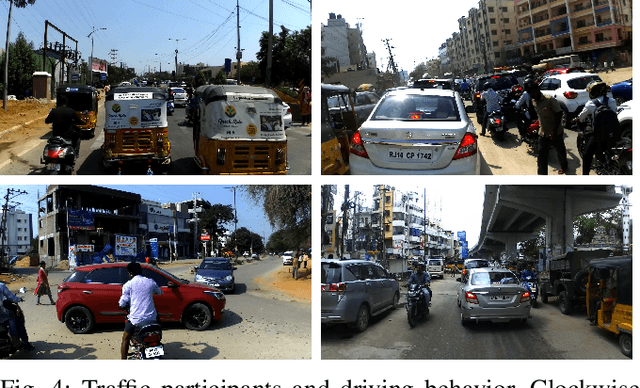

Abstract: Conventional approaches for addressing road safety rely on manual interventions or immobile CCTV infrastructure. Such methods are expensive for enforcing compliance with traffic rules and do not scale to large road networks. This paper proposes a simple mobile imaging setup to address several common problems in road safety at scale. We use recent computer vision techniques to identify possible irregularities on roads, the absence of street lights, and defective traffic signs using videos from a moving camera-mounted vehicle. Beyond the inspection of static road infrastructure, we also demonstrate the mobile imaging solution's applicability to spot traffic violations. Before deploying our system in the real world, we investigate the strengths and shortcomings of computer vision techniques on thirteen condition-based hierarchical labels. These conditions include different timings, road type, traffic density, and state of road damage. Our demonstrations are then carried out on 2000 km of unconstrained road scenes, captured across an entire city. From these, we quantitatively measure the overall safety of roads in the city using carefully constructed metrics. We also show an interactive dashboard for visually inspecting and initiating action in a time-, labor-, and cost-efficient manner. Code, models, and datasets used in this work will be publicly released.
