Rachel Lea Draelos

Explainable multiple abnormality classification of chest CT volumes with AxialNet and HiResCAM

Nov 24, 2021

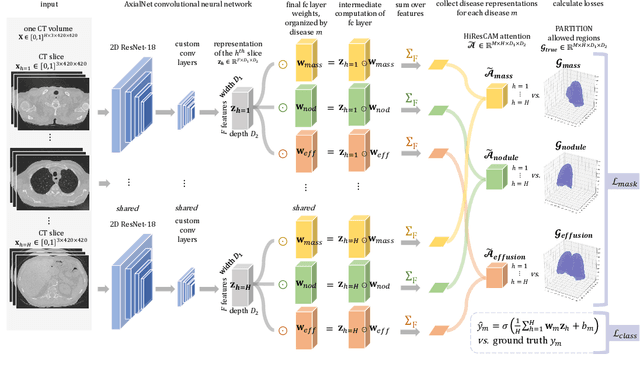

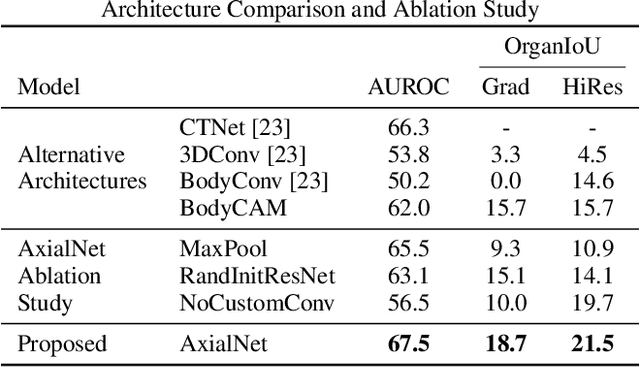

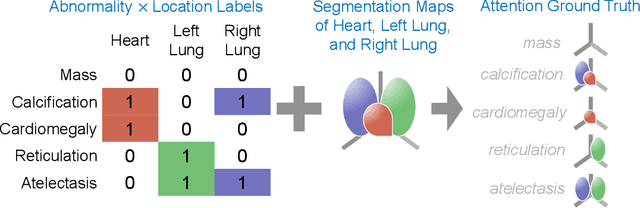

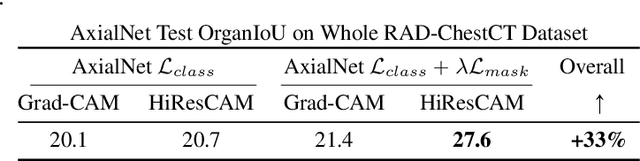

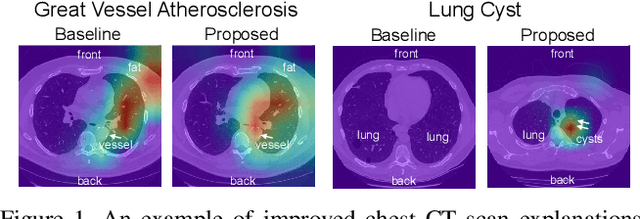

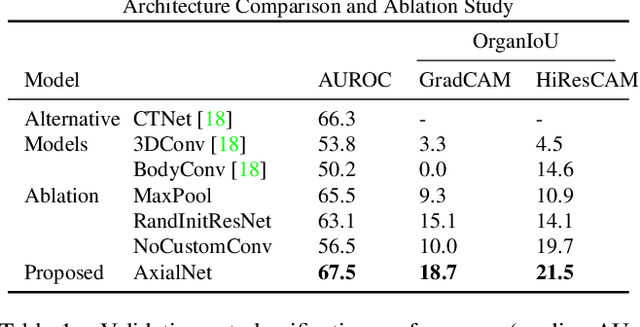

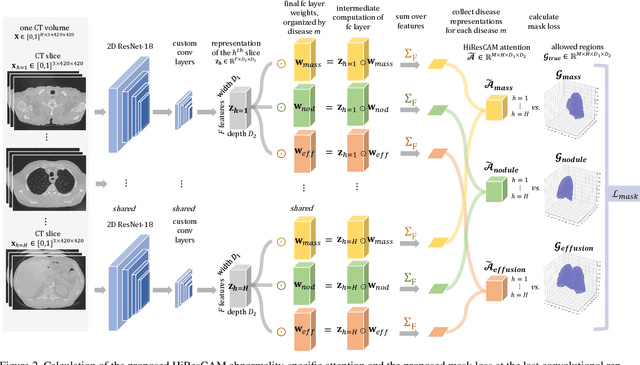

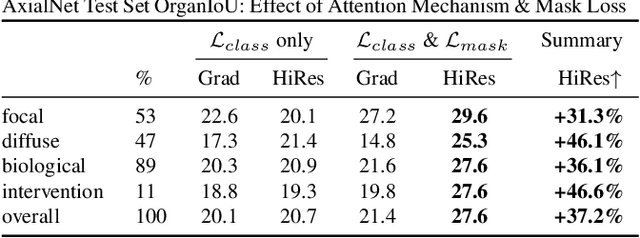

Abstract: Understanding model predictions is critical in healthcare, to facilitate rapid verification of model correctness and to guard against use of models that exploit confounding variables. We introduce the challenging new task of explainable multiple abnormality classification in volumetric medical images, in which a model must indicate the regions used to predict each abnormality. To solve this task, we propose a multiple instance learning convolutional neural network, AxialNet, that allows identification of top slices for each abnormality. Next we incorporate HiResCAM, an attention mechanism, to identify sub-slice regions. We prove that for AxialNet, HiResCAM explanations are guaranteed to reflect the locations the model used, unlike Grad-CAM which sometimes highlights irrelevant locations. Armed with a model that produces faithful explanations, we then aim to improve the model's learning through a novel mask loss that leverages HiResCAM and 3D allowed regions to encourage the model to predict abnormalities based only on the organs in which those abnormalities appear. The 3D allowed regions are obtained automatically through a new approach, PARTITION, that combines location information extracted from radiology reports with organ segmentation maps obtained through morphological image processing. Overall, we propose the first model for explainable multi-abnormality prediction in volumetric medical images, and then use the mask loss to achieve a 33% improvement in organ localization of multiple abnormalities in the RAD-ChestCT data set of 36,316 scans, representing the state of the art. This work advances the clinical applicability of multiple abnormality modeling in chest CT volumes.
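To illustrate the multiple instance learning idea in the abstract above, here is a minimal sketch of how per-slice scores could be combined into volume-level scores and how top slices could be ranked for one abnormality. Mean aggregation, the function names, and the toy scores are all assumptions made for illustration; the abstract does not specify AxialNet's actual aggregation.

```python
def aggregate_volume_scores(slice_scores):
    """Combine per-slice scores into one score per abnormality.

    slice_scores[s][j] is the score of slice s for abnormality j.
    Mean aggregation is an assumption of this sketch.
    """
    n_slices = len(slice_scores)
    n_labels = len(slice_scores[0])
    return [sum(row[j] for row in slice_scores) / n_slices for j in range(n_labels)]


def top_slices(slice_scores, label_index, k=2):
    """Return the indices of the k highest-scoring slices for one abnormality."""
    order = sorted(
        range(len(slice_scores)),
        key=lambda s: slice_scores[s][label_index],
        reverse=True,
    )
    return order[:k]


# Hypothetical scores for 4 slices and 2 abnormalities.
slice_scores = [
    [0.5, 2.0],
    [0.25, -1.0],
    [2.0, 0.0],
    [1.25, -1.0],
]

volume_scores = aggregate_volume_scores(slice_scores)
best_for_first = top_slices(slice_scores, label_index=0)
best_for_second = top_slices(slice_scores, label_index=1)
```

Because each slice contributes its own score, the slices that drive a volume-level prediction can be read off directly, which is the property the abstract refers to as identifying top slices.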

Playing Codenames with Language Graphs and Word Embeddings

May 12, 2021

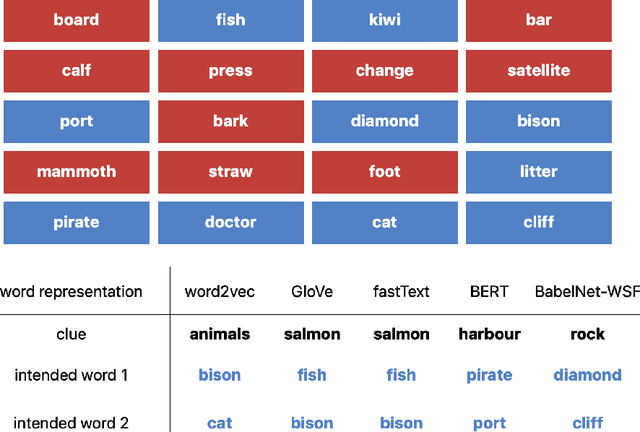

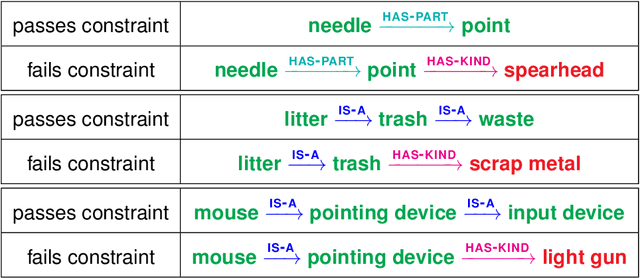

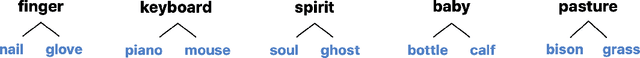

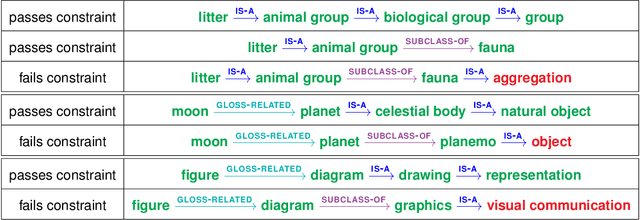

Abstract: Although board games and video games have been studied for decades in artificial intelligence research, challenging word games remain relatively unexplored. Word games are not as constrained as games like chess or poker. Instead, word game strategy is defined by the players' understanding of the way words relate to each other. The word game Codenames provides a unique opportunity to investigate common sense understanding of relationships between words, an important open challenge. We propose an algorithm that can generate Codenames clues from the language graph BabelNet or from any of several embedding methods - word2vec, GloVe, fastText or BERT. We introduce a new scoring function that measures the quality of clues, and we propose a weighting term called DETECT that incorporates dictionary-based word representations and document frequency to improve clue selection. We develop BabelNet-Word Selection Framework (BabelNet-WSF) to improve BabelNet clue quality and overcome the computational barriers that previously prevented leveraging language graphs for Codenames. Extensive experiments with human evaluators demonstrate that our proposed innovations yield state-of-the-art performance, with up to 102.8% improvement in precision@2 in some cases. Overall, this work advances the formal study of word games and approaches for common sense language understanding.
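As a rough illustration of embedding-based clue generation, the sketch below ranks candidate clues by how close they are to the words a team wants guessed and how far they are from a word to avoid. This is a generic cosine-similarity heuristic, not the paper's scoring function and not DETECT; the function names and the two-dimensional toy vectors are hypothetical stand-ins for real word2vec, GloVe, fastText, or BERT embeddings.

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def clue_score(clue_vec, target_vecs, avoid_vecs):
    """Similarity to the least similar target minus similarity to the closest word to avoid."""
    worst_target = min(cosine(clue_vec, t) for t in target_vecs)
    closest_avoid = max(cosine(clue_vec, a) for a in avoid_vecs)
    return worst_target - closest_avoid


def best_clue(candidates, targets, avoid, vectors):
    """Pick the candidate clue with the highest score."""
    target_vecs = [vectors[w] for w in targets]
    avoid_vecs = [vectors[w] for w in avoid]
    return max(candidates, key=lambda c: clue_score(vectors[c], target_vecs, avoid_vecs))


# Hypothetical toy embeddings; real embeddings have hundreds of dimensions.
vectors = {
    "ocean": [1.0, 0.0],
    "wave": [0.9, 0.1],
    "desk": [0.0, 1.0],
    "sea": [1.0, 0.05],
    "office": [0.05, 1.0],
}

chosen = best_clue(["sea", "office"], targets=["ocean", "wave"], avoid=["desk"], vectors=vectors)
```

Taking the minimum over targets favors clues that connect to every intended word rather than to only one of them.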

HiResCAM: Explainable Multi-Organ Multi-Abnormality Prediction in 3D Medical Images

Nov 17, 2020

Abstract: Understanding model predictions is critical in healthcare, to facilitate rapid real-time verification of model correctness and to guard against the use of models that exploit confounding variables. Motivated by the need for explainable models, we address the challenging task of explainable multiple abnormality classification in volumetric medical images. We propose a novel attention mechanism, HiResCAM, that highlights relevant regions within each volume for each abnormality queried. We investigate the relationship between HiResCAM and the popular model explanation method Grad-CAM, and demonstrate that HiResCAM yields better performance on abnormality localization and produces explanations that are more faithful to the underlying model. Finally, we introduce a mask loss that leverages HiResCAM to require the model to predict abnormalities based on only the organs in which those abnormalities appear. Our innovations achieve a 37% improvement in explanation quality, resulting in state-of-the-art weakly supervised organ localization of abnormalities in the RAD-ChestCT data set of 36,316 CT volumes. We also demonstrate on PASCAL VOC 2012 the different properties of HiResCAM and Grad-CAM on natural images. Overall, this work advances convolutional neural network explanation approaches and the clinical applicability of multi-abnormality modeling in volumetric medical images.
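The difference between the two explanation methods can be sketched on a toy example. Grad-CAM averages each feature map's gradients over all spatial positions before weighting the activations, while HiResCAM multiplies gradients and activations element-wise and then sums over feature maps. The sketch below assumes spatial positions are flattened into a single list, omits any final ReLU, and uses made-up numbers; it is an illustration, not the authors' implementation.

```python
def grad_cam(activations, gradients):
    """Grad-CAM style map: one weight per feature map (spatial mean of its gradients)."""
    n_positions = len(activations[0])
    weights = [sum(g) / len(g) for g in gradients]
    return [
        sum(w * a[i] for w, a in zip(weights, activations))
        for i in range(n_positions)
    ]


def hires_cam(activations, gradients):
    """HiResCAM style map: element-wise gradient * activation, summed over feature maps."""
    n_positions = len(activations[0])
    return [
        sum(g[i] * a[i] for g, a in zip(gradients, activations))
        for i in range(n_positions)
    ]


# Hypothetical values: 2 feature maps, 2 spatial positions each.
activations = [[1.0, 1.0], [0.5, 2.0]]
# The first feature map has gradients of opposite sign at the two positions.
gradients = [[2.0, -2.0], [1.0, 1.0]]

grad_cam_map = grad_cam(activations, gradients)    # [0.5, 2.0]
hires_cam_map = hires_cam(activations, gradients)  # [2.5, 0.0]
```

In this toy case the opposite-sign gradients of the first feature map average to zero under Grad-CAM, so its contribution vanishes and the second position looks most important. HiResCAM keeps the per-position gradients and instead highlights the first position.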

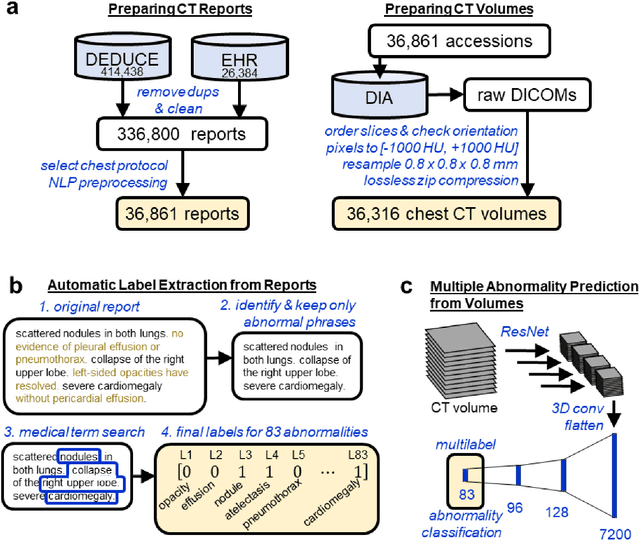

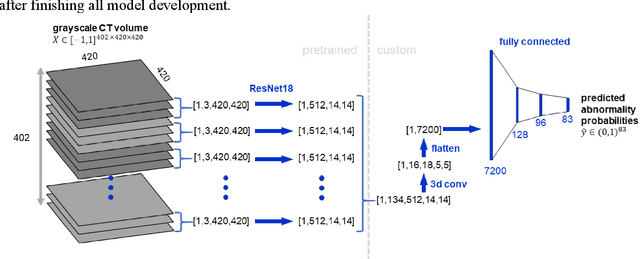

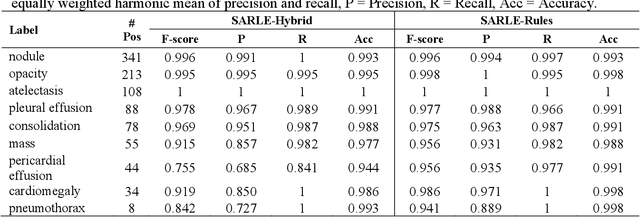

Machine-Learning-Based Multiple Abnormality Prediction with Large-Scale Chest Computed Tomography Volumes

Feb 17, 2020

Abstract: Machine learning models for radiology benefit from large-scale data sets with high quality labels for abnormalities. We curated and analyzed a chest computed tomography (CT) data set of 36,316 volumes from 19,993 unique patients. This is the largest multiply-annotated volumetric medical imaging data set reported. To annotate this data set, we developed a rule-based method for automatically extracting abnormality labels from free-text radiology reports with an average F-score of 0.976 (min 0.941, max 1.0). We also developed a model for multi-organ, multi-disease classification of chest CT volumes that uses a deep convolutional neural network (CNN). This model reached a classification performance of AUROC greater than 0.90 for 18 abnormalities, with an average AUROC of 0.773 for all 83 abnormalities, demonstrating the feasibility of learning from unfiltered whole volume CT data. We show that training on more labels improves performance significantly: for a subset of 9 labels - nodule, opacity, atelectasis, pleural effusion, consolidation, mass, pericardial effusion, cardiomegaly, and pneumothorax - the model's average AUROC increased by 10% when the number of training labels was increased from 9 to all 83. All code for volume preprocessing, automated label extraction, and the volume abnormality prediction model will be made publicly available. The 36,316 CT volumes and labels will also be made publicly available pending institutional approval.
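To give a feel for what rule-based label extraction from free-text reports can look like, here is a deliberately simplified sketch: it splits a report into sentences, skips sentences that contain a negation cue, and matches the rest against a small vocabulary. The vocabulary, the negation cues, and the function name are hypothetical, and the paper's actual rules are not described in the abstract.

```python
import re

# Hypothetical vocabulary mapping each label to phrases that indicate it.
ABNORMALITY_TERMS = {
    "nodule": ["nodule", "nodules"],
    "pleural effusion": ["pleural effusion"],
    "atelectasis": ["atelectasis"],
}

# Hypothetical negation cues; a sentence containing one is skipped entirely.
NEGATION_CUES = ["no", "without", "negative for"]


def extract_labels(report):
    """Return the set of abnormality labels asserted (not negated) in a report."""
    labels = set()
    for sentence in re.split(r"[.\n]+", report.lower()):
        padded = " " + sentence.strip() + " "
        if any(" " + cue + " " in padded for cue in NEGATION_CUES):
            continue
        for label, terms in ABNORMALITY_TERMS.items():
            if any(term in padded for term in terms):
                labels.add(label)
    return labels


report = (
    "No pleural effusion. "
    "There is a 4 mm nodule in the right upper lobe. "
    "Lungs are without atelectasis."
)
found = extract_labels(report)
```

Skipping whole sentences on any negation cue is a coarse choice that would miss mixed sentences such as "nodule present, no effusion"; a production system would need finer-grained scoping.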
