Qun Dai

CloudMamba: Grouped Selective State Spaces for Point Cloud Analysis

Nov 11, 2025

Abstract: Due to its long-range modeling ability and linear complexity, Mamba has attracted considerable attention in point cloud analysis. Despite some interesting progress, related work still suffers from imperfect point cloud serialization, insufficient high-level geometric perception, and overfitting of the selective state space model (S6) at the core of Mamba. To this end, we propose CloudMamba, an SSM-based point cloud network that addresses these challenges. Specifically, we propose sequence expanding and sequence merging: the former serializes points along each axis separately, and the latter fuses the corresponding higher-order features causally inferred from the different sequences. Together they enable unordered point sets to adapt more stably to the causal nature of Mamba without introducing any parameters. Meanwhile, we design chainedMamba, which chains the forward and backward processes of the parallel bidirectional Mamba to capture high-level geometric information during scanning. In addition, we propose a grouped selective state space model (GS6) by applying parameter sharing to S6, alleviating the overfitting caused by the computational mode of S6. Experiments on various point cloud tasks validate that CloudMamba achieves state-of-the-art results with significantly lower complexity.
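The sequence expanding and sequence merging steps can be illustrated with a minimal NumPy sketch, assuming the parameter-free fusion is a simple average taken after each sequence's outputs are mapped back to the original point order. This is not the authors' implementation: the function names are hypothetical, and `causal_cumulative_mean` is a toy causal scan that stands in for Mamba/S6 so the example runs without learned weights.

```python
import numpy as np

def sequence_expand(points):
    """Return one serialization order per axis.

    points: (N, 3) array. Each order sorts the points by a single coordinate,
    turning the unordered set into one 1-D sequence per axis.
    """
    return [np.argsort(points[:, axis], kind="stable") for axis in range(points.shape[1])]

def causal_cumulative_mean(seq):
    # Hypothetical stand-in for a causal SSM scan: position i only sees positions 0..i.
    counts = np.arange(1, seq.shape[0] + 1)[:, None]
    return np.cumsum(seq, axis=0) / counts

def sequence_merge(seq_features, orders):
    """Fuse per-sequence outputs back onto the original point order.

    seq_features[k] has shape (N, C); its row i belongs to point orders[k][i].
    Averaging is an assumed parameter-free fusion rule.
    """
    n, c = seq_features[0].shape
    merged = np.zeros((n, c))
    for feats, order in zip(seq_features, orders):
        restored = np.empty_like(feats)
        restored[order] = feats  # undo the sort: row i returns to point order[i]
        merged += restored
    return merged / len(seq_features)

rng = np.random.default_rng(0)
pts = rng.random((5, 3))
orders = sequence_expand(pts)
outs = [causal_cumulative_mean(pts[o]) for o in orders]  # use coordinates as features
fused = sequence_merge(outs, orders)
print(fused.shape)  # (5, 3)
```

Scattering each sequence's outputs back to the original indices before fusing is what makes the merge order-independent: every point receives one contribution per axis, regardless of where it sat in each scan.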

Uncertainty Guided Refinement for Fine-Grained Salient Object Detection

Apr 13, 2025

Abstract: Recently, salient object detection (SOD) methods have achieved impressive performance. However, the salient regions predicted by existing methods usually contain unsaturated regions and shadows, which limits the model's ability to make reliable fine-grained predictions. To address this, we introduce uncertainty guidance learning to SOD to enhance the model's perception of uncertain regions. Specifically, we design a novel Uncertainty Guided Refinement Attention Network (UGRAN), which incorporates three key components: the Multilevel Interaction Attention (MIA) module, the Scale Spatial-Consistent Attention (SSCA) module, and the Uncertainty Refinement Attention (URA) module. Unlike conventional methods dedicated to enhancing features, the proposed MIA facilitates the interaction and perception of multilevel features, leveraging their complementary characteristics. Then, through the proposed SSCA, salient information across diverse scales within the aggregated features can be integrated more comprehensively. Subsequently, we use the uncertainty map generated from the saliency prediction map to enhance the model's perception of uncertain regions, producing a highly saturated, fine-grained saliency prediction map. Additionally, we devise an adaptive dynamic partition (ADP) mechanism to minimize the computational overhead of the URA module and improve the utilization of uncertainty guidance. Experiments on seven benchmark datasets demonstrate the superiority of the proposed UGRAN over state-of-the-art methods. Code will be released at https://github.com/I2-Multimedia-Lab/UGRAN.
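Deriving an uncertainty map from a saliency prediction, and using it to restrict where refinement is computed, can be sketched in NumPy. The abstract does not give UGRAN's formula, so `u = 1 - |2p - 1|` is an assumed, commonly used choice. The fixed-size tile selection in the hypothetical `uncertain_patches` is only a stand-in for the idea behind the adaptive dynamic partition (ADP) mechanism, not a reproduction of it.

```python
import numpy as np

def uncertainty_map(saliency):
    """Per-pixel uncertainty from a saliency map with values in [0, 1].

    Assumed formula: u = 1 - |2p - 1|, which is 0 for confident pixels
    (p = 0 or p = 1) and 1 where the prediction is most ambiguous (p = 0.5).
    """
    p = np.clip(saliency, 0.0, 1.0)
    return 1.0 - np.abs(2.0 * p - 1.0)

def uncertain_patches(u, patch=4, threshold=0.5):
    """Hypothetical tile selection: return (row, col) indices of the
    patch x patch tiles whose mean uncertainty exceeds `threshold`, so a
    refinement module would only need to process those tiles."""
    h, w = u.shape
    cropped = u[: h - h % patch, : w - w % patch]  # drop incomplete border tiles
    tiles = cropped.reshape(h // patch, patch, w // patch, patch)
    scores = tiles.mean(axis=(1, 3))  # mean uncertainty per tile
    return np.argwhere(scores > threshold)

sal = np.zeros((8, 8))
sal[:4, :4] = 0.5  # ambiguous top-left tile
sal[4:, 4:] = 1.0  # confidently salient bottom-right tile
u = uncertainty_map(sal)
print(uncertain_patches(u))  # [[0 0]]
```

Only the ambiguous tile is selected; confidently salient and confidently background tiles both have zero uncertainty, so a refinement stage restricted to selected tiles would skip them.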

GrokLST: Towards High-Resolution Benchmark and Toolkit for Land Surface Temperature Downscaling

Sep 30, 2024

Abstract: Land Surface Temperature (LST) is a critical parameter for environmental studies, but obtaining high-resolution LST data remains challenging due to the spatio-temporal trade-off in satellite remote sensing. Guided LST downscaling has emerged as a solution, but current methods often neglect spatial non-stationarity and lack an open-source ecosystem for deep learning methods. To address these limitations, we propose the Modality-Conditional Large Selective Kernel (MoCoLSK) Networks, a novel architecture that dynamically fuses multi-modal data through modality-conditioned projections. MoCoLSK re-engineers our previous LSKNet to combine dynamic receptive field adjustment with multi-modal feature integration, leading to more accurate LST prediction. Furthermore, we establish the GrokLST project, a comprehensive open-source ecosystem comprising the GrokLST dataset, a high-resolution benchmark, and the GrokLST toolkit, an open-source PyTorch-based toolkit that encapsulates MoCoLSK alongside 40+ state-of-the-art approaches. Extensive experimental results validate MoCoLSK's effectiveness in capturing complex dependencies and subtle variations within multispectral data, outperforming existing methods in LST downscaling. Our code, dataset, and toolkit are available at https://github.com/GrokCV/GrokLST.
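Choosing a receptive field per pixel based on another modality can be illustrated with a simplified NumPy sketch in the spirit of selective-kernel designs. It is not MoCoLSK: box filters stand in for learned large-kernel convolutions, and the per-branch guidance weights `alpha`, the logit construction, and the function names are hypothetical choices made only to show the selection mechanism.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def box_filter(x, k):
    """Mean over a k x k window (k odd) with edge padding; keeps x's (H, W) shape.
    A stand-in for a depthwise convolution with receptive field k."""
    pad = k // 2
    xp = np.pad(x, pad, mode="edge")
    return sliding_window_view(xp, (k, k)).mean(axis=(-2, -1))

def softmax(z, axis=0):
    z = z - z.max(axis=axis, keepdims=True)  # subtract max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)

def conditioned_kernel_selection(lst_feat, guide, kernels=(3, 7), alpha=(1.0, -1.0)):
    """Hypothetical guidance-conditioned selection between receptive fields.

    lst_feat: (C, H, W) features from the LST branch.
    guide:    (H, W) map from a guidance modality.
    Each branch blurs the features with its own kernel size. A per-pixel logit
    is the branch's channel mean plus the guidance map scaled by that branch's
    alpha; a softmax over branches yields spatial selection weights.
    """
    branches = np.stack([
        np.stack([box_filter(ch, k) for ch in lst_feat]) for k in kernels
    ])  # (K, C, H, W)
    logits = branches.mean(axis=1) + np.asarray(alpha)[:, None, None] * guide  # (K, H, W)
    weights = softmax(logits, axis=0)  # per-pixel weights summing to 1 over branches
    return (weights[:, None] * branches).sum(axis=0), weights

rng = np.random.default_rng(0)
feat = rng.random((4, 8, 8))
guide = rng.random((8, 8))
out, w = conditioned_kernel_selection(feat, guide)
print(out.shape, w.shape)  # (4, 8, 8) (2, 8, 8)
```

Because the weights come from a softmax over branches, each output pixel is a convex combination of the small- and large-kernel responses, and the guidance map shifts that balance locally.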

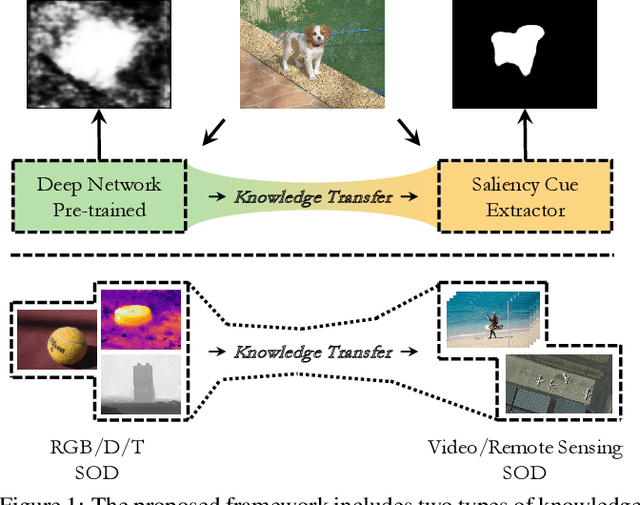

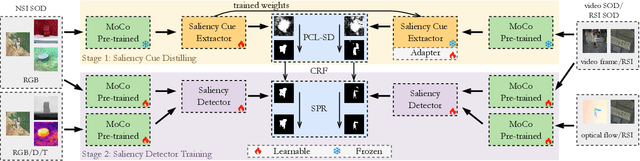

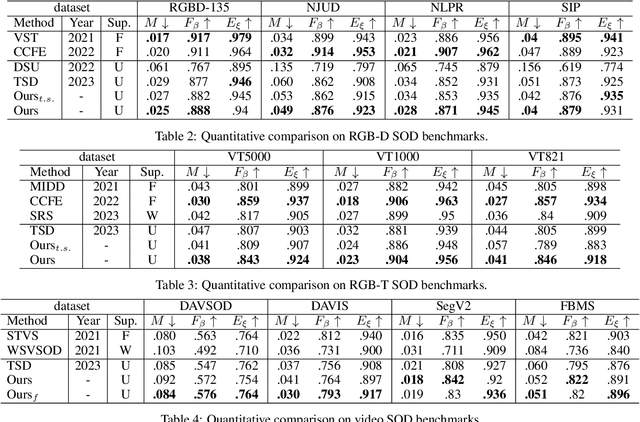

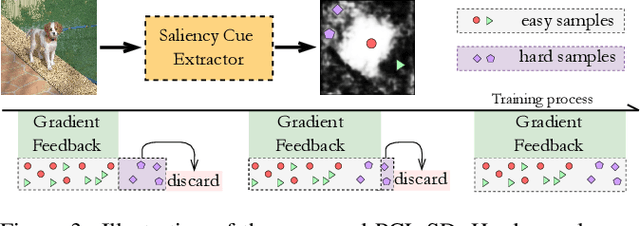

Unified Unsupervised Salient Object Detection via Knowledge Transfer

Apr 23, 2024

Abstract: Recently, unsupervised salient object detection (USOD) has gained increasing attention due to its annotation-free nature. However, current methods mainly focus on specific tasks such as RGB and RGB-D SOD, neglecting the potential for task migration. In this paper, we propose a unified USOD framework for generic USOD tasks. First, we propose a Progressive Curriculum Learning-based Saliency Distilling (PCL-SD) mechanism to extract saliency cues from a pre-trained deep network. This mechanism starts with easy samples and progressively moves towards harder ones to avoid interference from hard samples in the early stages of training. Then, the obtained saliency cues are used to train a saliency detector, and we employ a Self-rectify Pseudo-label Refinement (SPR) mechanism to improve the quality of the pseudo-labels. Finally, an adapter-tuning method is devised to transfer the acquired saliency knowledge, leveraging shared knowledge to achieve superior transfer performance on the target tasks. Extensive experiments on five representative SOD tasks confirm the effectiveness and feasibility of our proposed method. Code and supplementary materials are available at https://github.com/I2-Multimedia-Lab/A2S-v3.
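The easy-to-hard schedule behind PCL-SD can be sketched as a training subset that grows over epochs. This NumPy example is an assumption-laden illustration rather than the authors' mechanism: the per-sample `difficulty` scores, the linear growth from `start_frac`, and the function name `curriculum_subset` are all hypothetical.

```python
import numpy as np

def curriculum_subset(difficulty, epoch, total_epochs, start_frac=0.3):
    """Indices of the samples used at `epoch` under an easy-to-hard schedule.

    difficulty: (N,) scores, lower = easier (how difficulty is measured is an assumption).
    The fraction of the training set grows linearly from start_frac at epoch 0
    to the full set at the final epoch; the easiest samples are always included first.
    """
    n = len(difficulty)
    progress = min(epoch / max(total_epochs - 1, 1), 1.0)
    frac = start_frac + (1.0 - start_frac) * progress
    k = min(n, max(1, int(np.ceil(frac * n))))  # clamp to [1, n] against rounding
    order = np.argsort(difficulty, kind="stable")  # easiest first
    return order[:k]

difficulty = np.array([0.9, 0.1, 0.5, 0.3, 0.7])
for epoch in range(3):
    print(epoch, curriculum_subset(difficulty, epoch, total_epochs=3))
```

Hard samples only enter once the model has trained on the easier ones, which is the interference-avoiding behavior the mechanism targets; in practice the difficulty scores could also be recomputed as the model improves.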