Quentin Barthélemy

CRNL, CRNL-COPHY

Improved Riemannian potato field: an Automatic Artifact Rejection Method for EEG

Sep 11, 2025

Abstract: Electroencephalography (EEG) signal cleaning has long been a critical challenge in the research community. The presence of artifacts can significantly degrade EEG data quality, complicating analysis and potentially leading to erroneous interpretations. While various artifact rejection methods have been proposed, the gold standard remains manual visual inspection by human experts, a process that is time-consuming, subjective, and impractical for large-scale EEG studies. Existing techniques are often hindered by a strong reliance on manual hyperparameter tuning, sensitivity to outliers, and high computational costs. In this paper, we introduce the improved Riemannian Potato Field (iRPF), a fast and fully automated method for EEG artifact rejection that addresses key limitations of current approaches. We evaluate iRPF against several state-of-the-art artifact rejection methods, using two publicly available EEG databases, labeled for various artifact types, comprising 226 EEG recordings. Our results demonstrate that iRPF outperforms all competitors across multiple metrics, with gains of up to 22% in recall, 102% in specificity, 54% in precision, and 24% in F1-score, compared to Isolation Forest, Autoreject, Riemannian Potato, and Riemannian Potato Field, respectively. Statistical analysis confirmed the significance of these improvements (p < 0.001) with large effect sizes (Cohen's d > 0.8) in most comparisons. Additionally, on a typical EEG recording, iRPF performs artifact cleaning in under 8 milliseconds per epoch on a standard laptop, highlighting its efficiency for large-scale EEG data processing and real-time applications. iRPF offers a robust and data-driven artifact rejection solution for high-quality EEG pre-processing in brain-computer interfaces and clinical neuroimaging applications.

Translation-Equivariance of Normalization Layers and Aliasing in Convolutional Neural Networks

May 26, 2025

Abstract: The design of convolutional neural architectures that are exactly equivariant to continuous translations is an active field of research. It promises to benefit scientific computing, notably by making existing imaging systems more physically accurate. Most efforts focus on the design of downsampling/pooling layers, upsampling layers and activation functions, but little attention is dedicated to normalization layers. In this work, we present a novel theoretical framework for understanding the equivariance of normalization layers to discrete shifts and continuous translations. We also determine necessary and sufficient conditions for normalization layers to be equivariant in terms of the dimensions they operate on. Using real feature maps from ResNet-18 and ImageNet, we test those theoretical results empirically and find that they are consistent with our predictions.

Bridging the Theoretical Gap in Randomized Smoothing

Apr 03, 2025

Abstract: Randomized smoothing has become a leading approach for certifying adversarial robustness in machine learning models. However, a persistent gap remains between theoretical certified robustness and empirical robustness accuracy. This paper introduces a new framework that bridges this gap by leveraging Lipschitz continuity for certification and proposing a novel, less conservative method for computing confidence intervals in randomized smoothing. Our approach tightens the bounds of certified robustness, offering a more accurate reflection of model robustness in practice. Through rigorous experimentation, we show that our method improves the robust accuracy, narrowing the gap between empirical findings and previous theoretical results. We argue that investigating local Lipschitz constants and designing ad-hoc confidence intervals can further enhance the performance of randomized smoothing. These results pave the way for a deeper understanding of the relationship between Lipschitz continuity and certified robustness.

Spectral Norm of Convolutional Layers with Circular and Zero Paddings

Jan 31, 2024

Abstract: This paper builds on the Gram iteration, an efficient, deterministic, and differentiable method for computing the spectral norm with an upper bound guarantee. The Gram iteration was designed for circular convolutional layers; we generalize it to zero-padding convolutional layers and prove its quadratic convergence. We also provide theorems bridging the gap between the spectral norms of circular and zero-padding convolutions. We design a spectral rescaling that can be used as a competitive 1-Lipschitz layer that enhances network robustness. Experiments show that our method outperforms state-of-the-art techniques in precision, computational cost, and scalability. The code for the experiments is available at https://github.com/blaisedelattre/lip4conv.

The Lipschitz-Variance-Margin Tradeoff for Enhanced Randomized Smoothing

Sep 28, 2023

Abstract: Real-life applications of deep neural networks are hindered by their unsteady predictions when faced with noisy inputs and adversarial attacks. In this context, the certified radius is a crucial indicator of the robustness of models. However, how can one design an efficient classifier with a sufficient certified radius? Randomized smoothing provides a promising framework by relying on noise injection in inputs to obtain a smoothed and more robust classifier. In this paper, we first show that the variance introduced by randomized smoothing closely interacts with two other important properties of the classifier, i.e. its Lipschitz constant and margin. More precisely, our work emphasizes the dual impact of the Lipschitz constant of the base classifier, on both the smoothed classifier and the empirical variance. Moreover, to increase the certified robust radius, we introduce a different simplex projection technique for the base classifier to leverage the variance-margin trade-off thanks to Bernstein's concentration inequality, along with an enhanced Lipschitz bound. Experimental results show a significant improvement in certified accuracy compared to current state-of-the-art methods. Our novel certification procedure allows us to use pre-trained models that are used with randomized smoothing, effectively improving the current certification radius in a zero-shot manner.
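
For readers unfamiliar with randomized smoothing, the noise-injection idea mentioned in this abstract can be illustrated with a minimal sketch. It is not the paper's certification procedure: it assumes the classic Gaussian-smoothing L2 radius `sigma * Phi^{-1}(p_lower)` and swaps in a simple one-sided Hoeffding bound where the paper relies on Bernstein's inequality. The function name `certify` and the parameters `n0`, `n`, and `alpha` are hypothetical choices made for this illustration.

```python
import math
from statistics import NormalDist

import numpy as np


def certify(base_classifier, x, sigma, n0=100, n=1000, alpha=0.001, rng=None):
    """Monte Carlo sketch of randomized smoothing (illustrative only).

    Returns (predicted_class, certified_L2_radius), or (None, 0.0) to abstain.
    """
    rng = np.random.default_rng() if rng is None else rng
    x = np.asarray(x, dtype=float)

    def noisy_votes(num):
        # Classify `num` copies of x perturbed by isotropic Gaussian noise.
        noise = rng.normal(0.0, sigma, size=(num,) + x.shape)
        return [base_classifier(x + eps) for eps in noise]

    # Step 1: guess the top class from a small, separate batch of samples.
    guess = noisy_votes(n0)
    top = max(set(guess), key=guess.count)

    # Step 2: estimate the probability of that class from fresh samples.
    p_hat = sum(v == top for v in noisy_votes(n)) / n

    # One-sided Hoeffding lower bound, valid with probability >= 1 - alpha.
    # (Assumption: a simple stand-in for tighter confidence intervals.)
    p_lower = p_hat - math.sqrt(math.log(1.0 / alpha) / (2.0 * n))
    if p_lower <= 0.5:
        return None, 0.0  # not confident enough: abstain

    return top, sigma * NormalDist().inv_cdf(p_lower)


# Usage: a toy linear classifier whose decision boundary is at distance 1 from x.
pred, radius = certify(lambda z: int(z[0] > 0), [1.0, 0.0], sigma=0.5,
                       rng=np.random.default_rng(0))
```

A tighter confidence interval raises `p_lower` and therefore the certified radius, which is the lever this line of work acts on.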

Efficient Bound of Lipschitz Constant for Convolutional Layers by Gram Iteration

May 26, 2023

Abstract: Since the control of the Lipschitz constant has a great impact on the training stability, generalization, and robustness of neural networks, the estimation of this value is nowadays a real scientific challenge. In this paper, we introduce a precise, fast, and differentiable upper bound for the spectral norm of convolutional layers using circulant matrix theory and a new alternative to the Power iteration. Called the Gram iteration, our approach exhibits a superlinear convergence. First, we show through a comprehensive set of experiments that our approach outperforms other state-of-the-art methods in terms of precision, computational cost, and scalability. Then, we show that it is highly effective for the Lipschitz regularization of convolutional neural networks, with competitive results against competing approaches. Code is available at https://github.com/blaisedelattre/lip4conv.
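
The idea of replacing the Power iteration with repeated Gram products can be sketched for a plain dense real matrix. This is an illustrative reconstruction under stated assumptions, not the authors' code (which targets convolutional layers and is in the linked repository): each step replaces `G` by `G.T @ G`, which squares the singular values, and the Frobenius norm of the result, taken to the power `2**-n_iter`, upper-bounds the spectral norm. The function name `gram_iteration_bound` and the log-scale bookkeeping are choices made for this sketch.

```python
import numpy as np


def gram_iteration_bound(W, n_iter=6):
    """Upper bound on the spectral norm of a real matrix W (illustrative sketch)."""
    G = np.asarray(W, dtype=np.float64)
    log_scale = 0.0  # log of the factor removed by rescaling so far
    for _ in range(n_iter):
        fro = np.linalg.norm(G)  # Frobenius norm for a 2-D array
        if fro == 0.0:
            return 0.0  # zero matrix: spectral norm is 0
        # Rescale before squaring to avoid overflow; the Gram step squares the
        # removed factor as well, hence the doubling of the accumulated log.
        log_scale = 2.0 * (log_scale + np.log(fro))
        G = G / fro
        G = G.T @ G  # Gram step: singular values are squared
    fro = np.linalg.norm(G)
    # Frobenius norm >= spectral norm, so this is an upper bound after undoing
    # the rescaling and taking the 2**n_iter-th root.
    return float(np.exp((np.log(fro) + log_scale) / 2.0 ** n_iter))


# Usage: compare against the exact spectral norm from an SVD.
rng = np.random.default_rng(0)
W = rng.standard_normal((30, 20))
bound = gram_iteration_bound(W, n_iter=10)
exact = np.linalg.norm(W, 2)
```

Because the bound equals the Schatten norm of order `2**(n_iter + 1)` of `W`, it tightens very quickly with the number of iterations while remaining an upper bound at every step.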

End-to-end P300 BCI using Bayesian accumulation of Riemannian probabilities

Mar 15, 2022

Abstract: In brain-computer interfaces (BCI), most of the approaches based on event-related potentials (ERP) focus on the detection of P300, aiming for single-trial classification in a speller task. While this is an important objective, existing P300 BCIs still require several repetitions to achieve satisfactory classification accuracy. Signal processing and machine learning advances in P300 BCI mostly revolve around the P300 detection part, leaving character classification out of scope. To reduce the number of repetitions while maintaining good character classification, it is critical to embrace the full classification problem. We introduce an end-to-end pipeline that starts from feature extraction and is composed of an ERP-level classification using a probabilistic Riemannian MDM, which feeds a character-level classification using Bayesian accumulation of confidence across trials. Whereas existing approaches only increase the confidence of a character when it is flashed, our new pipeline, called Bayesian accumulation of Riemannian probabilities (ASAP), updates the confidence of each character after each flash. We provide the proper derivation and theoretical reformulation of this Bayesian approach for a seamless processing of information from signal to BCI characters. We demonstrate that our approach performs significantly better than standard methods on public P300 datasets.
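
The principle of updating every character after every flash can be illustrated with a generic Bayesian update. This is a hedged sketch, not the derivation from the paper: it assumes the ERP-level classifier outputs a probability `p_target` that the epoch contains a P300, and uses `p_target` and `1 - p_target` as stand-ins for the likelihoods under the "target" and "non-target" hypotheses. The function name `update_character_posterior` is hypothetical.

```python
def update_character_posterior(posterior, flashed, p_target):
    """One Bayesian update of the per-character posterior after a flash.

    posterior: dict mapping each character to its current probability.
    flashed:   set of characters highlighted during this flash.
    p_target:  classifier probability that the epoch is a target response
               (assumption: used here as a proxy for the likelihood).
    """
    eps = 1e-12
    p = min(max(p_target, eps), 1.0 - eps)  # keep probabilities strictly in (0, 1)
    # If character c were the target, the epoch is a target response exactly
    # when c was flashed; non-flashed characters are updated as well.
    unnorm = {c: prior * (p if c in flashed else 1.0 - p)
              for c, prior in posterior.items()}
    total = sum(unnorm.values())
    return {c: v / total for c, v in unnorm.items()}


# Usage: four characters, uniform prior, two flashes.
post = {c: 0.25 for c in "ABCD"}
post = update_character_posterior(post, {"A", "B"}, p_target=0.8)
post = update_character_posterior(post, {"A", "C"}, p_target=0.7)
best = max(post, key=post.get)
```

In this toy run, the characters that were not flashed lose probability mass when the classifier reports a likely target, which is what distinguishes this scheme from approaches that only reward flashed characters.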

Minimizing subject-dependent calibration for BCI with Riemannian transfer learning

Nov 23, 2021

Abstract: Calibration is still an important issue for user experience in Brain-Computer Interfaces (BCI). Common experimental designs often involve a lengthy training period that increases cognitive fatigue before the user even starts to use the BCI. Reducing or suppressing this subject-dependent calibration is possible by relying on advanced machine learning techniques, such as transfer learning. Building on Riemannian BCI, we present a simple and effective scheme to train a classifier on data recorded from different subjects, to reduce the calibration while preserving good performance. The main novelty of this paper is to propose a single approach that can be applied to very different paradigms. To demonstrate the robustness of this approach, we conducted a meta-analysis on multiple datasets for three BCI paradigms: event-related potentials (P300), motor imagery and SSVEP. Relying on the MOABB open source framework to ensure the reproducibility of the experiments and the statistical analysis, the results clearly show that the proposed approach can be applied to any kind of BCI paradigm and, in most cases, significantly improves classifier reliability. We point out some key features to further improve transfer learning methods.

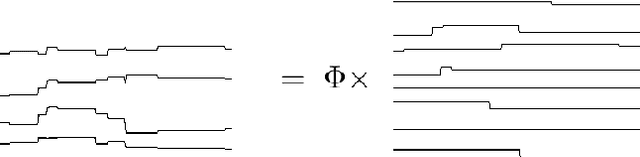

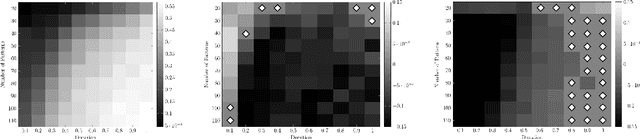

Multi-dimensional signal approximation with sparse structured priors using split Bregman iterations

Sep 29, 2016

Abstract: This paper addresses the structurally-constrained sparse decomposition of multi-dimensional signals onto overcomplete families of vectors, called dictionaries. The contribution of the paper is threefold. Firstly, a generic spatio-temporal regularization term is designed and used together with the standard $\ell_1$ regularization term to enforce a sparse decomposition preserving the spatio-temporal structure of the signal. Secondly, an optimization algorithm based on the split Bregman approach is proposed to handle the associated optimization problem, and its convergence is analyzed. Our well-founded approach yields the same accuracy as other state-of-the-art algorithms, with significant gains in terms of convergence speed. Thirdly, the empirical validation of the approach on artificial and real-world problems demonstrates the generality and effectiveness of the method. On artificial problems, the proposed regularization subsumes the Total Variation minimization and recovers the expected decomposition. On the real-world problem of electro-encephalography brainwave decomposition, the approach outperforms similar approaches in terms of P300 evoked potentials detection, using structured spatial priors to guide the decomposition.

Multi-dimensional sparse structured signal approximation using split Bregman iterations

Mar 10, 2015

Abstract: The paper focuses on the sparse approximation of signals using overcomplete representations, in a way that preserves the (prior) structure of multi-dimensional signals. The underlying optimization problem is tackled using a multi-dimensional split Bregman optimization approach. An extensive empirical evaluation shows how the proposed approach compares to the state of the art depending on the signal features.
