Quan Van Nguyen

UIT-DarkCow team at ImageCLEFmedical Caption 2024: Diagnostic Captioning for Radiology Images Efficiency with Transformer Models

May 28, 2024

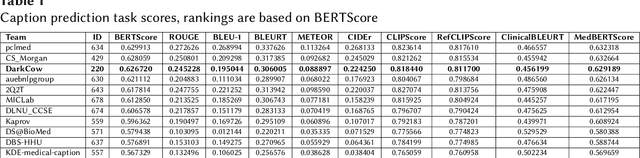

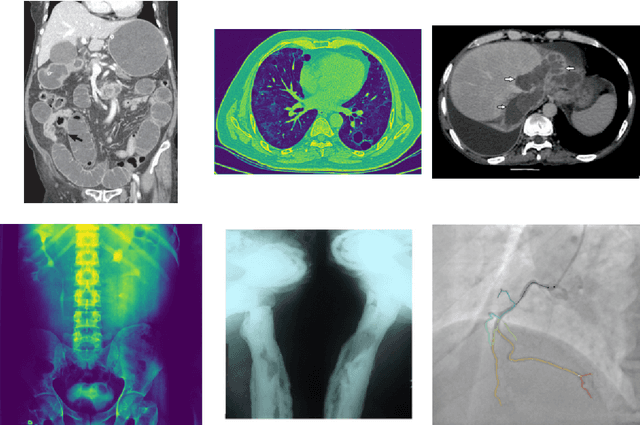

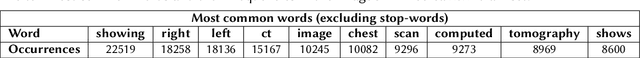

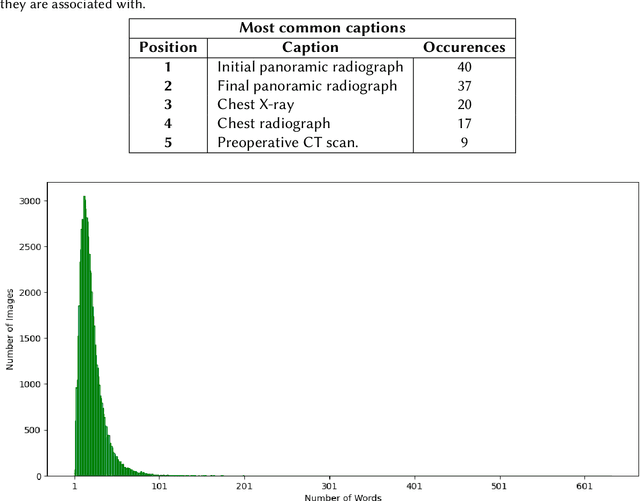

Abstract:Purpose: This study focuses on the development of automated text generation from radiology images, termed diagnostic captioning, to assist medical professionals in reducing clinical errors and improving productivity. The aim is to provide tools that enhance report quality and efficiency, which can significantly impact both clinical practice and deep learning research in the biomedical field. Methods: In our participation in the ImageCLEFmedical2024 Caption evaluation campaign, we explored caption prediction tasks using advanced Transformer-based models. We developed methods incorporating Transformer encoder-decoder and Query Transformer architectures. These models were trained and evaluated to generate diagnostic captions from radiology images. Results: Experimental evaluations demonstrated the effectiveness of our models, with the VisionDiagnostor-BioBART model achieving the highest BERTScore of 0.6267. This performance contributed to our team, DarkCow, achieving third place on the leaderboard. Conclusion: Our diagnostic captioning models show great promise in aiding medical professionals by generating high-quality reports efficiently. This approach can facilitate better data processing and performance optimization in medical imaging departments, ultimately benefiting healthcare delivery.

ViOCRVQA: Novel Benchmark Dataset and Vision Reader for Visual Question Answering by Understanding Vietnamese Text in Images

Apr 29, 2024

Abstract:Optical Character Recognition - Visual Question Answering (OCR-VQA) is the task of answering text information contained in images that have just been significantly developed in the English language in recent years. However, there are limited studies of this task in low-resource languages such as Vietnamese. To this end, we introduce a novel dataset, ViOCRVQA (Vietnamese Optical Character Recognition - Visual Question Answering dataset), consisting of 28,000+ images and 120,000+ question-answer pairs. In this dataset, all the images contain text and questions about the information relevant to the text in the images. We deploy ideas from state-of-the-art methods proposed for English to conduct experiments on our dataset, revealing the challenges and difficulties inherent in a Vietnamese dataset. Furthermore, we introduce a novel approach, called VisionReader, which achieved 0.4116 in EM and 0.6990 in the F1-score on the test set. Through the results, we found that the OCR system plays a very important role in VQA models on the ViOCRVQA dataset. In addition, the objects in the image also play a role in improving model performance. We open access to our dataset at link (https://github.com/qhnhynmm/ViOCRVQA.git) for further research in OCR-VQA task in Vietnamese.

ViTextVQA: A Large-Scale Visual Question Answering Dataset for Evaluating Vietnamese Text Comprehension in Images

Apr 16, 2024

Abstract:Visual Question Answering (VQA) is a complicated task that requires the capability of simultaneously processing natural language and images. Initially, this task was researched, focusing on methods to help machines understand objects and scene contexts in images. However, some text appearing in the image that carries explicit information about the full content of the image is not mentioned. Along with the continuous development of the AI era, there have been many studies on the reading comprehension ability of VQA models in the world. As a developing country, conditions are still limited, and this task is still open in Vietnam. Therefore, we introduce the first large-scale dataset in Vietnamese specializing in the ability to understand text appearing in images, we call it ViTextVQA (\textbf{Vi}etnamese \textbf{Text}-based \textbf{V}isual \textbf{Q}uestion \textbf{A}nswering dataset) which contains \textbf{over 16,000} images and \textbf{over 50,000} questions with answers. Through meticulous experiments with various state-of-the-art models, we uncover the significance of the order in which tokens in OCR text are processed and selected to formulate answers. This finding helped us significantly improve the performance of the baseline models on the ViTextVQA dataset. Our dataset is available at this \href{https://github.com/minhquan6203/ViTextVQA-Dataset}{link} for research purposes.

LAPFormer: A Light and Accurate Polyp Segmentation Transformer

Oct 10, 2022

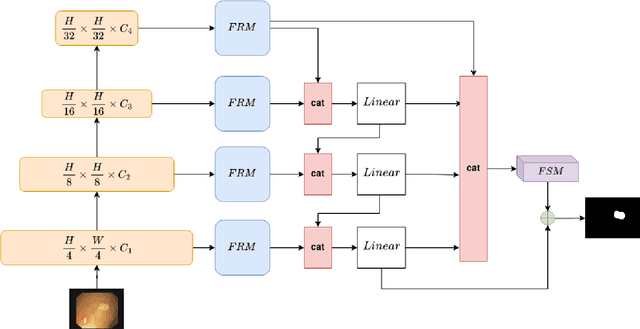

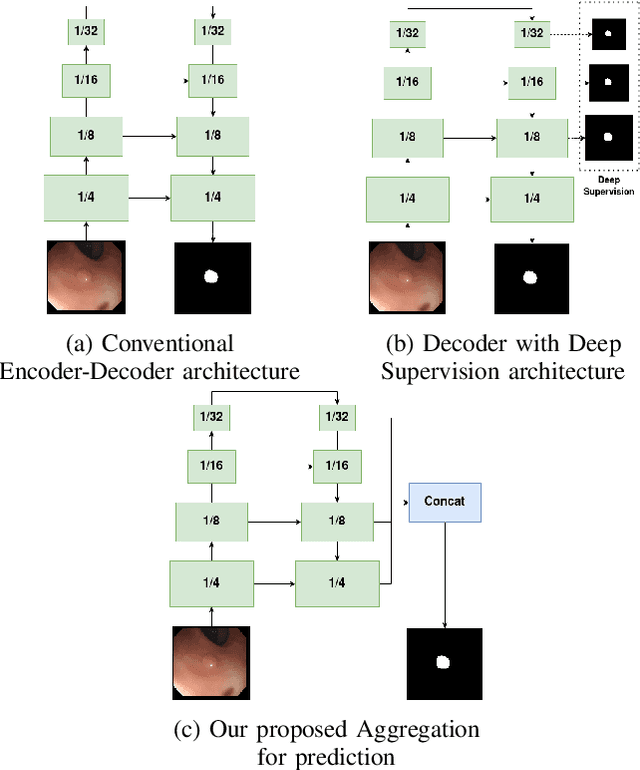

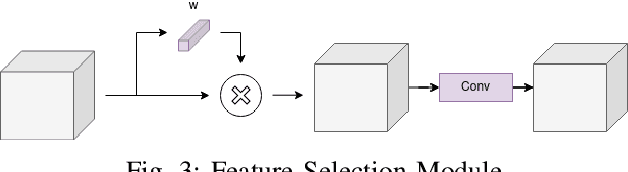

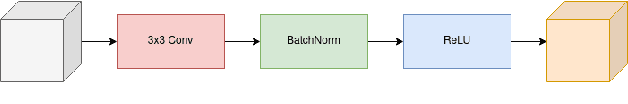

Abstract:Polyp segmentation is still known as a difficult problem due to the large variety of polyp shapes, scanning and labeling modalities. This prevents deep learning model to generalize well on unseen data. However, Transformer-based approach recently has achieved some remarkable results on performance with the ability of extracting global context better than CNN-based architecture and yet lead to better generalization. To leverage this strength of Transformer, we propose a new model with encoder-decoder architecture named LAPFormer, which uses a hierarchical Transformer encoder to better extract global feature and combine with our novel CNN (Convolutional Neural Network) decoder for capturing local appearance of the polyps. Our proposed decoder contains a progressive feature fusion module designed for fusing feature from upper scales and lower scales and enable multi-scale features to be more correlative. Besides, we also use feature refinement module and feature selection module for processing feature. We test our model on five popular benchmark datasets for polyp segmentation, including Kvasir, CVC-Clinic DB, CVC-ColonDB, CVC-T, and ETIS-Larib

Online pseudo labeling for polyp segmentation with momentum networks

Sep 29, 2022

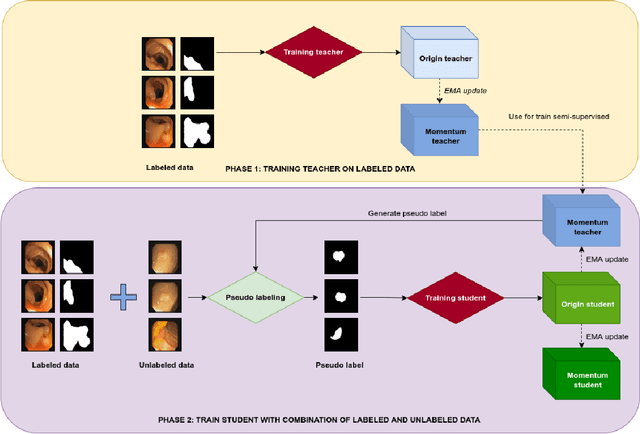

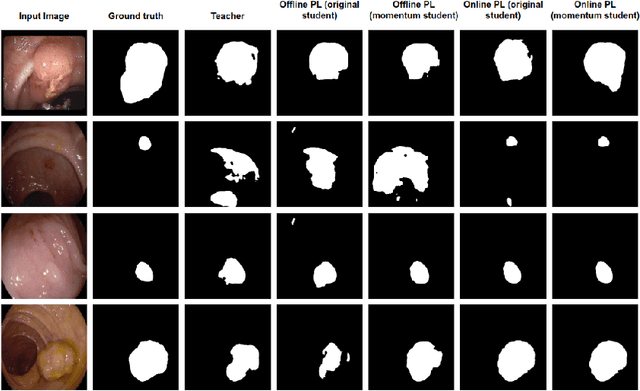

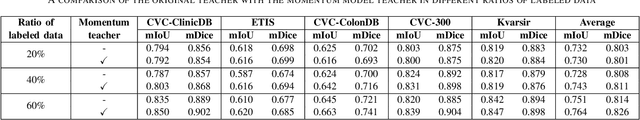

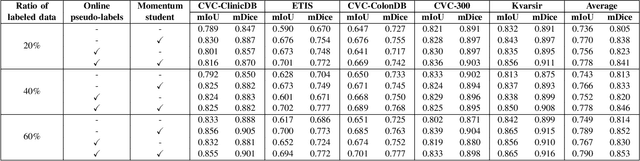

Abstract:Semantic segmentation is an essential task in developing medical image diagnosis systems. However, building an annotated medical dataset is expensive. Thus, semi-supervised methods are significant in this circumstance. In semi-supervised learning, the quality of labels plays a crucial role in model performance. In this work, we present a new pseudo labeling strategy that enhances the quality of pseudo labels used for training student networks. We follow the multi-stage semi-supervised training approach, which trains a teacher model on a labeled dataset and then uses the trained teacher to render pseudo labels for student training. By doing so, the pseudo labels will be updated and more precise as training progress. The key difference between previous and our methods is that we update the teacher model during the student training process. So the quality of pseudo labels is improved during the student training process. We also propose a simple but effective strategy to enhance the quality of pseudo labels using a momentum model -- a slow copy version of the original model during training. By applying the momentum model combined with re-rendering pseudo labels during student training, we achieved an average of 84.1% Dice Score on five datasets (i.e., Kvarsir, CVC-ClinicDB, ETIS-LaribPolypDB, CVC-ColonDB, and CVC-300) with only 20% of the dataset used as labeled data. Our results surpass common practice by 3% and even approach fully-supervised results on some datasets. Our source code and pre-trained models are available at https://github.com/sun-asterisk-research/online learning ssl

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge