Mai Nguyen

Improving Reliability and Explainability of Medical Question Answering through Atomic Fact Checking in Retrieval-Augmented LLMs

May 30, 2025Abstract:Large language models (LLMs) exhibit extensive medical knowledge but are prone to hallucinations and inaccurate citations, which pose a challenge to their clinical adoption and regulatory compliance. Current methods, such as Retrieval Augmented Generation, partially address these issues by grounding answers in source documents, but hallucinations and low fact-level explainability persist. In this work, we introduce a novel atomic fact-checking framework designed to enhance the reliability and explainability of LLMs used in medical long-form question answering. This method decomposes LLM-generated responses into discrete, verifiable units called atomic facts, each of which is independently verified against an authoritative knowledge base of medical guidelines. This approach enables targeted correction of errors and direct tracing to source literature, thereby improving the factual accuracy and explainability of medical Q&A. Extensive evaluation using multi-reader assessments by medical experts and an automated open Q&A benchmark demonstrated significant improvements in factual accuracy and explainability. Our framework achieved up to a 40% overall answer improvement and a 50% hallucination detection rate. The ability to trace each atomic fact back to the most relevant chunks from the database provides a granular, transparent explanation of the generated responses, addressing a major gap in current medical AI applications. This work represents a crucial step towards more trustworthy and reliable clinical applications of LLMs, addressing key prerequisites for clinical application and fostering greater confidence in AI-assisted healthcare.

LAPFormer: A Light and Accurate Polyp Segmentation Transformer

Oct 10, 2022

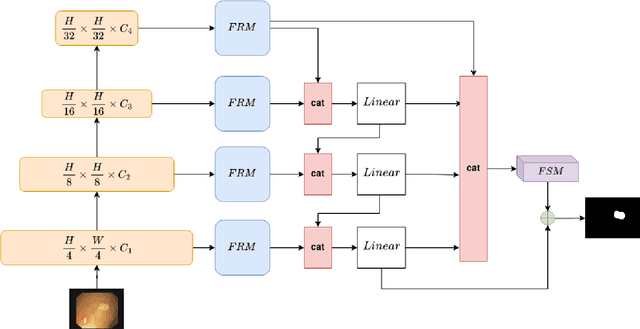

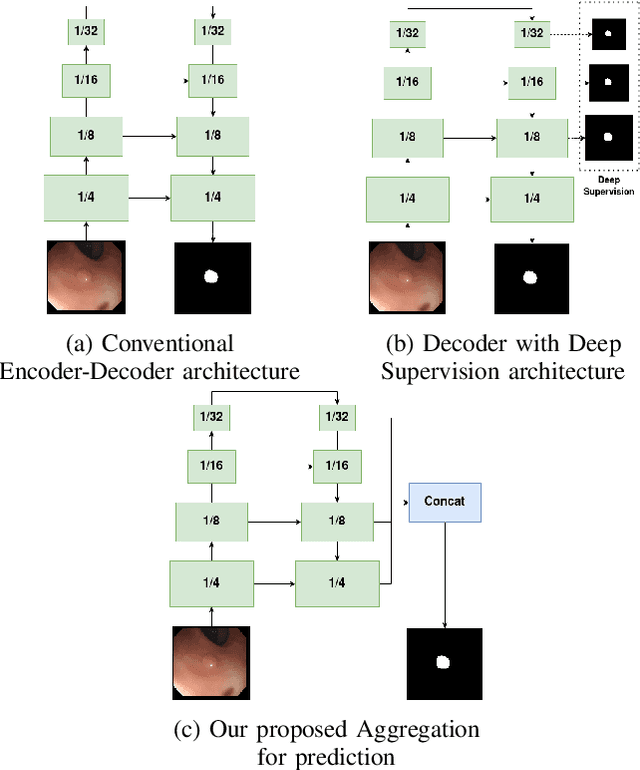

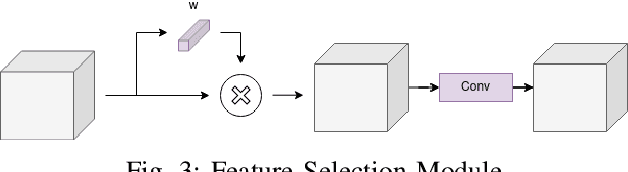

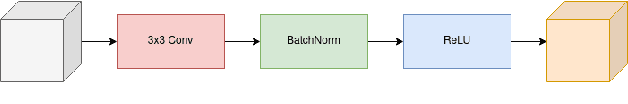

Abstract:Polyp segmentation is still known as a difficult problem due to the large variety of polyp shapes, scanning and labeling modalities. This prevents deep learning model to generalize well on unseen data. However, Transformer-based approach recently has achieved some remarkable results on performance with the ability of extracting global context better than CNN-based architecture and yet lead to better generalization. To leverage this strength of Transformer, we propose a new model with encoder-decoder architecture named LAPFormer, which uses a hierarchical Transformer encoder to better extract global feature and combine with our novel CNN (Convolutional Neural Network) decoder for capturing local appearance of the polyps. Our proposed decoder contains a progressive feature fusion module designed for fusing feature from upper scales and lower scales and enable multi-scale features to be more correlative. Besides, we also use feature refinement module and feature selection module for processing feature. We test our model on five popular benchmark datasets for polyp segmentation, including Kvasir, CVC-Clinic DB, CVC-ColonDB, CVC-T, and ETIS-Larib

Modular Resource Centric Learning for Workflow Performance Prediction

Apr 17, 2018

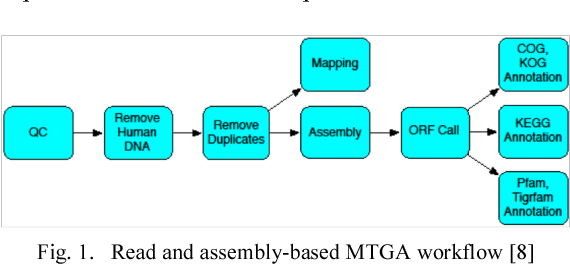

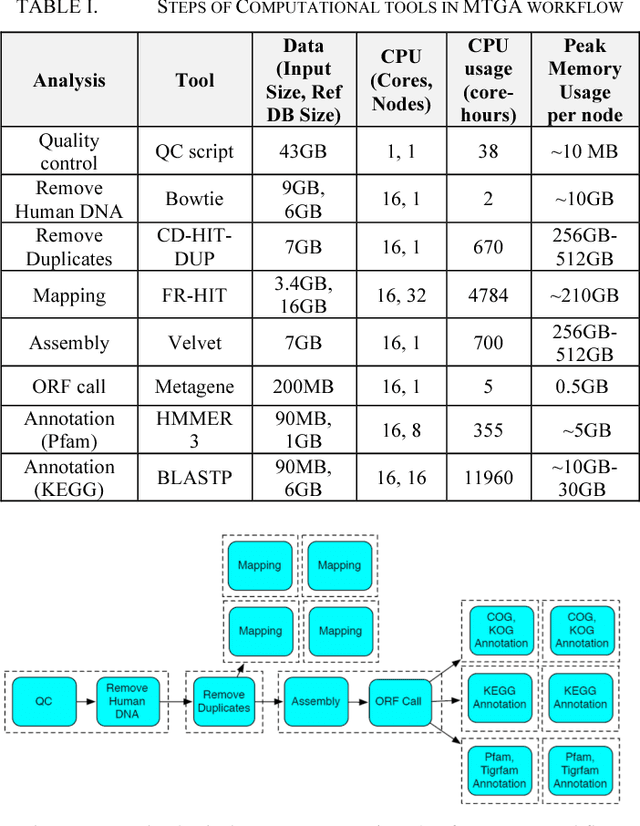

Abstract:Workflows provide an expressive programming model for fine-grained control of large-scale applications in distributed computing environments. Accurate estimates of complex workflow execution metrics on large-scale machines have several key advantages. The performance of scheduling algorithms that rely on estimates of execution metrics degrades when the accuracy of predicted execution metrics decreases. This in-progress paper presents a technique being developed to improve the accuracy of predicted performance metrics of large-scale workflows on distributed platforms. The central idea of this work is to train resource-centric machine learning agents to capture complex relationships between a set of program instructions and their performance metrics when executed on a specific resource. This resource-centric view of a workflow exploits the fact that predicting execution times of sub-modules of a workflow requires monitoring and modeling of a few dynamic and static features. We transform the input workflow that is essentially a directed acyclic graph of actions into a Physical Resource Execution Plan (PREP). This transformation enables us to model an arbitrarily complex workflow as a set of simpler programs running on physical nodes. We delegate a machine learning model to capture performance metrics for each resource type when it executes different program instructions under varying degrees of resource contention. Our algorithm takes the prediction metrics from each resource agent and composes the overall workflow performance metrics by utilizing the structure of the corresponding Physical Resource Execution Plan.

Translating Answer-Set Programs into Bit-Vector Logic

Aug 30, 2011

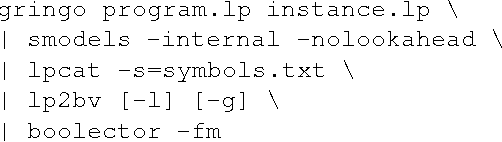

Abstract:Answer set programming (ASP) is a paradigm for declarative problem solving where problems are first formalized as rule sets, i.e., answer-set programs, in a uniform way and then solved by computing answer sets for programs. The satisfiability modulo theories (SMT) framework follows a similar modelling philosophy but the syntax is based on extensions of propositional logic rather than rules. Quite recently, a translation from answer-set programs into difference logic was provided---enabling the use of particular SMT solvers for the computation of answer sets. In this paper, the translation is revised for another SMT fragment, namely that based on fixed-width bit-vector theories. Thus, even further SMT solvers can be harnessed for the task of computing answer sets. The results of a preliminary experimental comparison are also reported. They suggest a level of performance which is similar to that achieved via difference logic.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge