Qinming Zhang

Deep Random Forest with Ferroelectric Analog Content Addressable Memory

Oct 06, 2021

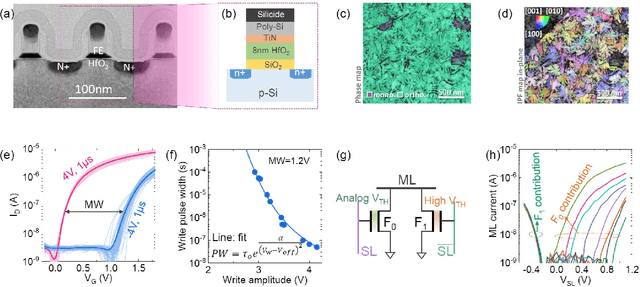

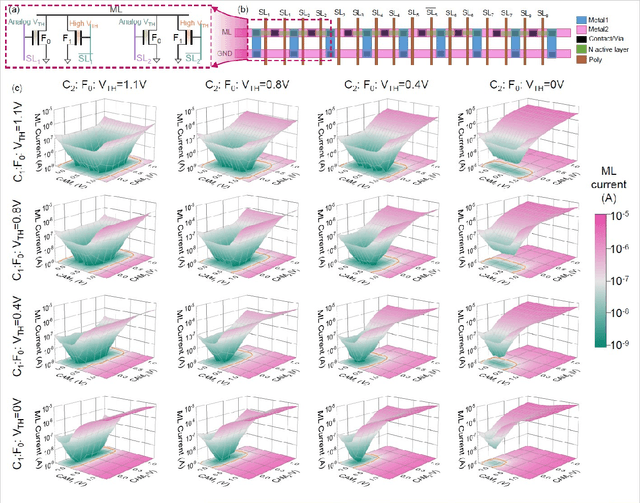

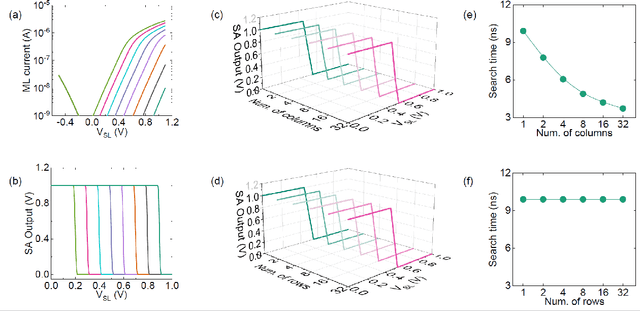

Abstract: Deep random forest (DRF), which incorporates the core features of deep learning and random forest (RF), achieves classification accuracy comparable to that of deep neural networks (DNNs) while offering interpretability and low memory and computational overhead in various information processing tasks for edge intelligence. However, the development of efficient hardware to accelerate DRF lags behind that of its DNN counterparts. The key to hardware acceleration of DRF lies in efficiently realizing the branch-split operation at decision nodes when traversing a decision tree. In this work, we propose to implement DRF through simple associative searches realized with ferroelectric analog content addressable memory (ACAM). Using only two ferroelectric field-effect transistors (FeFETs), the ultra-compact ACAM cell performs a branch-split operation as an energy-efficient associative search by storing the decision boundaries as analog polarization states in the FeFETs. The DRF accelerator architecture and the corresponding mapping of the DRF model to the ACAM arrays are presented. The functionality, characteristics, and scalability of the FeFET ACAM based DRF, and its robustness against FeFET device non-idealities, are validated in both experiments and simulations. Evaluation results show that the FeFET ACAM DRF accelerator exhibits 10^6×/16× and 10^6×/2.5× improvements in energy and latency, respectively, when compared with other deep random forest hardware implementations on state-of-the-art CPU/ReRAM platforms.
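The idea of replacing tree traversal with an associative search can be illustrated in software. The following is a minimal sketch, not the mapping used in the paper: it assumes each root-to-leaf path becomes one ACAM row that stores a `(lower, upper]` interval per feature, and that a query matches a row when every feature value falls inside its interval. The names `Node`, `tree_to_rows`, and `acam_search` are hypothetical, and the loop over rows only emulates what the hardware would evaluate in parallel.

```python
import math
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class Node:
    """A binary decision-tree node (hypothetical structure for this sketch)."""
    feature: Optional[int] = None      # index of the feature tested at this node
    threshold: Optional[float] = None  # go left when x[feature] <= threshold
    left: Optional["Node"] = None
    right: Optional["Node"] = None
    label: Optional[int] = None        # set only on leaves


# One row = per-feature (lower, upper] intervals plus the leaf's class label.
Row = Tuple[List[Tuple[float, float]], int]


def tree_to_rows(node: Node, n_features: int, bounds=None) -> List[Row]:
    """Flatten every root-to-leaf path into one row of analog ranges."""
    if bounds is None:
        bounds = [(-math.inf, math.inf)] * n_features
    if node.label is not None:
        return [(list(bounds), node.label)]
    lo, hi = bounds[node.feature]
    left_bounds = list(bounds)
    left_bounds[node.feature] = (lo, min(hi, node.threshold))
    right_bounds = list(bounds)
    right_bounds[node.feature] = (max(lo, node.threshold), hi)
    return (tree_to_rows(node.left, n_features, left_bounds)
            + tree_to_rows(node.right, n_features, right_bounds))


def acam_search(rows: List[Row], x: List[float]) -> Optional[int]:
    """Return the label of the row whose intervals all contain the query."""
    for bounds, label in rows:
        if all(lo < v <= hi for (lo, hi), v in zip(bounds, x)):
            return label
    return None


# Toy tree: split on feature 0 at 0.5, then on feature 1 at 2.0.
tree = Node(feature=0, threshold=0.5,
            left=Node(label=0),
            right=Node(feature=1, threshold=2.0,
                       left=Node(label=1),
                       right=Node(label=2)))
rows = tree_to_rows(tree, n_features=2)
```

In this toy case the three leaves yield three rows, and `acam_search(rows, [0.7, 1.0])` matches only the second one. Because the paths of a decision tree partition the input space, exactly one row matches any query, which is what allows a single search to stand in for a sequence of branch-split comparisons.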

Cross-denoising Network against Corrupted Labels in Medical Image Segmentation with Domain Shift

Jun 19, 2020

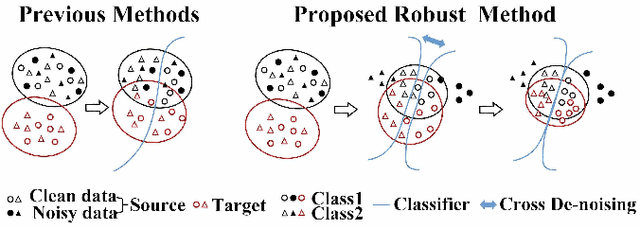

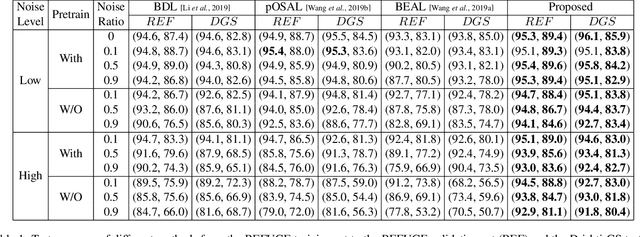

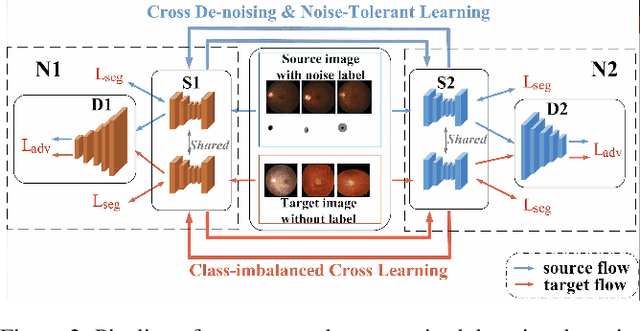

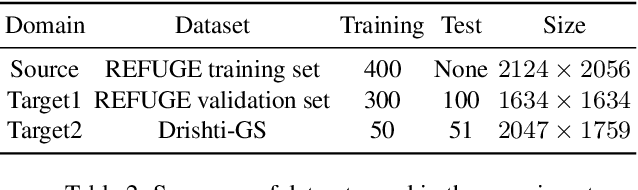

Abstract: Deep convolutional neural networks (DCNNs) have contributed many breakthroughs in segmentation tasks, especially in the field of medical imaging. However, *domain shift* and *corrupted annotations*, two common problems in medical imaging, dramatically degrade the performance of DCNNs in practice. In this paper, we propose a novel robust cross-denoising framework that uses two peer networks and a peer-review strategy to address the domain shift and corrupted label problems. Specifically, each network acts as a mentor to the other: to combat corrupted labels, each learns from reliable samples selected by its peer. In addition, a noise-tolerant loss is proposed to encourage the network to capture the key location and filter out the discrepancy under various noise-contaminated labels. To further reduce the accumulated error, we introduce class-imbalanced cross learning that uses the most confident predictions at the class level. Experimental results on the REFUGE and Drishti-GS datasets for optic disc (OD) and optic cup (OC) segmentation demonstrate the superior performance of our proposed approach over state-of-the-art methods.
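The peer-review step, in which each network learns from samples its peer considers reliable, can be sketched as follows. The abstract does not state the selection rule, so this sketch assumes a small-loss criterion (samples with the lowest per-sample loss are treated as reliable), as is common in co-teaching-style training. The function names and the `keep_ratio` parameter are hypothetical.

```python
from typing import List, Tuple


def select_reliable(losses: List[float], keep_ratio: float) -> List[int]:
    """Indices of the lowest-loss samples (assumed proxy for clean labels)."""
    k = max(1, int(len(losses) * keep_ratio))
    order = sorted(range(len(losses)), key=lambda i: losses[i])
    return sorted(order[:k])


def cross_select(losses_a: List[float], losses_b: List[float],
                 keep_ratio: float) -> Tuple[List[int], List[int]]:
    """Each network picks the samples its peer will be trained on."""
    for_b = select_reliable(losses_a, keep_ratio)  # network A selects for B
    for_a = select_reliable(losses_b, keep_ratio)  # network B selects for A
    return for_a, for_b


# Per-sample losses of the two peer networks on the same mini-batch.
losses_a = [0.1, 2.0, 0.3, 0.9]
losses_b = [0.2, 0.1, 1.5, 0.4]
for_a, for_b = cross_select(losses_a, losses_b, keep_ratio=0.5)
```

Here network A would be updated on samples `for_a` and network B on `for_b`. Exchanging the selections, rather than letting each network trust its own, is meant to keep one network's errors from reinforcing themselves.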
