Qihe Huang

PHAT: Modeling Period Heterogeneity for Multivariate Time Series Forecasting

Jan 31, 2026

Abstract: While existing multivariate time series forecasting models have advanced significantly in modeling periodicity, they largely neglect the periodic heterogeneity common in real-world data, where variates exhibit distinct and dynamically changing periods. To effectively capture this periodic heterogeneity, we propose PHAT (Period Heterogeneity-Aware Transformer). Specifically, PHAT arranges multivariate inputs into a three-dimensional "periodic bucket" tensor, where the dimensions correspond to variate group characteristics with similar periodicity, time steps aligned by phase, and offsets within the period. By restricting interactions within buckets and masking cross-bucket connections, PHAT effectively avoids interference from inconsistent periods. We also propose a positive-negative attention mechanism, which captures periodic dependencies from two perspectives: periodic alignment and periodic deviation. Additionally, the periodic alignment attention scores are decomposed into positive and negative components, with a modulation term encoding periodic priors. This modulation constrains the attention mechanism to more faithfully reflect the underlying periodic trends. A mathematical explanation is provided to support this property. We evaluate PHAT comprehensively on 14 real-world datasets against 18 baselines, and the results show that it significantly outperforms existing methods, achieving highly competitive forecasting performance. Our source code is available on GitHub.
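The "periodic bucket" arrangement described in the abstract can be pictured with a small sketch. This is only an illustration under stated assumptions, not the PHAT implementation: it assumes each variate's period is a known integer, and the function names `fold_by_period` and `build_buckets` are hypothetical. Variates sharing a period land in the same bucket, and each variate is folded so that rows are cycles (time steps aligned by phase) and columns are offsets within the period.

```python
from collections import defaultdict


def fold_by_period(series, period):
    """Fold a 1-D series into rows of one period each.

    Row c holds cycle c; column o holds offset o within the period.
    A trailing partial cycle is dropped.
    """
    n_cycles = len(series) // period
    return [series[c * period:(c + 1) * period] for c in range(n_cycles)]


def build_buckets(variates, periods):
    """Group variates by their (assumed known) period and fold each one.

    Returns {period: [folded_variate, ...]}, so each bucket is indexed as
    bucket[variate][cycle][offset].
    """
    buckets = defaultdict(list)
    for series, period in zip(variates, periods):
        buckets[period].append(fold_by_period(series, period))
    return dict(buckets)


# Toy data: two variates with period 3 and one with period 2.
variates = [
    [1, 2, 3, 1, 2, 3],
    [5, 6, 5, 6, 5, 6],
    [7, 8, 9, 7, 8, 9],
]
periods = [3, 2, 3]
buckets = build_buckets(variates, periods)
```

In this toy layout, restricting interactions to a single bucket means the period-3 variates never attend to the period-2 variate. How PHAT detects periods and handles periods that change over time is not covered by this sketch.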

To See Far, Look Close: Evolutionary Forecasting for Long-term Time Series

Jan 30, 2026

Abstract: The prevailing Direct Forecasting (DF) paradigm dominates Long-term Time Series Forecasting (LTSF) by forcing models to predict the entire future horizon in a single forward pass. While efficient, this rigid coupling of output and evaluation horizons necessitates computationally prohibitive re-training for every target horizon. In this work, we uncover a counter-intuitive optimization anomaly: models trained on short horizons, when coupled with our proposed Evolutionary Forecasting (EF) paradigm, significantly outperform those trained directly on long horizons. We attribute this success to the mitigation of a fundamental optimization pathology inherent in DF, where conflicting gradients from distant futures cripple the learning of local dynamics. We establish EF as a unified generative framework, proving that DF is merely a degenerate special case of EF. Extensive experiments demonstrate that a single EF model surpasses task-specific DF ensembles across standard benchmarks and exhibits robust asymptotic stability in extreme extrapolation. This work propels a paradigm shift in LTSF: moving from passive Static Mapping to autonomous Evolutionary Reasoning.
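The abstract does not spell out the EF procedure. One common way to reuse a short-horizon model for a long horizon is an autoregressive rollout, sketched below purely as an assumption about the general idea. The names `rollout` and `repeat_last_delta` are hypothetical, and the toy model is a stand-in rather than anything from the paper.

```python
def rollout(short_model, history, horizon, step):
    """Forecast `horizon` points by repeatedly applying a short-horizon model.

    Each pass keeps the first `step` predicted points, appends them to the
    window, and predicts again from the most recent context.
    """
    context_len = len(history)
    window = list(history)
    forecast = []
    while len(forecast) < horizon:
        block = short_model(window[-context_len:])[:step]
        forecast.extend(block)
        window.extend(block)
    return forecast[:horizon]


def repeat_last_delta(context, out_len=4):
    """Toy stand-in model: linearly extrapolate the last observed difference."""
    delta = context[-1] - context[-2]
    return [context[-1] + delta * (i + 1) for i in range(out_len)]


# Ten future points from a model that only predicts four points at a time.
prediction = rollout(repeat_last_delta, [1, 2, 3, 4], horizon=10, step=4)
```

In this sketch, a model whose output length already covers the whole horizon finishes in a single pass, which is loosely analogous to the abstract's remark that DF is a special case of EF.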

Talk2Image: A Multi-Agent System for Multi-Turn Image Generation and Editing

Aug 09, 2025

Abstract: Text-to-image generation tasks have driven remarkable advances in diverse media applications, yet most focus on single-turn scenarios and struggle with iterative, multi-turn creative tasks. Recent dialogue-based systems attempt to bridge this gap, but their single-agent, sequential paradigm often causes intention drift and incoherent edits. To address these limitations, we present Talk2Image, a novel multi-agent system for interactive image generation and editing in multi-turn dialogue scenarios. Our approach integrates three key components: intention parsing from dialogue history, task decomposition and collaborative execution across specialized agents, and feedback-driven refinement based on a multi-view evaluation mechanism. Talk2Image enables step-by-step alignment with user intention and consistent image editing. Experiments demonstrate that Talk2Image outperforms existing baselines in controllability, coherence, and user satisfaction across iterative image generation and editing tasks.

QuiZSF: An efficient data-model interaction framework for zero-shot time-series forecasting

Aug 09, 2025

Abstract: Time series forecasting has become increasingly important to empower diverse applications with streaming data. Zero-shot time-series forecasting (ZSF), particularly valuable in data-scarce scenarios such as domain transfer or forecasting under extreme conditions, is difficult for traditional models to handle. While time series pre-trained models (TSPMs) have demonstrated strong performance in ZSF, they often lack mechanisms to dynamically incorporate external knowledge. Fortunately, emerging retrieval-augmented generation (RAG) offers a promising path for injecting such knowledge on demand, yet it is rarely integrated with TSPMs. To leverage the strengths of both worlds, we introduce RAG into TSPMs to enhance zero-shot time series forecasting. In this paper, we propose QuiZSF (Quick Zero-Shot Time Series Forecaster), a lightweight and modular framework that couples efficient retrieval with representation learning and model adaptation for ZSF. Specifically, we construct a hierarchical tree-structured ChronoRAG Base (CRB) for scalable time-series storage and domain-aware retrieval, introduce a Multi-grained Series Interaction Learner (MSIL) to extract fine- and coarse-grained relational features, and develop a dual-branch Model Cooperation Coherer (MCC) that aligns retrieved knowledge with two kinds of TSPMs: Non-LLM based and LLM based. Compared with contemporary baselines, QuiZSF, with Non-LLM based and LLM based TSPMs as the base model, respectively, ranks Top-1 in 75% and 87.5% of prediction settings, while maintaining high efficiency in memory and inference time.

SynEVO: A neuro-inspired spatiotemporal evolutional framework for cross-domain adaptation

May 21, 2025

Abstract: Discovering regularities from spatiotemporal systems can benefit various scientific and social planning. Current spatiotemporal learners usually train an independent model on data from a specific source, which leads to limited transferability among sources, where even correlated tasks require new design and training. The key towards increasing cross-domain knowledge is to enable collective intelligence and model evolution. In this paper, inspired by neuroscience theories, we theoretically derive the increased information boundary via learning cross-domain collective intelligence and propose a Synaptic EVOlutional spatiotemporal network, SynEVO, which breaks the model independence and enables cross-domain knowledge to be shared and aggregated. Specifically, we first re-order the sample groups to imitate the human curriculum learning, and devise two complementary learners, an elastic common container and a task-independent extractor, to allow model growth and task-wise commonality and personality disentanglement. Then an adaptive dynamic coupler with a new difference metric determines whether the new sample group should be incorporated into the common container to achieve model evolution under various domains. Experiments show that SynEVO improves the generalization capacity by up to 42% under cross-domain scenarios, and that SynEVO provides a paradigm of NeuroAI for knowledge transfer and adaptation.

Get Rid of Task Isolation: A Continuous Multi-task Spatio-Temporal Learning Framework

Oct 14, 2024

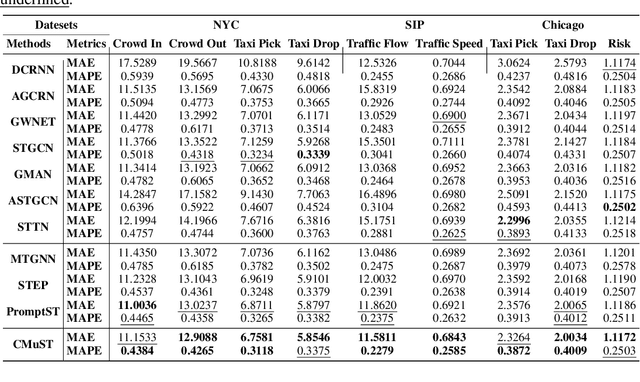

Abstract: Spatiotemporal learning has become a pivotal technique to enable urban intelligence. Traditional spatiotemporal models mostly focus on a specific task by assuming the same distribution between training and testing sets. However, given that urban systems are usually dynamic and multi-sourced with imbalanced data distributions, current task-specific models fail to generalize to new urban conditions and adapt to new domains without explicitly modeling interdependencies across various dimensions and types of urban data. To this end, we argue that it is essential to propose a Continuous Multi-task Spatio-Temporal learning framework (CMuST) to empower collective urban intelligence, which reforms urban spatiotemporal learning from single-domain to cooperatively multi-dimensional and multi-task learning. Specifically, CMuST proposes a new multi-dimensional spatiotemporal interaction network (MSTI) to allow cross-interactions between context and main observations as well as self-interactions within spatial and temporal aspects to be exposed, which is also the core for capturing task-level commonality and personalization. To ensure continuous task learning, a novel Rolling Adaptation training scheme (RoAda) is devised, which not only preserves task uniqueness by constructing data summarization-driven task prompts, but also harnesses correlated patterns among tasks by iterative model behavior modeling. We further establish a benchmark of three cities for multi-task spatiotemporal learning, and empirically demonstrate the superiority of CMuST via extensive evaluations on these datasets. CMuST achieves impressive improvements over existing SOTA methods on both few-shot streaming data and new domain tasks. Code is available at https://github.com/DILab-USTCSZ/CMuST.

FairSTG: Countering performance heterogeneity via collaborative sample-level optimization

Mar 19, 2024

Abstract: Spatiotemporal learning plays a crucial role in mobile computing techniques to empower smart cities. While existing research has made great efforts to achieve accurate predictions on the overall dataset, it still neglects the significant performance heterogeneity across samples. In this work, we designate the performance heterogeneity as the reason for unfair spatiotemporal learning, which not only degrades the practical functions of models, but also brings serious potential risks to real-world urban applications. To close this gap, we propose a model-independent Fairness-aware framework for SpatioTemporal Graph learning (FairSTG), which inherits the idea of exploiting the advantages of well-learned samples to help challenging ones with collaborative mix-up. Specifically, FairSTG consists of a spatiotemporal feature extractor for model initialization, a collaborative representation enhancement for knowledge transfer between well-learned samples and challenging ones, and fairness objectives for immediately suppressing sample-level performance heterogeneity. Experiments on four spatiotemporal datasets demonstrate that our FairSTG significantly improves the fairness quality while maintaining comparable forecasting accuracy. Case studies show FairSTG can counter both spatial and temporal performance heterogeneity by our sample-level retrieval and compensation, and our work can potentially alleviate the risks on spatiotemporal resource allocation for underrepresented urban regions.

ComS2T: A complementary spatiotemporal learning system for data-adaptive model evolution

Mar 04, 2024

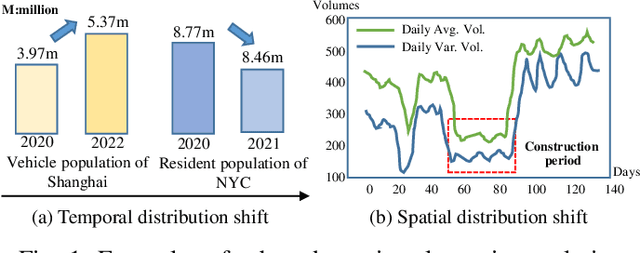

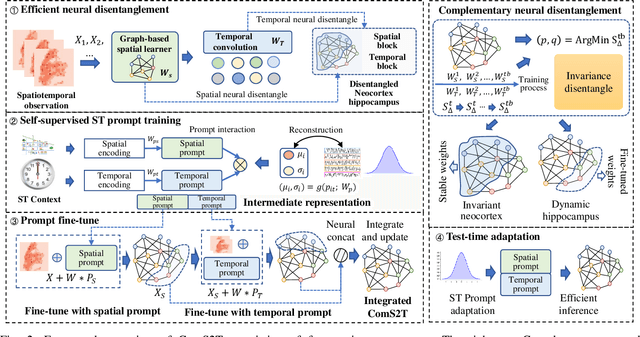

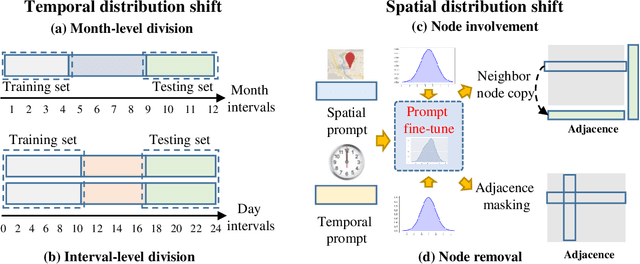

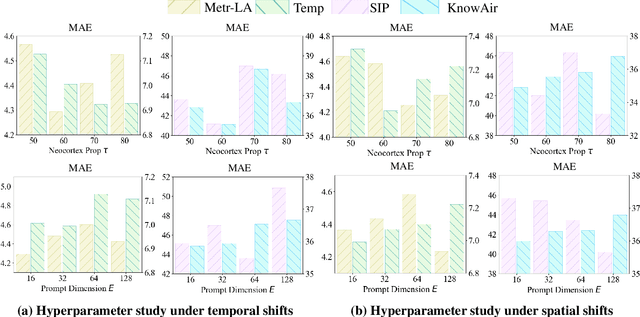

Abstract: Spatiotemporal (ST) learning has become a crucial technique to enable smart cities and sustainable urban development. Current ST learning models capture the heterogeneity via various spatial convolution and temporal evolution blocks. However, rapid urbanization leads to fluctuating distributions in urban data and city structures over short periods, resulting in existing methods suffering from generalization and data adaptation issues. Despite efforts, existing methods fail to deal with newly arrived observations, and those methods with generalization capacity are limited in repeated training. Motivated by complementary learning in neuroscience, we introduce a prompt-based complementary spatiotemporal learning system, termed ComS2T, to empower the evolution of models for data adaptation. ComS2T partitions the neural architecture into a stable neocortex for consolidating historical memory and a dynamic hippocampus for updating new knowledge. We first disentangle two disjoint structures into stable and dynamic weights, and then train spatial and temporal prompts by characterizing the distribution of main observations to make the prompts adaptive to new data. This data-adaptive prompt mechanism, combined with a two-stage training process, facilitates fine-tuning of the neural architecture conditioned on prompts, thereby enabling efficient adaptation during testing. Extensive experiments validate the efficacy of ComS2T in adapting to various spatiotemporal out-of-distribution scenarios while maintaining efficient inference capabilities.
