ComS2T: A complementary spatiotemporal learning system for data-adaptive model evolution

Mar 04, 2024

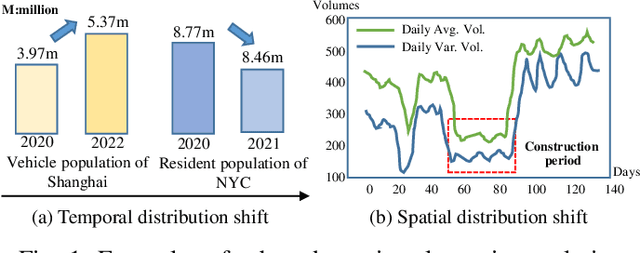

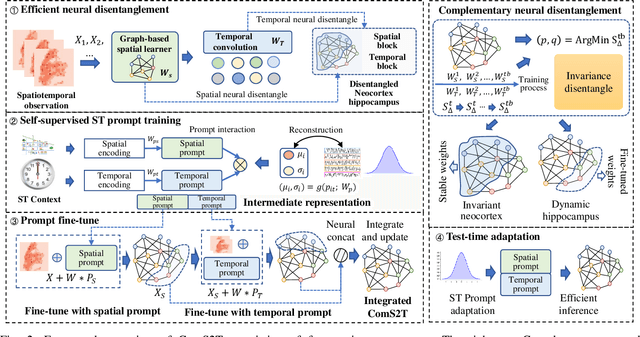

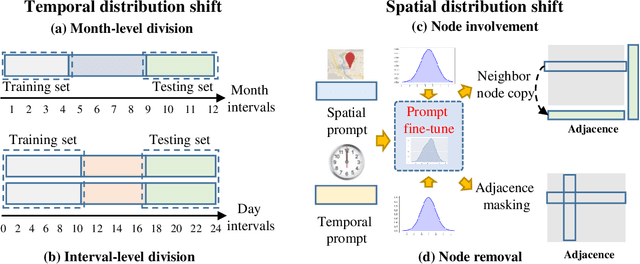

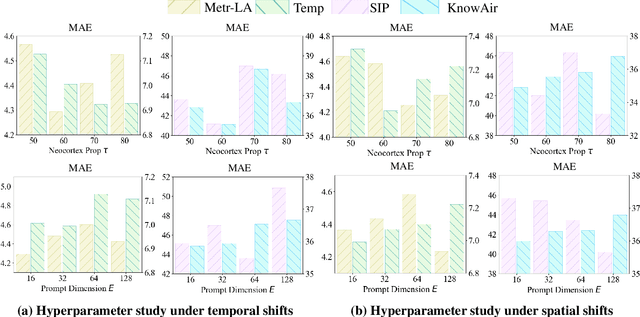

Spatiotemporal (ST) learning has become a crucial technique for enabling smart cities and sustainable urban development. Current ST learning models capture heterogeneity via various spatial convolution and temporal evolution blocks. However, rapid urbanization causes the distributions of urban data and city structures to fluctuate over short periods, so existing methods suffer from generalization and data adaptation issues. Despite prior efforts, existing methods fail to handle newly arrived observations, and those with generalization capacity are limited by repeated training. Motivated by complementary learning in neuroscience, we introduce a prompt-based complementary spatiotemporal learning system, termed ComS2T, to empower the evolution of models for data adaptation. ComS2T partitions the neural architecture into a stable neocortex for consolidating historical memory and a dynamic hippocampus for updating new knowledge. We first disentangle two disjoint structures into stable and dynamic weights, and then train spatial and temporal prompts by characterizing the distribution of main observations, so that the prompts adapt to new data. This data-adaptive prompt mechanism, combined with a two-stage training process, facilitates fine-tuning of the neural architecture conditioned on prompts, thereby enabling efficient adaptation during testing. Extensive experiments validate the efficacy of ComS2T in adapting to various spatiotemporal out-of-distribution scenarios while maintaining efficient inference.
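The stable/dynamic split and two-stage training described above can be illustrated with a toy sketch. This is not the ComS2T implementation: the linear model, the synthetic data, the rule for choosing dynamic weights (largest gradient magnitude on new data), and every function name here are assumptions made for illustration only, and the spatial and temporal prompts are omitted entirely.

```python
import random

def predict(w, x):
    # Linear model: dot product of weights and features.
    return sum(wi * xi for wi, xi in zip(w, x))

def mse(w, data):
    return sum((predict(w, x) - y) ** 2 for x, y in data) / len(data)

def grad(w, data):
    # Gradient of the mean squared error with respect to each weight.
    g = [0.0] * len(w)
    for x, y in data:
        err = predict(w, x) - y
        for i, xi in enumerate(x):
            g[i] += 2.0 * err * xi / len(data)
    return g

def train(w, data, mask, lr=0.05, steps=500):
    # Gradient descent that only updates weights whose mask entry is True.
    w = list(w)
    for _ in range(steps):
        g = grad(w, data)
        w = [wi - lr * gi if m else wi for wi, gi, m in zip(w, g, mask)]
    return w

def make_data(true_w, n, rng):
    # Noise-free synthetic samples from a known linear relationship.
    data = []
    for _ in range(n):
        x = [rng.uniform(-1.0, 1.0) for _ in true_w]
        data.append((x, predict(true_w, x)))
    return data

rng = random.Random(0)
historical = make_data([1.0, -2.0, 0.5, 3.0], 200, rng)
# Hypothetical distribution shift: the last two coefficients change.
new_data = make_data([1.0, -2.0, 2.5, 0.0], 50, rng)

# Stage 1: train every weight on historical data.
w_hist = train([0.0] * 4, historical, mask=[True] * 4)

# Partition (assumed criterion): the k weights with the largest gradient
# magnitude on the new data are "dynamic"; the rest are "stable".
k = 2
g_new = grad(w_hist, new_data)
dynamic_idx = sorted(range(4), key=lambda i: abs(g_new[i]), reverse=True)[:k]
mask = [i in dynamic_idx for i in range(4)]

# Stage 2: adapt only the dynamic weights; stable weights stay frozen.
w_adapted = train(w_hist, new_data, mask)

print("dynamic mask:", mask)
print("loss on new data before:", mse(w_hist, new_data))
print("loss on new data after: ", mse(w_adapted, new_data))
```

Freezing the stable subset keeps what was learned from historical data intact, while the smaller dynamic subset makes adaptation to new observations cheap. In this sketch the new-data gradient merely stands in for whatever signal the actual method uses to separate the two sets.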
