Priyantha Mudalige

Human Driver Behavior Prediction based on UrbanFlow

Nov 09, 2019

Abstract: How autonomous vehicles and human drivers share public transportation systems is an important problem, as fully automatic transportation environments are still a long way off. Understanding human drivers' behavior can benefit autonomous vehicle decision making and planning, especially when the autonomous vehicle is surrounded by human drivers with varied driving behaviors and patterns of interaction with other vehicles. In this paper, we propose an LSTM-based trajectory prediction method for human drivers that can help the autonomous vehicle make better decisions, especially in urban intersection scenarios. To collect human driving behavior data in urban scenarios, we also describe a system called UrbanFlow, which covers the whole procedure from raw bird's-eye-view data collection by drone to the final processed trajectories. The system is mainly intended for urban scenarios but can be extended to any traffic scenario.
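As a rough illustration of how processed trajectories could be prepared for a sequence model such as an LSTM, the sketch below slices one vehicle track into pairs of observed history and future positions. It is not the paper's pipeline: the function name `make_windows`, the window lengths, and the `(x, y)` point format are all assumptions made for this example.

```python
from typing import List, Tuple

# One (x, y) position per time step; the coordinate format is an assumption.
Point = Tuple[float, float]
Window = Tuple[List[Point], List[Point]]


def make_windows(track: List[Point], n_obs: int, n_pred: int) -> List[Window]:
    """Split one track into (observed history, future to predict) pairs."""
    if n_obs <= 0 or n_pred <= 0:
        raise ValueError("n_obs and n_pred must be positive")
    pairs: List[Window] = []
    # Slide a window of n_obs + n_pred steps along the track, one step at a time.
    for start in range(len(track) - n_obs - n_pred + 1):
        history = track[start:start + n_obs]
        future = track[start + n_obs:start + n_obs + n_pred]
        pairs.append((history, future))
    return pairs


# Hypothetical six-step track used only to show the slicing.
track = [(float(t), 0.5 * t) for t in range(6)]
pairs = make_windows(track, n_obs=3, n_pred=2)
```

Each pair could then serve as one training sample, with the history as the model input and the future positions as the prediction target.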

Hierarchical Reinforcement Learning Method for Autonomous Vehicle Behavior Planning

Nov 09, 2019

Abstract: In this work, we propose a hierarchical reinforcement learning (HRL) structure capable of performing autonomous vehicle planning tasks in simulated environments with multiple sub-goals. In this hierarchical structure, the network is capable of 1) learning one task with multiple sub-goals simultaneously; 2) extracting attention over states according to the changing sub-goals during the learning process; 3) reusing the well-trained sub-goal networks for other similar tasks with the same sub-goals. The states are defined as processed observations transmitted from the perception system of the autonomous vehicle. A hybrid reward mechanism is designed for the different hierarchical layers in the proposed HRL structure. Compared to traditional RL methods, our algorithm is more sample-efficient because its modular design allows the sub-goal policies to be reused across similar tasks. The results show that the proposed method converges to an optimal policy faster than traditional RL methods.
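The two-level control flow described in the abstract can be sketched as follows. This is only an illustration of the structure: the sub-goal names, the state keys, the thresholds, and the reward values are hypothetical, and hand-written rules stand in for the trained networks that the paper learns.

```python
from typing import Callable, Dict, Tuple

State = Dict[str, float]
Policy = Callable[[State], str]


def approach_policy(state: State) -> str:
    # Stand-in for a learned sub-goal policy (hypothetical rule and threshold).
    return "brake" if state["dist_to_stop_line"] < 10.0 else "cruise"


def cross_policy(state: State) -> str:
    # Stand-in for a second learned sub-goal policy (hypothetical rule).
    return "accelerate" if state["gap_s"] > 4.0 else "wait"


# Sub-goal policies live in a registry so other tasks can reuse them.
SUB_POLICIES: Dict[str, Policy] = {
    "approach": approach_policy,
    "cross": cross_policy,
}


def select_sub_goal(state: State) -> str:
    # High-level layer: picks which sub-goal is active (hand-written here).
    return "approach" if state["dist_to_stop_line"] > 0.0 else "cross"


def act(state: State) -> Tuple[str, str]:
    # Low-level layer: the active sub-goal's policy chooses the action.
    goal = select_sub_goal(state)
    return goal, SUB_POLICIES[goal](state)


def hybrid_reward(sub_goal_reached: bool, task_done: bool, collided: bool) -> Dict[str, float]:
    # Assumed split: each layer gets its own signal; the values are illustrative.
    penalty = 10.0 if collided else 0.0
    return {
        "low": (1.0 if sub_goal_reached else 0.0) - penalty,
        "high": (5.0 if task_done else 0.0) - penalty,
    }
```

Keeping the sub-goal policies in a registry is one way to express the reuse idea: a different task with the same sub-goals would only need a new `select_sub_goal`.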

Learning On-Road Visual Control for Self-Driving Vehicles with Auxiliary Tasks

Dec 19, 2018

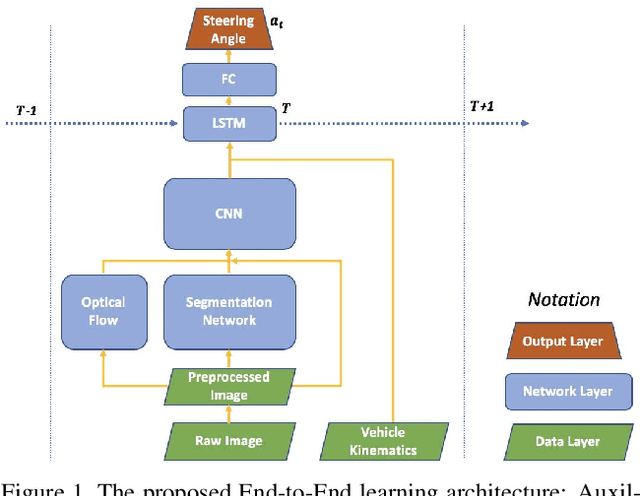

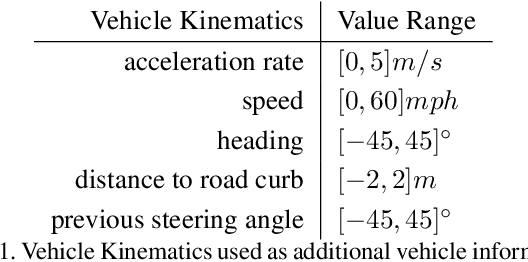

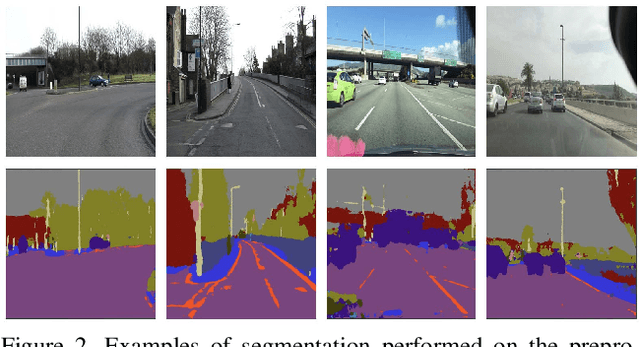

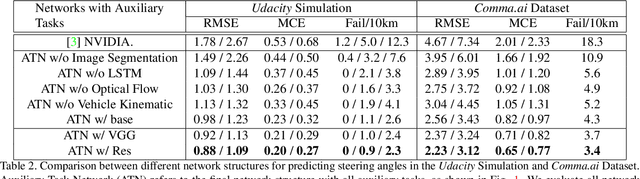

Abstract: A safe and robust on-road navigation system is a crucial component of achieving fully automated vehicles. NVIDIA recently proposed an End-to-End algorithm that learns steering commands directly from the raw pixels of a front camera using a single convolutional neural network. In this paper, we leverage auxiliary information beyond raw images and design a novel network structure, called the Auxiliary Task Network (ATN), to boost driving performance while retaining the advantages of minimal training data and an End-to-End training method. In this network, we introduce human prior knowledge into vehicle navigation by transferring features from image recognition tasks. Image semantic segmentation is applied as an auxiliary task for navigation. We account for temporal information by adding an LSTM module and optical flow to the network. Finally, we combine vehicle kinematics with a sensor fusion step. We discuss the benefits of our method over state-of-the-art visual navigation methods both in the Udacity simulation environment and on the real-world Comma.ai dataset.
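One common way to train a main task together with an auxiliary task is to sum the two losses with a weight on the auxiliary term. The sketch below shows that idea for steering regression plus per-pixel segmentation. The abstract does not give the loss actually used by ATN, so the loss choices, the function names, and the `aux_weight` value are assumptions.

```python
import math
from typing import List


def steering_mse(pred: List[float], target: List[float]) -> float:
    """Mean squared error between predicted and recorded steering values."""
    return sum((p - t) ** 2 for p, t in zip(pred, target)) / len(pred)


def pixel_cross_entropy(probs: List[List[float]], labels: List[int]) -> float:
    """Mean negative log-likelihood of the true class over all pixels."""
    eps = 1e-12  # guards against log(0)
    return -sum(math.log(p[y] + eps) for p, y in zip(probs, labels)) / len(labels)


def multitask_loss(
    steer_pred: List[float],
    steer_true: List[float],
    seg_probs: List[List[float]],
    seg_labels: List[int],
    aux_weight: float = 0.5,  # assumed weighting, not taken from the paper
) -> float:
    """Main steering loss plus a weighted auxiliary segmentation loss."""
    main = steering_mse(steer_pred, steer_true)
    aux = pixel_cross_entropy(seg_probs, seg_labels)
    return main + aux_weight * aux


# Hypothetical values: two steering samples and two pixels with two classes.
loss = multitask_loss(
    [0.1, -0.2], [0.0, -0.2],
    [[0.9, 0.1], [0.2, 0.8]], [0, 1],
)
```

Setting `aux_weight` to zero recovers plain steering regression, which makes it easy to compare training with and without the auxiliary task.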
