Zhiqian Qiao

Trajectory Planning for Autonomous Vehicles Using Hierarchical Reinforcement Learning

Nov 09, 2020

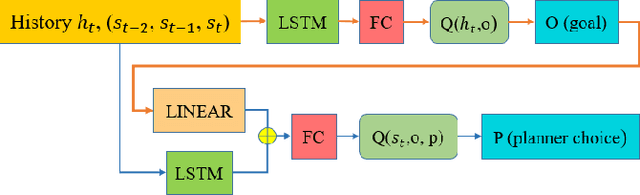

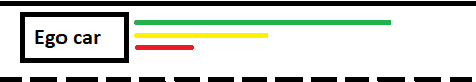

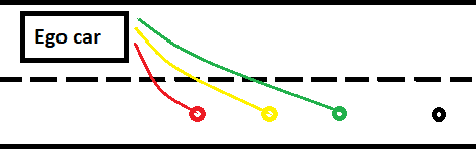

Abstract: Planning safe trajectories under uncertain and dynamic conditions makes the autonomous driving problem significantly more complex. Current sampling-based methods such as Rapidly Exploring Random Trees (RRTs) are not ideal for this problem because of their high computational cost. Supervised learning methods such as Imitation Learning lack generalization and safety guarantees. To address these problems and ensure a robust framework, we propose a Hierarchical Reinforcement Learning (HRL) structure combined with a Proportional-Integral-Derivative (PID) controller for trajectory planning. HRL helps divide the task of autonomous vehicle driving into sub-goals and helps the network learn policies for both high-level options and low-level trajectory planner choices. The introduction of sub-goals decreases convergence time and enables the learned policies to be reused for other scenarios. In addition, the proposed planner is made robust by guaranteeing smooth trajectories and by handling the noisy perception system of the ego car. The PID controller is used for tracking the waypoints, which ensures smooth trajectories and reduces jerk. The problem of incomplete observations is handled by using a Long Short-Term Memory (LSTM) layer in the network. Results from the high-fidelity CARLA simulator indicate that the proposed method reduces convergence time, generates smoother trajectories, and is able to handle dynamic surroundings and noisy observations.
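
To make the waypoint-tracking idea concrete, here is a minimal sketch of a discrete PID loop driving a toy one-dimensional position toward a single waypoint. Everything in it is an assumption for illustration: the names `PID` and `track_waypoint`, the gains, the time step, and the single-integrator plant (position changes by the commanded velocity) are hypothetical and are not taken from the paper, which does not give its vehicle model or gains in the abstract.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class PID:
    """Textbook discrete PID controller (illustrative gains, not the paper's)."""
    kp: float
    ki: float
    kd: float
    integral: float = 0.0
    prev_error: Optional[float] = None

    def step(self, error: float, dt: float) -> float:
        # Accumulate the integral term and estimate the error derivative.
        self.integral += error * dt
        derivative = 0.0 if self.prev_error is None else (error - self.prev_error) / dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


def track_waypoint(target: float, steps: int = 600, dt: float = 0.05) -> float:
    """Drive a toy 1-D position toward `target` and return the final position.

    Assumed plant: the controller output is treated as a velocity command,
    so position is simply integrated with forward Euler.
    """
    pid = PID(kp=2.0, ki=0.5, kd=0.1)
    position = 0.0
    for _ in range(steps):
        velocity_cmd = pid.step(target - position, dt)
        position += velocity_cmd * dt
    return position
```

Calling `track_waypoint(10.0)` should settle close to the target under these assumed gains. A real planner would track a sequence of 2-D waypoints and separate longitudinal from lateral control; this sketch only shows the feedback structure.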

Safe Trajectory Planning Using Reinforcement Learning for Self Driving

Nov 09, 2020

Abstract: Self-driving vehicles must be able to act intelligently in diverse and difficult environments, marked by high-dimensional state spaces, a myriad of optimization objectives and complex behaviors. Traditionally, classical optimization and search techniques have been applied to the problem of self-driving, but they do not fully address operations in environments with high-dimensional states and complex behaviors. Recently, imitation learning has been proposed for the task of self-driving, but it is labor-intensive to obtain enough training data. Reinforcement learning has been proposed as a way to directly control the car, but this raises safety and comfort concerns. We propose using model-free reinforcement learning for the trajectory planning stage of self-driving and show that this approach allows us to operate the car in a safer, more general and more comfortable manner, as required for the task of self-driving.

Behavior Planning at Urban Intersections through Hierarchical Reinforcement Learning

Nov 09, 2020

Abstract: For autonomous vehicles, effective behavior planning is crucial to ensure safety of the ego car. In many urban scenarios, it is hard to create sufficiently general heuristic rules, especially for challenging scenarios that some new human drivers find difficult. In this work, we propose a behavior planning structure based on reinforcement learning (RL) which is capable of performing autonomous vehicle behavior planning with a hierarchical structure in simulated urban environments. Application of the hierarchical structure allows the various layers of the behavior planning system to be satisfied. Our algorithms can perform better than heuristic-rule-based methods for elective decisions such as when to turn left between vehicles approaching from the opposite direction, or whether to change lanes when approaching an intersection due to lane blockage or delay in front of the ego car. Such behavior is hard to evaluate as correct or incorrect, but some aggressive expert human drivers handle such scenarios effectively and quickly. On the other hand, compared to traditional RL methods, our algorithm is more sample-efficient, due to the use of a hybrid reward mechanism and heuristic exploration during the training process. The results also show that the proposed method converges to an optimal policy faster than traditional RL methods.

Human Driver Behavior Prediction based on UrbanFlow

Nov 09, 2019

Abstract: How autonomous vehicles and human drivers share public transportation systems is an important problem, as fully automatic transportation environments are still a long way off. Understanding human drivers' behavior can be beneficial for autonomous vehicle decision making and planning, especially when the autonomous vehicle is surrounded by human drivers who have various driving behaviors and patterns of interaction with other vehicles. In this paper, we propose an LSTM-based trajectory prediction method for human drivers which can help the autonomous vehicle make better decisions, especially in urban intersection scenarios. To collect human driving behavior data in urban scenarios, we also describe a system called UrbanFlow, which covers the whole procedure from raw bird's-eye-view data collection via drone to the final processed trajectories. The system is mainly intended for urban scenarios but can be extended to any traffic scenario.

Hierarchical Reinforcement Learning Method for Autonomous Vehicle Behavior Planning

Nov 09, 2019

Abstract: In this work, we propose a hierarchical reinforcement learning (HRL) structure which is capable of performing autonomous vehicle planning tasks in simulated environments with multiple sub-goals. In this hierarchical structure, the network is capable of 1) learning one task with multiple sub-goals simultaneously; 2) extracting attention over states according to changing sub-goals during the learning process; 3) reusing the well-trained network of sub-goals for other similar tasks with the same sub-goals. The states are defined as processed observations which are transmitted from the perception system of the autonomous vehicle. A hybrid reward mechanism is designed for different hierarchical layers in the proposed HRL structure. Compared to traditional RL methods, our algorithm is more sample-efficient since its modular design allows reusing the policies of sub-goals across similar tasks. The results show that the proposed method converges to an optimal policy faster than traditional RL methods.
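
The two-level structure described above can be illustrated with a small tabular sketch: a high-level table chooses an option (sub-goal), a low-level table chooses an action conditioned on that option, and each level is updated with its own reward, loosely mirroring the idea of a hybrid reward. This is not the authors' method, which uses neural networks; the class `HierarchicalQ`, its parameters, and the state, option, and action labels are all hypothetical, and plain Q-learning updates are swapped in for simplicity.

```python
import random
from collections import defaultdict


class HierarchicalQ:
    """Toy two-level tabular Q-learner (an assumption, not the paper's network)."""

    def __init__(self, options, actions, alpha=0.5, gamma=0.9, epsilon=0.1, seed=0):
        self.options = list(options)
        self.actions = list(actions)
        self.alpha, self.gamma, self.epsilon = alpha, gamma, epsilon
        self.rng = random.Random(seed)
        self.q_option = defaultdict(float)  # (state, option) -> value
        self.q_action = defaultdict(float)  # (state, option, action) -> value

    def _pick(self, table, prefix, choices):
        # Epsilon-greedy choice over `choices`, keyed by prefix + (choice,).
        if self.rng.random() < self.epsilon:
            return self.rng.choice(choices)
        return max(choices, key=lambda c: table[prefix + (c,)])

    def select(self, state):
        # High level picks the sub-goal; low level acts conditioned on it.
        option = self._pick(self.q_option, (state,), self.options)
        action = self._pick(self.q_action, (state, option), self.actions)
        return option, action

    def update(self, state, option, action, r_option, r_action, next_state):
        # Each level gets its own reward signal and its own Q-learning update.
        best_opt = max(self.q_option[(next_state, o)] for o in self.options)
        key_o = (state, option)
        self.q_option[key_o] += self.alpha * (
            r_option + self.gamma * best_opt - self.q_option[key_o]
        )
        best_act = max(self.q_action[(next_state, option, a)] for a in self.actions)
        key_a = (state, option, action)
        self.q_action[key_a] += self.alpha * (
            r_action + self.gamma * best_act - self.q_action[key_a]
        )
```

Because the low-level table is indexed by option, the values learned for one sub-goal could in principle be copied into another task that shares that sub-goal, which is the reuse property the abstract highlights.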
