Pragyan Dahal

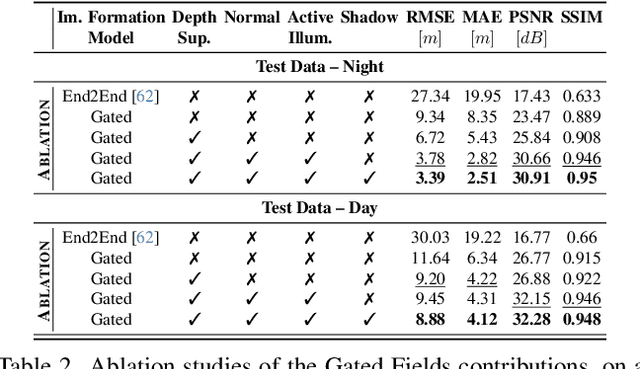

Gated Fields: Learning Scene Reconstruction from Gated Videos

May 30, 2024

Abstract: Reconstructing outdoor 3D scenes from temporal observations is a challenge for which recent work on neural fields has offered a new avenue. However, existing methods that recover scene properties, such as geometry, appearance, or radiance, solely from RGB captures often fail in poorly lit or texture-deficient regions. Similarly, scanning LiDAR sensors struggle to recover expansive real-world scenes because of their low angular sampling rate. Tackling these gaps, we introduce Gated Fields, a neural scene reconstruction method that utilizes active gated video sequences. To this end, we propose a neural rendering approach that seamlessly incorporates time-gated capture and illumination. Our method exploits the intrinsic depth cues in the gated videos, achieving precise and dense geometry reconstruction irrespective of ambient illumination conditions. We validate the method across day and night scenarios and find that Gated Fields compares favorably to RGB and LiDAR reconstruction methods. Our code and datasets are available at https://light.princeton.edu/gatedfields/.
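The depth cues mentioned above come from gate timing: a gated camera only integrates light that returns within a chosen delay window after the illumination pulse. A minimal sketch of that timing relation follows, assuming an idealized instantaneous pulse and a rectangular gate. The function name and the gate delays and widths are hypothetical illustrations, not parameters of the Gated Fields renderer.

```python
# Illustrative sketch only: idealized gate timing, not the paper's model.
# Speed of light in vacuum, in metres per second.
C = 299_792_458.0


def gate_range_window(delay_s: float, width_s: float) -> tuple[float, float]:
    """Return the (near, far) range in metres whose round-trip time falls
    inside a gate that opens `delay_s` seconds after the pulse and stays
    open for `width_s` seconds.

    Assumes an instantaneous pulse and a rectangular gate, so the soft
    edges of a real range-intensity profile are ignored.
    """
    if delay_s < 0 or width_s <= 0:
        raise ValueError("delay must be non-negative and width positive")
    near = C * delay_s / 2.0              # light travels out and back, hence /2
    far = C * (delay_s + width_s) / 2.0
    return near, far


# Three hypothetical, overlapping gates (values chosen only for illustration).
gates = [(100e-9, 200e-9), (200e-9, 200e-9), (300e-9, 200e-9)]
for delay, width in gates:
    near, far = gate_range_window(delay, width)
    print(f"gate delay {delay * 1e9:.0f} ns -> {near:.1f} m to {far:.1f} m")
```

With overlapping windows like these, the relative intensity a point produces across the gates depends on its range, which is the kind of cue a gated reconstruction method can exploit.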

Vehicle State Estimation through Modular Factor Graph-based Fusion of Multiple Sensors

Aug 09, 2023

Abstract: This study focuses on robust state estimation, a critical requirement for the safe navigation of an Autonomous Vehicle (AV). Existing literature primarily employs two techniques for state estimation: filtering-based and graph-based approaches. A Factor Graph (FG) is a graph-based approach, constructed from Values and Factors for Maximum A Posteriori (MAP) estimation, whose modular architecture facilitates the integration of inputs from diverse sensors. However, most FG-based architectures in current use require explicit knowledge of sensor parameters and are designed for a single sensor setup. To address these limitations, this research introduces a novel plug-and-play FG-based state estimator capable of operating without predefined sensor parameters. This estimator can be deployed in multiple sensor setups and provides comprehensive state estimation, including means and covariances, at a high frequency. The proposed algorithm is rigorously validated using various sensor setups on two different vehicles: a quadricycle and a shuttle bus. The algorithm provides accurate and robust state estimation across diverse scenarios, even with degraded Global Navigation Satellite System (GNSS) measurements or complete outages. These findings highlight the efficacy and reliability of the algorithm in real-world AV applications.
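The idea that an FG yields a MAP estimate with means and covariances can be made concrete with a toy example. When every factor is linear with Gaussian noise, MAP estimation reduces to weighted linear least squares, and the inverse of the information matrix gives the covariance. The sketch below is a hypothetical 1D illustration in plain NumPy (class and method names are invented here); it is not the paper's estimator, which handles full vehicle states and nonlinear sensor models.

```python
import numpy as np


class LinearFactorGraph1D:
    """Hypothetical toy factor graph over scalar positions x_0..x_{n-1}.

    Every factor is linear with Gaussian noise, so the MAP estimate is the
    solution of a weighted linear least-squares problem.
    """

    def __init__(self, num_states: int):
        self.n = num_states
        self.rows = []    # one Jacobian row per factor
        self.rhs = []     # measured value per factor
        self.sigmas = []  # measurement standard deviation per factor

    def add_prior(self, i, value, sigma):
        # Absolute measurement of x_i, e.g. a GNSS fix.
        row = np.zeros(self.n)
        row[i] = 1.0
        self._add(row, value, sigma)

    def add_odometry(self, i, j, delta, sigma):
        # Relative measurement x_j - x_i = delta, e.g. wheel odometry.
        row = np.zeros(self.n)
        row[i], row[j] = -1.0, 1.0
        self._add(row, delta, sigma)

    def _add(self, row, value, sigma):
        self.rows.append(row)
        self.rhs.append(value)
        self.sigmas.append(sigma)

    def solve(self):
        # Whiten each factor by its standard deviation, then solve the
        # normal equations (A^T A) x = A^T b.
        w = 1.0 / np.asarray(self.sigmas)
        A = np.vstack(self.rows) * w[:, None]
        b = np.asarray(self.rhs) * w
        info = A.T @ A                           # information matrix
        mean = np.linalg.solve(info, A.T @ b)
        cov = np.linalg.inv(info)                # marginal variances on the diagonal
        return mean, cov


# Hypothetical scenario: GNSS fixes at the first and last states, with the
# states in between bridged only by odometry (a short GNSS outage).
fg = LinearFactorGraph1D(4)
fg.add_prior(0, 0.0, sigma=0.5)
for k in range(3):
    fg.add_odometry(k, k + 1, 1.0, sigma=0.1)
fg.add_prior(3, 3.0, sigma=0.5)
mean, cov = fg.solve()
print(mean)                   # approximately [0. 1. 2. 3.]
print(np.sqrt(np.diag(cov)))  # states between the fixes are slightly less certain
```

Because each sensor contributes only rows to the problem, adding or dropping a sensor means adding or dropping factors, which is the modularity the abstract refers to.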

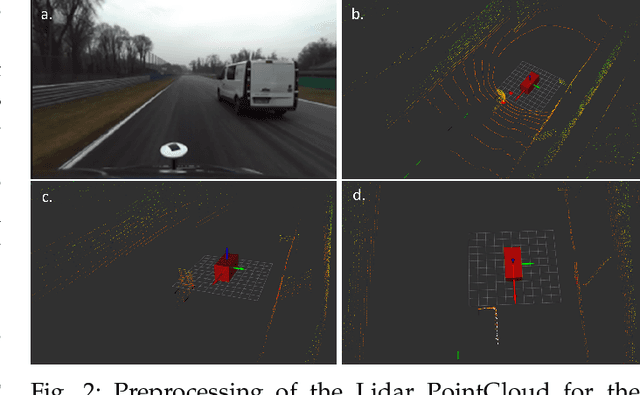

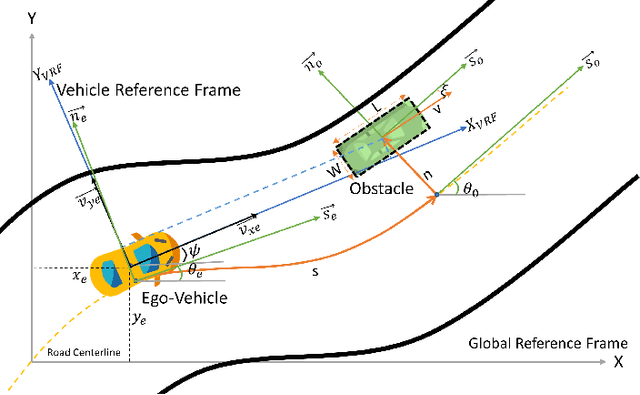

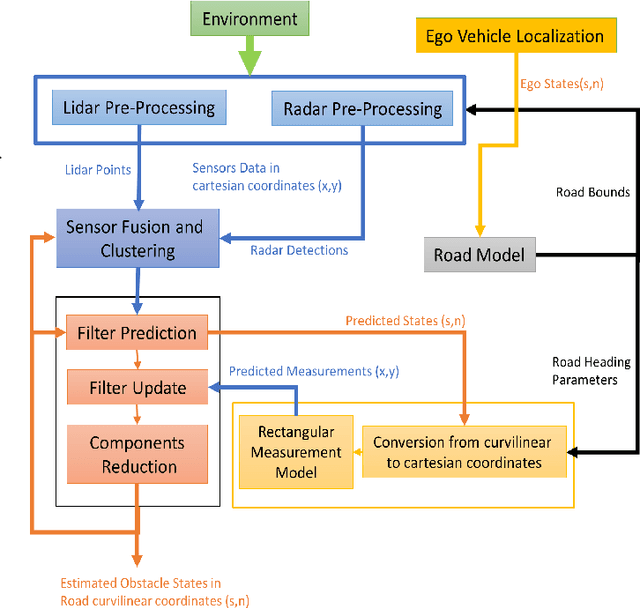

Extended Object Tracking in Curvilinear Road Coordinates for Autonomous Driving

Feb 08, 2022

Abstract: In the literature, Extended Object Tracking (EOT) algorithms developed for autonomous driving predominantly provide obstacle state estimates in Cartesian coordinates in the Vehicle Reference Frame. However, in many scenarios, a state representation in road-aligned curvilinear coordinates is preferred when implementing autonomous driving subsystems such as cruise control, lane-keeping assist, and platooning. This paper proposes a Gaussian Mixture Probability Hypothesis Density (GM-PHD) filter with an Unscented Kalman Filter (UKF) estimator that provides obstacle state estimates in curvilinear road coordinates. We employ a hybrid sensor fusion architecture between Lidar and Radar sensors to obtain rich measurement point representations for EOT. The measurement model for the UKF estimator integrates the conversion from curvilinear road coordinates to Cartesian coordinates using a cubic Hermite spline road model. The proposed algorithm is validated through MATLAB Driving Scenario Designer simulations and experimental data collected at the Monza Eni Circuit.
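The measurement model above relies on converting curvilinear road coordinates to Cartesian ones through a cubic Hermite spline. A minimal single-segment sketch follows, assuming s is arc length along the road centerline and d is the signed lateral offset (positive to the left). The function names, sign convention, and numerical arc-length inversion are illustrative choices, not details taken from the paper.

```python
import numpy as np


def hermite(p0, p1, t0, t1, u):
    """Point and derivative of a cubic Hermite segment at parameter u in [0, 1]."""
    u = np.asarray(u, dtype=float)[..., None]
    h00 = 2 * u**3 - 3 * u**2 + 1
    h10 = u**3 - 2 * u**2 + u
    h01 = -2 * u**3 + 3 * u**2
    h11 = u**3 - u**2
    point = h00 * p0 + h10 * t0 + h01 * p1 + h11 * t1
    # Derivatives of the four basis functions with respect to u.
    d00 = 6 * u**2 - 6 * u
    d10 = 3 * u**2 - 4 * u + 1
    d01 = -6 * u**2 + 6 * u
    d11 = 3 * u**2 - 2 * u
    deriv = d00 * p0 + d10 * t0 + d01 * p1 + d11 * t1
    return point, deriv


def curvilinear_to_cartesian(s, d, p0, p1, t0, t1, samples=1000):
    """Hypothetical helper: map road coordinates (s along the centerline,
    d lateral offset, positive to the left) to Cartesian (x, y) on one
    spline segment with endpoints p0, p1 and tangents t0, t1."""
    p0, p1, t0, t1 = (np.asarray(v, dtype=float) for v in (p0, p1, t0, t1))
    # Tabulate arc length s(u) numerically, since it has no closed form.
    us = np.linspace(0.0, 1.0, samples)
    pts, _ = hermite(p0, p1, t0, t1, us)
    seg = np.linalg.norm(np.diff(pts, axis=0), axis=1)
    s_table = np.concatenate([[0.0], np.cumsum(seg)])
    if not 0.0 <= s <= s_table[-1]:
        raise ValueError("s lies outside this spline segment")
    # Invert s(u) by linear interpolation; s_table increases as long as
    # the curve never stalls.
    u = np.interp(s, s_table, us)
    point, deriv = hermite(p0, p1, t0, t1, u)
    tangent = deriv / np.linalg.norm(deriv)
    normal = np.array([-tangent[1], tangent[0]])  # tangent rotated +90 degrees
    return point + d * normal


# Hypothetical straight road segment along the x-axis, 10 m long.
xy = curvilinear_to_cartesian(5.0, 2.0, p0=(0, 0), p1=(10, 0), t0=(10, 0), t1=(10, 0))
print(xy)  # approximately [5. 2.]
```

In a UKF, a conversion of this kind would be applied to each sigma point when predicting measurements; a multi-segment road model would first select the segment that contains s.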