Pirros Tsiakoulis

Pseudo-Cepstrum: Pitch Modification for Mel-Based Neural Vocoders

Dec 18, 2025

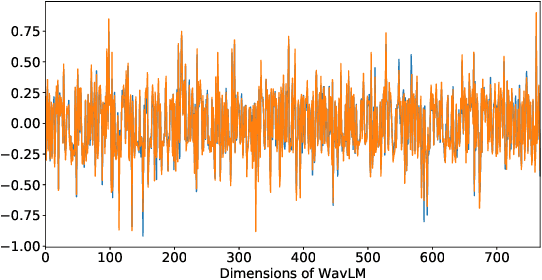

Abstract: This paper introduces a cepstrum-based pitch modification method that can be applied to any mel-spectrogram representation. As a result, the method is compatible with any mel-based vocoder without additional training or changes to the model. This is achieved by directly modifying the cepstrum feature space to shift the harmonic structure to the desired target. The spectrogram magnitude is computed via the pseudo-inverse mel transform, then converted to the cepstrum by applying the DCT. In this domain, the cepstral peak is shifted without having to estimate its position, and the modified mel-spectrogram is recomputed by applying the IDCT and the mel filterbank. These pitch-shifted mel-spectrogram features can be converted to speech with any compatible vocoder. The proposed method is validated experimentally with objective and subjective metrics on various state-of-the-art neural vocoders, as well as in comparison with traditional pitch modification methods.
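The pipeline described in this abstract (pseudo-inverse mel transform, DCT, cepstral shift, IDCT, mel filterbank) can be sketched roughly as follows. This is only an illustration under stated assumptions, not the paper's implementation: the simplified triangular filterbank, the function names, the `cutoff` parameter, and in particular the way the shift is done here (resampling the quefrency axis above `cutoff` by a factor `alpha`) are all hypothetical choices made for the example.

```python
import numpy as np

def mel_filterbank(n_mels, n_bins, sr):
    """Simplified triangular mel filters over n_bins linear bins spanning 0..sr/2."""
    hz_to_mel = lambda f: 2595.0 * np.log10(1.0 + f / 700.0)
    mel_to_hz = lambda m: 700.0 * (10.0 ** (m / 2595.0) - 1.0)
    edges = mel_to_hz(np.linspace(0.0, hz_to_mel(sr / 2.0), n_mels + 2))
    freqs = np.linspace(0.0, sr / 2.0, n_bins)
    fb = np.zeros((n_mels, n_bins))
    for i in range(n_mels):
        lo, mid, hi = edges[i], edges[i + 1], edges[i + 2]
        rising = (freqs - lo) / (mid - lo)
        falling = (hi - freqs) / (hi - mid)
        fb[i] = np.maximum(0.0, np.minimum(rising, falling))
    return fb

def dct_matrix(n):
    """Orthonormal DCT-II matrix; its transpose is the inverse transform."""
    k = np.arange(n)[:, None]
    m = np.arange(n)[None, :]
    c = np.sqrt(2.0 / n) * np.cos(np.pi * (m + 0.5) * k / n)
    c[0] /= np.sqrt(2.0)
    return c

def shift_pitch(mel, fb, alpha, cutoff=20):
    """Scale pitch by alpha on a (n_mels, frames) mel magnitude spectrogram.

    ASSUMPTION: the shift is done by resampling the quefrency axis, which moves
    any cepstral peak without locating it. The paper may do this differently.
    """
    n_bins = fb.shape[1]
    # 1) Approximate linear magnitude via the pseudo-inverse mel transform.
    mag = np.maximum(np.linalg.pinv(fb) @ mel, 1e-8)
    # 2) Log-magnitude spectrum -> cepstrum-like coefficients via the DCT.
    C = dct_matrix(n_bins)
    cep = C @ np.log(mag)
    # 3) Resample the quefrency axis: higher pitch means a lower peak index.
    q = np.arange(n_bins, dtype=float)
    warped = np.empty_like(cep)
    for t in range(cep.shape[1]):
        warped[:, t] = np.interp(q * alpha, q, cep[:, t], right=0.0)
    # Keep the low-quefrency coefficients (spectral envelope) untouched.
    warped[:cutoff] = cep[:cutoff]
    # 4) IDCT back to a magnitude spectrum, then re-apply the mel filterbank.
    return fb @ np.exp(C.T @ warped)
```

Calling `shift_pitch(mel, fb, alpha=1.2)` would return a mel-spectrogram of the same shape that could then be passed to a mel-based vocoder; the filterbank used here would need to match the one the vocoder was trained with.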

MambaRate: Speech Quality Assessment Across Different Sampling Rates

Jul 16, 2025

Abstract: We propose MambaRate, which predicts Mean Opinion Scores (MOS) with limited bias regarding the sampling rate of the waveform under evaluation. It is designed for Track 3 of the AudioMOS Challenge 2025, which focuses on predicting MOS for speech at high sampling frequencies. Our model leverages self-supervised embeddings and selective state space modeling. The target ratings are encoded in a continuous representation via Gaussian radial basis functions (RBF). The results of the challenge were based on the system-level Spearman's Rank Correlation Coefficient (SRCC) metric. An initial MambaRate version (T16 system) outperformed the pre-trained baseline (B03) by ~14% in a few-shot setting without pre-training. T16 ranked fourth out of five in the challenge, differing by ~6% from the winning system. We present additional results on the BVCC dataset as well as ablations with different representations as input, which outperform the initial T16 version.
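To illustrate what encoding target ratings with Gaussian radial basis functions might look like, here is a minimal sketch. The number of centers, their spacing over the 1 to 5 MOS range, the width `sigma`, and the weighted-average decoder are assumptions made for this example; the abstract does not specify them.

```python
import math

def rbf_encode(score, centers, sigma):
    """Soft target vector: one Gaussian activation per center."""
    return [math.exp(-((score - c) ** 2) / (2.0 * sigma ** 2)) for c in centers]

def rbf_decode(weights, centers):
    """Map activations back to a scalar score by a weighted average of centers."""
    total = sum(weights)
    return sum(w * c for w, c in zip(weights, centers)) / total

# Hypothetical setup: nine centers spaced 0.5 apart over the MOS range 1..5.
centers = [1.0 + 0.5 * i for i in range(9)]
encoded = rbf_encode(3.0, centers, sigma=0.5)
decoded = rbf_decode(encoded, centers)
```

A representation like this lets a model regress onto a smooth vector instead of a single scalar, while neighboring ratings still produce similar targets.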

Improved Text Emotion Prediction Using Combined Valence and Arousal Ordinal Classification

Apr 02, 2024

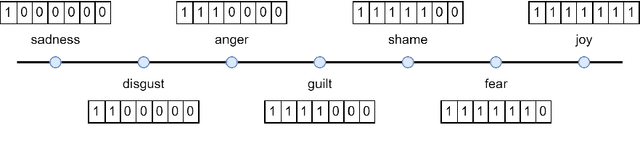

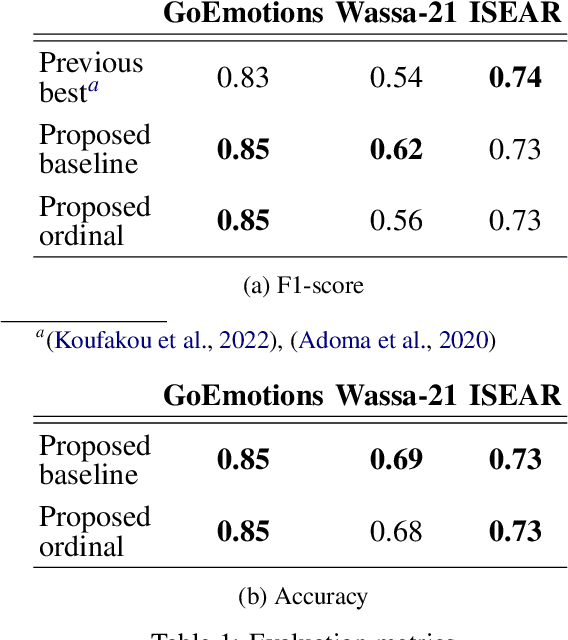

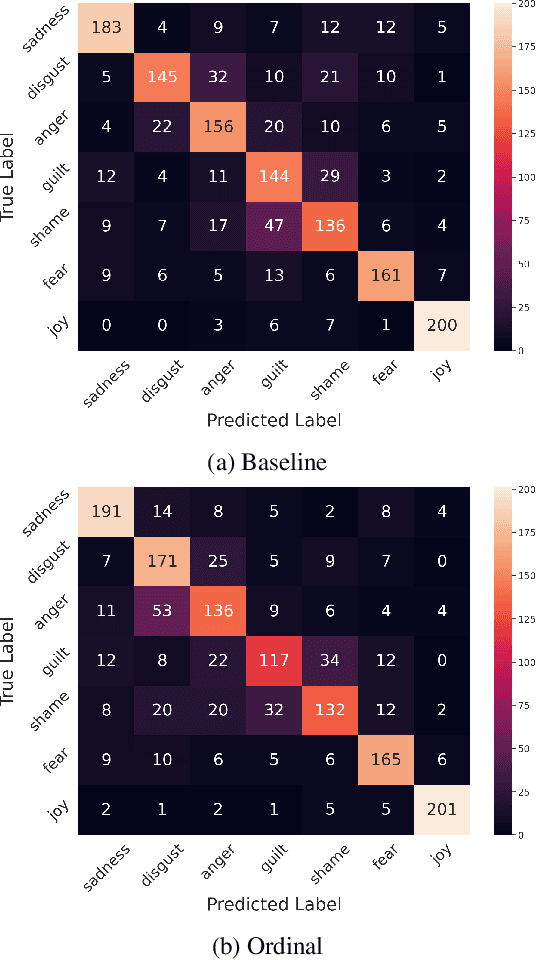

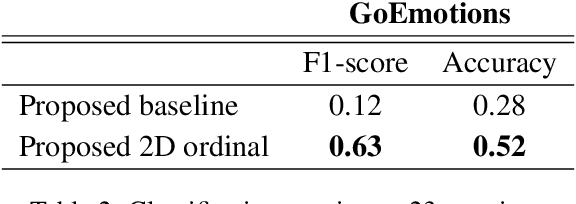

Abstract: Emotion detection in textual data has received growing interest in recent years, as it is pivotal for developing empathetic human-computer interaction systems. This paper introduces a method for categorizing emotions from text that accounts for the varying degrees of similarity and difference among emotions. Initially, we establish a baseline by training a transformer-based model for standard emotion classification, achieving state-of-the-art performance. We argue that not all misclassifications are of the same importance, as there are perceptual similarities among emotional classes. We thus redefine the emotion labeling problem by shifting it from a traditional classification model to an ordinal classification one, where discrete emotions are arranged in a sequential order according to their valence levels. Finally, we propose a method that performs ordinal classification in the two-dimensional emotion space, considering both valence and arousal scales. The results show that our approach not only preserves high accuracy in emotion prediction but also significantly reduces the magnitude of errors in cases of misclassification.
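One common way to turn a classification problem into an ordinal one is to replace the one-hot target with cumulative binary targets ("is the rank greater than k?"). The sketch below shows that encoding on a single valence axis. The emotion ordering, the function names, and the choice of this particular encoding are illustrative assumptions, not details taken from the paper.

```python
# Hypothetical ordering of emotions from low to high valence (illustrative only).
ORDER = ["anger", "sadness", "neutral", "joy"]

def ordinal_targets(label):
    """Cumulative binary targets: entry k is 1 if the label's rank exceeds k."""
    rank = ORDER.index(label)
    return [1 if rank > k else 0 for k in range(len(ORDER) - 1)]

def ordinal_decode(probs, threshold=0.5):
    """Predicted rank = number of 'greater than k' outputs above the threshold."""
    rank = sum(1 for p in probs if p > threshold)
    return ORDER[rank]

def rank_error(true_label, predicted_label):
    """Size of a mistake measured in steps along the ordering."""
    return abs(ORDER.index(true_label) - ORDER.index(predicted_label))
```

With this encoding, confusing "neutral" with "joy" costs one step, while confusing "anger" with "joy" costs three, which reflects the idea that not all misclassifications are equally severe. Extending it to two dimensions would mean keeping a second ordering for arousal.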

Low-Resource Cross-Domain Singing Voice Synthesis via Reduced Self-Supervised Speech Representations

Feb 02, 2024

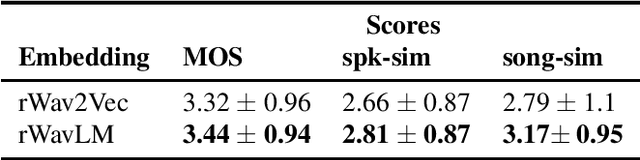

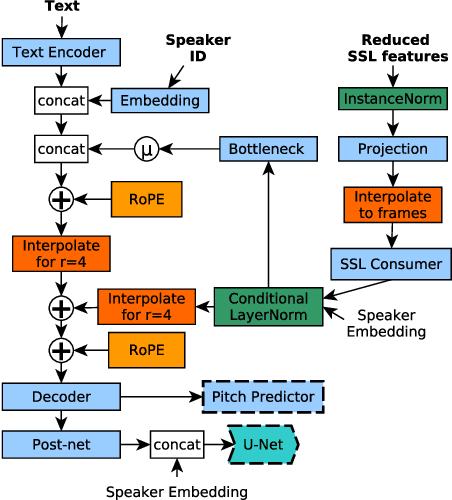

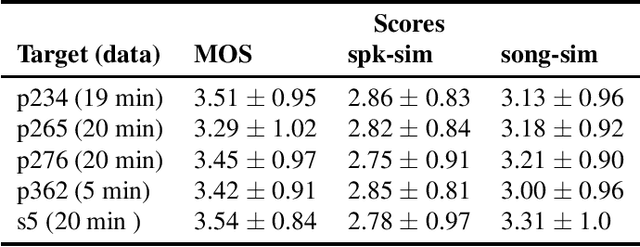

Abstract: In this paper, we propose a singing voice synthesis model, Karaoker-SSL, that is trained only on text and speech data as a typical multi-speaker acoustic model. It is a low-resource pipeline that does not utilize any singing data end-to-end, since its vocoder is also trained on speech data. Karaoker-SSL is conditioned by self-supervised speech representations in an unsupervised manner. We preprocess these representations by selecting only a subset of their task-correlated dimensions. The conditioning module is indirectly guided to capture style information during training by multi-tasking. This is achieved with a Conformer-based module, which predicts the pitch from the acoustic model's output. Thus, Karaoker-SSL allows singing voice synthesis without reliance on hand-crafted and domain-specific features. There are also no requirements for text alignments or lyrics timestamps. To refine the voice quality, we employ a U-Net discriminator that is conditioned on the target speaker and follows a Diffusion GAN training scheme.

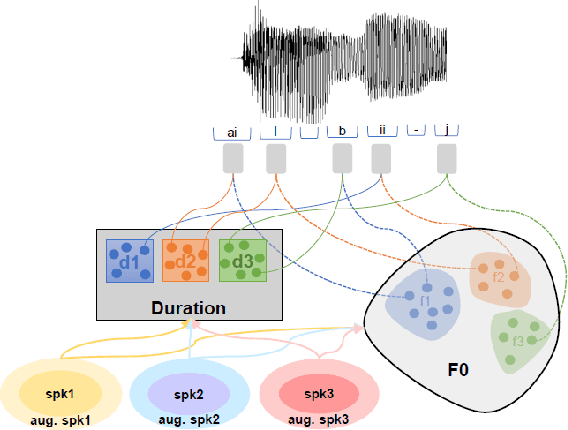

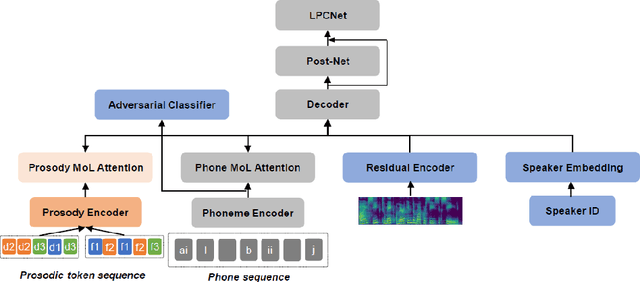

Controllable speech synthesis by learning discrete phoneme-level prosodic representations

Nov 29, 2022

Abstract: In this paper, we present a novel method for phoneme-level prosody control of F0 and duration using intuitive discrete labels. We propose an unsupervised prosodic clustering process which is used to discretize phoneme-level F0 and duration features from a multispeaker speech dataset. These features are fed as an input sequence of prosodic labels to a prosody encoder module which augments an autoregressive attention-based text-to-speech model. We utilize various methods to improve prosodic control range and coverage, such as augmentation, F0 normalization, balanced clustering for duration and speaker-independent clustering. The final model enables fine-grained phoneme-level prosody control for all speakers contained in the training set, while maintaining the speaker identity. Instead of relying on reference utterances for inference, we introduce a prior prosody encoder which learns the style of each speaker and enables speech synthesis without the requirement of reference audio. We also fine-tune the multispeaker model to unseen speakers with limited amounts of data, as a realistic application scenario, and show that the prosody control capabilities are maintained, verifying that the speaker-independent prosodic clustering is effective. Experimental results show that the model has high output speech quality and that the proposed method allows efficient prosody control within each speaker's range despite the variability that a multispeaker setting introduces.
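As a rough illustration of discretizing phoneme-level F0 values into ordered labels, the sketch below runs a one-dimensional k-means and assigns each value the index of its nearest centroid. The use of k-means, the quantile-based initialization, and the function names are assumptions for this example; the abstract only states that an unsupervised clustering process is used, and the input values are assumed to be already normalized per speaker.

```python
def kmeans_1d(values, k, iters=50):
    """Cluster scalar values into k groups; returns centroids sorted low to high."""
    data = sorted(values)
    # Deterministic initialization at evenly spaced quantiles of the data.
    centroids = [data[int((i + 0.5) * len(data) / k)] for i in range(k)]
    for _ in range(iters):
        groups = [[] for _ in range(k)]
        for v in data:
            nearest = min(range(k), key=lambda j: abs(v - centroids[j]))
            groups[nearest].append(v)
        # Empty clusters keep their previous centroid.
        centroids = [sum(g) / len(g) if g else centroids[j]
                     for j, g in enumerate(groups)]
    return sorted(centroids)

def to_label(value, centroids):
    """Discrete prosodic label: index of the nearest centroid (0 = lowest)."""
    return min(range(len(centroids)), key=lambda j: abs(value - centroids[j]))

# Hypothetical phoneme-level F0 values in Hz.
f0_values = [100, 102, 98, 150, 152, 148, 200, 198, 202]
centroids = kmeans_1d(f0_values, k=3)
labels = [to_label(v, centroids) for v in f0_values]
```

Because the centroids are sorted, the resulting labels are ordered from low to high, which is what makes them usable as intuitive control inputs (for example, raising a phoneme's label to raise its pitch).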

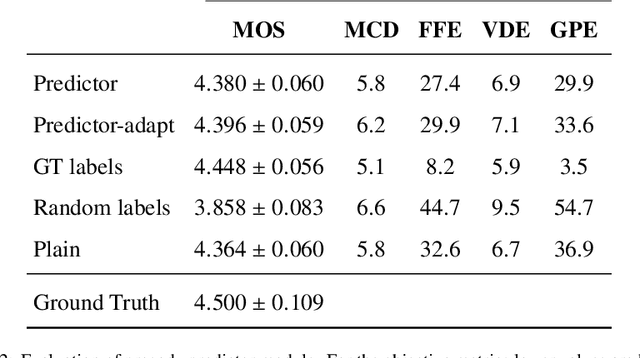

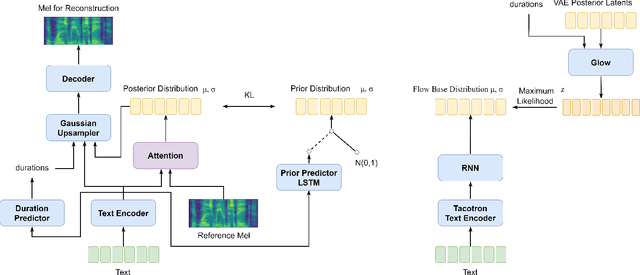

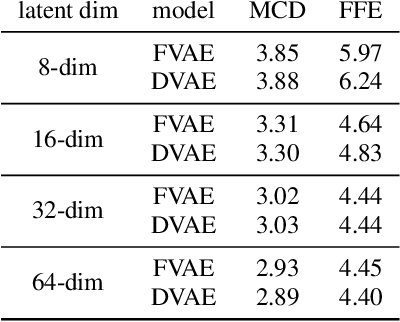

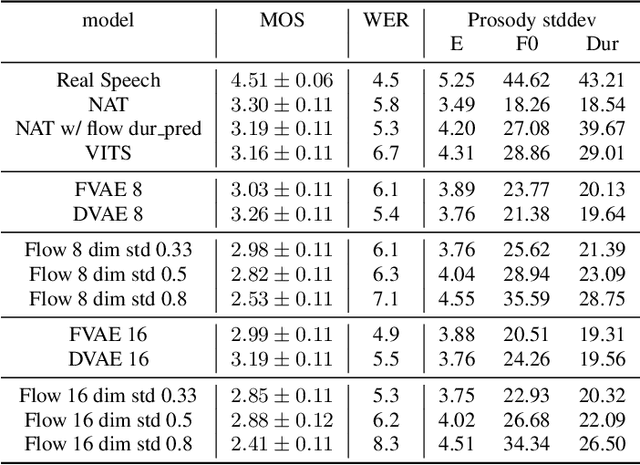

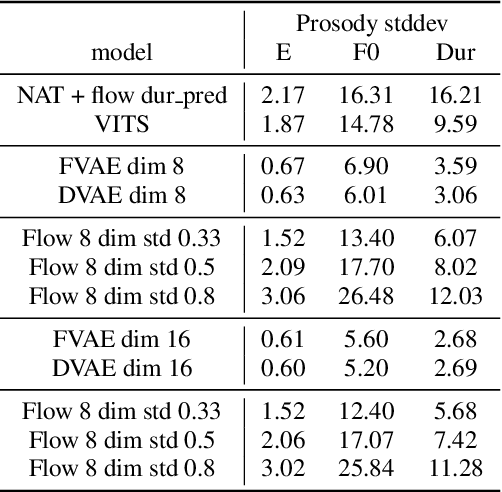

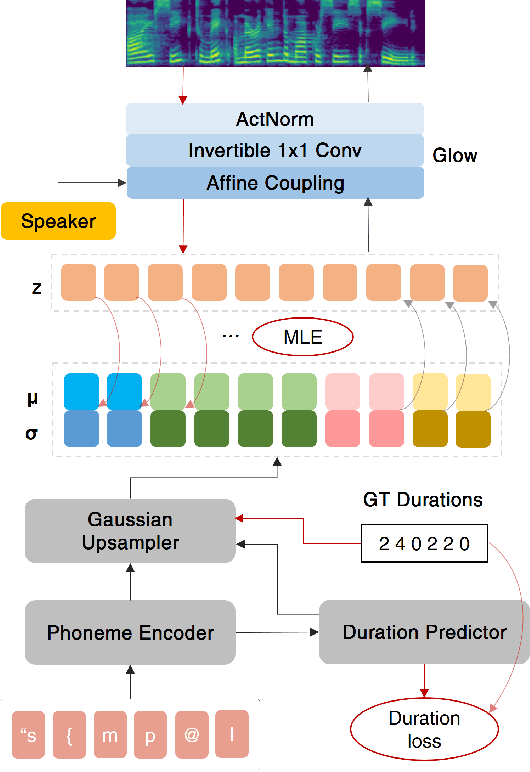

Predicting phoneme-level prosody latents using AR and flow-based Prior Networks for expressive speech synthesis

Nov 02, 2022

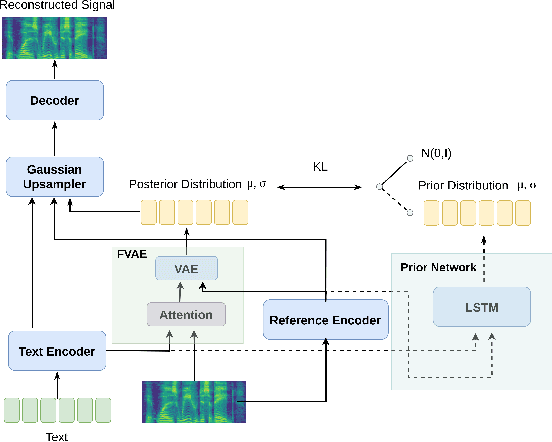

Abstract: A large part of the expressive speech synthesis literature focuses on learning prosodic representations of the speech signal which are then modeled by a prior distribution during inference. In this paper, we compare different prior architectures on the task of predicting phoneme-level prosodic representations extracted with an unsupervised FVAE model. We use both subjective and objective metrics to show that normalizing-flow-based prior networks can result in more expressive speech at the cost of a slight drop in quality. Furthermore, we show that the synthesized speech has higher variability, for a given text, due to the nature of normalizing flows. We also propose a Dynamical VAE model that can generate higher-quality speech, although with decreased expressiveness and variability compared to the flow-based models.

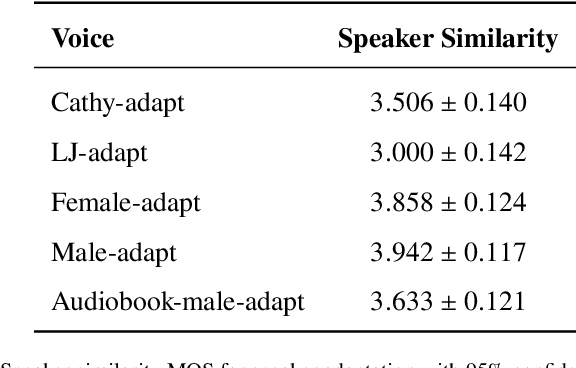

Generating Gender-Ambiguous Text-to-Speech Voices

Nov 01, 2022

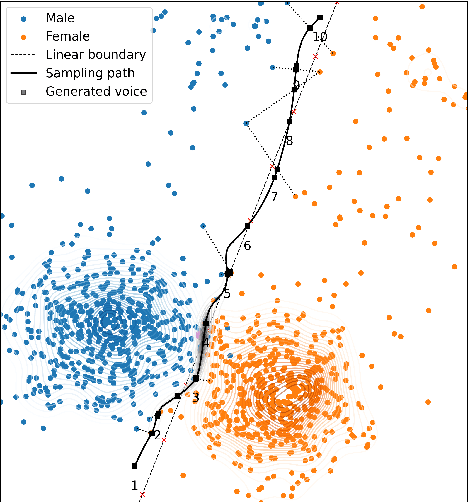

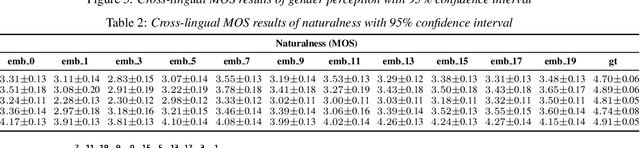

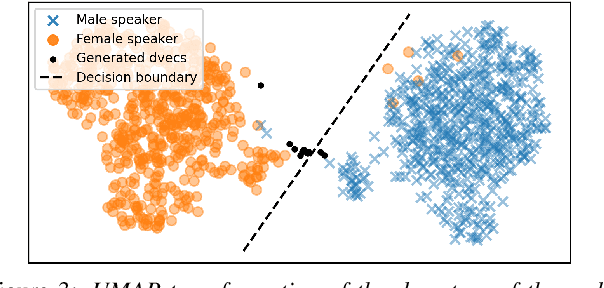

Abstract: The gender of a voice assistant or any voice user interface is a central element of its perceived identity. While a female voice is a common choice, there is an increasing interest in alternative approaches where the gender is ambiguous rather than clearly identifying as female or male. This work addresses the task of generating gender-ambiguous text-to-speech (TTS) voices that do not correspond to any existing person. This is accomplished by sampling from a latent speaker embedding space that was formed while training a multilingual, multi-speaker TTS system on data from multiple male and female speakers. Various options are investigated regarding the sampling process. In our experiments, the effects of different sampling choices on the gender ambiguity and the naturalness of the resulting voices are evaluated. The proposed method is shown to efficiently generate novel speakers that are superior to a baseline averaged speaker embedding. To our knowledge, this is the first systematic approach that can reliably generate a range of gender-ambiguous voices to meet diverse user requirements.

Investigating Content-Aware Neural Text-To-Speech MOS Prediction Using Prosodic and Linguistic Features

Nov 01, 2022

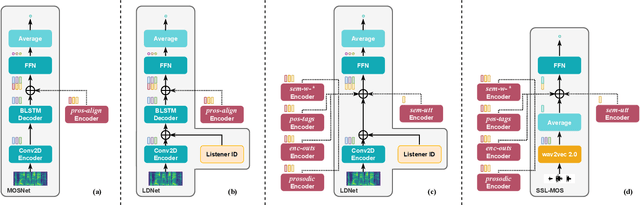

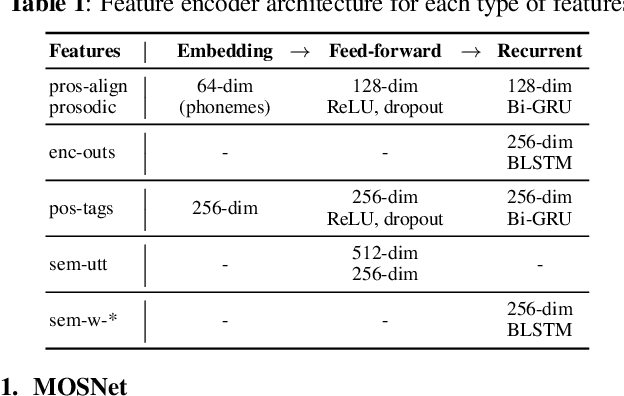

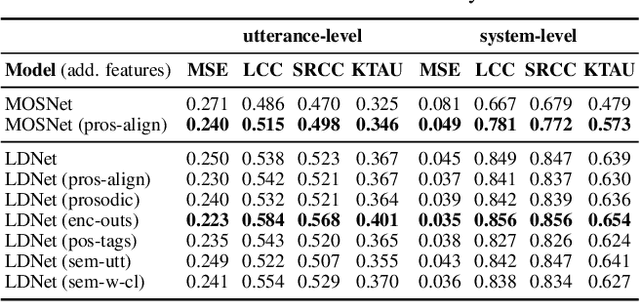

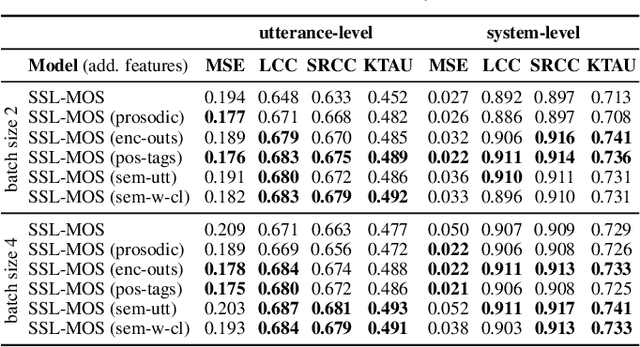

Abstract: Current state-of-the-art methods for automatic synthetic speech evaluation are based on MOS prediction neural models. Such MOS prediction models include MOSNet and LDNet, which use spectral features as input, and SSL-MOS, which relies on a pretrained self-supervised learning model that directly uses the speech signal as input. In modern high-quality neural TTS systems, prosodic appropriateness with regard to the spoken content is a decisive factor for speech naturalness. For this reason, we propose to include prosodic and linguistic features as additional inputs in MOS prediction systems, and evaluate their impact on the prediction outcome. We consider phoneme-level F0 and duration features as prosodic inputs, as well as Tacotron encoder outputs, POS tags and BERT embeddings as higher-level linguistic inputs. All MOS prediction systems are trained on SOMOS, a neural TTS-only dataset with crowdsourced naturalness MOS evaluations. Results show that the proposed additional features are beneficial in the MOS prediction task, improving the correlation of the predicted MOS scores with the ground truths at both the utterance level and the system level.

Learning utterance-level representations through token-level acoustic latents prediction for Expressive Speech Synthesis

Nov 01, 2022

Abstract: This paper proposes an Expressive Speech Synthesis model that utilizes token-level latent prosodic variables in order to capture and control utterance-level attributes, such as character acting voice and speaking style. Current works aim to explicitly factorize such fine-grained and utterance-level speech attributes into different representations extracted by modules that operate at the corresponding level. We show that the fine-grained latent space also captures coarse-grained information, which is more evident as the dimension of the latent space increases in order to capture diverse prosodic representations. Therefore, a trade-off arises between the diversity of the token-level and utterance-level representations and their disentanglement. We alleviate this issue by first capturing rich speech attributes in a token-level latent space and then separately training a prior network that, given the input text, learns utterance-level representations in order to predict the phoneme-level posterior latents extracted during the previous step. Both qualitative and quantitative evaluations are used to demonstrate the effectiveness of the proposed approach. Audio samples are available on our demo page.

Cross-lingual Text-To-Speech with Flow-based Voice Conversion for Improved Pronunciation

Oct 31, 2022

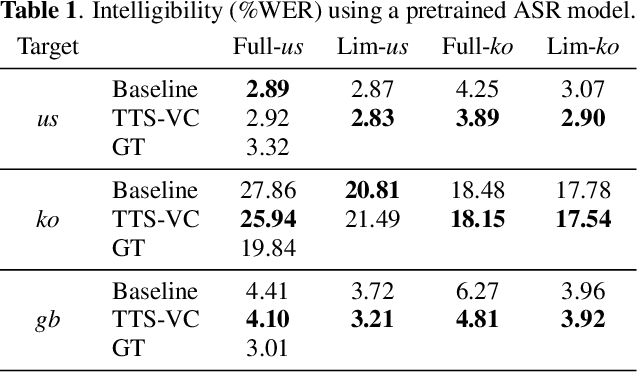

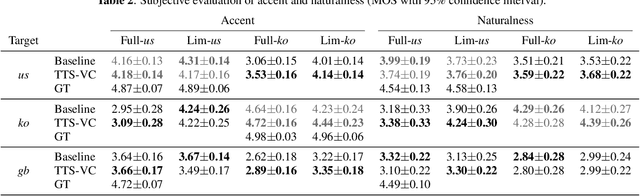

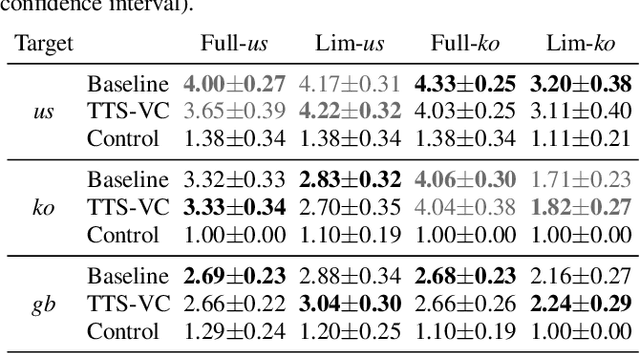

Abstract: This paper presents a method for end-to-end cross-lingual text-to-speech (TTS) which aims to preserve the target language's pronunciation regardless of the original speaker's language. The model used is based on a non-attentive Tacotron architecture, where the decoder has been replaced with a normalizing flow network conditioned on the speaker identity, allowing both TTS and voice conversion (VC) to be performed by the same model due to the inherent disentanglement of linguistic content and speaker identity. When used in a cross-lingual setting, acoustic features are initially produced with a native speaker of the target language, and then voice conversion is applied by the same model to convert these features to the target speaker's voice. We verify through objective and subjective evaluations that our method can have benefits compared to baseline cross-lingual synthesis. By including speakers averaging 7.5 minutes of speech, we also present positive results on low-resource scenarios.
