Peter Müller

Towards certifiable AI in aviation: landscape, challenges, and opportunities

Sep 13, 2024Abstract:Artificial Intelligence (AI) methods are powerful tools for various domains, including critical fields such as avionics, where certification is required to achieve and maintain an acceptable level of safety. General solutions for safety-critical systems must address three main questions: Is it suitable? What drives the system's decisions? Is it robust to errors/attacks? This is more complex in AI than in traditional methods. In this context, this paper presents a comprehensive mind map of formal AI certification in avionics. It highlights the challenges of certifying AI development with an example to emphasize the need for qualification beyond performance metrics.

DT+GNN: A Fully Explainable Graph Neural Network using Decision Trees

May 26, 2022

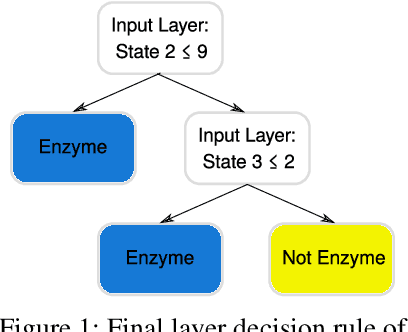

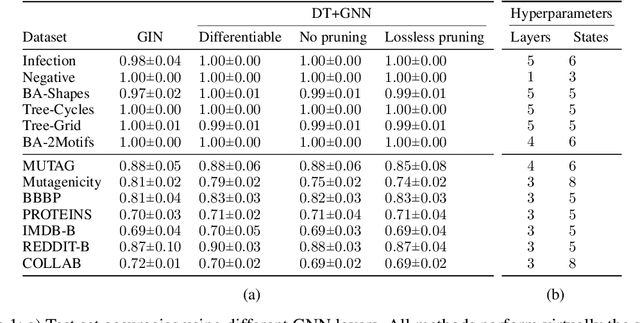

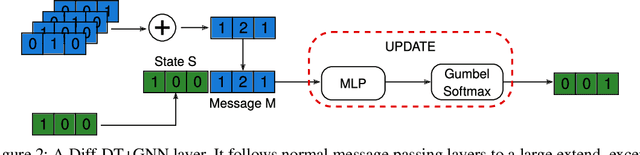

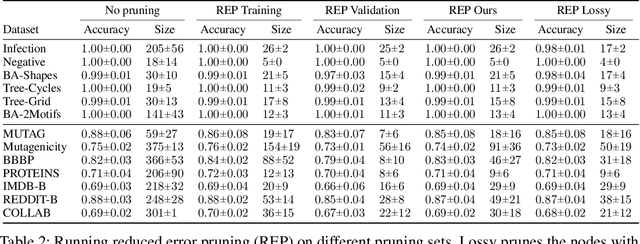

Abstract:We propose the fully explainable Decision Tree Graph Neural Network (DT+GNN) architecture. In contrast to existing black-box GNNs and post-hoc explanation methods, the reasoning of DT+GNN can be inspected at every step. To achieve this, we first construct a differentiable GNN layer, which uses a categorical state space for nodes and messages. This allows us to convert the trained MLPs in the GNN into decision trees. These trees are pruned using our newly proposed method to ensure they are small and easy to interpret. We can also use the decision trees to compute traditional explanations. We demonstrate on both real-world datasets and synthetic GNN explainability benchmarks that this architecture works as well as traditional GNNs. Furthermore, we leverage the explainability of DT+GNNs to find interesting insights into many of these datasets, with some surprising results. We also provide an interactive web tool to inspect DT+GNN's decision making.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge