Peter Jonsson

CSPs with Few Alien Constraints

Aug 27, 2024

Abstract:The constraint satisfaction problem asks to decide if a set of constraints over a relational structure $\mathcal{A}$ is satisfiable (CSP$(\mathcal{A})$). We consider CSP$(\mathcal{A} \cup \mathcal{B})$ where $\mathcal{A}$ is a structure and $\mathcal{B}$ is an alien structure, and analyse its (parameterized) complexity when at most $k$ alien constraints are allowed. We establish connections and obtain transferable complexity results to several well-studied problems that previously escaped classification attempts. Our novel approach, utilizing logical and algebraic methods, yields an FPT versus pNP dichotomy for arbitrary finite structures and sharper dichotomies for Boolean structures and first-order reducts of $(\mathbb{N},=)$ (equality CSPs), together with many partial results for general $\omega$-categorical structures.

Computational Short Cuts in Infinite Domain Constraint Satisfaction

Nov 18, 2022

Abstract:A backdoor in a finite-domain CSP instance is a set of variables where each possible instantiation moves the instance into a polynomial-time solvable class. Backdoors have found many applications in artificial intelligence and elsewhere, and the algorithmic problem of finding such backdoors has consequently been intensively studied. Sioutis and Janhunen (Proc. 42nd German Conference on AI (KI-2019)) have proposed a generalised backdoor concept suitable for infinite-domain CSP instances over binary constraints. We generalise their concept into a large class of CSPs that allow for higher-arity constraints. We show that infinite-domain backdoors of this kind have many of the positive computational properties that finite-domain backdoors have: the associated computational problems are fixed-parameter tractable whenever the underlying constraint language is finite. On the other hand, we show that infinite languages make the problems considerably harder: the general backdoor detection problem is W[2]-hard and fixed-parameter tractability is ruled out under standard complexity-theoretic assumptions. We demonstrate that backdoors may have suboptimal behaviour on binary constraints -- this is detrimental from an AI perspective where binary constraints are predominant in, for instance, spatiotemporal applications. In response to this, we introduce sidedoors as an alternative to backdoors. The fundamental computational problems for sidedoors remain fixed-parameter tractable for finite constraint languages (possibly also containing non-binary relations). Moreover, the sidedoor approach has appealing computational properties that sometimes lead to faster algorithms than the backdoor approach.

Solving Infinite-Domain CSPs Using the Patchwork Property

Jul 03, 2021

Abstract:The constraint satisfaction problem (CSP) has important applications in computer science and AI. In particular, infinite-domain CSPs have been intensively used in subareas of AI such as spatio-temporal reasoning. Since constraint satisfaction is a computationally hard problem, much work has been devoted to identifying restricted problems that are efficiently solvable. One way of doing this is to restrict the interactions of variables and constraints, and a highly successful approach is to bound the treewidth of the underlying primal graph. Bodirsky & Dalmau [J. Comput. System. Sci. 79(1), 2013] and Huang et al. [Artif. Intell. 195, 2013] proved that CSP$(\Gamma)$ can be solved in $n^{f(w)}$ time (where $n$ is the size of the instance, $w$ is the treewidth of the primal graph and $f$ is a computable function) for certain classes of constraint languages $\Gamma$. We improve this bound to $f(w) \cdot n^{O(1)}$, where the function $f$ only depends on the language $\Gamma$, for CSPs whose basic relations have the patchwork property. Hence, such problems are fixed-parameter tractable and our algorithm is asymptotically faster than the previous ones. Additionally, our approach is not restricted to binary constraints, so it is applicable to a strictly larger class of problems than that of Huang et al. However, there exist natural problems that are covered by Bodirsky & Dalmau's algorithm but not by ours, and we begin investigating ways of generalising our results to larger families of languages. We also analyse our algorithm with respect to its running time and show that it is optimal (under the Exponential Time Hypothesis) for certain languages such as Allen's Interval Algebra.

On the Complexity of CCG Parsing

May 04, 2018

Abstract:We study the parsing complexity of Combinatory Categorial Grammar (CCG) in the formalism of Vijay-Shanker and Weir (1994). As our main result, we prove that any parsing algorithm for this formalism will take in the worst case exponential time when the size of the grammar, and not only the length of the input sentence, is included in the analysis. This sets the formalism of Vijay-Shanker and Weir (1994) apart from weakly equivalent formalisms such as Tree-Adjoining Grammar (TAG), for which parsing can be performed in time polynomial in the combined size of grammar and input sentence. Our results contribute to a refined understanding of the class of mildly context-sensitive grammars, and inform the search for new, mildly context-sensitive versions of CCG.

A Refined View of Causal Graphs and Component Sizes: SP-Closed Graph Classes and Beyond

Feb 04, 2014

Abstract:The causal graph of a planning instance is an important tool for planning both in practice and in theory. The theoretical studies of causal graphs have largely analysed the computational complexity of planning for instances where the causal graph has a certain structure, often in combination with other parameters like the domain size of the variables. Chen and Giménez ignored even the structure and considered only the size of the weakly connected components. They proved that planning is tractable if the components are bounded by a constant and otherwise intractable. Their intractability result was, however, conditioned by an assumption from parameterised complexity theory that has no known useful relationship with the standard complexity classes. We approach the same problem from the perspective of standard complexity classes, and prove that planning is NP-hard for classes with unbounded components under an additional restriction we refer to as SP-closed. We then argue that most NP-hardness theorems for causal graphs are difficult to apply and, thus, prove a more general result; even if the component sizes grow slowly and the class is not densely populated with graphs, planning still cannot be tractable unless the polynomial hierarchy collapses. Both these results still hold when restricted to the class of acyclic causal graphs. We finally give a partial characterization of the borderline between NP-hard and NP-intermediate classes, giving further insight into the problem.

Algorithms and Limits for Compact Plan Representations

Jan 23, 2014

Abstract:Compact representation of objects is a common concept in computer science. Automated planning can be viewed as a case of this concept: a planning instance is a compact implicit representation of a graph and the problem is to find a path (a plan) in this graph. While the graphs themselves are represented compactly as planning instances, the paths are usually represented explicitly as sequences of actions. Some cases are known where the plans always have compact representations, for example, using macros. We show that these results do not extend to the general case, by proving a number of bounds for compact representations of plans under various criteria, like efficient sequential or random access of actions. In addition to this, we show that our results have consequences for what can be gained from reformulating planning into some other problem. As a contrast to this we also prove a number of positive results, demonstrating restricted cases where plans do have useful compact representations, as well as proving that macro plans have favourable access properties. Our results are finally discussed in relation to other relevant contexts.

A Complete Parameterized Complexity Analysis of Bounded Planning

Oct 29, 2013

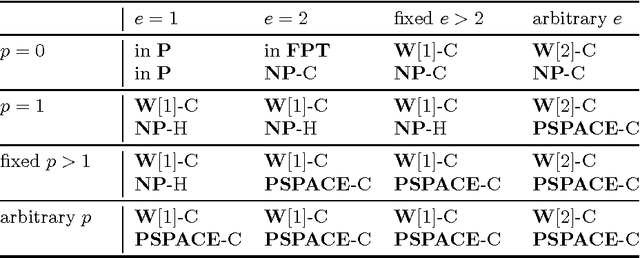

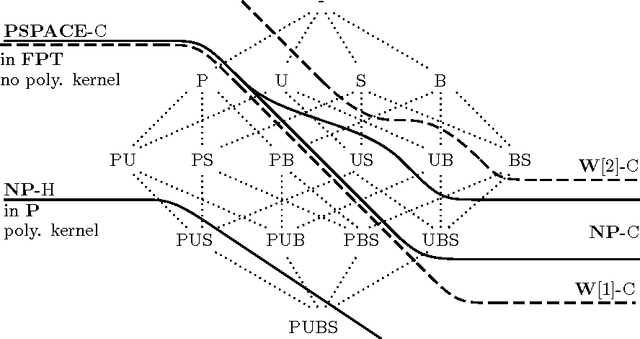

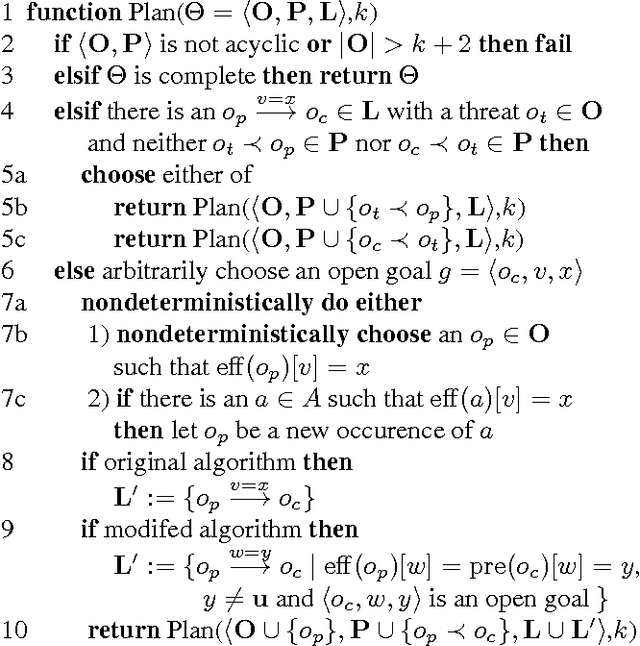

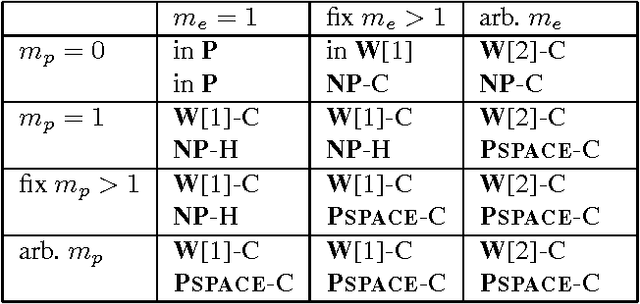

Abstract:The propositional planning problem is a notoriously difficult computational problem, which remains hard even under strong syntactical and structural restrictions. Given its difficulty it becomes natural to study planning in the context of parameterized complexity. In this paper we continue the work initiated by Downey, Fellows and Stege on the parameterized complexity of planning with respect to the parameter "length of the solution plan." We provide a complete classification of the parameterized complexity of the planning problem under two of the most prominent syntactical restrictions, i.e., the so-called PUBS restrictions introduced by Bäckström and Nebel and restrictions on the number of preconditions and effects as introduced by Bylander. We also determine which of the considered fixed-parameter tractable problems admit a polynomial kernel and which don't.

* The paper is a combined and extended version of the papers "The Complexity of Planning Revisited - A Parameterized Analysis" (AAAI 2012, arXiv:1208.2566) and "Parameterized Complexity and Kernel Bounds for Hard Planning Problems" (CIAC 2013, arXiv:1211.0479)

Parameterized Complexity and Kernel Bounds for Hard Planning Problems

Jan 21, 2013

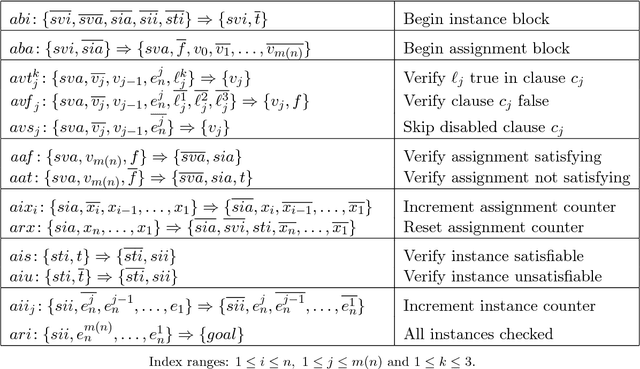

Abstract:The propositional planning problem is a notoriously difficult computational problem. Downey et al. (1999) initiated the parameterized analysis of planning (with plan length as the parameter) and Bäckström et al. (2012) picked up this line of research and provided an extensive parameterized analysis under various restrictions, leaving open only one stubborn case. We continue this work and provide a full classification. In particular, we show that the case when actions have no preconditions and at most $e$ postconditions is fixed-parameter tractable if $e\leq 2$ and W[1]-complete otherwise. We show fixed-parameter tractability by a reduction to a variant of the Steiner Tree problem; this problem has been shown fixed-parameter tractable by Guo et al. (2007). If a problem is fixed-parameter tractable, then it admits a polynomial-time self-reduction to instances whose input size is bounded by a function of the parameter, called the kernel. For some problems, this function is even polynomial which has desirable computational implications. Recent research in parameterized complexity has focused on classifying fixed-parameter tractable problems on whether they admit polynomial kernels or not. We revisit all the previously obtained restrictions of planning that are fixed-parameter tractable and show that none of them admits a polynomial kernel unless the polynomial hierarchy collapses to its third level.

* This is the full version of a paper that will appear in the Proc. of CIAC 2013

The Complexity of Planning Revisited - A Parameterized Analysis

Aug 13, 2012

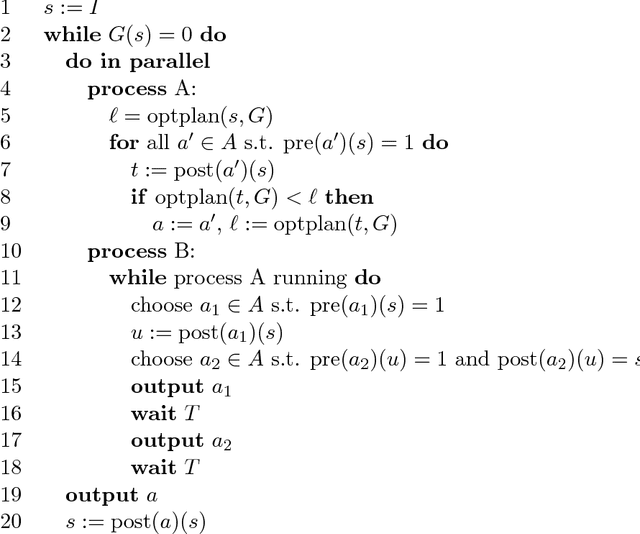

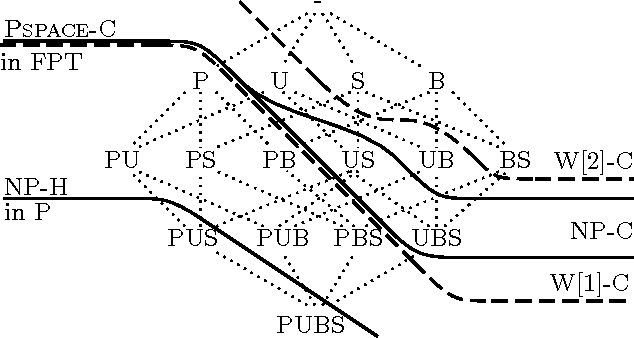

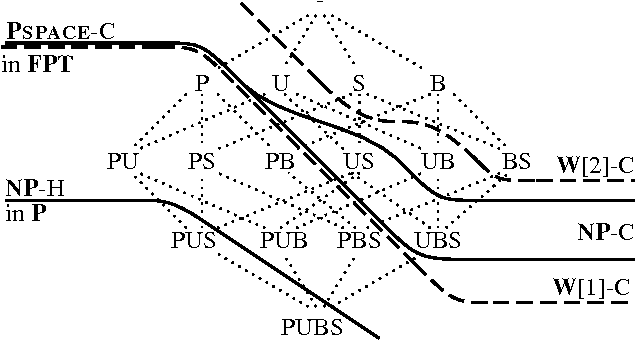

Abstract:The early classifications of the computational complexity of planning under various restrictions in STRIPS (Bylander) and SAS+ (Bäckström and Nebel) have influenced subsequent research in planning in many ways. We go back and reanalyse their subclasses, but this time using the more modern tool of parameterized complexity analysis. This provides new results that together with the old results give a more detailed picture of the complexity landscape. We demonstrate separation results not possible with standard complexity theory, which contributes to explaining why certain cases of planning have seemed simpler in practice than theory has predicted. In particular, we show that certain restrictions of practical interest are tractable in the parameterized sense of the term, and that a simple heuristic is sufficient to make a well-known partial-order planner exploit this fact.

* (author's self-archived copy)
