Peter J. Jin

Optimizing Autonomous Driving for Safety: A Human-Centric Approach with LLM-Enhanced RLHF

Jun 06, 2024

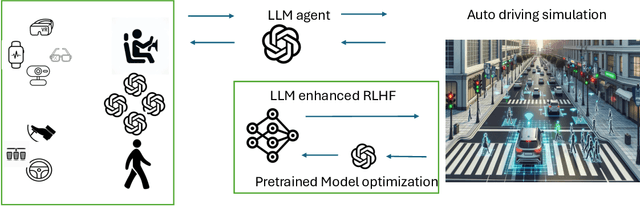

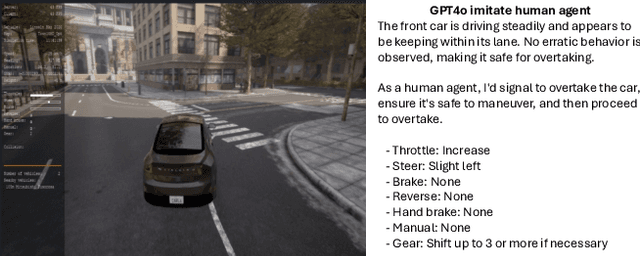

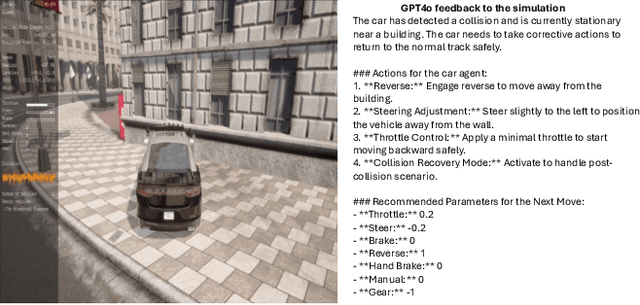

Abstract: Reinforcement Learning from Human Feedback (RLHF) is popular in large language models (LLMs), whereas traditional Reinforcement Learning (RL) often falls short. Current autonomous driving methods typically utilize either human feedback in machine learning, including RL, or LLMs. Most feedback guides the car agent's learning process (e.g., controlling the car). RLHF is usually applied in the fine-tuning step, requiring direct human "preferences," which are not commonly used in optimizing autonomous driving models. In this research, we innovatively combine RLHF and LLMs to enhance autonomous driving safety. Training a model with human guidance from scratch is inefficient. Our framework starts with a pre-trained autonomous car agent model and implements multiple human-controlled agents, such as cars and pedestrians, to simulate real-life road environments. The autonomous car model is not directly controlled by humans. We integrate both physical and physiological feedback to fine-tune the model, optimizing this process using LLMs. This multi-agent interactive environment ensures safe, realistic interactions before real-world application. Finally, we will validate our model using data gathered from real-life testbeds located in New Jersey and New York City.

A Review on Longitudinal Car-Following Model

Apr 14, 2023

Abstract: The car-following (CF) model is the core component of traffic simulations and is built into many production vehicles with Advanced Driving Assistance Systems (ADAS). Research on CF behavior allows us to identify the sources of different macro phenomena induced by the basic process of pairwise vehicle interaction. The CF behavior and control model encompasses various fields, such as traffic engineering, physics, cognitive science, machine learning, and reinforcement learning. This paper provides a comprehensive survey highlighting differences, complementarities, and overlaps among various CF models according to their underlying logic and principles. We reviewed representative algorithms, ranging from the theory-based kinematic models, stimulus-response models, and cruise control models to data-driven Behavior Cloning (BC) and Imitation Learning (IL), and outlined their strengths and limitations. This review categorizes CF models that are conceptualized on varying principles and summarizes the vast literature within a holistic framework.
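As a rough illustration of the stimulus-response family mentioned in the abstract, the sketch below integrates the classic linear form, in which the follower's acceleration is proportional to the speed difference with its leader. The function name, the `sensitivity` value, the time step, and the omission of driver reaction delay are all assumptions made for this example; the review itself does not prescribe this formulation.

```python
def simulate_following(leader_speeds, follower_speed0, gap0,
                       sensitivity=0.5, dt=0.1):
    """Euler-integrate a linear stimulus-response follower behind a leader.

    acceleration = sensitivity * (leader speed - follower speed)
    Returns one (follower_speed, gap) tuple per time step.
    Reaction delay is ignored here (a simplifying assumption).
    """
    v = follower_speed0
    gap = gap0
    history = []
    for v_lead in leader_speeds:
        relative_speed = v_lead - v             # the stimulus
        accel = sensitivity * relative_speed    # the response
        gap += relative_speed * dt              # gap shrinks while follower is faster
        v += accel * dt
        history.append((v, gap))
    return history


# Hypothetical scenario: the leader cruises at 20 m/s, then drops to 15 m/s.
leader = [20.0] * 50 + [15.0] * 300
trace = simulate_following(leader, follower_speed0=20.0, gap0=30.0)
final_speed, final_gap = trace[-1]
print(round(final_speed, 2), round(final_gap, 2))
```

In this linear model the follower settles at the leader's new speed, and the gap changes by roughly the speed change divided by the sensitivity, which is one reason richer models add spacing-dependent terms.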

Multimodal Gaussian Mixture Model for Realtime Roadside LiDAR Object Detection

Apr 20, 2022

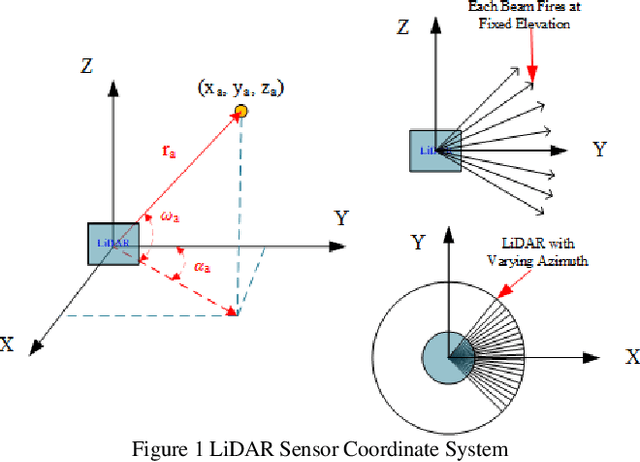

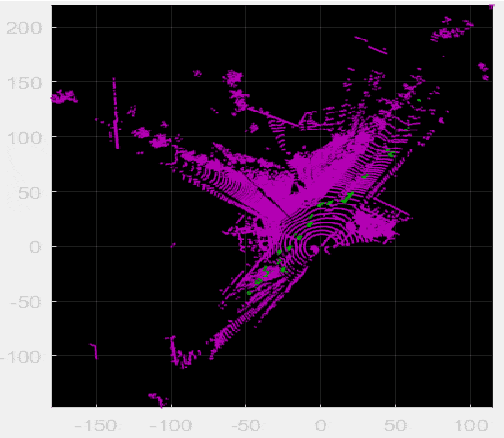

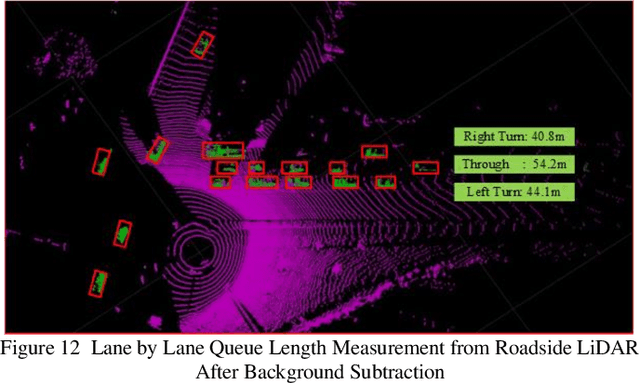

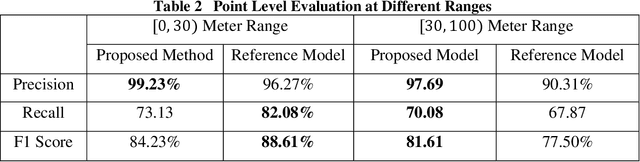

Abstract: Background modeling is widely used in intelligent surveillance systems to detect moving targets by subtracting the static background components. Most roadside LiDAR object detection methods filter out foreground points by comparing new points to pre-trained background references based on descriptive statistics over many frames (e.g., voxel density, slopes, maximum distance). These solutions are not efficient under heavy traffic, and parameter values are hard to transfer from one scenario to another. In early studies, video-based background modeling methods were considered unsuitable for roadside LiDAR surveillance systems due to the sparse and unstructured point cloud data. In this paper, the raw LiDAR data were transformed into a multi-dimensional tensor structure based on the elevation and azimuth value of each LiDAR point. With this high-order data representation, we break the barrier to allow the efficient Gaussian Mixture Model (GMM) method for roadside LiDAR background modeling. The probabilistic GMM is built with superior agility and real-time capability. The proposed method was compared against two state-of-the-art roadside LiDAR background models and evaluated at the point, object, and path levels, demonstrating better robustness under heavy traffic and challenging weather. This multimodal GMM method is capable of handling dynamic backgrounds with noisy measurements and substantially enhances infrastructure-based LiDAR object detection, whereby various 3D models for smart city applications could be created.
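The idea of indexing LiDAR points by elevation and azimuth and then modeling each cell with a mixture of Gaussians can be sketched as follows. This is a minimal illustration only: the grid resolution, the elevation limits, the online update rule (borrowed from the classic video background-subtraction formulation), and every name and threshold here are assumptions, not the paper's implementation.

```python
import math


def cell_index(x, y, z, az_bins=360, el_bins=32, el_min=-25.0, el_max=15.0):
    """Map a non-zero Cartesian point to (elevation row, azimuth column, range)."""
    rng = math.sqrt(x * x + y * y + z * z)
    azimuth = math.degrees(math.atan2(y, x)) % 360.0
    elevation = math.degrees(math.asin(z / rng))
    col = min(int(azimuth / 360.0 * az_bins), az_bins - 1)
    row = int((elevation - el_min) / (el_max - el_min) * el_bins)
    return min(max(row, 0), el_bins - 1), col, rng


class CellMixture:
    """Online mixture of 1-D Gaussians over the range seen in one grid cell."""

    def __init__(self, max_modes=3, alpha=0.05, init_var=0.25,
                 match_sigmas=2.5, bg_weight=0.3):
        self.max_modes = max_modes
        self.alpha = alpha                # learning rate
        self.init_var = init_var          # variance given to a new mode
        self.match_sigmas = match_sigmas  # match radius in standard deviations
        self.bg_weight = bg_weight        # minimum weight to count as background
        self.modes = []                   # each mode is [weight, mean, variance]

    def update(self, r):
        """Fold range r into the mixture; return True if r looks like background."""
        matched = None
        for mode in self.modes:
            if abs(r - mode[1]) <= self.match_sigmas * math.sqrt(mode[2]):
                matched = mode
                break
        # Decide before updating, so a new object cannot vouch for itself.
        is_background = matched is not None and matched[0] >= self.bg_weight
        for mode in self.modes:
            mode[0] *= 1.0 - self.alpha
        if matched is not None:
            diff = r - matched[1]
            matched[0] += self.alpha
            matched[1] += self.alpha * diff
            matched[2] = max((1.0 - self.alpha) * matched[2]
                             + self.alpha * diff * diff, 1e-4)
        else:
            if len(self.modes) >= self.max_modes:
                self.modes.remove(min(self.modes, key=lambda m: m[0]))
            self.modes.append([self.alpha, r, self.init_var])
        total = sum(m[0] for m in self.modes)
        for mode in self.modes:
            mode[0] /= total
        return is_background


grid = {}  # sparse stand-in for the elevation-azimuth tensor


def classify(point):
    row, col, rng = cell_index(*point)
    return grid.setdefault((row, col), CellMixture()).update(rng)


wall = (10.0, 0.0, 0.0)            # static return along one beam direction
for _ in range(20):
    classify(wall)
car = (4.0, 0.0, 0.0)              # shorter range in the same cell
car_is_background = classify(car)
wall_is_background = classify(wall)
print(car_is_background, wall_is_background)
```

Keeping several modes per cell is what lets a background with more than one plausible range (for example, swaying vegetation in front of a wall) stay classified as background, while a newly arrived vehicle starts with too little weight to qualify.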

Roadside Lidar Vehicle Detection and Tracking Using Range And Intensity Background Subtraction

Jan 14, 2022

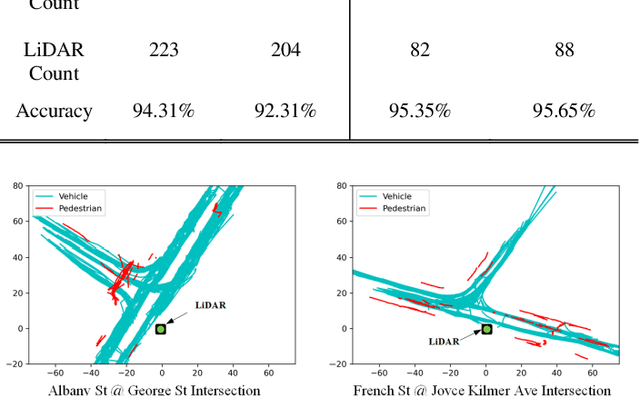

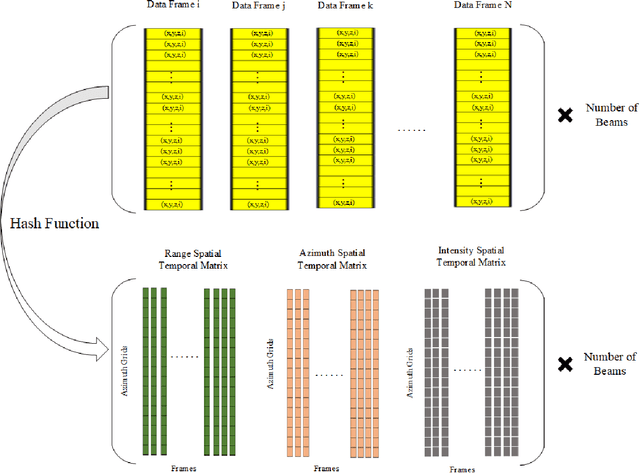

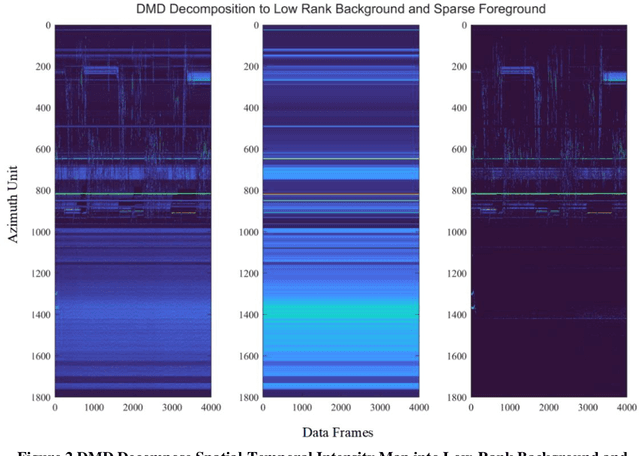

Abstract: In this paper, we present a solution for roadside LiDAR object detection using a combination of two unsupervised learning algorithms. The 3D point cloud data are first converted into spherical coordinates and filled into the azimuth grid matrix using a hash function. After that, the raw LiDAR data are rearranged into spatial-temporal data structures to store the information of range, azimuth, and intensity. The Dynamic Mode Decomposition method is applied to decompose the point cloud data into low-rank backgrounds and sparse foregrounds based on intensity channel pattern recognition. The Triangle Algorithm automatically finds the dividing value to separate the moving targets from the static background according to range information. After intensity and range background subtraction, the foreground moving objects are detected using a density-based detector and encoded into the state-space model for tracking. The output of the proposed model includes vehicle trajectories that can enable many mobility and safety applications. The method was validated against a commercial traffic data collection platform and demonstrated to be an efficient and reliable solution for infrastructure LiDAR object detection. In contrast to previous methods that operate directly on the scattered and discrete point clouds, the proposed method can establish a less sophisticated linear relationship among the 3D measurement data, which captures the spatial-temporal structure that we often desire.
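The triangle method named in the abstract picks a threshold from a histogram with one dominant peak and a long tail. A minimal sketch is given below, assuming the line is drawn from the peak to the last non-empty bin of the longer tail; the function name and the sample histogram are hypothetical, and how the paper builds its range histogram is not specified here.

```python
def triangle_threshold(hist):
    """Return the bin index chosen by the triangle method for a 1-D histogram."""
    peak = max(range(len(hist)), key=lambda i: hist[i])
    nonzero = [i for i, h in enumerate(hist) if h > 0]
    first, last = nonzero[0], nonzero[-1]
    # Work on the longer tail, where the minority class is expected to sit.
    tail = last if (last - peak) >= (peak - first) else first
    if tail == peak:
        return peak
    step = 1 if tail > peak else -1
    dx = tail - peak
    dy = hist[tail] - hist[peak]
    best_bin, best_dist = peak, 0.0
    for i in range(peak, tail + step, step):
        # Distance from (i, hist[i]) to the peak-to-tail line, up to a
        # constant factor that does not affect which bin is largest.
        dist = abs(dy * (i - peak) - dx * (hist[i] - hist[peak]))
        if dist > best_dist:
            best_bin, best_dist = i, dist
    return best_bin


# Hypothetical histogram: a dominant background peak and a small foreground bump.
hist = [2, 50, 30, 8, 3, 2, 1, 1, 1, 4, 6, 3]
threshold_bin = triangle_threshold(hist)
print(threshold_bin)  # 4 for this histogram
```

Because the rule needs no tuned parameter beyond the histogram itself, it suits the unsupervised setting the abstract describes.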

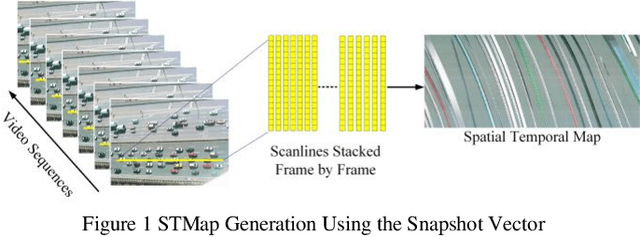

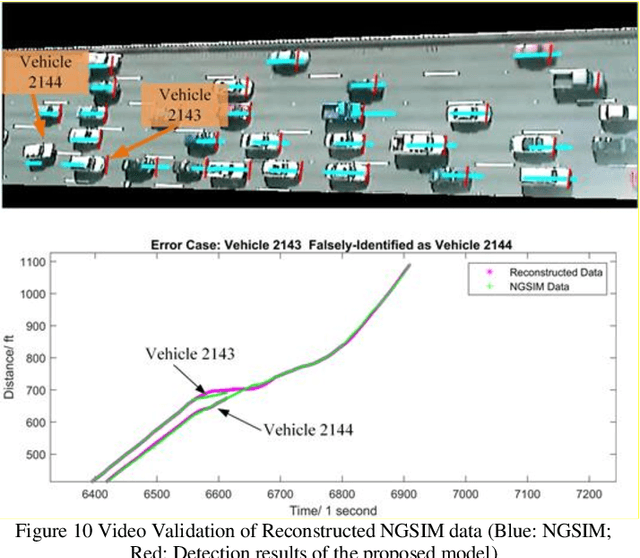

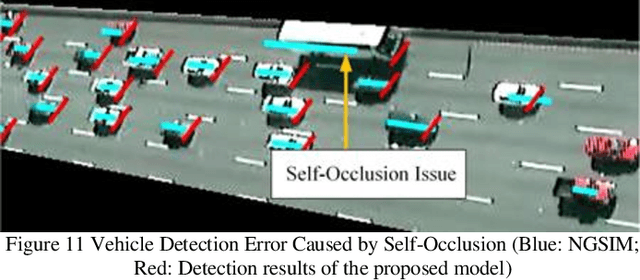

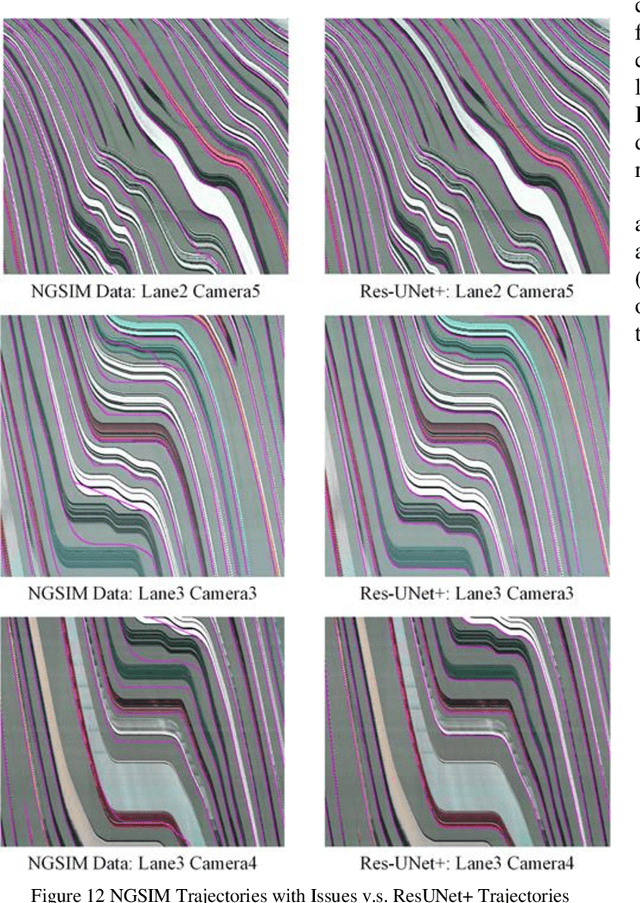

Spatial-Temporal Map Vehicle Trajectory Detection Using Dynamic Mode Decomposition and Res-UNet+ Neural Networks

Jan 13, 2022

Abstract: This paper presents a machine-learning-enhanced longitudinal scanline method to extract vehicle trajectories from high-angle traffic cameras. The Dynamic Mode Decomposition (DMD) method is applied to extract vehicle strands by decomposing the Spatial-Temporal Map (STMap) into a sparse foreground and a low-rank background. A deep neural network named Res-UNet+ was designed for the semantic segmentation task by adapting two prevalent deep learning architectures. The Res-UNet+ neural networks significantly improve the performance of STMap-based vehicle detection, and the DMD model provides many interesting insights for understanding the evolution of the underlying spatial-temporal structures preserved by the STMap. The model outputs were compared with the previous image processing model and mainstream semantic segmentation deep neural networks. After a thorough evaluation, the model proved to be accurate and robust against many challenging factors. Last but not least, this paper addresses many quality issues found in NGSIM trajectory data. The cleaned high-quality trajectory data are published to support future theoretical and modeling research on traffic flow and microscopic vehicle control. This method is a reliable solution for video-based trajectory extraction and has wide applicability.
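To make the scanline idea concrete, the sketch below builds a small spatial-temporal map by sampling the same line of pixels from each video frame and stacking the samples over time, so that a moving vehicle traces a slanted strand. The frame layout, the axis convention (space on rows, time on columns), and all names are assumptions for illustration; the DMD and Res-UNet+ stages are not shown.

```python
def build_stmap(frames, scanline):
    """Stack scanline pixels from every frame into a space-time map.

    frames:   list of 2-D grayscale grids (each a list of rows)
    scanline: list of (row, col) pixel coordinates along a lane
    Returns stmap[s][t], the intensity at scanline position s in frame t.
    """
    return [[frame[r][c] for frame in frames] for (r, c) in scanline]


# Synthetic one-row video: a bright "vehicle" pixel moves one column per frame.
width, n_frames = 8, 8
frames = []
for t in range(n_frames):
    row = [0] * width
    row[t] = 255
    frames.append([row])

scanline = [(0, c) for c in range(width)]
stmap = build_stmap(frames, scanline)

# Position of the bright pixel in each frame, read back from the STMap.
strand = [s for t in range(n_frames) for s in range(width)
          if stmap[s][t] == 255]
print(strand)
```

The slope of the strand in the map corresponds to the vehicle's speed along the scanline, which is why segmenting strands yields trajectories.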
