Peiying Zhang

DuetSVG: Unified Multimodal SVG Generation with Internal Visual Guidance

Dec 11, 2025

Abstract:Recent vision-language model (VLM)-based approaches have achieved impressive results on SVG generation. However, because they generate only text and lack visual signals during decoding, they often struggle with complex semantics and fail to produce visually appealing or geometrically coherent SVGs. We introduce DuetSVG, a unified multimodal model that jointly generates image tokens and corresponding SVG tokens in an end-to-end manner. DuetSVG is trained on both image and SVG datasets. At inference, we apply a novel test-time scaling strategy that leverages the model's native visual predictions as guidance to improve SVG decoding quality. Extensive experiments show that our method outperforms existing methods, producing visually faithful, semantically aligned, and syntactically clean SVGs across a wide range of applications.

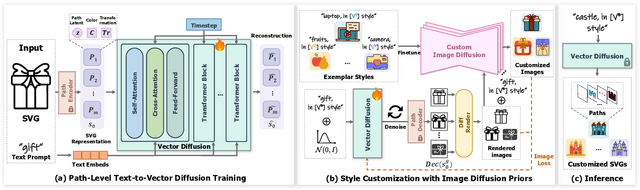

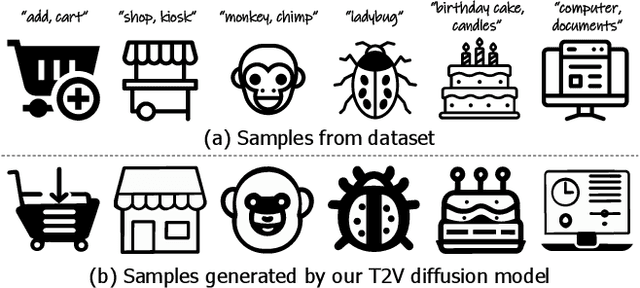

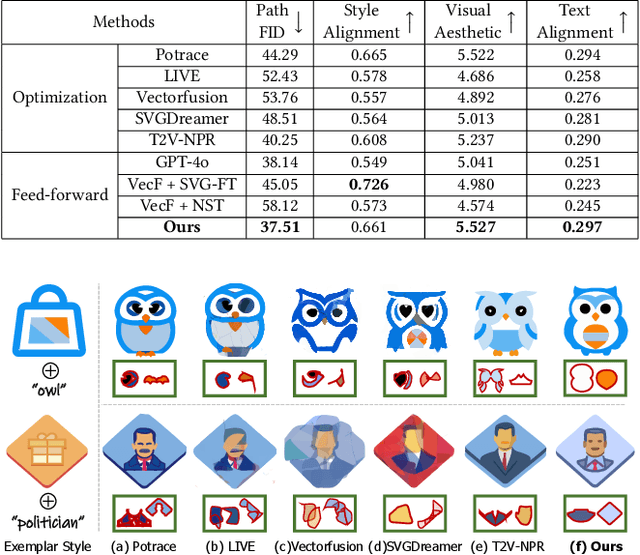

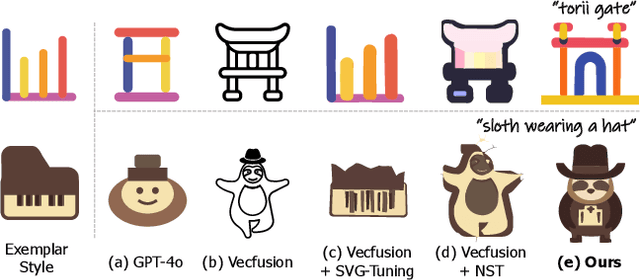

Style Customization of Text-to-Vector Generation with Image Diffusion Priors

May 15, 2025

Abstract:Scalable Vector Graphics (SVGs) are highly favored by designers due to their resolution independence and well-organized layer structure. Although existing text-to-vector (T2V) generation methods can create SVGs from text prompts, they often overlook an important need in practical applications: style customization, which is vital for producing a collection of vector graphics with consistent visual appearance and coherent aesthetics. Extending existing T2V methods for style customization poses certain challenges. Optimization-based T2V models can utilize the priors of text-to-image (T2I) models for customization, but struggle with maintaining structural regularity. On the other hand, feed-forward T2V models can ensure structural regularity, yet they encounter difficulties in disentangling content and style due to limited SVG training data. To address these challenges, we propose a novel two-stage style customization pipeline for SVG generation, making use of the advantages of both feed-forward T2V models and T2I image priors. In the first stage, we train a T2V diffusion model with a path-level representation to ensure the structural regularity of SVGs while preserving diverse expressive capabilities. In the second stage, we customize the T2V diffusion model to different styles by distilling customized T2I models. By integrating these techniques, our pipeline can generate high-quality and diverse SVGs in custom styles based on text prompts in an efficient feed-forward manner. The effectiveness of our method has been validated through extensive experiments. The project page is https://customsvg.github.io.

Text-to-Vector Generation with Neural Path Representation

May 16, 2024

Abstract:Vector graphics are widely used in digital art and highly favored by designers due to their scalability and layer-wise properties. However, the process of creating and editing vector graphics requires creativity and design expertise, making it a time-consuming task. Recent advancements in text-to-vector (T2V) generation have aimed to make this process more accessible. However, existing T2V methods directly optimize control points of vector graphics paths, often resulting in intersecting or jagged paths due to the lack of geometry constraints. To overcome these limitations, we propose a novel neural path representation by designing a dual-branch Variational Autoencoder (VAE) that learns the path latent space from both sequence and image modalities. By optimizing the combination of neural paths, we can incorporate geometric constraints while preserving expressivity in generated SVGs. Furthermore, we introduce a two-stage path optimization method to improve the visual and topological quality of generated SVGs. In the first stage, a pre-trained text-to-image diffusion model guides the initial generation of complex vector graphics through the Variational Score Distillation (VSD) process. In the second stage, we refine the graphics using a layer-wise image vectorization strategy to achieve clearer elements and structure. We demonstrate the effectiveness of our method through extensive experiments and showcase various applications. The project page is https://intchous.github.io/T2V-NPR.

DNFS-VNE: Deep Neuro-Fuzzy System-Driven Virtual Network Embedding Algorithm

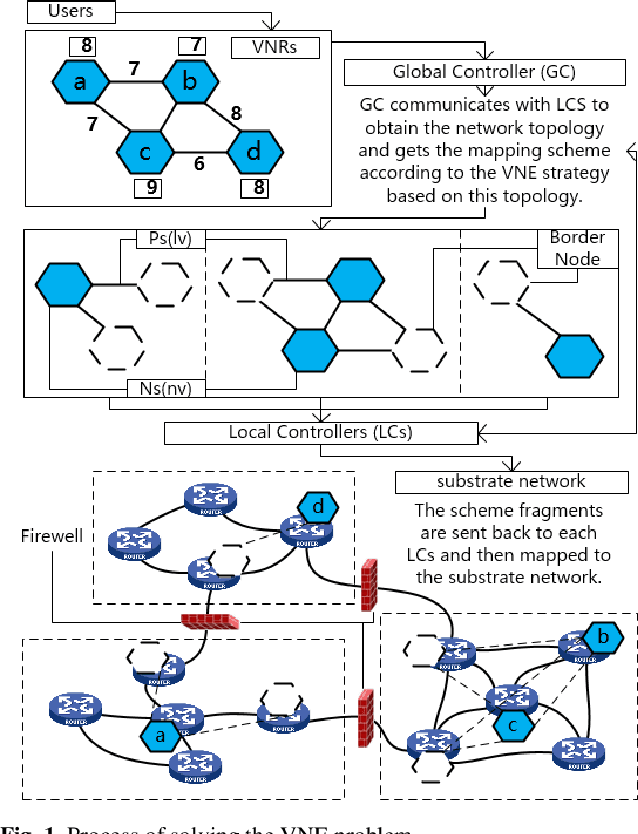

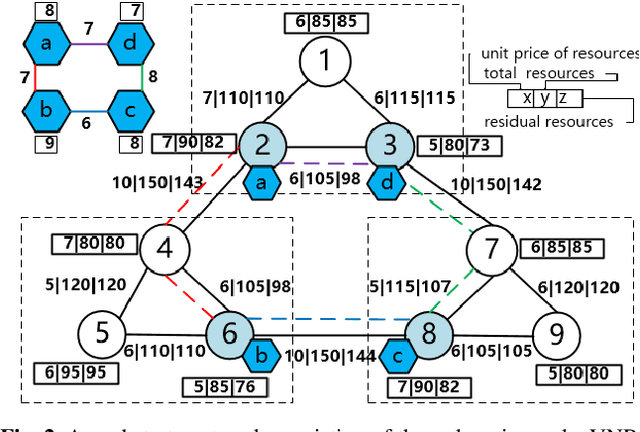

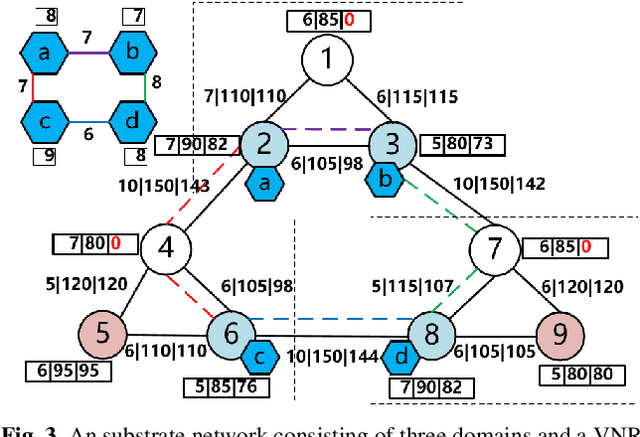

Oct 13, 2023

Abstract:By decoupling substrate resources, network virtualization (NV) is a promising solution for meeting diverse demands and ensuring differentiated quality of service (QoS). In particular, virtual network embedding (VNE) is a critical enabling technology that enhances the flexibility and scalability of network deployment by addressing the coupling of Internet processes and services. However, in existing works, the black-box nature of deep neural networks (DNNs) limits the analysis, development, and improvement of systems. Recently, interpretable deep learning (DL), represented by deep neuro-fuzzy systems (DNFS) combined with fuzzy inference, has shown promising interpretability to further exploit the hidden value in the data. Motivated by this, we propose a DNFS-based VNE algorithm that aims to provide an interpretable NV scheme. Specifically, data-driven convolutional neural networks (CNNs) are used as fuzzy implication operators to compute the embedding probabilities of candidate substrate nodes through entailment operations. The identified fuzzy rule patterns are cached into the weights by forward computation and gradient back-propagation (BP). In addition, the fuzzy rule base is constructed based on Mamdani-type linguistic rules using linguistic labels. Finally, the effectiveness of evaluation indicators and fuzzy rules is verified by experiments.

Text-Guided Vector Graphics Customization

Sep 21, 2023

Abstract:Vector graphics are widely used in digital art and valued by designers for their scalability and layer-wise topological properties. However, the creation and editing of vector graphics necessitate creativity and design expertise, leading to a time-consuming process. In this paper, we propose a novel pipeline that generates high-quality customized vector graphics based on textual prompts while preserving the properties and layer-wise information of a given exemplar SVG. Our method harnesses the capabilities of large pre-trained text-to-image models. By fine-tuning the cross-attention layers of the model, we generate customized raster images guided by textual prompts. To initialize the SVG, we introduce a semantic-based path alignment method that preserves and transforms crucial paths from the exemplar SVG. Additionally, we optimize path parameters using both image-level and vector-level losses, ensuring smooth shape deformation while aligning with the customized raster image. We extensively evaluate our method using multiple metrics from vector-level, image-level, and text-level perspectives. The evaluation results demonstrate the effectiveness of our pipeline in generating diverse customizations of vector graphics with exceptional quality. The project page is https://intchous.github.io/SVGCustomization.

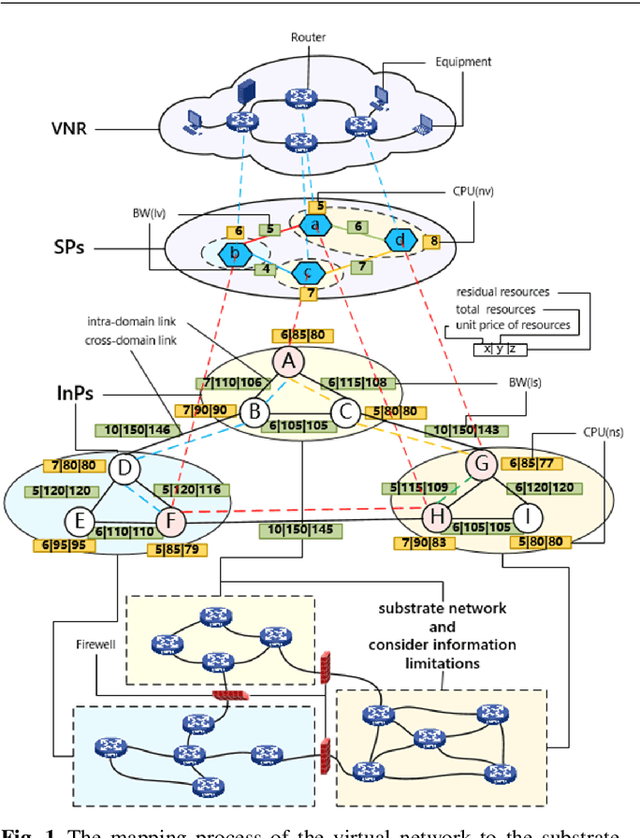

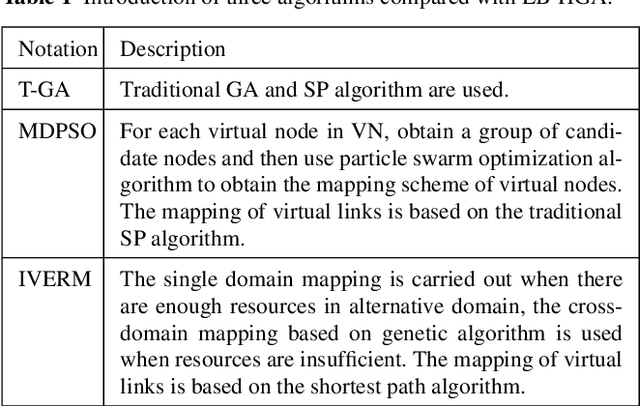

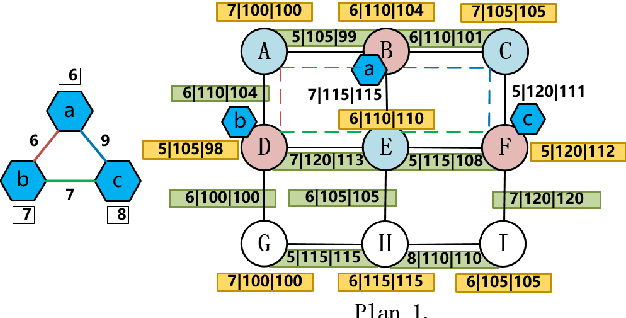

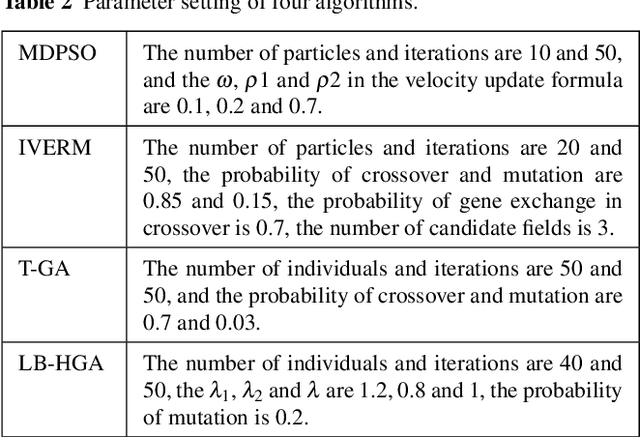

A Multi-Domain VNE Algorithm based on Load Balancing in the IoT networks

Feb 07, 2022

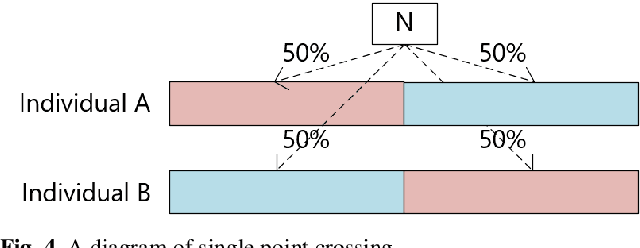

Abstract:Virtual network embedding is one of the key problems of network virtualization. Since virtual network mapping is an NP-hard problem, much research has focused on the genetic algorithm, the most representative evolutionary algorithm. However, the parameter setting in the traditional method depends too heavily on experience, and its low flexibility makes it unable to adapt to increasingly complex network environments. In addition, link-mapping strategies that do not consider load balancing can easily cause link blocking in high-traffic environments. In the IoT environment involving medical, disaster relief, life support and other equipment, network performance and stability are particularly important. Therefore, how to provide a more flexible virtual network mapping service in a heterogeneous network environment with large traffic is an urgent problem. To address this problem, a virtual network mapping strategy based on a hybrid genetic algorithm is proposed. This strategy uses a dynamically calculated cross-probability and a pheromone-based mutation gene selection strategy to improve the flexibility of the algorithm. In addition, a weight update mechanism based on load balancing is introduced to reduce the probability of mapping failure while balancing the load. Simulation results show that the proposed method performs well in a number of performance metrics including mapping average quotation, link load balancing, mapping cost-benefit ratio, acceptance rate and running time.

VNE Strategy based on Chaotic Hybrid Flower Pollination Algorithm Considering Multi-criteria Decision Making

Feb 07, 2022

Abstract:With the development of science and technology and the need for Multi-Criteria Decision-Making (MCDM), the optimization problem to be solved becomes extremely complex. The theoretically accurate and optimal solutions are often difficult to obtain. Therefore, meta-heuristic algorithms based on multi-point search have received extensive attention. Aiming at these problems, the design strategy of hybrid flower pollination algorithm for Virtual Network Embedding (VNE) problem is discussed. Combining the advantages of the Genetic Algorithm (GA) and FPA, the algorithm is optimized for the characteristics of discrete optimization problems. The cross operation is used to replace the cross-pollination operation to complete the global search and replace the mutation operation with self-pollination operation to enhance the ability of local search. Moreover, a life cycle mechanism is introduced as a complement to the traditional fitness-based selection strategy to avoid premature convergence. A chaotic optimization strategy is introduced to replace the random sequence-guided crossover process to strengthen the global search capability and reduce the probability of producing invalid individuals.

A Reliable Data-transmission Mechanism using Blockchain in Edge Computing Scenarios

Feb 07, 2022

Abstract:With the advent of the Internet of things (IoT) era, more and more devices are connected to the IoT. Under the traditional cloud-thing centralized management mode, the transmission of massive data is facing many difficulties, and the reliability of data is difficult to be guaranteed. As emerging technologies, blockchain technology and edge computing (EC) technology have attracted the attention of academia in improving the reliability, privacy and invariability of IoT technology. In this paper, we combine the characteristics of the EC and blockchain to ensure the reliability of data transmission in the IoT. First of all, we propose a data transmission mechanism based on blockchain, which uses the distributed architecture of blockchain to ensure that the data is not tampered with; secondly, we introduce the three-tier structure in the architecture in turn; finally, we introduce the four working steps of the mechanism, which are similar to the working mechanism of blockchain. In the end, the simulation results show that the proposed scheme can ensure the reliability of data transmission in the Internet of things to a great extent.

Semantic Similarity Computing Model Based on Multi Model Fine-Grained Nonlinear Fusion

Feb 05, 2022

Abstract:Natural language processing (NLP) has achieved excellent performance in many fields, including semantic understanding, automatic summarization, image recognition and so on. However, most of the neural network models for NLP extract the text in a fine-grained way, which is not conducive to grasping the meaning of the text from a global perspective. To alleviate the problem, the combination of the traditional statistical method and deep learning model as well as a novel model based on multi model nonlinear fusion are proposed in this paper. The model uses the Jaccard coefficient based on part of speech, Term Frequency-Inverse Document Frequency (TF-IDF) and the word2vec-CNN algorithm to measure the similarity of sentences respectively. According to the calculation accuracy of each model, the normalized weight coefficient is obtained and the calculation results are compared. The weighted vector is input into the fully connected neural network to give the final classification results. As a result, the statistical sentence similarity evaluation algorithm reduces the granularity of feature extraction, so it can grasp the sentence features globally. Experimental results show that the matching of sentence similarity calculation method based on multi model nonlinear fusion is 84%, and the F1 value of the model is 75%.
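As a rough illustration of two ingredients named in the abstract above, the sketch below computes a Jaccard coefficient between two sentences and turns per-model accuracies into normalized weights. It is not the paper's implementation: the function names (`jaccard`, `normalize_weights`, `weighted_vector`), the use of plain word tokens instead of part-of-speech filtered tokens, and the example accuracy values are all assumptions made for illustration, and the fully connected network that consumes the weighted vector is omitted.

```python
def jaccard(tokens_a, tokens_b):
    """Jaccard coefficient |A ∩ B| / |A ∪ B| over two token collections."""
    a, b = set(tokens_a), set(tokens_b)
    if not a and not b:
        return 1.0  # convention chosen here: two empty sentences are identical
    return len(a & b) / len(a | b)


def normalize_weights(accuracies):
    """Scale per-model accuracies so the resulting weights sum to 1."""
    total = sum(accuracies)
    return [acc / total for acc in accuracies]


def weighted_vector(scores, weights):
    """Element-wise product of per-model similarity scores and their weights."""
    return [s * w for s, w in zip(scores, weights)]


if __name__ == "__main__":
    s1 = "the cat sat on the mat".split()
    s2 = "the cat lay on the rug".split()
    sim = jaccard(s1, s2)  # 3 shared words out of 7 distinct words

    # Hypothetical accuracies for three similarity models (illustrative only).
    weights = normalize_weights([0.6, 0.7, 0.7])

    # Hypothetical scores from the other two models (illustrative only).
    print(weighted_vector([sim, 0.5, 0.8], weights))
```

In the described model, a vector like the one printed here would be the input to a classifier; how the paper builds and trains that classifier is not specified in the abstract.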

A multi-domain virtual network embedding algorithm with delay prediction

Feb 03, 2022

Abstract:Virtual network embedding (VNE) is a crucial part of network virtualization (NV), which aims to map the virtual networks (VNs) to a shared substrate network (SN). With the emergence of various delay-sensitive applications, how to improve the delay performance of the system has become a hot topic in academic circles. Based on extensive research, we propose a multi-domain virtual network embedding algorithm based on delay prediction (DP-VNE). First, the candidate physical nodes are selected by estimating the delay of virtual requests. Then, the particle swarm optimization (PSO) algorithm is used to optimize the mapping process so as to reduce the delay of the system. The simulation results show that, compared with the other three advanced algorithms, the proposed algorithm can significantly reduce the system delay while keeping other indicators unaffected.
