Pedro Neto

Classification of assembly tasks combining multiple primitive actions using Transformers and xLSTMs

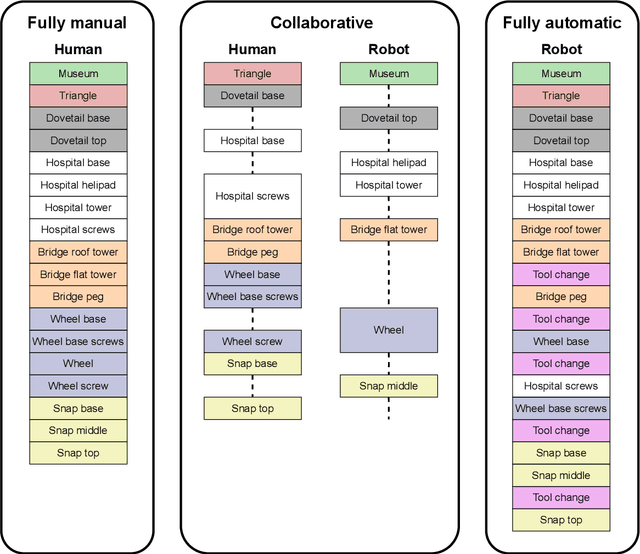

May 23, 2025

Abstract: The classification of human-performed assembly tasks is essential in collaborative robotics to ensure safety, anticipate robot actions, and facilitate robot learning. However, reliable classification is challenging when segmenting tasks into smaller primitive actions is infeasible, which requires classifying long assembly tasks that encompass multiple primitive actions. In this study, we propose classifying long sequential assembly tasks based on hand landmark coordinates and compare the performance of two well-established classifiers, LSTM and Transformer, with that of a recent model, xLSTM. We used the HRC scenario proposed in the CT benchmark, which includes long assembly tasks that combine actions such as insertions, screw fastenings, and snap fittings. Testing was conducted using sequences gathered from both the human operator who performed the training sequences and three new operators. The testing results on real-padded sequences for the LSTM, Transformer, and xLSTM models were 72.9%, 95.0% and 93.2% for the training operator, and 43.5%, 54.3% and 60.8% for the new operators, respectively. The LSTM model clearly underperformed compared to the other two approaches. As expected, both the Transformer and xLSTM achieved satisfactory results for the operator they were trained on, though the xLSTM model generalized better to new operators. The results show that, for this type of classification, the xLSTM model offers a slight edge over Transformers.
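Long assembly sequences differ in length, so they are usually brought to a common length before being batched for a sequence classifier. The following is a minimal sketch of generic right-padding with a validity mask, assuming each frame is a flat list of hand landmark coordinates. The function name `pad_sequences`, the zero pad value, and the mask convention are illustrative assumptions; this is not necessarily the "real-padded" scheme used in the paper.

```python
from typing import List, Tuple

Frame = List[float]  # flattened landmark coordinates for one frame (assumed layout)


def pad_sequences(
    sequences: List[List[Frame]], pad_value: float = 0.0
) -> Tuple[List[List[Frame]], List[List[int]]]:
    """Right-pad non-empty sequences to the longest length.

    Returns the padded sequences and a mask (1 = real frame, 0 = padding).
    """
    if not sequences:
        return [], []
    n_features = len(sequences[0][0])
    max_len = max(len(seq) for seq in sequences)
    padded, mask = [], []
    for seq in sequences:
        n_pad = max_len - len(seq)
        filler = [[pad_value] * n_features for _ in range(n_pad)]
        padded.append([list(frame) for frame in seq] + filler)
        mask.append([1] * len(seq) + [0] * n_pad)
    return padded, mask
```

A classifier can use the mask to ignore padded frames, for example as an attention mask in a Transformer.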

Enhancing Physical Human-Robot Interaction: Recognizing Digits via Intrinsic Robot Tactile Sensing

Mar 31, 2025

Abstract: Physical human-robot interaction (pHRI) remains a key challenge for achieving intuitive and safe interaction with robots. Current advancements often rely on external tactile sensors as an interface, which increases the complexity of robotic systems. In this study, we leverage the intrinsic tactile sensing capabilities of collaborative robots to recognize digits drawn by humans on an uninstrumented touchpad mounted to the robot's flange. We propose a dataset of robot joint torque signals along with corresponding end-effector (EEF) forces and moments, captured from the robot's integrated torque sensors in each joint, as users draw handwritten digits (0-9) on the touchpad. The pHRI-DIGI-TACT dataset was collected from different users to capture natural variations in handwriting. To enhance classification robustness, we developed a data augmentation technique to account for reversed and rotated digit inputs. A Bidirectional Long Short-Term Memory (Bi-LSTM) network, leveraging the spatiotemporal nature of the data, performs online digit classification with an overall accuracy of 94% across various test scenarios, including those involving users who did not participate in training the system. This methodology is implemented on a real robot in a fruit delivery task, demonstrating its potential to assist individuals in everyday life. Dataset and video demonstrations are available at: https://TS-Robotics.github.io/pHRI-DIGI/.
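The abstract does not describe how the augmentation works. As a rough sketch of one possible reading, "reversed" could mean a stroke drawn in the opposite direction (modelled here as time reversal) and "rotated" could mean a digit drawn at an angle on the touchpad (modelled here as a planar rotation of the in-plane force components). Both interpretations, the `(fx, fy)` sample layout, and the function names are assumptions made for illustration only.

```python
import math
from typing import List, Tuple

Sample = Tuple[float, float]  # hypothetical in-plane force pair (fx, fy) at one time step


def reverse_stroke(sequence: List[Sample]) -> List[Sample]:
    """Return the sequence in reversed time order (assumed 'reversed digit')."""
    return list(reversed(sequence))


def rotate_in_plane(sequence: List[Sample], angle_deg: float) -> List[Sample]:
    """Rotate every (fx, fy) pair counter-clockwise by angle_deg (assumed 'rotated digit')."""
    angle = math.radians(angle_deg)
    c, s = math.cos(angle), math.sin(angle)
    return [(c * fx - s * fy, s * fx + c * fy) for fx, fy in sequence]
```

Augmented copies generated this way would keep the label of the original sample.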

The untapped potential of electrically-driven phase transition actuators to power innovative soft robot designs

Nov 11, 2024

Abstract: In the quest for electrically-driven soft actuators, the focus has shifted away from liquid-gas phase transition, commonly associated with reduced strain rates and actuation delays, in favour of electrostatic and other electrothermal actuation methods. This has prevented the technology from capitalizing on its unique characteristics, particularly low-voltage operation, controllability, scalability, and ease of integration into robots. Here, we introduce a phase transition electric soft actuator capable of strain rates of over 16%/s and pressurization rates of 100 kPa/s, approximately one order of magnitude higher than previous attempts. Blocked forces exceeding 50 N were achieved while operating at voltages up to 24 V. We propose a method for selecting working fluids that allows for application-specific optimization, together with a nonlinear control approach that reduces both parasitic vibrations and control lag. We demonstrate the integration of this technology in soft robotic systems, including the first quadruped robot powered by liquid-gas phase transition.

Integrated Design and Fabrication of Pneumatic Soft Robot Actuators in a Single Casting Step

Jul 18, 2024

Abstract: Bio-inspired soft robots have already shown the ability to handle uncertainty and adapt to unstructured environments. However, their availability is partially restricted by time-consuming, costly and highly supervised design-fabrication processes, often based on resource-intensive iterative workflows. Here, we propose an integrated approach targeting the design and fabrication of pneumatic soft actuators in a single casting step. Molds and sacrificial water-soluble hollow cores are printed using fused filament fabrication (FFF). A heated water circuit accelerates the dissolution of the core's material and guarantees its complete removal from the actuator walls, while the actuator's mechanical operability is defined through finite element analysis (FEA). This enables the fabrication of actuators with non-uniform cross sections under minimal supervision, thereby reducing the number of iterations necessary during the design and fabrication processes. Three actuators capable of bending and linear motion were designed, fabricated, integrated and demonstrated as three different bio-inspired soft robots: an earthworm-inspired robot, a four-legged robot, and a robotic gripper. We demonstrate the availability, versatility and effectiveness of the proposed methods, contributing to accelerating the design and fabrication of soft robots. This study represents a step toward making soft robots more accessible at a lower cost.

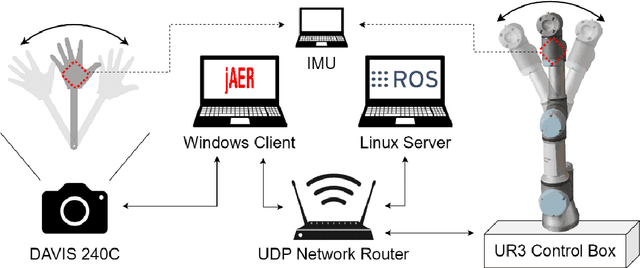

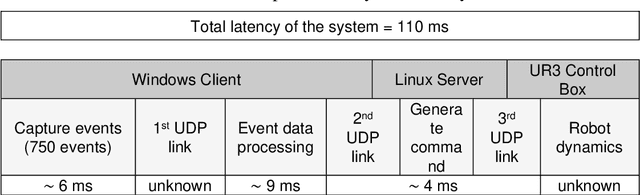

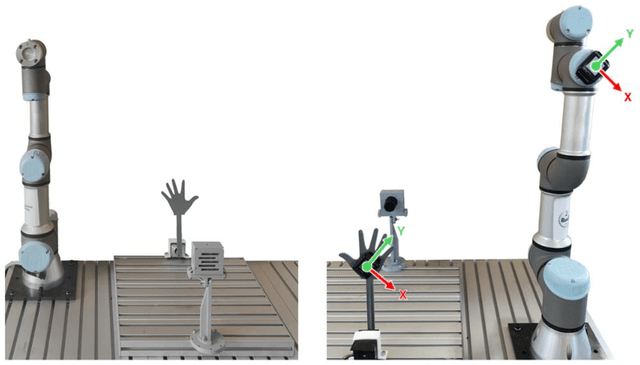

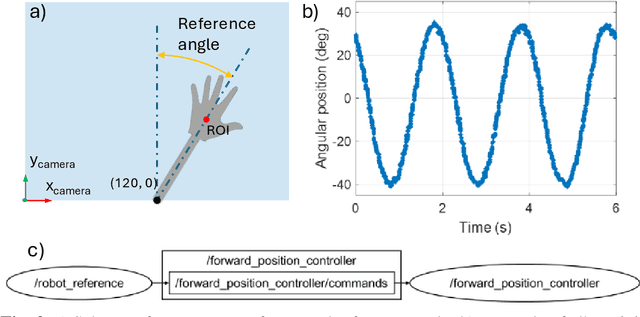

Demonstration of real-time event camera to collaborative robot communication

Jul 16, 2024

Abstract: Real-time robot actuation is one of the main challenges to overcome in human-robot interaction. Most visual sensors are either too slow or produce data too complex to provide meaningful, low-latency input to a robotic system. The data output of an event camera is high-frequency and extremely lightweight, with only 8 bytes per event. To evaluate the hypothesis that event cameras can serve as a data source for a real-time robotic system, the position of a waving hand is acquired from the event data and transmitted to a collaborative robot as a movement command. A total time delay of 110 ms was measured between the original movement and the robot movement, with much of the delay caused by the robot dynamics.
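The abstract does not state how the hand position is extracted from the event stream. One simple possibility, sketched below under stated assumptions, is to take the centroid of all events that fall inside a short time window, since a moving hand in front of a static camera generates most of the events. The `(timestamp_us, x, y, polarity)` tuple layout and the function name `event_centroid` are hypothetical.

```python
from typing import Iterable, Optional, Tuple

# Assumed event layout: (timestamp in microseconds, x pixel, y pixel, polarity)
Event = Tuple[int, int, int, int]


def event_centroid(
    events: Iterable[Event], t_start_us: int, window_us: int
) -> Optional[Tuple[float, float]]:
    """Mean (x, y) of events with t_start_us <= t < t_start_us + window_us.

    Returns None when no event falls inside the window.
    """
    sum_x = sum_y = count = 0
    t_end_us = t_start_us + window_us
    for t, x, y, _polarity in events:
        if t_start_us <= t < t_end_us:
            sum_x += x
            sum_y += y
            count += 1
    if count == 0:
        return None
    return sum_x / count, sum_y / count
```

The resulting pixel coordinate could then be mapped to a robot target position; that mapping depends on the camera and robot setup and is not shown here.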

Event-based dataset for the detection and classification of manufacturing assembly tasks

May 23, 2024

Abstract: The featured dataset, the Event-based Dataset of Assembly Tasks (EDAT24), showcases a selection of manufacturing primitive tasks (idle, pick, place, and screw), which are basic actions performed by human operators in any manufacturing assembly. The data were captured using a DAVIS240C event camera, an asynchronous vision sensor that registers events when changes in light intensity occur. Events are a lightweight data format for conveying visual information and are well-suited for real-time detection and analysis of human motion. Each manufacturing primitive has 100 recorded samples of DAVIS240C data, including events and greyscale frames, for a total of 400 samples. In the dataset, the user interacts with objects from the open-source CT-Benchmark in front of the static DAVIS event camera. All data are made available in raw form (.aedat) and in pre-processed form (.npy). Custom-built Python code is made available together with the dataset to help researchers add new manufacturing primitives or extend the dataset with more samples.

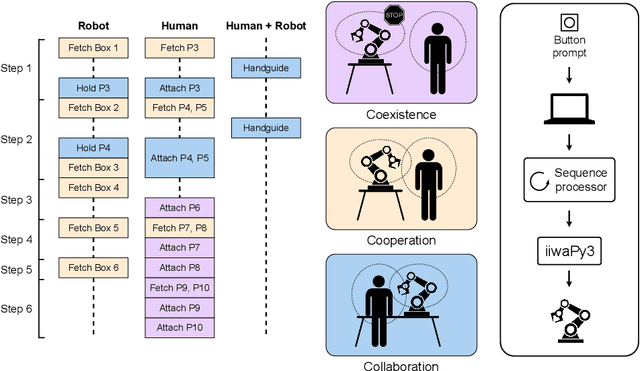

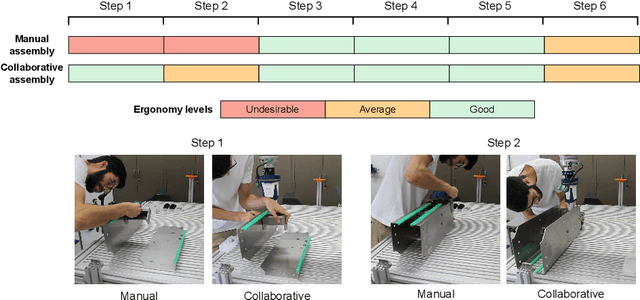

A Collaborative Robot-Assisted Manufacturing Assembly Process

Mar 08, 2024

Abstract: An effective human-robot collaborative process results in the reduction of the operator's workload, promoting a more efficient, productive, safer and less error-prone working environment. However, the implementation of collaborative robots in industry is still challenging. In this work, we compare manual and robot-assisted assembly processes to evaluate the effectiveness of collaborative robots while featuring different modes of operation (coexistence, cooperation and collaboration). Results indicate an improvement in ergonomic conditions and ease of execution without substantially compromising assembly time. Furthermore, the robot is intuitive to use and guides the user on the proper sequencing of the process.

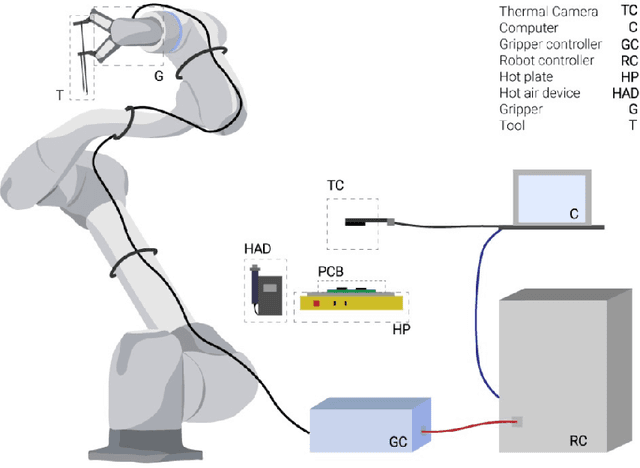

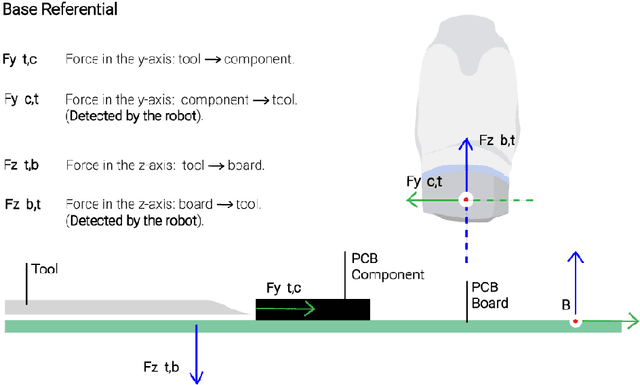

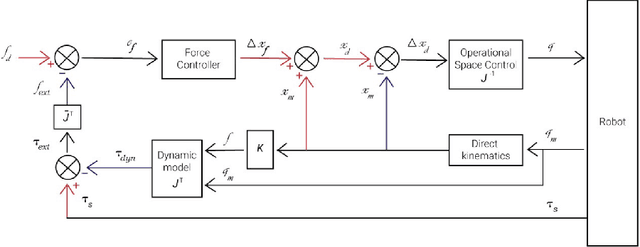

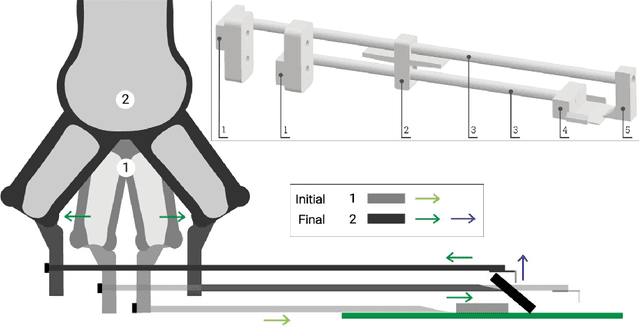

An in-Contact Robotic System for the Process of Desoldering PCB Components

Mar 08, 2024

Abstract: The disposal and recycling of electronic waste (e-waste) is a global challenge. The disassembly of components is a crucial step towards an efficient recycling process that avoids destructive methods. Although most disassembly work is still done manually due to the diversity and complexity of components, there is a growing interest in developing automated methods to improve efficiency and reduce labor costs. This study aims to robotize the desoldering and extraction of components from printed circuit boards (PCBs), with the goal of automating the process as much as possible. The proposed strategy consists of several phases, including the controlled contact of the robotic tool with the PCB components. A specific tool was developed to apply a controlled force against the PCB component, removing it from the board. The results demonstrate that it is feasible to remove the PCB components with a high success rate (approximately 100% for the bigger PCB components).

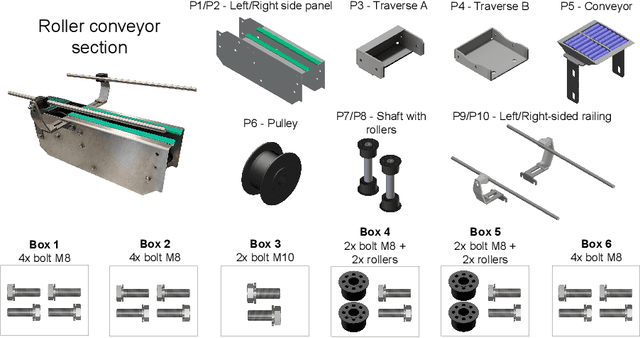

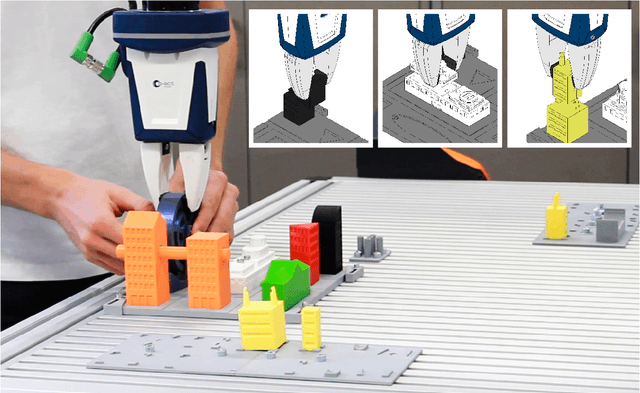

Benchmarking human-robot collaborative assembly tasks

Feb 01, 2024

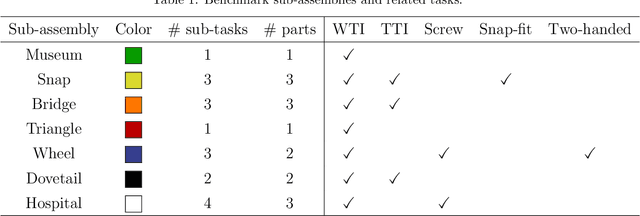

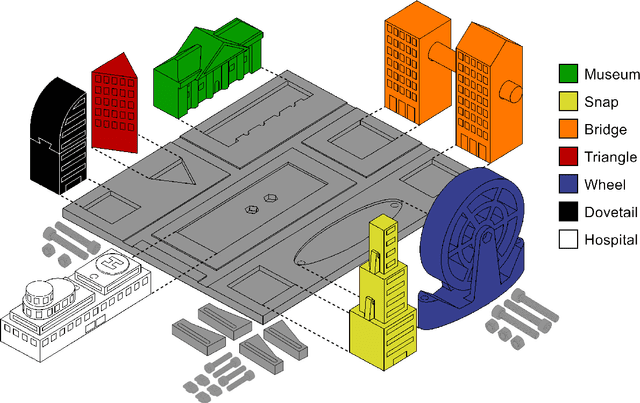

Abstract: Manufacturing assembly tasks can vary in complexity and level of automation. Yet, achieving full automation can be challenging and inefficient, particularly due to the complexity of certain assembly operations. Human-robot collaborative work, leveraging the strengths of human labor alongside the capabilities of robots, can be a solution for enhancing efficiency. This paper introduces the CT benchmark, a benchmark and model set designed to facilitate the testing and evaluation of human-robot collaborative assembly scenarios. It was designed to compare manual and automatic processes using metrics such as the assembly time and human workload. The components of the model set can be assembled through the most common assembly tasks, each with varying levels of difficulty. The CT benchmark was designed with a focus on its applicability in human-robot collaborative environments, with the aim of ensuring the reproducibility and replicability of experiments. Experiments were carried out to assess assembly performance in three different setups (manual, automatic and collaborative), measuring metrics related to the assembly time and the workload on human operators. The results suggest that the collaborative approach takes longer than fully manual assembly, with an increase of 70.8% in assembly time. However, users reported a lower overall workload, as well as reduced mental demand, physical demand, and effort according to the NASA-TLX questionnaire.

A Q-learning approach to the continuous control problem of robot inverted pendulum balancing

Dec 05, 2023

Abstract: This study evaluates the application of a discrete action space reinforcement learning method (Q-learning) to the continuous control problem of robot inverted pendulum balancing. To speed up the learning process and to overcome technical difficulties related to learning directly on the real robotic system, the learning phase is performed in a simulation environment. A mathematical model of the system dynamics is implemented, deduced by curve fitting on data acquired from the real system. The proposed approach proved feasible, as shown by its application on a real-world robot that learned to balance an inverted pendulum. This study also reinforces and demonstrates the importance of an accurate representation of the physical world in simulation to achieve a more efficient implementation of reinforcement learning algorithms in the real world, even when using a discrete action space algorithm to control a continuous action.
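To illustrate how a discrete-action method can be applied to a continuous control problem, the sketch below shows textbook tabular Q-learning with a discretized pendulum state. The state variables, bin sizes, action set, and hyperparameters are illustrative assumptions, not values taken from the paper.

```python
import random
from collections import defaultdict

# Hypothetical discrete commands, e.g. move left, hold, move right
ACTIONS = (-1.0, 0.0, 1.0)


def discretize(angle_rad, angular_vel, angle_bin=0.05, vel_bin=0.5):
    """Map a continuous (angle, angular velocity) pair to integer bin indices."""
    return (round(angle_rad / angle_bin), round(angular_vel / vel_bin))


def choose_action(q, state, epsilon, rng):
    """Epsilon-greedy selection; returns an index into ACTIONS."""
    if rng.random() < epsilon:
        return rng.randrange(len(ACTIONS))
    values = [q[(state, a)] for a in range(len(ACTIONS))]
    return values.index(max(values))


def q_update(q, state, action, reward, next_state, alpha=0.1, gamma=0.99):
    """Standard Q-learning update: Q += alpha * (r + gamma * max Q' - Q)."""
    best_next = max(q[(next_state, a)] for a in range(len(ACTIONS)))
    q[(state, action)] += alpha * (reward + gamma * best_next - q[(state, action)])


# Q-table with a default value of 0.0 for unseen (state, action) pairs
q_table = defaultdict(float)
```

In a training loop, each simulation step would call `discretize` on the observed state, pick an action with `choose_action`, apply `ACTIONS[index]` to the simulated system, and then call `q_update` with the observed reward.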
