Paweł Liskowski

Large-Scale Aspect-Based Sentiment Analysis with Reasoning-Infused LLMs

Jan 07, 2026

Abstract: We introduce Arctic-ABSA, a collection of powerful models for real-life aspect-based sentiment analysis (ABSA). Our models are tailored to commercial needs, trained on a large corpus of public data alongside carefully generated synthetic data, resulting in a dataset 20 times larger than SemEval14. We extend typical ABSA models by expanding the number of sentiment classes from the standard three (positive, negative, neutral) to five, adding mixed and unknown classes, while also jointly predicting overall text sentiment and supporting multiple languages. We experiment with reasoning injection by fine-tuning on Chain-of-Thought (CoT) examples and introduce a novel reasoning pretraining technique for encoder-only models that significantly improves downstream fine-tuning and generalization. Our 395M-parameter encoder and 8B-parameter decoder achieve up to 10 percentage points higher accuracy than GPT-4o and Claude 3.5 Sonnet, while setting new state-of-the-art results on the SemEval14 benchmark. A single multilingual model maintains 87-91% accuracy across six languages without degrading English performance. We release ABSA-mix, a large-scale benchmark aggregating 17 public ABSA datasets across 92 domains.

Cortex AISQL: A Production SQL Engine for Unstructured Data

Nov 19, 2025

Abstract: Snowflake's Cortex AISQL is a production SQL engine that integrates native semantic operations directly into SQL. This integration allows users to write declarative queries that combine relational operations with semantic reasoning, enabling them to query both structured and unstructured data effortlessly. However, making semantic operations efficient at production scale poses fundamental challenges. Semantic operations are more expensive than traditional SQL operations, possess distinct latency and throughput characteristics, and their cost and selectivity are unknown during query compilation. Furthermore, existing query engines are not designed to optimize semantic operations. The AISQL query execution engine addresses these challenges through three novel techniques informed by production deployment data from Snowflake customers. First, AI-aware query optimization treats AI inference cost as a first-class optimization objective, reasoning about large language model (LLM) cost directly during query planning to achieve 2-8$\times$ speedups. Second, adaptive model cascades reduce inference costs by routing most rows through a fast proxy model while escalating uncertain cases to a powerful oracle model, achieving 2-6$\times$ speedups while maintaining 90-95% of oracle model quality. Third, semantic join query rewriting lowers the quadratic time complexity of join operations to linear through reformulation as multi-label classification tasks, achieving 15-70$\times$ speedups with often improved prediction quality. AISQL is deployed in production at Snowflake, where it powers diverse customer workloads across analytics, search, and content understanding.
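The model-cascade idea in this abstract can be illustrated with a small sketch. It is not the AISQL implementation: the function names, the fixed confidence threshold, and the keyword-based toy models are all assumptions made for illustration. A cheap proxy labels every row, and only rows where the proxy reports low confidence are escalated to the expensive oracle.

```python
from typing import Callable, List, Tuple

# Hypothetical model signature: text row -> (boolean label, confidence in [0, 1]).
Model = Callable[[str], Tuple[bool, float]]


def cascade_filter(
    rows: List[str], proxy: Model, oracle: Model, threshold: float = 0.8
) -> Tuple[List[bool], int]:
    """Label each row with the proxy, escalating low-confidence rows to the oracle.

    Returns the labels and the number of oracle calls that were needed.
    """
    labels: List[bool] = []
    oracle_calls = 0
    for row in rows:
        label, confidence = proxy(row)
        if confidence < threshold:
            # The proxy is unsure, so pay for the stronger model on this row only.
            label, _ = oracle(row)
            oracle_calls += 1
        labels.append(label)
    return labels, oracle_calls


def toy_proxy(row: str) -> Tuple[bool, float]:
    """Stand-in for a fast model: confident only when an obvious keyword appears."""
    text = row.lower()
    if "refund" in text:
        return True, 0.95
    if "thanks" in text:
        return False, 0.9
    return False, 0.5


def toy_oracle(row: str) -> Tuple[bool, float]:
    """Stand-in for a slow, accurate model."""
    text = row.lower()
    return ("refund" in text or "money back" in text), 1.0


rows = ["Please refund my order", "Thanks, all good", "I want my money back"]
labels, oracle_calls = cascade_filter(rows, toy_proxy, toy_oracle)
print(labels, oracle_calls)
```

In this toy run only the third row is escalated, so the oracle is called once for three rows. The abstract describes the cascade as adaptive, so a production system would presumably tune the threshold rather than fix it as done here.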

Arctic-TILT. Business Document Understanding at Sub-Billion Scale

Aug 08, 2024

Abstract: A vast portion of workloads employing LLMs involves answering questions grounded in PDF or scan content. We introduce Arctic-TILT, which achieves accuracy on par with models 1000$\times$ its size on these use cases. It can be fine-tuned and deployed on a single 24GB GPU, lowering operational costs while processing Visually Rich Documents with up to 400k tokens. The model establishes state-of-the-art results on seven diverse Document Understanding benchmarks and provides reliable confidence scores and quick inference, which are essential for processing files in large-scale or time-sensitive enterprise environments.

EvoTorch: Scalable Evolutionary Computation in Python

Feb 27, 2023

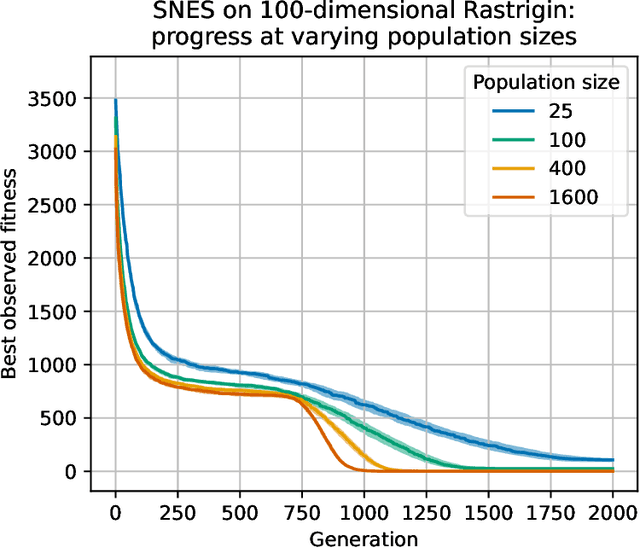

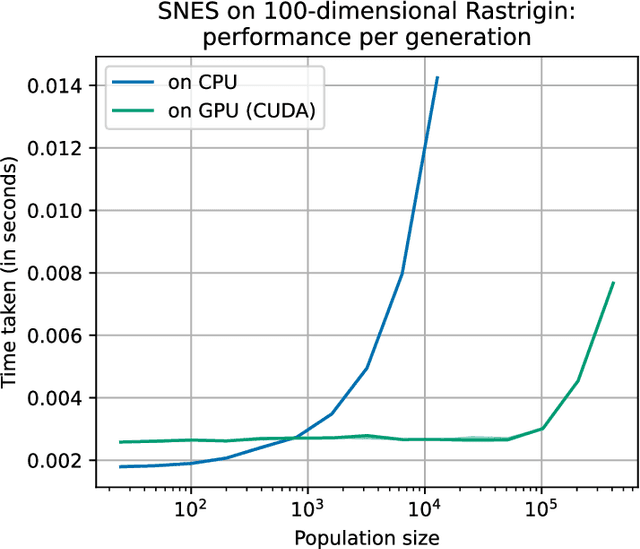

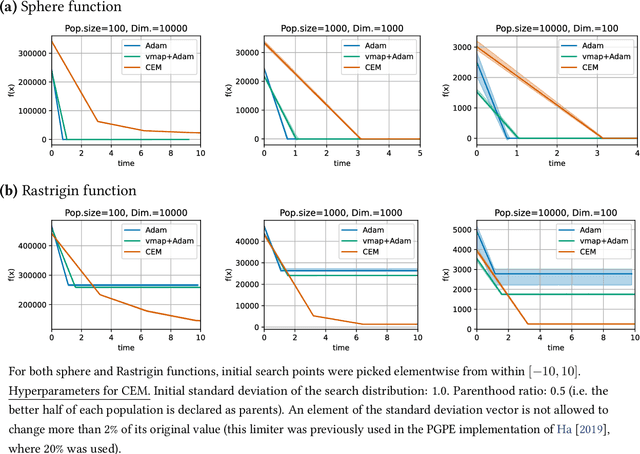

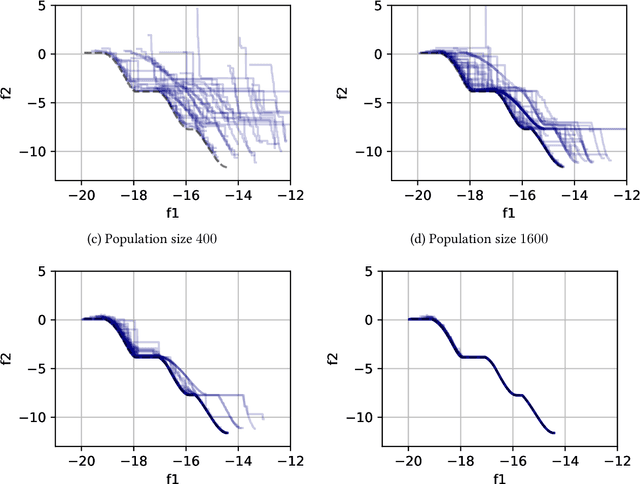

Abstract: Evolutionary computation is an important component within various fields such as artificial intelligence research, reinforcement learning, robotics, industrial automation and optimization, and engineering design. Given the increasing computational demands and dimensionality of modern optimization problems, the need for scalable, reusable, and practical evolutionary algorithm implementations has been growing. To address this need, we present EvoTorch: an evolutionary computation library designed to work with high-dimensional optimization problems, with GPU support and high parallelization capabilities. EvoTorch is based on and works seamlessly with the PyTorch library, and therefore allows users to define their optimization problems using a well-known API.

ClipUp: A Simple and Powerful Optimizer for Distribution-based Policy Evolution

Aug 05, 2020

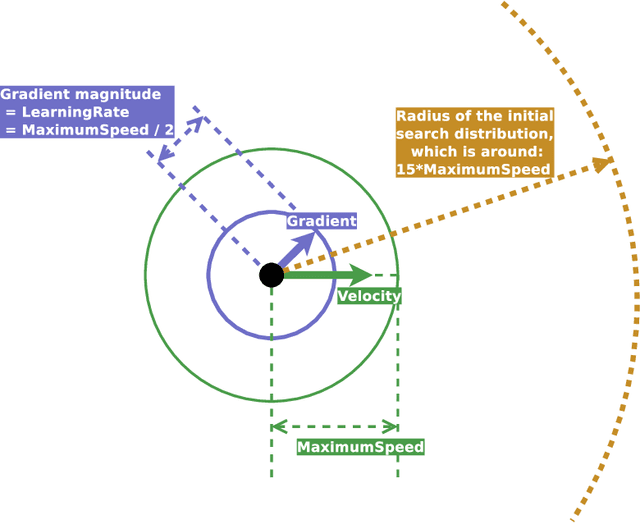

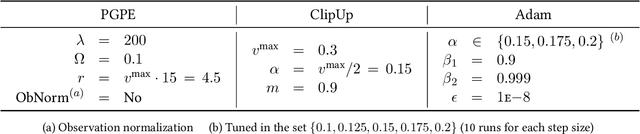

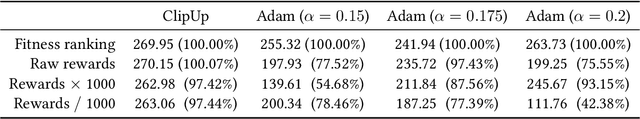

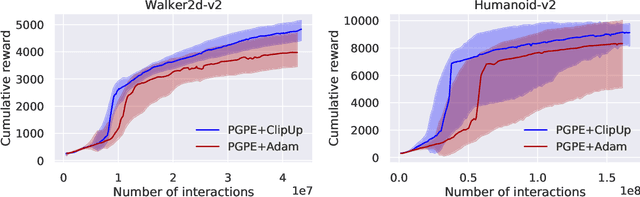

Abstract: Distribution-based search algorithms are an effective approach for evolutionary reinforcement learning of neural network controllers. In these algorithms, gradients of the total reward with respect to the policy parameters are estimated using a population of solutions drawn from a search distribution, and then used for policy optimization with stochastic gradient ascent. A common choice in the community is to use the Adam optimization algorithm to obtain adaptive behavior during gradient ascent, due to its success in a variety of supervised learning settings. As an alternative to Adam, we propose to enhance classical momentum-based gradient ascent with two simple techniques: gradient normalization and update clipping. We argue that the resulting optimizer, called ClipUp (short for "clipped updates"), is a better choice for distribution-based policy evolution because its working principles are simple and easy to understand and its hyperparameters can be tuned more intuitively in practice. Moreover, it removes the need to re-tune hyperparameters if the reward scale changes. Experiments show that ClipUp is competitive with Adam despite its simplicity and is effective on challenging continuous control benchmarks, including the Humanoid control task based on the Bullet physics simulator.
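The two techniques named in this abstract, gradient normalization and update clipping on top of momentum-based gradient ascent, can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the authors' reference code: the function and parameter names (`clipup_step`, `step_size`, `max_speed`) and the default values are chosen here for readability, and the order of operations (normalize, add momentum, clip, apply) is one straightforward reading of the description.

```python
import math
from typing import List, Tuple


def l2_norm(vector: List[float]) -> float:
    """Euclidean norm of a plain Python list."""
    return math.sqrt(sum(x * x for x in vector))


def clipup_step(
    params: List[float],
    velocity: List[float],
    grad: List[float],
    step_size: float = 0.1,   # assumed default, for illustration only
    max_speed: float = 0.2,   # assumed default, for illustration only
    momentum: float = 0.9,
) -> Tuple[List[float], List[float]]:
    """One ascent step: normalized gradient, momentum, then norm clipping."""
    # Gradient normalization: keep only the direction of the gradient.
    grad_norm = l2_norm(grad)
    if grad_norm > 0.0:
        direction = [g / grad_norm for g in grad]
    else:
        direction = [0.0 for _ in grad]

    # Classical momentum applied to the fixed-length step.
    new_velocity = [momentum * v + step_size * d for v, d in zip(velocity, direction)]

    # Update clipping: cap the norm of the velocity at max_speed.
    speed = l2_norm(new_velocity)
    if speed > max_speed:
        scale = max_speed / speed
        new_velocity = [scale * v for v in new_velocity]

    # Gradient ascent: move the parameters along the velocity.
    new_params = [p + v for p, v in zip(params, new_velocity)]
    return new_params, new_velocity


# The same direction at two very different reward scales.
small, _ = clipup_step([0.0, 0.0], [0.0, 0.0], [3.0, 4.0])
large, _ = clipup_step([0.0, 0.0], [0.0, 0.0], [3000.0, 4000.0])
print(small, large)
```

Because only the direction of the gradient enters the update, multiplying the reward (and hence the gradient) by a constant leaves the step unchanged, which matches the abstract's point that hyperparameters need no re-tuning when the reward scale changes.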

Learning to Play Othello with Deep Neural Networks

Nov 17, 2017

Abstract: AlphaGo's achievement of superhuman playing level corroborated the capabilities of convolutional neural networks (CNNs) for capturing complex spatial patterns. This result was to a great extent due to several analogies between Go board states and the 2D images that CNNs were designed for, in particular translational invariance and a relatively large board. In this paper, we verify whether CNN-based move predictors prove effective for Othello, a game with significantly different characteristics, including a much smaller board size and a complete lack of translational invariance. We compare several CNN architectures and board encodings, augment them with state-of-the-art extensions, train on an extensive database of experts' moves, and examine them with respect to move prediction accuracy and playing strength. The empirical evaluation confirms the high capabilities of neural move predictors and suggests a strong correlation between prediction accuracy and playing strength. The best CNNs not only surpass all other 1-ply Othello players proposed to date but also defeat (2-ply) Edax, the best open-source Othello player.
