Pauline Trouvé-Peloux

DTIS, ONERA, Université Paris Saclay

Pix2Point: Learning Outdoor 3D Using Sparse Point Clouds and Optimal Transport

Jul 30, 2021

Abstract:Good quality reconstruction and comprehension of a scene rely on 3D estimation methods. The 3D information was usually obtained from images by stereo-photogrammetry, but deep learning has recently provided us with excellent results for monocular depth estimation. Building up a sufficiently large and rich training dataset to achieve these results requires onerous processing. In this paper, we address the problem of learning outdoor 3D point cloud from monocular data using a sparse ground-truth dataset. We propose Pix2Point, a deep learning-based approach for monocular 3D point cloud prediction, able to deal with complete and challenging outdoor scenes. Our method relies on a 2D-3D hybrid neural network architecture, and a supervised end-to-end minimisation of an optimal transport divergence between point clouds. We show that, when trained on sparse point clouds, our simple promising approach achieves a better coverage of 3D outdoor scenes than efficient monocular depth methods.

Multi-Task Learning of Height and Semantics from Aerial Images

Nov 18, 2019

Abstract:Aerial or satellite imagery is a great source for land surface analysis, which might yield land use maps or elevation models. In this investigation, we present a neural network framework for learning semantics and local height together. We show how this joint multi-task learning benefits to each task on the large dataset of the 2018 Data Fusion Contest. Moreover, our framework also yields an uncertainty map which allows assessing the prediction of the model. Code is available at https://github.com/marcelampc/mtl_aerial_images .

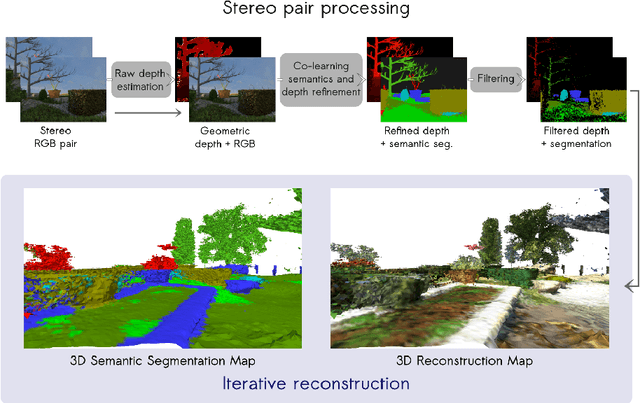

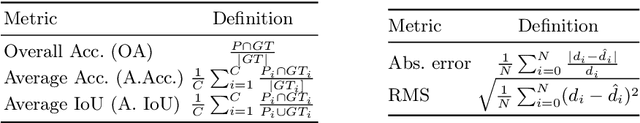

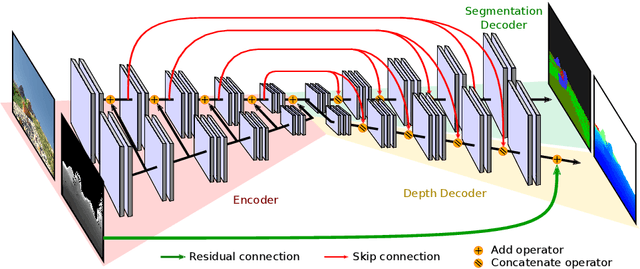

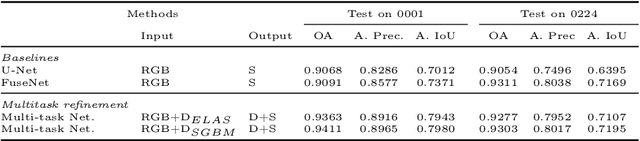

Technical Report: Co-learning of geometry and semantics for online 3D mapping

Nov 04, 2019

Abstract:This paper is a technical report about our submission for the ECCV 2018 3DRMS Workshop Challenge on Semantic 3D Reconstruction \cite{Tylecek2018rms}. In this paper, we address 3D semantic reconstruction for autonomous navigation using co-learning of depth map and semantic segmentation. The core of our pipeline is a deep multi-task neural network which tightly refines depth and also produces accurate semantic segmentation maps. Its inputs are an image and a raw depth map produced from a pair of images by standard stereo vision. The resulting semantic 3D point clouds are then merged in order to create a consistent 3D mesh, in turn used to produce dense semantic 3D reconstruction maps. The performances of each step of the proposed method are evaluated on the dataset and multiple tasks of the 3DRMS Challenge, and repeatedly surpass state-of-the-art approaches.

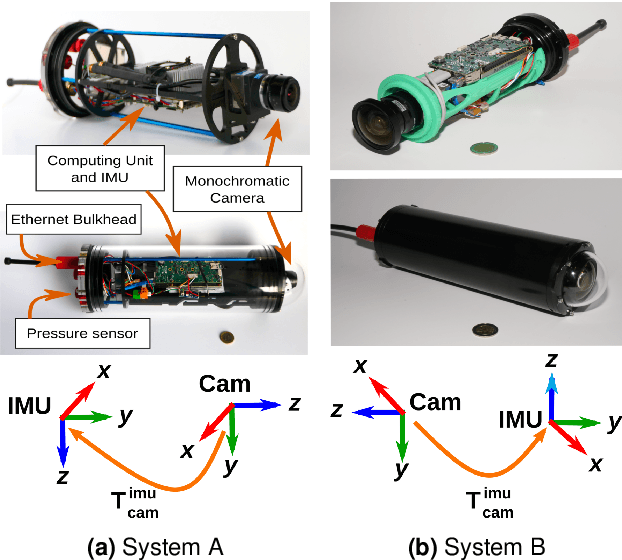

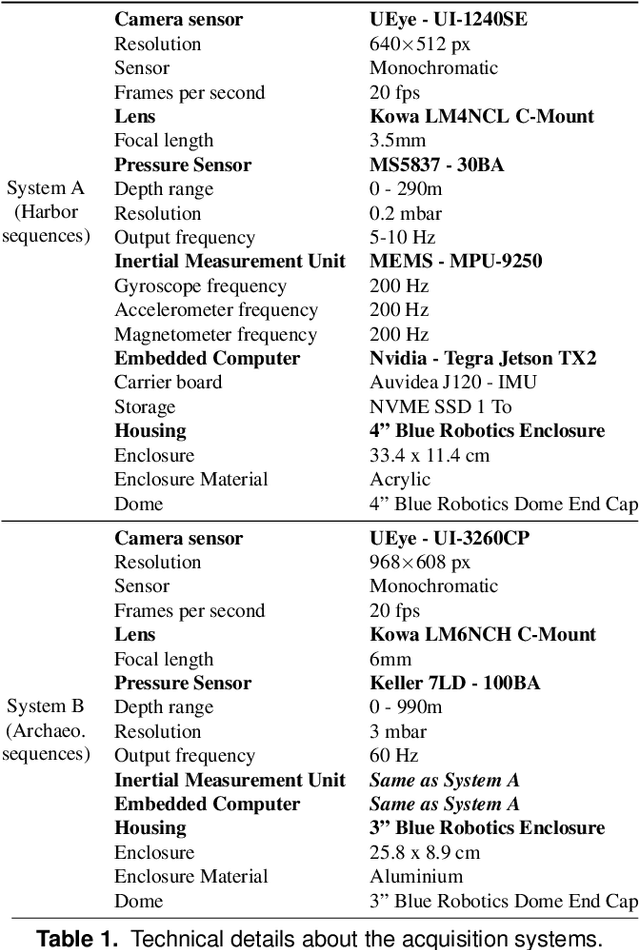

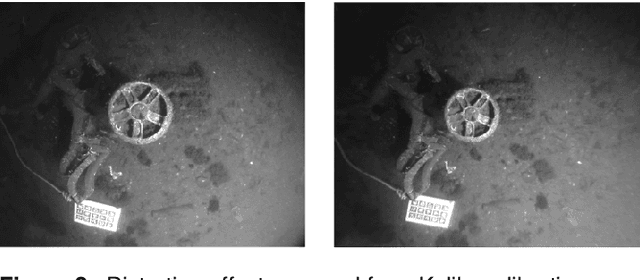

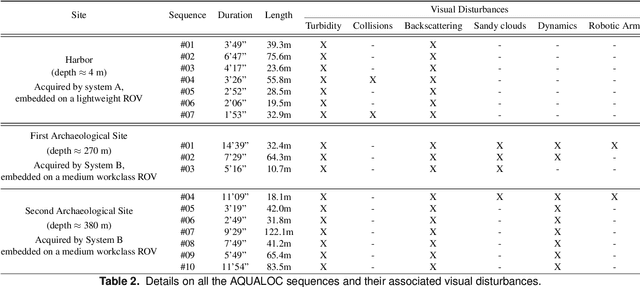

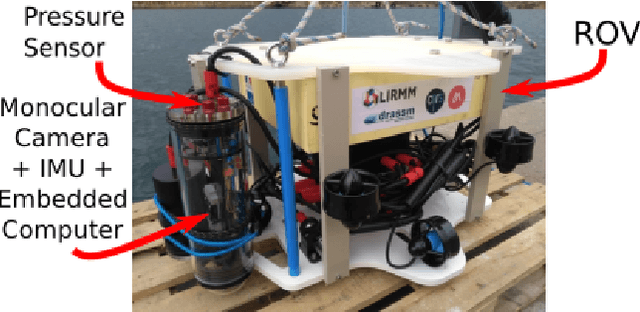

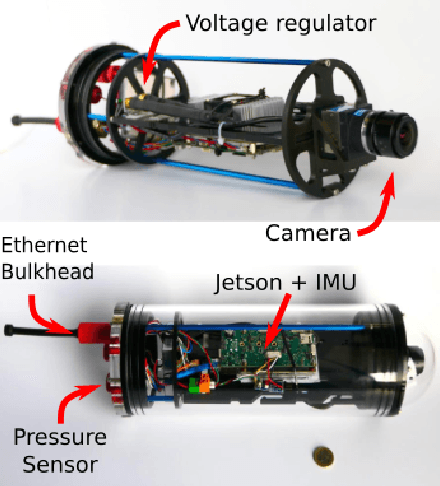

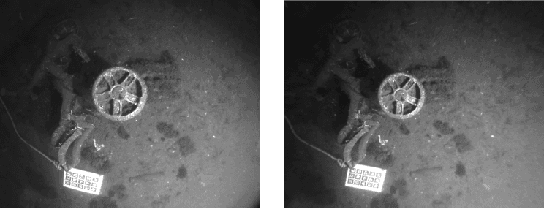

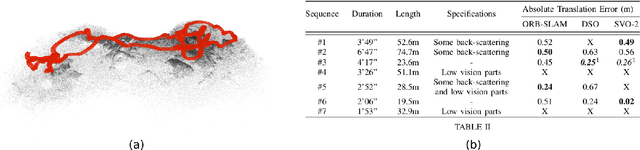

AQUALOC: An Underwater Dataset for Visual-Inertial-Pressure Localization

Oct 31, 2019

Abstract:We present a new dataset, dedicated to the development of simultaneous localization and mapping methods for underwater vehicles navigating close to the seabed. The data sequences composing this dataset are recorded in three different environments: a harbor at a depth of a few meters, a first archaeological site at a depth of 270 meters and a second site at a depth of 380 meters. The data acquisition is performed using Remotely Operated Vehicles equipped with a monocular monochromatic camera, a low-cost inertial measurement unit, a pressure sensor and a computing unit, all embedded in a single enclosure. The sensors' measurements are recorded synchronously on the computing unit and seventeen sequences have been created from all the acquired data. These sequences are made available in the form of ROS bags and as raw data. For each sequence, a trajectory has also been computed offline using a Structure-from-Motion library in order to allow the comparison with real-time localization methods. With the release of this dataset, we wish to provide data difficult to acquire and to encourage the development of vision-based localization methods dedicated to the underwater environment. The dataset can be downloaded from: http://www.lirmm.fr/aqualoc/

The Aqualoc Dataset: Towards Real-Time Underwater Localization from a Visual-Inertial-Pressure Acquisition System

Sep 19, 2018

Abstract:This paper presents a new underwater dataset acquired from a visual-inertial-pressure acquisition system and meant to be used to benchmark visual odometry, visual SLAM and multi-sensors SLAM solutions. The dataset is publicly available and contains ground-truth trajectories for evaluation.

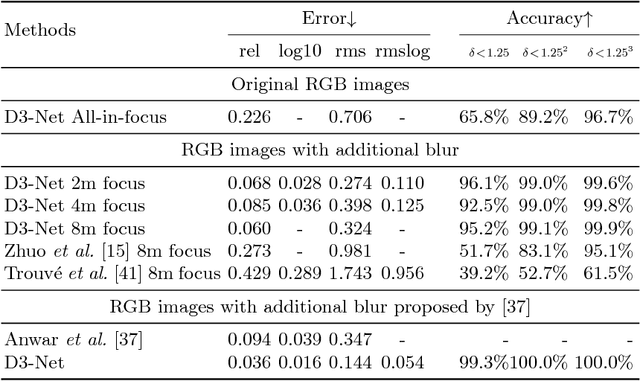

Deep Depth from Defocus: how can defocus blur improve 3D estimation using dense neural networks?

Sep 06, 2018

Abstract:Depth estimation is of critical interest for scene understanding and accurate 3D reconstruction. Most recent approaches in depth estimation with deep learning exploit geometrical structures of standard sharp images to predict corresponding depth maps. However, cameras can also produce images with defocus blur depending on the depth of the objects and camera settings. Hence, these features may represent an important hint for learning to predict depth. In this paper, we propose a full system for single-image depth prediction in the wild using depth-from-defocus and neural networks. We carry out thorough experiments to test deep convolutional networks on real and simulated defocused images using a realistic model of blur variation with respect to depth. We also investigate the influence of blur on depth prediction observing model uncertainty with a Bayesian neural network approach. From these studies, we show that out-of-focus blur greatly improves the depth-prediction network performances. Furthermore, we transfer the ability learned on a synthetic, indoor dataset to real, indoor and outdoor images. For this purpose, we present a new dataset containing real all-focus and defocused images from a Digital Single-Lens Reflex (DSLR) camera, paired with ground truth depth maps obtained with an active 3D sensor for indoor scenes. The proposed approach is successfully validated on both this new dataset and standard ones as NYUv2 or Depth-in-the-Wild. Code and new datasets are available at https://github.com/marcelampc/d3net_depth_estimation

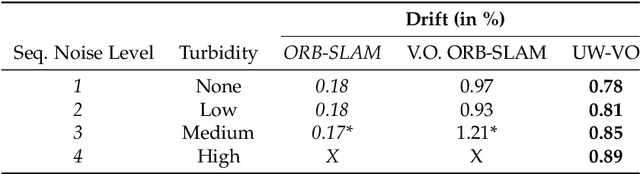

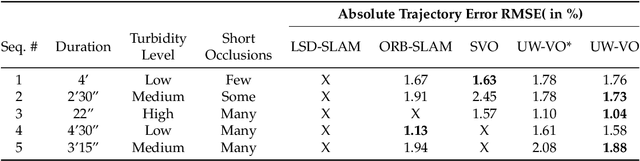

Real-time Monocular Visual Odometry for Turbid and Dynamic Underwater Environments

Jul 03, 2018

Abstract:In the context of robotic underwater operations, the visual degradations induced by the medium properties make difficult the exclusive use of cameras for localization purpose. Hence, most localization methods are based on expensive navigational sensors associated with acoustic positioning. On the other hand, visual odometry and visual SLAM have been exhaustively studied for aerial or terrestrial applications, but state-of-the-art algorithms fail underwater. In this paper we tackle the problem of using a simple low-cost camera for underwater localization and propose a new monocular visual odometry method dedicated to the underwater environment. We evaluate different tracking methods and show that optical flow based tracking is more suited to underwater images than classical approaches based on descriptors. We also propose a keyframe-based visual odometry approach highly relying on nonlinear optimization. The proposed algorithm has been assessed on both simulated and real underwater datasets and outperforms state-of-the-art visual SLAM methods under many of the most challenging conditions. The main application of this work is the localization of Remotely Operated Vehicles (ROVs) used for underwater archaeological missions but the developed system can be used in any other applications as long as visual information is available.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge