Patricio Vela

A Schema-Guided Reason-while-Retrieve framework for Reasoning on Scene Graphs with Large-Language-Models (LLMs)

Feb 05, 2025

Abstract: Scene graphs have emerged as a structured and serializable environment representation for grounded spatial reasoning with Large Language Models (LLMs). In this work, we propose SG-RwR, a Schema-Guided Reason-while-Retrieve framework for reasoning and planning with scene graphs. Our approach employs two cooperative, code-writing LLM agents: (1) a Reasoner for task planning and information query generation, and (2) a Retriever for extracting the corresponding graph information in response to those queries. The two agents collaborate iteratively, enabling sequential reasoning and adaptive attention to graph information. Unlike prior works, both agents are prompted only with the scene graph schema rather than the full graph data, which reduces hallucination by limiting input tokens and drives the Reasoner to generate an abstract reasoning trace. Following the trace, the Retriever programmatically queries the scene graph data based on its understanding of the schema, allowing dynamic and global attention on the graph that enhances alignment between reasoning and retrieval. Through experiments in multiple simulation environments, we show that our framework surpasses existing LLM-based approaches in numerical Q&A and planning tasks, and can benefit from task-level few-shot examples, even in the absence of agent-level demonstrations. Project code will be released.
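
The schema-versus-data split described in this abstract can be illustrated with a toy example. The sketch below is not the SG-RwR implementation and involves no LLM: the schema layout, the graph contents, and the function names `objects_in_room` and `total_mass` are all hypothetical, chosen only to show how a query written against a schema can retrieve a small subset of a graph that is never read in full.

```python
# Hypothetical schema: the only description an agent would see in its prompt.
SCHEMA = {
    "node_types": {"room": ["name"], "object": ["name", "mass_g"]},
    "edge_types": {"contains": ("room", "object")},
}

# Hypothetical graph data: never placed in a prompt, only queried by code.
GRAPH = {
    "nodes": {
        "r1": {"type": "room", "name": "kitchen"},
        "r2": {"type": "room", "name": "office"},
        "o1": {"type": "object", "name": "kettle", "mass_g": 1200},
        "o2": {"type": "object", "name": "pan", "mass_g": 800},
        "o3": {"type": "object", "name": "laptop", "mass_g": 1500},
    },
    "edges": [
        ("r1", "contains", "o1"),
        ("r1", "contains", "o2"),
        ("r2", "contains", "o3"),
    ],
}


def objects_in_room(graph, room_name):
    """Retriever-style query: uses only field names declared in SCHEMA."""
    room_ids = {
        node_id
        for node_id, node in graph["nodes"].items()
        if node["type"] == "room" and node["name"] == room_name
    }
    return [
        graph["nodes"][dst]
        for src, relation, dst in graph["edges"]
        if relation == "contains" and src in room_ids
    ]


def total_mass(graph, room_name):
    """Reasoner-style step: consumes only the retrieved subset."""
    return sum(obj["mass_g"] for obj in objects_in_room(graph, room_name))


if __name__ == "__main__":
    print([obj["name"] for obj in objects_in_room(GRAPH, "kitchen")])
    print(total_mass(GRAPH, "kitchen"))
```

In this toy setting the query code depends only on the type and attribute names in `SCHEMA`, so the graph could grow arbitrarily without changing what would need to be shown to an agent.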

Accelerating Gaussian Variational Inference for Motion Planning Under Uncertainty

Nov 05, 2024

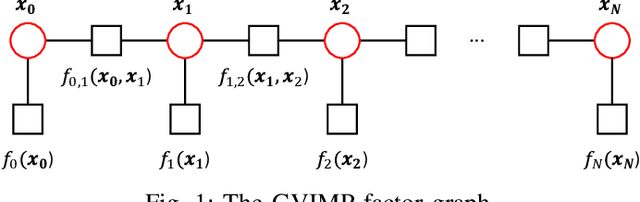

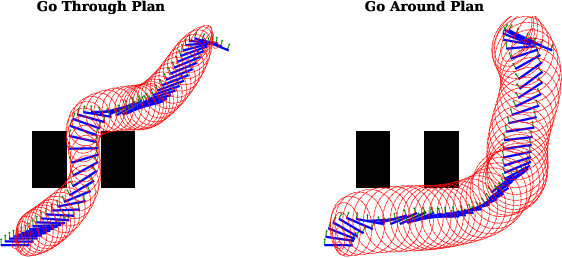

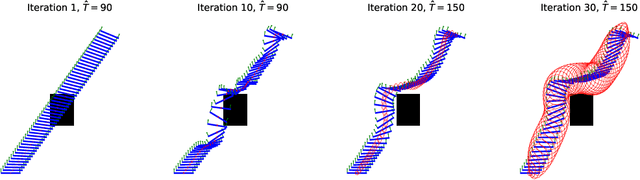

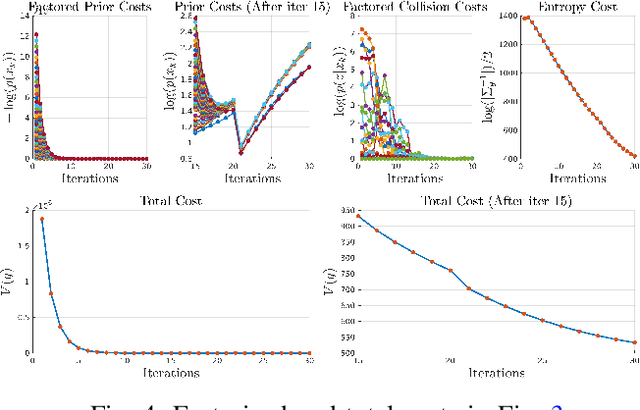

Abstract: This work addresses motion planning under uncertainty as a stochastic optimal control problem. The path distribution induced by the optimal controller corresponds to a posterior path distribution with a known form. To approximate this posterior, we frame an optimization problem in the space of Gaussian distributions, which aligns with the Gaussian Variational Inference Motion Planning (GVIMP) paradigm introduced in \cite{yu2023gaussian}. In this framework, the computational bottleneck lies in evaluating the expectation of collision costs over a densely discretized trajectory and in computing the marginal covariances. This work exploits the sparsity of the motion planning factor graph, which allows collision costs to be evaluated in parallel and marginal covariances to be computed with Gaussian Belief Propagation (GBP), to introduce a computationally efficient approach to solving GVIMP. We term this paradigm Parallel Gaussian Variational Inference Motion Planning (P-GVIMP). We validate the proposed framework on various robotic systems, demonstrating significant speedups achieved by leveraging Graphics Processing Units (GPUs) for parallel computation. An open-source implementation is available at https://github.com/hzyu17/VIMP.

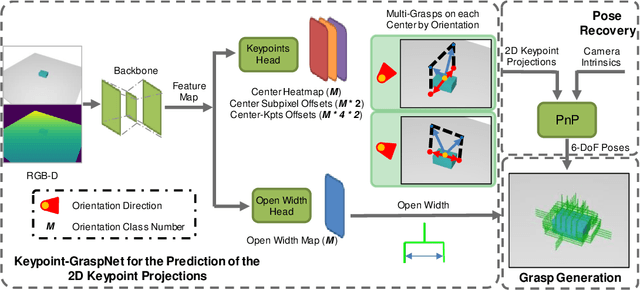

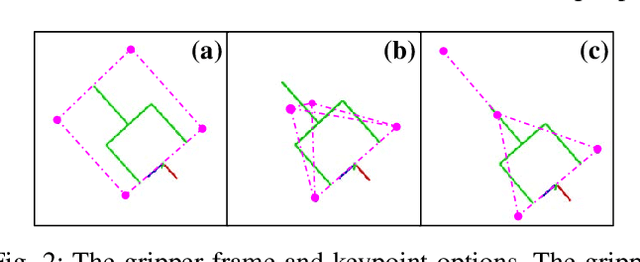

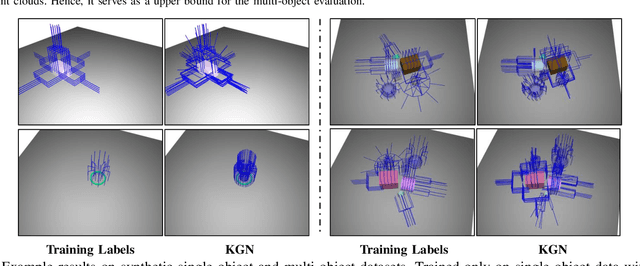

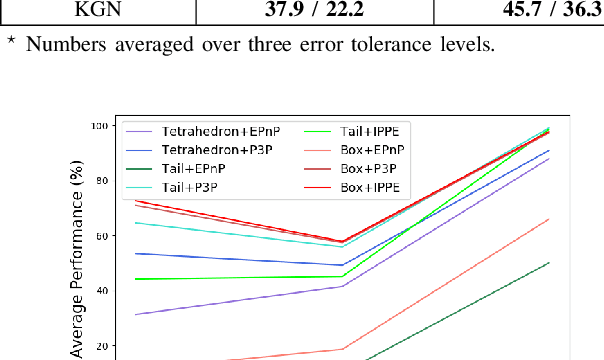

Keypoint-GraspNet: Keypoint-based 6-DoF Grasp Generation from the Monocular RGB-D input

Sep 19, 2022

Abstract: Great success has been achieved in 6-DoF grasp learning from point cloud input, yet the computational cost due to the orderlessness of point sets remains a concern. As an alternative, we explore grasp generation from RGB-D input in this paper. The proposed solution, Keypoint-GraspNet, detects the projections of the gripper keypoints in image space and then recovers the SE(3) poses with a PnP algorithm. A synthetic dataset based on primitive shapes and grasp families is constructed to examine our idea. Metric-based evaluation reveals that our method outperforms the baselines in terms of grasp proposal accuracy, diversity, and time cost. Finally, robot experiments show a high success rate, demonstrating the potential of the idea in real-world applications.

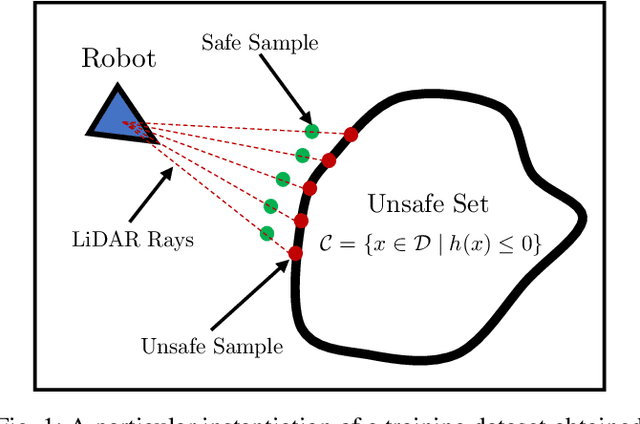

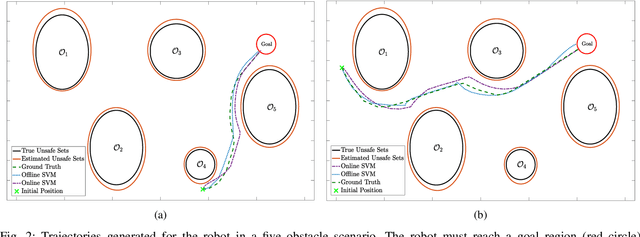

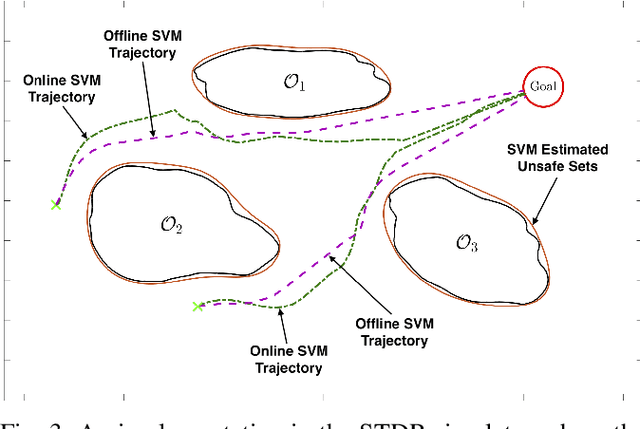

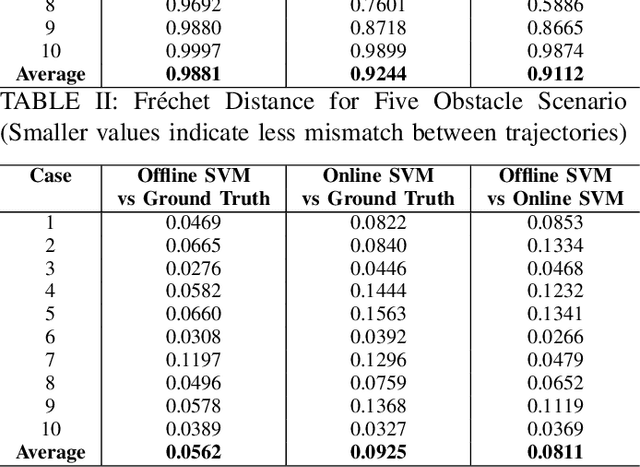

Synthesis of Control Barrier Functions Using a Supervised Machine Learning Approach

Mar 10, 2020

Abstract: Control barrier functions are mathematical constructs used to guarantee safety for robotic systems. When integrated as constraints in a quadratic programming optimization problem, instantaneous control synthesis with real-time performance demands can be achieved for robotics applications. Prevailing use has assumed full knowledge of the safety barrier functions; however, there are cases where the safe regions must be estimated online from sensor measurements. In these cases, the corresponding barrier function must be synthesized online. This paper describes a learning framework for estimating control barrier functions from sensor data. Doing so affords system operation in unknown state space regions without compromising safety. Here, a support vector machine classifier provides the barrier function specification as determined by sets of safe and unsafe states obtained from sensor measurements. Theoretical safety guarantees are provided. Experimental ROS-based simulation results for an omnidirectional robot equipped with LiDAR demonstrate safe operation.
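
The quadratic-programming step mentioned in this abstract can be sketched as a minimal safety filter. The sketch makes several assumptions that are not from the paper: single-integrator dynamics (x_dot = u), a hand-written circular barrier h(x) = ||x - c||^2 - r^2 standing in for the SVM-learned barrier, and a linear term alpha * h(x) in the constraint. All function names and parameter values are illustrative. With a single affine constraint the QP has a closed-form solution, so no solver is needed here.

```python
def barrier(x, center, radius):
    """Hypothetical barrier: positive outside a circular obstacle, negative inside."""
    dx, dy = x[0] - center[0], x[1] - center[1]
    return dx * dx + dy * dy - radius * radius


def barrier_grad(x, center):
    """Gradient of the barrier with respect to the state x."""
    return (2.0 * (x[0] - center[0]), 2.0 * (x[1] - center[1]))


def cbf_filter(x, u_nom, center, radius, alpha=1.0):
    """Closed-form solution of
        min ||u - u_nom||^2   s.t.   grad_h(x) . u + alpha * h(x) >= 0,
    assuming x_dot = u and a single constraint."""
    h = barrier(x, center, radius)
    g = barrier_grad(x, center)
    # Constraint value at the nominal input; non-negative means already safe.
    margin = g[0] * u_nom[0] + g[1] * u_nom[1] + alpha * h
    g_norm_sq = g[0] ** 2 + g[1] ** 2
    if margin >= 0.0 or g_norm_sq == 0.0:
        return u_nom
    # Project u_nom onto the constraint boundary along grad_h.
    scale = -margin / g_norm_sq
    return (u_nom[0] + scale * g[0], u_nom[1] + scale * g[1])


if __name__ == "__main__":
    # Robot at (-2, 0) driving straight at a unit-radius obstacle at the origin.
    print(cbf_filter((-2.0, 0.0), (1.0, 0.0), center=(0.0, 0.0), radius=1.0))
```

When the nominal input already satisfies the constraint it is returned unchanged; otherwise it is shifted by the smallest amount along the barrier gradient that makes the constraint hold with equality.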
