Pablo Ruiz

LaTTICe

Improving Acquisition Speed of X-Ray Ptychography through Spatial Undersampling and Regularization

May 20, 2021

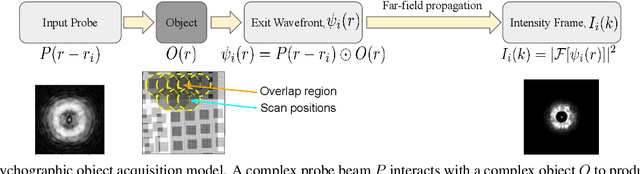

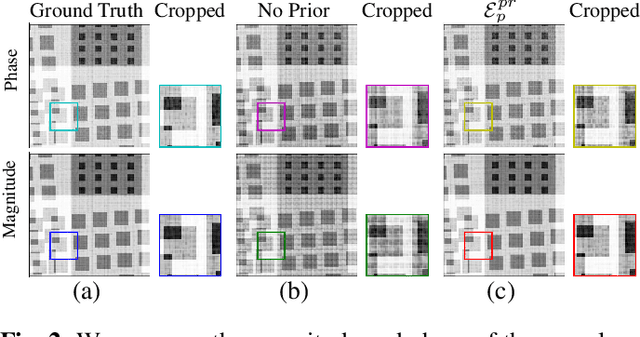

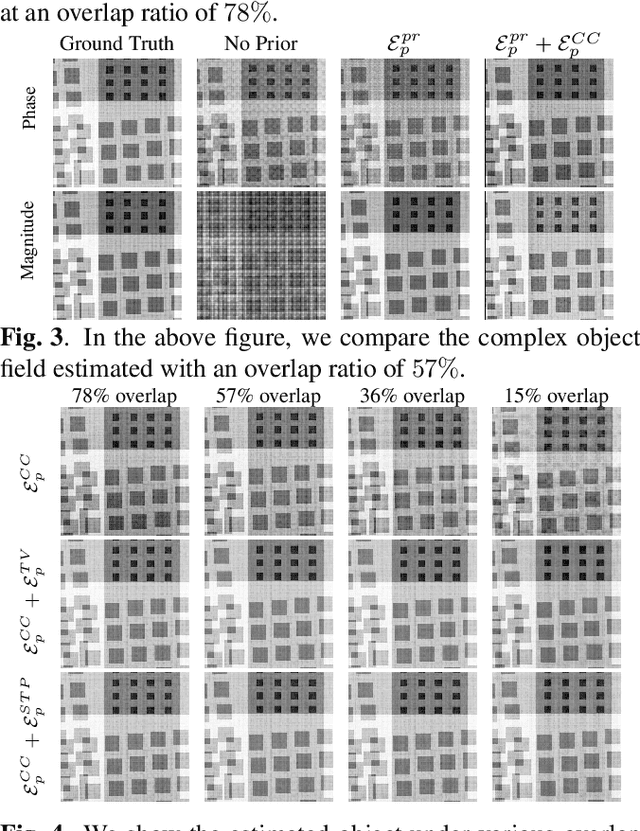

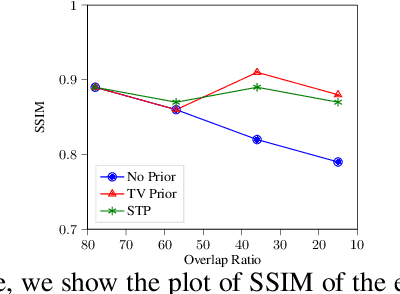

Abstract: X-ray ptychography is a versatile technique for nanometer-resolution imaging. The magnitude of the diffraction patterns is recorded on a detector, and their phase is estimated using phase retrieval techniques. Most phase retrieval algorithms make the problem well-posed by relying on the constraints imposed by the overlapping region between neighboring diffraction pattern samples. As the overlap between neighboring diffraction patterns decreases, the problem becomes ill-posed and the object cannot be recovered. To avoid this ill-posedness, we investigate the effect of regularizing the phase retrieval algorithm with image priors for various overlap ratios between neighboring diffraction patterns. We show that the object can be faithfully reconstructed at low overlap ratios by regularizing the phase retrieval algorithm with image priors such as Total-Variation and the Structure Tensor Prior. We also show the effectiveness of our proposed algorithm on real data acquired from an IC chip with a coherent X-ray beam.
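As a rough illustration of what a Total-Variation prior measures, the sketch below computes the isotropic total variation of a 2-D image with NumPy and compares a piecewise-constant image with a noisy copy. It is not the paper's reconstruction algorithm: the function name `total_variation`, the test images, and the noise level are all assumptions made for this example. In a regularized objective, a term of this kind would typically be added to the data-fidelity term with a weight, which penalizes noisy or oscillatory reconstructions.

```python
import numpy as np

def total_variation(img):
    """Isotropic total variation of a 2-D array (forward differences).

    Hypothetical helper for illustration; not taken from the paper.
    """
    # Horizontal and vertical forward differences, cropped to a common shape.
    dx = np.diff(img, axis=1)[:-1, :]
    dy = np.diff(img, axis=0)[:, :-1]
    # Sum of gradient magnitudes over all pixels.
    return float(np.sum(np.sqrt(dx**2 + dy**2)))

# Assumed test data: a piecewise-constant square and a noisy copy of it.
rng = np.random.default_rng(0)
clean = np.zeros((32, 32))
clean[8:24, 8:24] = 1.0
noisy = clean + 0.1 * rng.standard_normal(clean.shape)

tv_clean = total_variation(clean)
tv_noisy = total_variation(noisy)
print(f"TV(clean) = {tv_clean:.2f}, TV(noisy) = {tv_noisy:.2f}")
```

The noisy image has the larger total variation, which is why minimizing a TV term favors piecewise-smooth solutions when the overlap constraints alone are too weak.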

Physics-informed attention-based neural network for solving non-linear partial differential equations

May 17, 2021

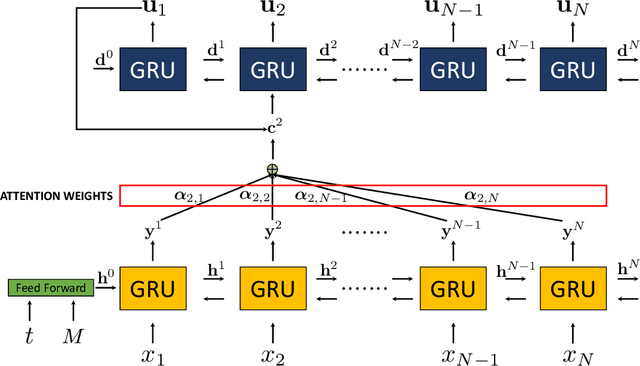

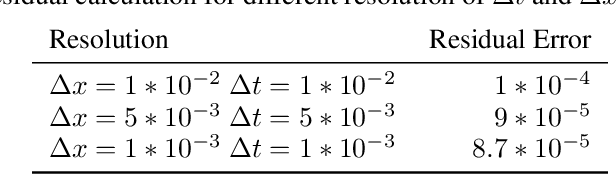

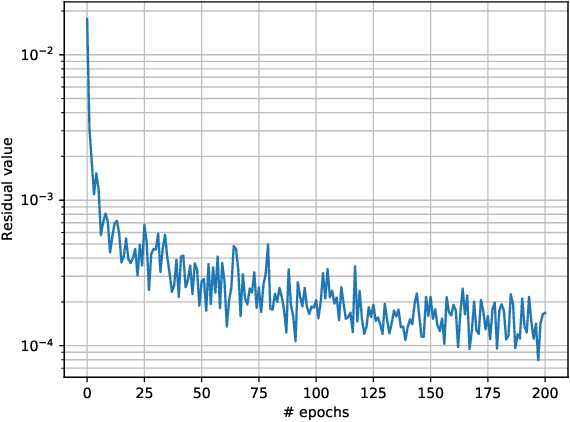

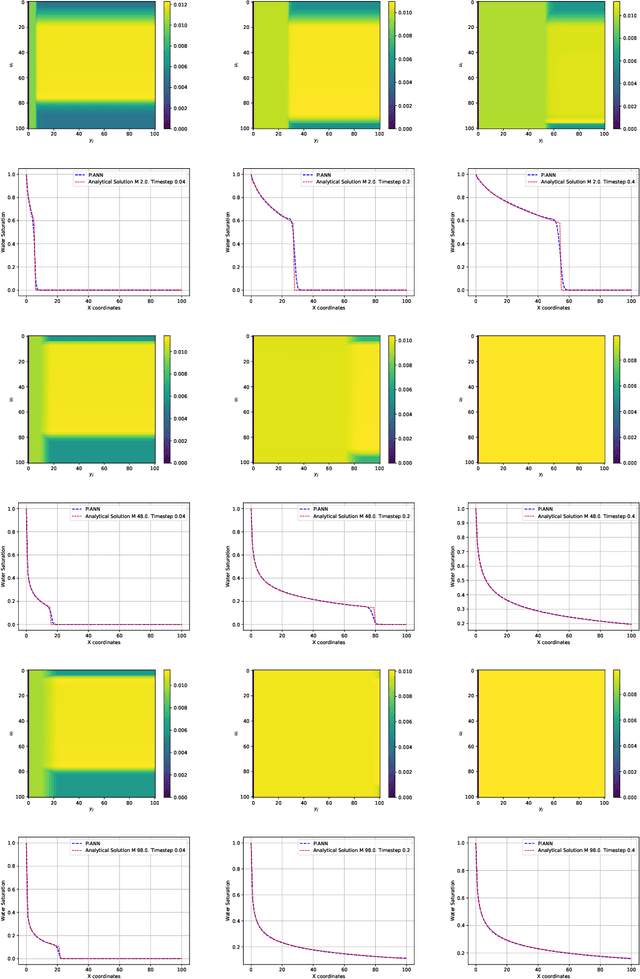

Abstract: Physics-Informed Neural Networks (PINNs) have enabled significant improvements in modelling physical processes described by partial differential equations (PDEs). PINNs are based on simple architectures, and learn the behavior of complex physical systems by optimizing the network parameters to minimize the residual of the underlying PDE. Current network architectures share some of the limitations of classical numerical discretization schemes when applied to non-linear differential equations in continuum mechanics. A paradigmatic example is the solution of hyperbolic conservation laws that develop highly localized nonlinear shock waves. Learning solutions of PDEs with dominant hyperbolic character is a challenge for current PINN approaches, which rely, like most grid-based numerical schemes, on adding artificial dissipation. Here, we address the fundamental question of which network architectures are best suited to learn the complex behavior of non-linear PDEs. We focus on network architecture rather than on residual regularization. Our new methodology, called Physics-Informed Attention-based Neural Networks (PIANNs), is a combination of recurrent neural networks and attention mechanisms. The attention mechanism adapts the behavior of the deep neural network to the non-linear features of the solution, and breaks the current limitations of PINNs. We find that PIANNs effectively capture the shock front in a hyperbolic model problem, and are capable of providing high-quality solutions inside and beyond the training set.
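To make the idea of "minimizing the residual of the underlying PDE" concrete, here is a minimal sketch of a residual loss. It assumes the linear advection equation u_t + c u_x = 0 as a stand-in hyperbolic problem (the abstract does not name its model problem), and it uses finite differences on a grid where a PINN would normally differentiate the network output automatically. The function name `pde_residual_loss` and the two candidate solutions are hypothetical.

```python
import numpy as np

def pde_residual_loss(u, x, t, c=1.0):
    """Mean squared residual of u_t + c * u_x = 0 on a grid.

    u has shape (len(t), len(x)). Finite differences stand in for the
    automatic differentiation a PINN would use (assumption for this sketch).
    """
    u_t = np.gradient(u, t, axis=0)  # derivative along the time axis
    u_x = np.gradient(u, x, axis=1)  # derivative along the space axis
    return float(np.mean((u_t + c * u_x) ** 2))

# Assumed sampling grid in space and time.
x = np.linspace(0.0, 2.0 * np.pi, 201)
t = np.linspace(0.0, 1.0, 101)
T, X = np.meshgrid(t, x, indexing="ij")

# sin(x - t) solves the equation for c = 1; sin(x + t) does not.
loss_exact = pde_residual_loss(np.sin(X - T), x, t)
loss_wrong = pde_residual_loss(np.sin(X + T), x, t)
print(f"residual loss, exact: {loss_exact:.2e}, wrong: {loss_wrong:.2e}")
```

A candidate that satisfies the PDE drives this loss toward zero (up to discretization error), while one that violates it does not; training a PINN amounts to adjusting network parameters to reduce a loss of this form together with initial and boundary terms.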

Scalable Variational Gaussian Processes for Crowdsourcing: Glitch Detection in LIGO

Nov 05, 2019

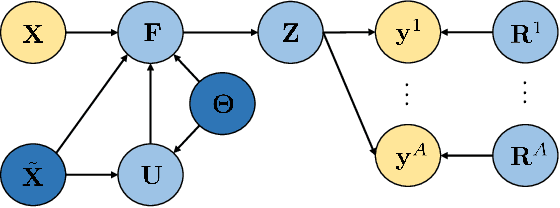

Abstract: In recent years, crowdsourcing has been transforming the way classification training sets are obtained. Instead of relying on a single expert annotator, crowdsourcing shares the labelling effort among a large number of collaborators. For instance, this is being applied to the data acquired by the laureate Laser Interferometer Gravitational-Wave Observatory (LIGO), in order to detect glitches which might hinder the identification of true gravitational waves. The crowdsourcing scenario poses new challenges, as it deals with different opinions from a heterogeneous group of annotators with unknown degrees of expertise. Probabilistic methods, such as Gaussian Processes (GPs), have proven successful in modeling this setting. However, GPs do not scale well to large data sets, which hampers their broad adoption in practice (in particular at LIGO). This has led to the recent introduction of deep learning based crowdsourcing methods, which have become the state-of-the-art. However, the accurate uncertainty quantification of GPs has been partially sacrificed. This is an important aspect for astrophysicists at LIGO, since a glitch detection system should provide very accurate probability distributions of its predictions. In this work, we leverage the most popular sparse GP approximation to develop a novel GP based crowdsourcing method that factorizes into mini-batches. This makes it able to cope with previously prohibitive data sets. The approach, which we refer to as Scalable Variational Gaussian Processes for Crowdsourcing (SVGPCR), brings GP-based methods back to the state-of-the-art, and excels at uncertainty quantification. SVGPCR is shown to outperform deep learning based methods and previous probabilistic approaches when applied to the LIGO data. Moreover, its behavior and main properties are carefully analyzed in a controlled experiment based on the MNIST data set.

Generating Navigable Semantic Maps from Social Sciences Corpora

Jul 08, 2015

Abstract: It is now commonplace to observe that we are facing a deluge of online information. Researchers have of course long acknowledged the potential value of this information since digital traces make it possible to directly observe, describe and analyze social facts, and above all the co-evolution of ideas and communities over time. However, most online information is expressed through text, which means it is not directly usable by machines, since computers require structured, organized and typed information in order to be able to manipulate it. Our goal is thus twofold: (1) provide new natural language processing techniques aiming at automatically extracting relevant information from texts, especially in the context of social sciences, and connect these pieces of information so as to obtain relevant socio-semantic networks; (2) provide new ways of exploring these socio-semantic networks, thanks to tools allowing one to dynamically navigate these networks and to de-construct and re-construct them interactively, from different points of view following the needs expressed by domain experts.
