Onel L. A. Lopez

Centre for Wireless Communications

Beamforming and Waveform Optimization for RF Wireless Power Transfer with Beyond Diagonal Reconfigurable Intelligent Surfaces

Feb 26, 2025

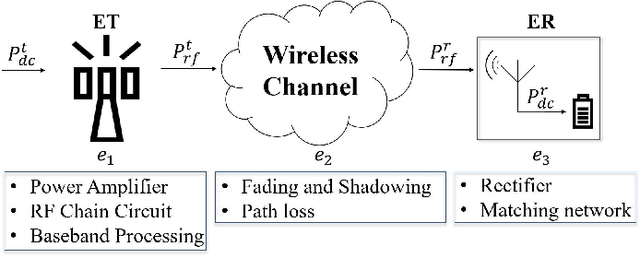

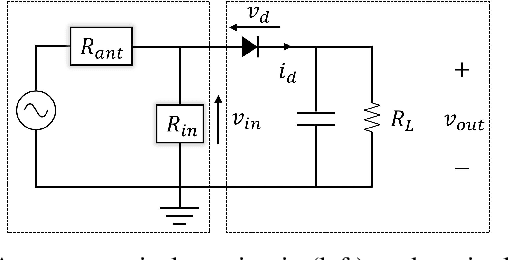

Abstract: Radio frequency (RF) wireless power transfer (WPT) is a promising technology to seamlessly charge low-power devices, but its low end-to-end power transfer efficiency remains a critical challenge. To address this, low-cost transmit/radiating architectures, e.g., based on reconfigurable intelligent surfaces (RISs), have shown great potential. Beyond diagonal (BD) RIS is a novel branch of RIS offering enhanced performance over traditional diagonal RIS (D-RIS) in wireless communications, but its potential gains in RF-WPT remain unexplored. Motivated by this, we analyze a BD-RIS-assisted single-antenna RF-WPT system to charge a single rectifier, and formulate a joint beamforming and multi-carrier waveform optimization problem aiming to maximize the harvested power. We propose two solutions relying on semi-definite programming for fully connected BD-RIS and an efficient low-complexity iterative method relying on successive convex approximation. Numerical results show that the proposed algorithms converge to a local optimum and that adding transmit sub-carriers or RIS elements improves the harvesting performance. We show that the transmit power budget impacts the relative power allocation among different sub-carriers depending on the rectifier's operating regime, while BD-RIS shapes the cascade channel differently for frequency-selective and flat scenarios. Finally, we verify by simulation that BD-RIS and D-RIS achieve the same performance under pure far-field line-of-sight conditions (in the absence of mutual coupling). Meanwhile, BD-RIS outperforms D-RIS as the non-line-of-sight components of the channel become dominant.

On the Radio Stripe Deployment for Indoor RF Wireless Power Transfer

Oct 14, 2023

Abstract: One of the primary goals of future wireless systems is to foster sustainability, for which radio frequency (RF) wireless power transfer (WPT) is considered a key technology enabler. The key challenge of RF-WPT systems is the extremely low end-to-end efficiency, mainly due to the losses introduced by the wireless channel. Distributed antenna systems are appealing as they can significantly shorten the charging distances, thus reducing channel losses. Radio stripe systems provide a cost-efficient and scalable way to deploy a distributed multi-antenna system, and thus have received a lot of attention recently. Herein, we consider an RF-WPT system with a transmit radio stripe network to charge multiple indoor energy hotspots, i.e., spatial regions where the energy harvesting devices are expected to be located, including near-field locations. We formulate the optimal radio stripe deployment problem aimed at maximizing the minimum power received by the users and explore two specific predefined shapes, namely the straight line and polygon-shaped configurations. Then, we provide efficient solutions relying on geometric programming to optimize the location of the radio stripe elements. The results demonstrate that the proposed radio stripe deployments outperform a central fully-digital square array with the same number of elements, and that utilizing larger radio stripe lengths can enhance the performance, while increasing the system frequency may degrade it.

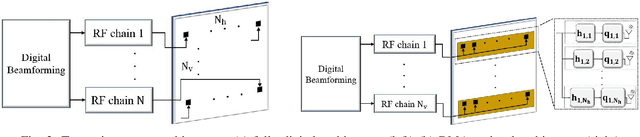

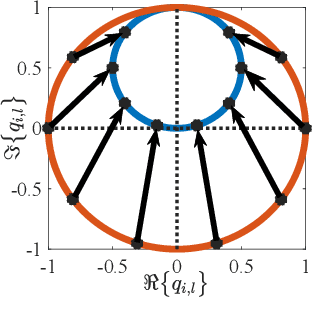

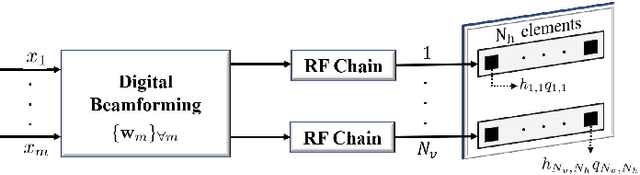

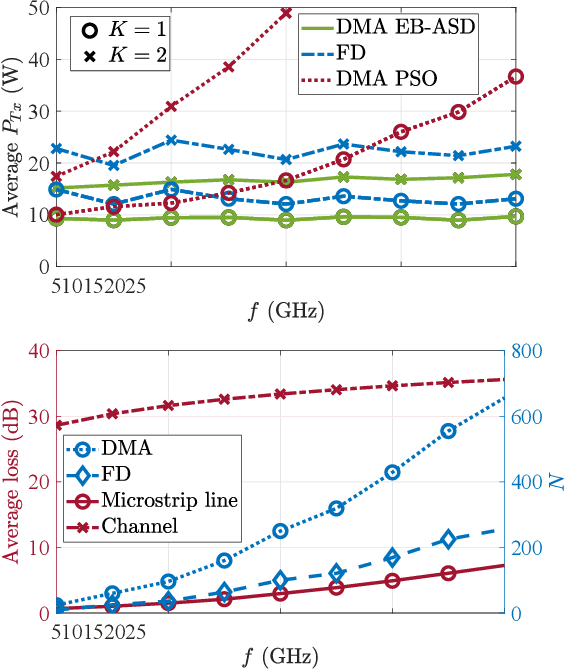

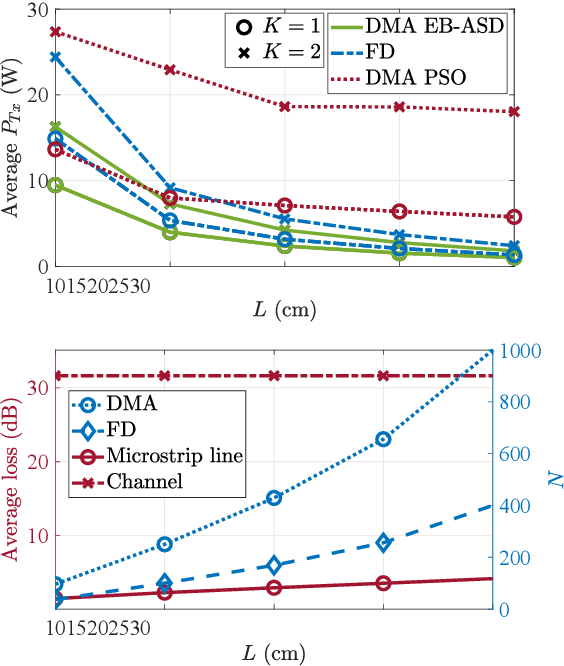

Waveform and Beamforming Optimization for Wireless Power Transfer with Dynamic Metasurface Antennas

Jul 03, 2023

Abstract: Radio frequency (RF) wireless power transfer (WPT) is a promising charging technology for future wireless systems. However, low end-to-end power transfer efficiency (PTE) is a critical challenge for practical implementations. One of the main inefficiency sources is the power consumption and loss of key components such as the high-power amplifier (HPA) and rectenna, which must be considered for PTE optimization. Herein, we investigate the power consumption of an RF-WPT system considering the emerging dynamic metasurface antenna (DMA) as the transmitter. Moreover, we incorporate the HPA and rectenna non-linearities and consider the Doherty HPA to reduce power consumption. We provide a mathematical framework to calculate each user's harvested power from multi-tone signal transmissions and the system power consumption. Then, the waveform and beamforming are designed using swarm-based intelligence to minimize power consumption while satisfying the users' energy harvesting (EH) requirements. Numerical results show that increasing the number of transmit tones enhances the performance in terms of coverage probability and power consumption, since the HPAs operate below the saturation region in the simulation setup and the EH non-linearity is the dominant factor. Finally, our findings demonstrate that a properly shaped DMA may outperform a fully-digital antenna of the same size.

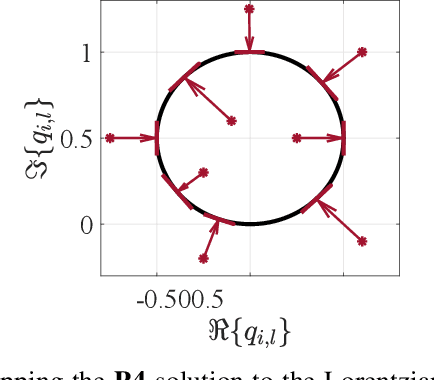

Energy Beamforming for RF Wireless Power Transfer with Dynamic Metasurface Antennas

Jul 03, 2023

Abstract: Radio frequency (RF) wireless power transfer (WPT) is a promising technology for Internet of Things networks. However, RF-WPT is still energy inefficient, calling for advances in waveform optimization, distributed antennas, and energy beamforming (EB). In particular, EB can compensate for the severe propagation loss by directing beams toward the devices. The EB flexibility depends on the transmitter architecture, with a trade-off between cost/complexity and degrees of freedom. Thus, simpler architectures such as dynamic metasurface antennas (DMAs) are gaining attention. Herein, we consider an RF-WPT system with a transmit DMA for meeting the energy harvesting (EH) requirements of multiple devices and formulate an optimization problem for the minimum-power design. First, we provide a mathematical model to capture the frequency-dependent signal propagation effect in the DMA architecture. Next, we propose a solution based on semi-definite programming and alternating optimization. Results show that a DMA-based implementation can outperform a fully-digital structure and that utilizing a larger antenna array can reduce the required transmit power, while the operating frequency does not influence the performance much.

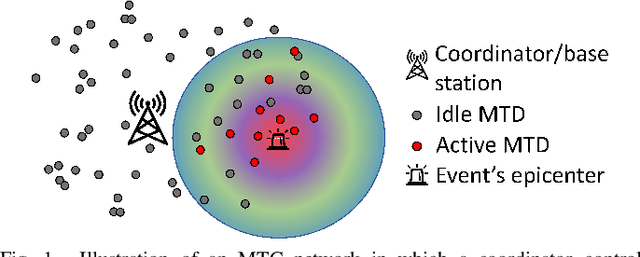

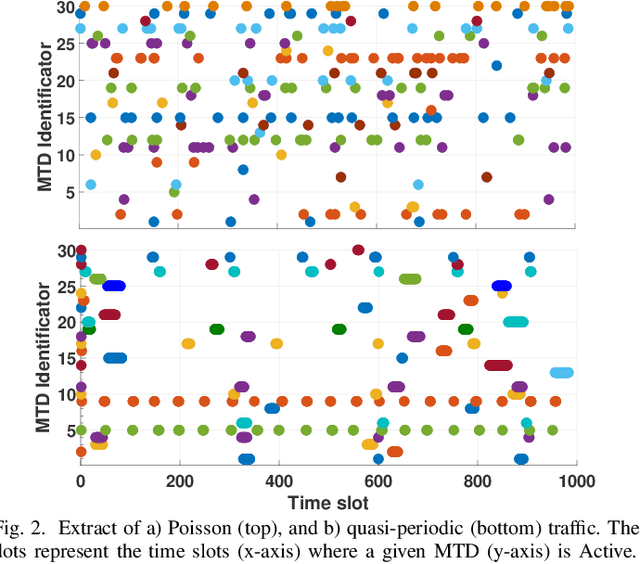

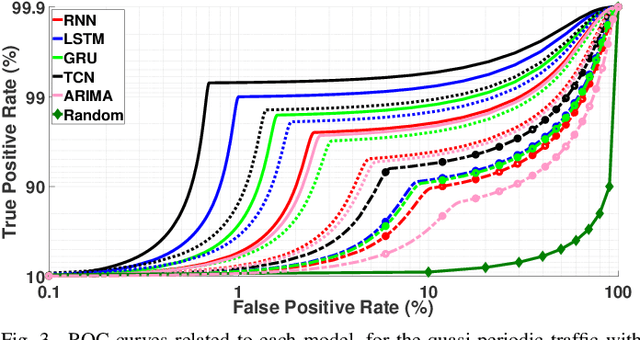

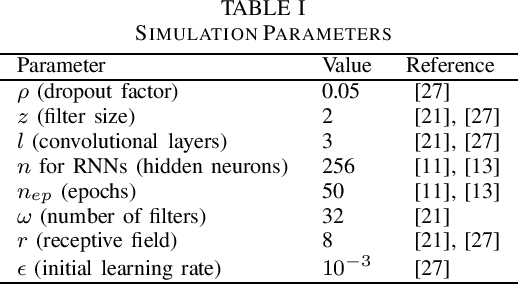

Performance Analysis of ML-based MTC Traffic Pattern Predictors

Apr 04, 2023

Abstract: Prolonging the lifetime of massive machine-type communication (MTC) networks is key to realizing a sustainable digitized society. Great energy savings can be achieved by accurately predicting MTC traffic followed by properly designed resource allocation mechanisms. However, selecting the proper MTC traffic predictor is not straightforward and depends on accuracy/complexity trade-offs and the specific MTC applications and network characteristics. Remarkably, the related state-of-the-art literature still lacks such discussions. Herein, we assess the performance of several machine learning (ML) methods to predict Poisson and quasi-periodic MTC traffic in terms of accuracy and computational cost. Results show that the temporal convolutional network (TCN) outperforms the long short-term memory (LSTM), the gated recurrent unit (GRU), and the recurrent neural network (RNN), in that order. For Poisson traffic, the accuracy gap between the predictors is larger than under quasi-periodic traffic. Finally, we show that running a TCN predictor is around three times more costly than other methods, while the training/inference time is the greatest/least.
