Omar Emara

Segmenting Collision Sound Sources in Egocentric Videos

Nov 17, 2025

Abstract: Humans excel at multisensory perception and can often recognise object properties from the sound of their interactions. Inspired by this, we propose the novel task of Collision Sound Source Segmentation (CS3), where we aim to segment the objects responsible for a collision sound in visual input (i.e. video frames from the collision clip), conditioned on the audio. This task presents unique challenges. Unlike isolated sound events, a collision sound arises from interactions between two objects, and the acoustic signature of the collision depends on both. We focus on egocentric video, where sounds are often clear, but the visual scene is cluttered, objects are small, and interactions are brief. To address these challenges, we propose a weakly-supervised method for audio-conditioned segmentation, utilising foundation models (CLIP and SAM2). We also incorporate egocentric cues, i.e. objects in hands, to find acting objects that can potentially be collision sound sources. Our approach outperforms competitive baselines by $3\times$ and $4.7\times$ in mIoU on two benchmarks we introduce for the CS3 task: EPIC-CS3 and Ego4D-CS3.
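The abstract reports results in mIoU (mean intersection over union). As a rough illustration of that metric only, and not the paper's evaluation code, the sketch below averages per-sample IoU over binary masks given as nested lists of 0/1. The function names, the toy masks, and the convention that two empty masks score 1.0 are all assumptions made for this example.

```python
def iou(pred_mask, gt_mask):
    """Intersection over union of two binary masks (nested lists of 0/1)."""
    intersection = 0
    union = 0
    for pred_row, gt_row in zip(pred_mask, gt_mask):
        for p, g in zip(pred_row, gt_row):
            intersection += 1 if (p and g) else 0
            union += 1 if (p or g) else 0
    # Assumption: two empty masks count as a perfect match.
    return 1.0 if union == 0 else intersection / union


def mean_iou(pred_masks, gt_masks):
    """Average IoU over paired predicted and ground-truth masks."""
    scores = [iou(p, g) for p, g in zip(pred_masks, gt_masks)]
    return sum(scores) / len(scores)


# Toy 2x2 masks (hypothetical data).
preds = [[[1, 1], [0, 0]], [[0, 1], [0, 1]]]
gts = [[[1, 0], [0, 0]], [[0, 1], [0, 1]]]
score = mean_iou(preds, gts)  # first pair scores 0.5, second pair 1.0
```

Benchmarks differ on whether IoU is averaged per frame, per clip, or per object, so the averaging unit here should be read as a placeholder.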

HD-EPIC: A Highly-Detailed Egocentric Video Dataset

Feb 06, 2025

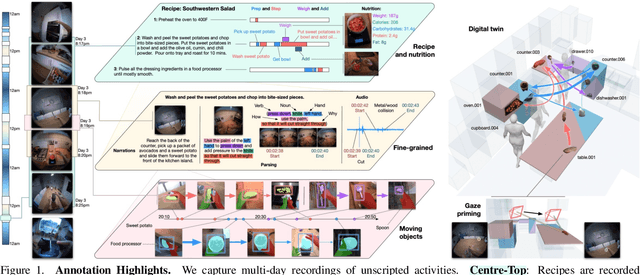

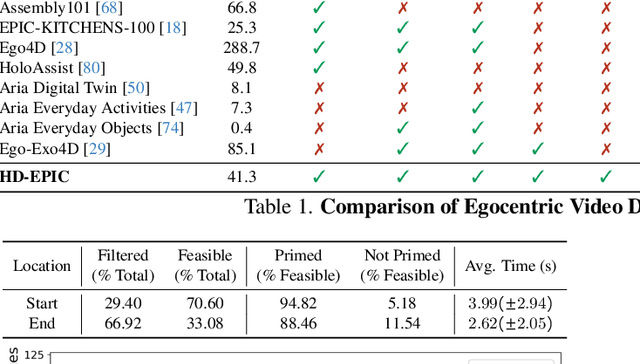

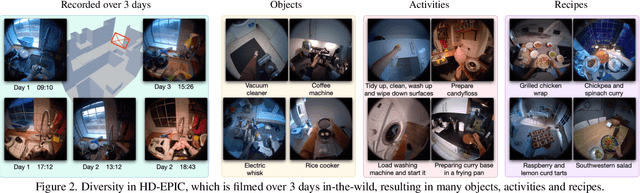

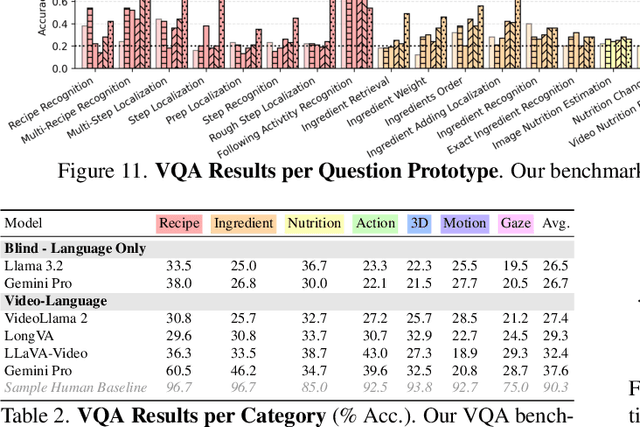

Abstract: We present a validation dataset of newly-collected kitchen-based egocentric videos, manually annotated with highly detailed and interconnected ground-truth labels covering: recipe steps, fine-grained actions, ingredients with nutritional values, moving objects, and audio annotations. Importantly, all annotations are grounded in 3D through digital twinning of the scene, fixtures, object locations, and primed with gaze. Footage is collected from unscripted recordings in diverse home environments, making HD-EPIC the first dataset collected in-the-wild but with detailed annotations matching those in controlled lab environments. We show the potential of our highly-detailed annotations through a challenging VQA benchmark of 26K questions assessing the capability to recognise recipes, ingredients, nutrition, fine-grained actions, 3D perception, object motion, and gaze direction. The powerful long-context Gemini Pro only achieves 38.5% on this benchmark, showcasing its difficulty and highlighting shortcomings in current VLMs. We additionally assess action recognition, sound recognition, and long-term video-object segmentation on HD-EPIC. HD-EPIC is 41 hours of video in 9 kitchens with digital twins of 413 kitchen fixtures, capturing 69 recipes, 59K fine-grained actions, 51K audio events, 20K object movements and 37K object masks lifted to 3D. On average, we have 263 annotations per minute of our unscripted videos.

Skin Cancer Machine Learning Model Tone Bias

Oct 08, 2024

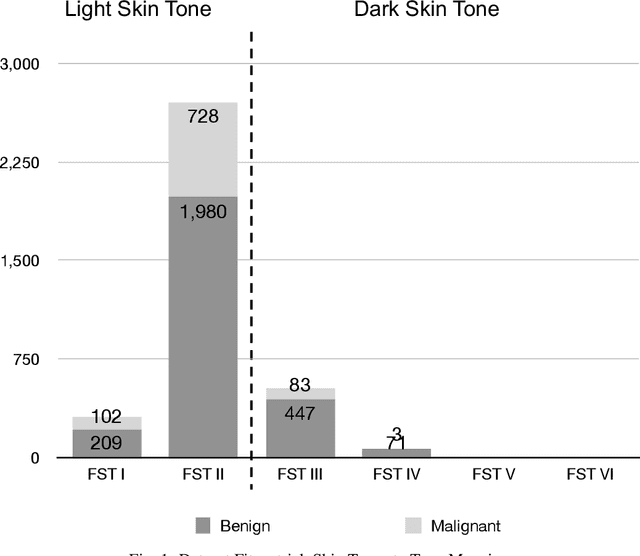

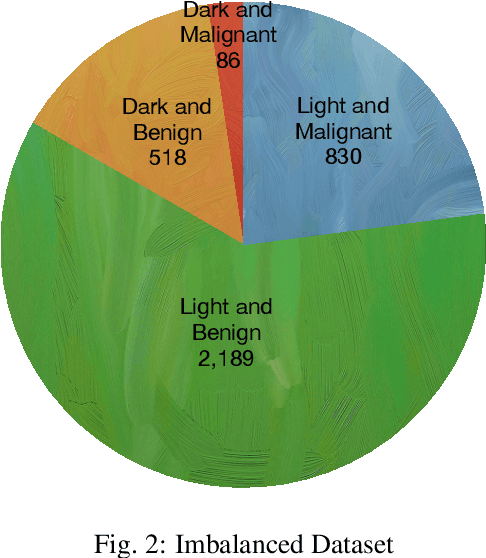

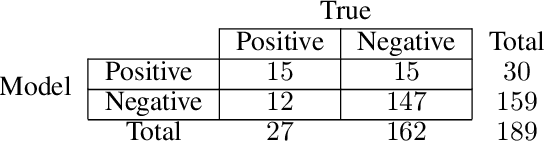

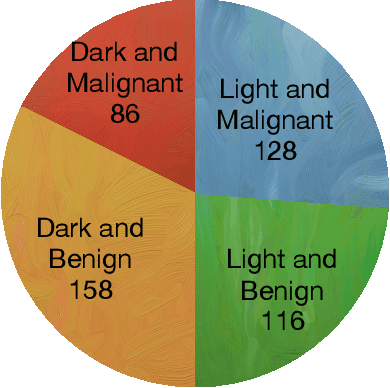

Abstract: Background: Many open-source skin cancer image datasets are the result of clinical trials conducted in countries with lighter skin tones. Due to this tone imbalance, machine learning models derived from these datasets can perform well at detecting skin cancer for lighter skin tones. Any tone bias in these models could introduce fairness concerns and reduce public trust in the artificial intelligence health field. Methods: We examine a subset of images from the International Skin Imaging Collaboration (ISIC) archive that provide tone information. The subset has a significant tone imbalance. These imbalances could explain a model's tone bias. To address this, we train models using the imbalanced dataset and a balanced dataset to compare against. The datasets are used to train a deep convolutional neural network model to classify the images as malignant or benign. We then evaluate the models' disparate impact, based on selection rate, relative to dark or light skin tone. Results: Using the imbalanced dataset, we found that the model is significantly better at detecting malignant images in lighter tones, resulting in a disparate impact of 0.577. Using the balanced dataset, we found that the model is also significantly better at detecting malignant images in lighter versus darker tones, with a disparate impact of 0.684. Using the imbalanced or balanced dataset to train the model still results in a disparate impact well below the standard threshold of 0.80, which suggests the model is biased with respect to skin tone. Conclusion: The results show that typical skin cancer machine learning models can be tone biased. These results provide evidence that diagnosis or tone imbalance is not the cause of the bias. Other techniques will be necessary to identify and address the bias in these models, an area of future investigation.
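The disparate impact figures above are ratios of selection rates between the two tone groups. The sketch below shows how such a ratio is commonly computed. It assumes that the selection rate is the fraction of images predicted malignant and that the darker-tone group is the numerator, which the abstract does not state explicitly. The function names and toy predictions are hypothetical and do not reproduce the paper's data.

```python
def selection_rate(predictions):
    """Fraction of samples predicted positive (1 = malignant, by assumption)."""
    if not predictions:
        raise ValueError("predictions must not be empty")
    return sum(1 for p in predictions if p == 1) / len(predictions)


def disparate_impact(group_preds, reference_preds):
    """Ratio of the group's selection rate to the reference group's rate."""
    reference_rate = selection_rate(reference_preds)
    if reference_rate == 0:
        raise ValueError("reference group has a zero selection rate")
    return selection_rate(group_preds) / reference_rate


# Toy predictions (hypothetical): 2 of 10 darker-tone images and
# 4 of 10 lighter-tone images are predicted malignant.
dark_preds = [1, 0, 0, 0, 1, 0, 0, 0, 0, 0]
light_preds = [1, 1, 0, 0, 1, 0, 0, 1, 0, 0]

di = disparate_impact(dark_preds, light_preds)
below_threshold = di < 0.80  # the 0.80 threshold cited in the abstract
```

Under this convention, a ratio of 1.0 means both groups are selected at the same rate, and values below 0.80 fall under the threshold the abstract cites.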
