Md Hassanuzzaman

Congenital Heart Disease Classification Using Phonocardiograms: A Scalable Screening Tool for Diverse Environments

Mar 28, 2025

Abstract: Congenital heart disease (CHD) is a critical condition that demands early detection, particularly in infancy and childhood. This study presents a deep learning model designed to detect CHD using phonocardiogram (PCG) signals, with a focus on its application in global health. We evaluated our model on several datasets, including the primary dataset from Bangladesh, on which it achieved an accuracy of 94.1%, a sensitivity of 92.7%, and a specificity of 96.3%. The model also demonstrated robust performance on the public PhysioNet Challenge 2022 and 2016 datasets, underscoring its generalizability to diverse populations and data sources. We assessed the performance of the algorithm for single and multiple auscultation sites on the chest, demonstrating that the model maintains over 85% accuracy even when using a single location. Furthermore, our algorithm achieved an accuracy of 80% on low-quality recordings that cardiologists had deemed non-diagnostic. This research suggests that an AI-driven digital stethoscope could serve as a cost-effective screening tool for CHD in resource-limited settings, enhancing clinical decision support and ultimately improving patient outcomes.
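For readers less familiar with the three figures quoted above, the following is a minimal sketch of how accuracy, sensitivity, and specificity are conventionally derived from binary predictions. The function name `screening_metrics` and the label encoding (1 for CHD, 0 for normal) are assumptions made for illustration; the abstract does not describe the authors' evaluation code.

```python
def screening_metrics(y_true, y_pred):
    """Return (accuracy, sensitivity, specificity) for binary labels.

    Assumed encoding (not from the paper): 1 = CHD, 0 = normal.
    """
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("label lists must be non-empty and of equal length")

    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)  # CHD correctly flagged
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)  # normal correctly cleared
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # normal wrongly flagged
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)  # CHD missed

    if tp + fn == 0 or tn + fp == 0:
        raise ValueError("both classes must be present in y_true")

    accuracy = (tp + tn) / len(pairs)
    sensitivity = tp / (tp + fn)  # fraction of CHD cases detected
    specificity = tn / (tn + fp)  # fraction of normal cases cleared
    return accuracy, sensitivity, specificity
```

In a screening context, sensitivity is usually the figure to watch first, because a missed CHD case is costlier than a false referral.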

Skin Cancer Machine Learning Model Tone Bias

Oct 08, 2024

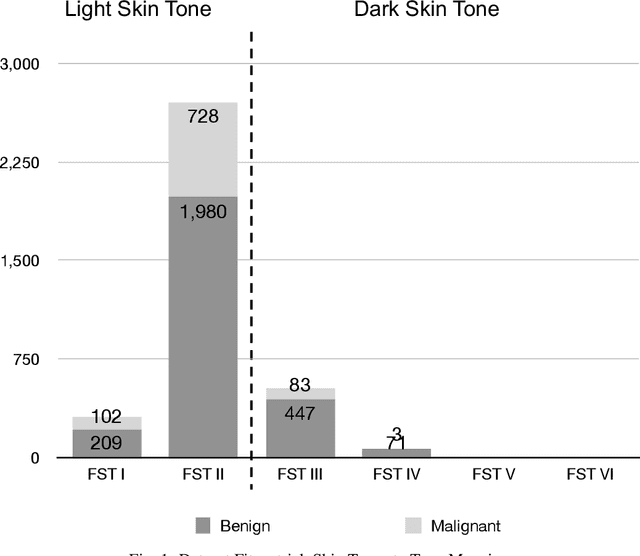

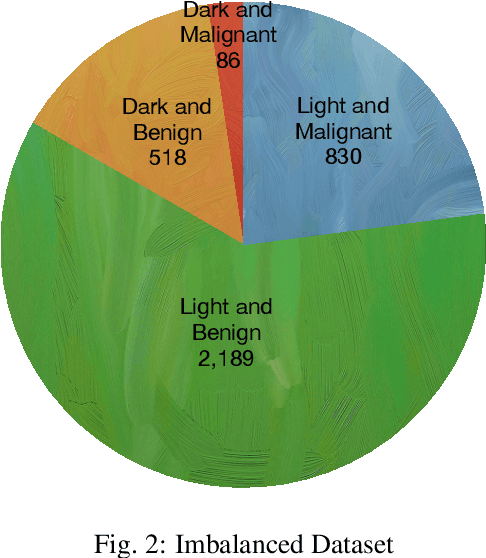

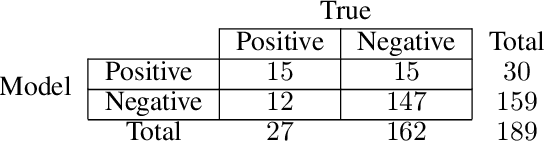

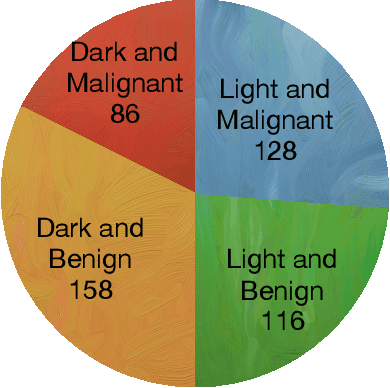

Abstract: Background: Many open-source skin cancer image datasets are the result of clinical trials conducted in countries with lighter skin tones. Due to this tone imbalance, machine learning models derived from these datasets can perform well at detecting skin cancer for lighter skin tones. Any tone bias in these models could introduce fairness concerns and reduce public trust in the artificial intelligence health field. Methods: We examine a subset of images from the International Skin Imaging Collaboration (ISIC) archive that provide tone information. The subset has a significant tone imbalance, which could explain a model's tone bias. To test this, we train a deep convolutional neural network to classify the images as malignant or benign, once on the imbalanced dataset and once on a balanced dataset for comparison. We then evaluate the models' disparate impact, based on selection rate, relative to dark or light skin tone. Results: Using the imbalanced dataset, we found that the model is significantly better at detecting malignant images in lighter tones, resulting in a disparate impact of 0.577. Using the balanced dataset, we found that the model is also significantly better at detecting malignant images in lighter versus darker tones, with a disparate impact of 0.684. Training on either the imbalanced or the balanced dataset still results in a disparate impact well below the standard threshold of 0.80, which suggests the model is biased with respect to skin tone. Conclusion: The results show that typical skin cancer machine learning models can be tone biased. These results provide evidence that diagnosis or tone imbalance is not the cause of the bias. Other techniques will be necessary to identify and address the bias in these models, an area of future investigation.
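The disparate impact figures above are ratios of selection rates between two groups. The following is a minimal sketch of that calculation under two assumptions the abstract does not confirm: that "selection" means the model predicts malignant, and that the darker-tone group is the numerator and the lighter-tone group the denominator. The function names are hypothetical.

```python
def selection_rate(predictions):
    """Fraction of cases predicted positive (assumed here: 1 = malignant)."""
    if not predictions:
        raise ValueError("predictions must be non-empty")
    return sum(predictions) / len(predictions)


def disparate_impact(preds_dark, preds_light):
    """Ratio of selection rates, darker-tone group over lighter-tone group.

    The group ordering is an assumption for illustration; a value of 1.0
    means both groups are selected at the same rate.
    """
    light_rate = selection_rate(preds_light)
    if light_rate == 0:
        raise ValueError("reference group has a selection rate of zero")
    return selection_rate(preds_dark) / light_rate


def below_threshold(ratio, threshold=0.80):
    """Check a ratio against the 0.80 threshold cited in the abstract."""
    return ratio < threshold
```

With this convention, the reported values of 0.577 and 0.684 both fall below 0.80, which is the comparison the abstract's conclusion rests on.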

Classification of Short Segment Pediatric Heart Sounds Based on a Transformer-Based Convolutional Neural Network

Mar 30, 2024

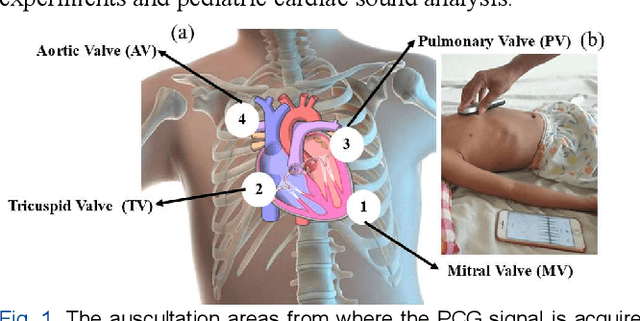

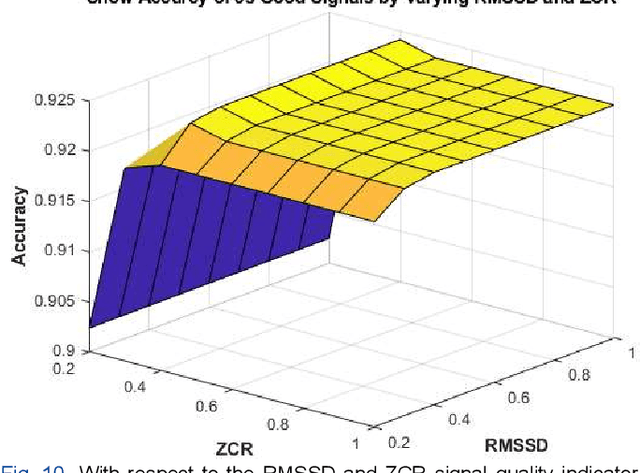

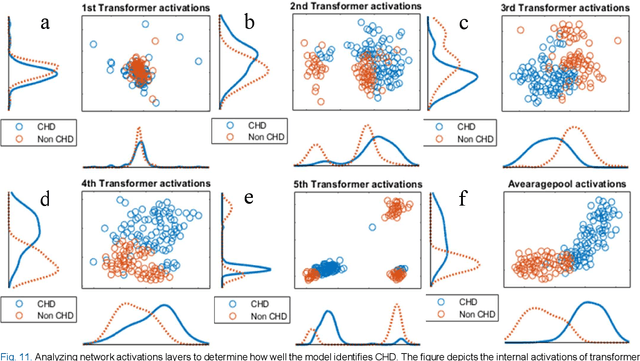

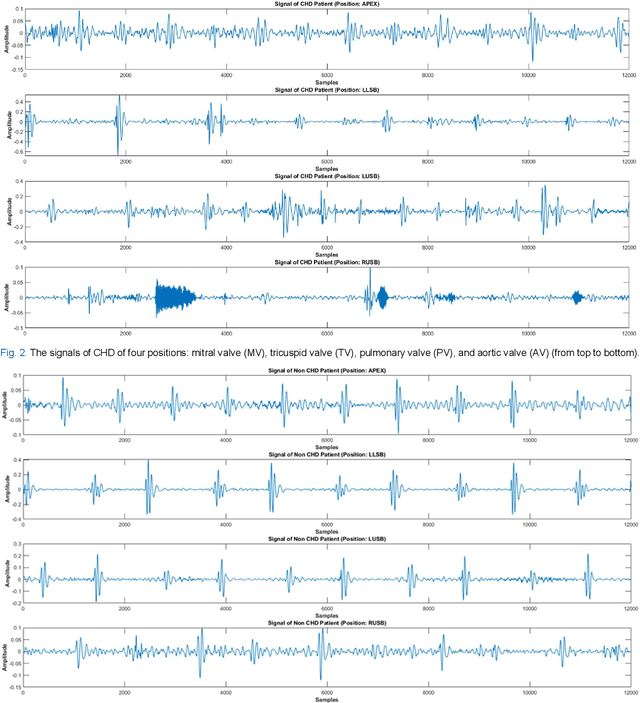

Abstract: Congenital anomalies arising from a defect in the structure of the heart and great vessels are known as congenital heart diseases (CHDs). A phonocardiogram (PCG) can provide essential details about the mechanical conduction system of the heart and point out specific patterns linked to different kinds of CHD. This study investigates the minimum signal duration required for the automatic classification of heart sounds. It also investigates the optimum values of two signal quality assessment indicators: the root mean square of successive differences (RMSSD) and the zero-crossing rate (ZCR). Features based on Mel-frequency cepstral coefficients (MFCCs) are used as input to a Transformer-based residual one-dimensional convolutional neural network, which then classifies the heart sounds. The study showed that 0.4 is the ideal threshold for the RMSSD and ZCR indicators when selecting suitable signals. Moreover, a minimum signal length of 5 s is required for effective heart sound classification. The results also show that a shorter signal (3 s) does not contain enough information to categorize heart sounds accurately, while a longer signal (15 s) may contain more noise. The best accuracy, 93.69%, is obtained with the 5 s signal.
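The two quality indicators and the fixed-length segmentation named above can be sketched as follows. This uses the textbook definitions of RMSSD and ZCR applied directly to the sample sequence; the abstract does not state the authors' exact formulas, normalization, or how the 0.4 threshold is applied, so none of that is reproduced here. The function names and the `sample_rate_hz` parameter are hypothetical.

```python
import math


def rmssd(signal):
    """Root mean square of successive differences between adjacent samples."""
    diffs = [b - a for a, b in zip(signal, signal[1:])]
    if not diffs:
        raise ValueError("signal needs at least two samples")
    return math.sqrt(sum(d * d for d in diffs) / len(diffs))


def zero_crossing_rate(signal):
    """Fraction of adjacent sample pairs whose sign differs."""
    pairs = list(zip(signal, signal[1:]))
    if not pairs:
        raise ValueError("signal needs at least two samples")
    crossings = sum(1 for a, b in pairs if (a >= 0) != (b >= 0))
    return crossings / len(pairs)


def split_segments(signal, sample_rate_hz, seconds=5.0):
    """Cut a recording into non-overlapping segments of fixed duration.

    A trailing remainder shorter than one full segment is dropped.
    """
    n = int(round(sample_rate_hz * seconds))
    if n <= 0:
        raise ValueError("segment length must be positive")
    return [signal[i:i + n] for i in range(0, len(signal) - n + 1, n)]
```

A screening pipeline along these lines would compute both indicators per segment and keep only segments judged suitable, before extracting MFCC features for the classifier.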
