Olivia Brown

From Snow to Rain: Evaluating Robustness, Calibration, and Complexity of Model-Based Robust Training

Jan 14, 2026

Abstract:Robustness to natural corruptions remains a critical challenge for reliable deep learning, particularly in safety-sensitive domains. We study a family of model-based training approaches that leverage a learned nuisance variation model to generate realistic corruptions, as well as new hybrid strategies that combine random coverage with adversarial refinement in nuisance space. Using the Challenging Unreal and Real Environments for Traffic Sign Recognition dataset (CURE-TSR), with Snow and Rain corruptions, we evaluate accuracy, calibration, and training complexity across corruption severities. Our results show that model-based methods consistently outperform the Vanilla, Adversarial Training, and AugMix baselines. Model-based adversarial training provides the strongest robustness across all corruptions, but at the expense of higher computation, while model-based data augmentation achieves comparable robustness with $T$ less computational complexity without incurring a statistically significant drop in performance. These findings highlight the importance of learned nuisance models for capturing natural variability, and suggest a promising path toward more resilient and calibrated models under challenging conditions.

Proceedings of the Robust Artificial Intelligence System Assurance (RAISA) Workshop 2022

Feb 10, 2022

Abstract:The Robust Artificial Intelligence System Assurance (RAISA) workshop will focus on research, development and application of robust artificial intelligence (AI) and machine learning (ML) systems. Rather than studying robustness with respect to particular ML algorithms, our approach will be to explore robustness assurance at the system architecture level, during both development and deployment, and within the human-machine teaming context. While the research community is converging on robust solutions for individual AI models in specific scenarios, the problem of evaluating and assuring the robustness of an AI system across its entire life cycle is much more complex. Moreover, the operational context in which AI systems are deployed necessitates consideration of robustness and its relation to principles of fairness, privacy, and explainability.

Tools and Practices for Responsible AI Engineering

Jan 14, 2022

Abstract:Responsible Artificial Intelligence (AI) - the practice of developing, evaluating, and maintaining accurate AI systems that also exhibit essential properties such as robustness and explainability - represents a multifaceted challenge that often stretches standard machine learning tooling, frameworks, and testing methods beyond their limits. In this paper, we present two new software libraries - hydra-zen and the rAI-toolbox - that address critical needs for responsible AI engineering. hydra-zen dramatically simplifies the process of making complex AI applications configurable, and their behaviors reproducible. The rAI-toolbox is designed to enable methods for evaluating and enhancing the robustness of AI-models in a way that is scalable and that composes naturally with other popular ML frameworks. We describe the design principles and methodologies that make these tools effective, including the use of property-based testing to bolster the reliability of the tools themselves. Finally, we demonstrate the composability and flexibility of the tools by showing how various use cases from adversarial robustness and explainable AI can be concisely implemented with familiar APIs.

Principles for Evaluation of AI/ML Model Performance and Robustness

Jul 06, 2021

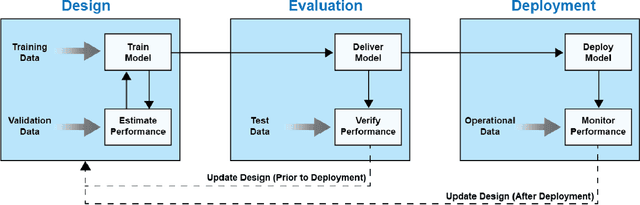

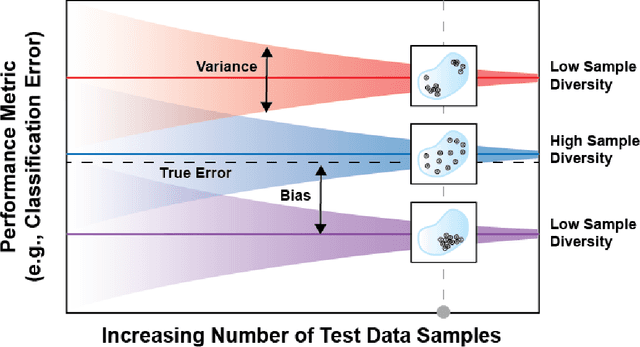

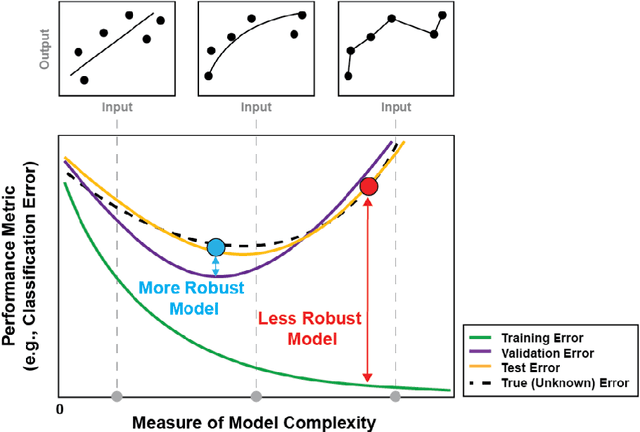

Abstract:The Department of Defense (DoD) has significantly increased its investment in the design, evaluation, and deployment of Artificial Intelligence and Machine Learning (AI/ML) capabilities to address national security needs. While there are numerous AI/ML successes in the academic and commercial sectors, many of these systems have also been shown to be brittle and nonrobust. In a complex and ever-changing national security environment, it is vital that the DoD establish a sound and methodical process to evaluate the performance and robustness of AI/ML models before these new capabilities are deployed to the field. This paper reviews the AI/ML development process, highlights common best practices for AI/ML model evaluation, and makes recommendations to DoD evaluators to ensure the deployment of robust AI/ML capabilities for national security needs.

Fast Training of Deep Neural Networks Robust to Adversarial Perturbations

Jul 08, 2020

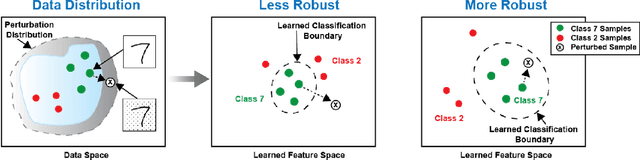

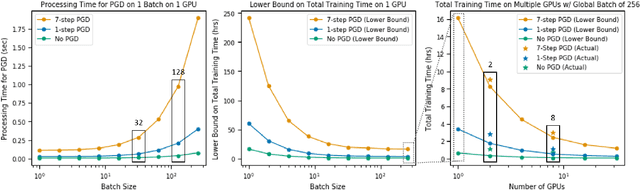

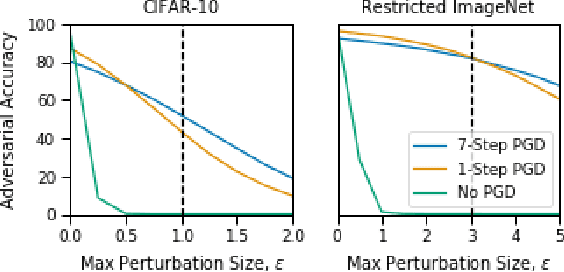

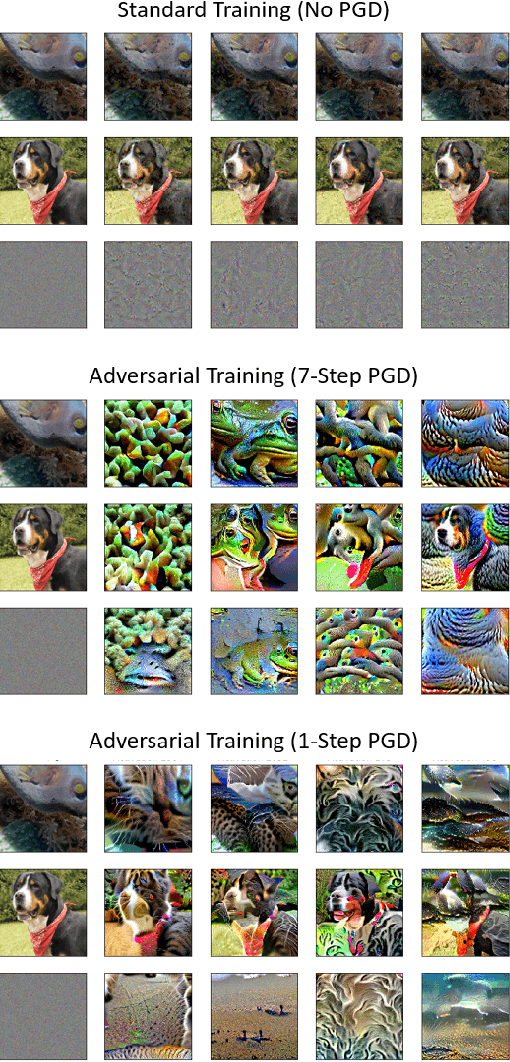

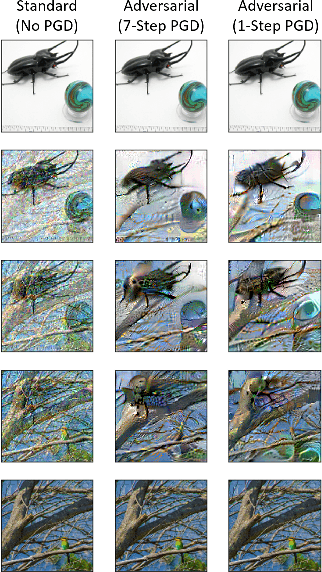

Abstract:Deep neural networks are capable of training fast and generalizing well within many domains. Despite their promising performance, deep networks have shown sensitivities to perturbations of their inputs (e.g., adversarial examples) and their learned feature representations are often difficult to interpret, raising concerns about their true capability and trustworthiness. Recent work in adversarial training, a form of robust optimization in which the model is optimized against adversarial examples, demonstrates the ability to reduce sensitivity to perturbations and yield feature representations that are more interpretable. Adversarial training, however, comes with an increased computational cost over that of standard (i.e., nonrobust) training, rendering it impractical for use in large-scale problems. Recent work suggests that a fast approximation to adversarial training shows promise for reducing training time and maintaining robustness in the presence of perturbations bounded by the infinity norm. In this work, we demonstrate that this approach extends to the Euclidean norm and preserves the human-aligned feature representations that are common for robust models. Additionally, we show that using a distributed training scheme can further reduce the time to train robust deep networks. Fast adversarial training is a promising approach that will provide increased security and explainability in machine learning applications for which robust optimization was previously thought to be impractical.
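As a rough illustration of what a single-step perturbation bounded by the Euclidean norm can look like, the sketch below takes one step along the loss gradient and rescales it to have L2 norm `epsilon`. It is not taken from the paper: the linear logistic model, the helper names (`bce_loss`, `logistic_loss_input_grad`, `l2_fast_gradient_step`), and the example values are all assumptions chosen so the gradient can be written by hand in plain Python.

```python
import math

def bce_loss(w, b, x, y):
    """Binary cross-entropy of a linear logistic model (hypothetical toy model); y is 0 or 1."""
    z = sum(wi * xi for wi, xi in zip(w, x)) + b
    p = 1.0 / (1.0 + math.exp(-z))
    return -(y * math.log(p) + (1 - y) * math.log(1.0 - p))

def logistic_loss_input_grad(w, b, x, y):
    """Gradient of the loss above with respect to the input x: (p - y) * w."""
    z = sum(wi * xi for wi, xi in zip(w, x)) + b
    p = 1.0 / (1.0 + math.exp(-z))
    return [(p - y) * wi for wi in w]

def l2_fast_gradient_step(x, grad, epsilon):
    """Move x along grad, with the step rescaled to have L2 norm epsilon."""
    norm = math.sqrt(sum(g * g for g in grad))
    if norm == 0.0:
        return list(x)  # no direction to move in
    return [xi + epsilon * g / norm for xi, g in zip(x, grad)]

# Assumed example values, for illustration only.
w, b = [3.0, 4.0], 0.0
x, y = [0.1, -0.2], 1
x_adv = l2_fast_gradient_step(x, logistic_loss_input_grad(w, b, x, y), epsilon=0.5)
```

In a training loop, a perturbed input such as `x_adv` would replace the clean input when computing the parameter update; an infinity-norm variant would use the sign of the gradient instead of the L2-normalized gradient.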

Safe Predictors for Enforcing Input-Output Specifications

Jan 29, 2020

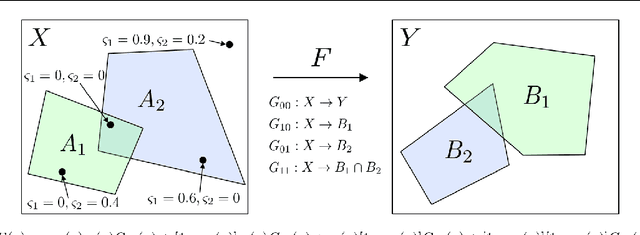

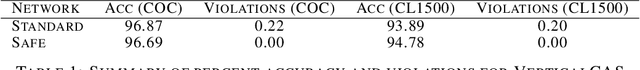

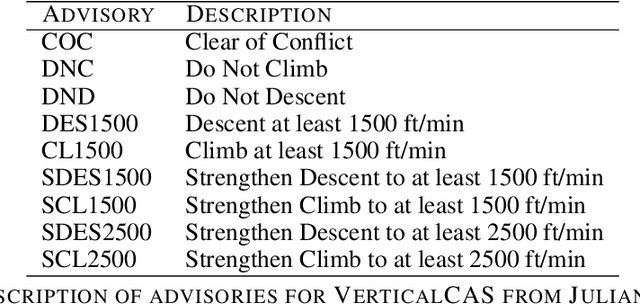

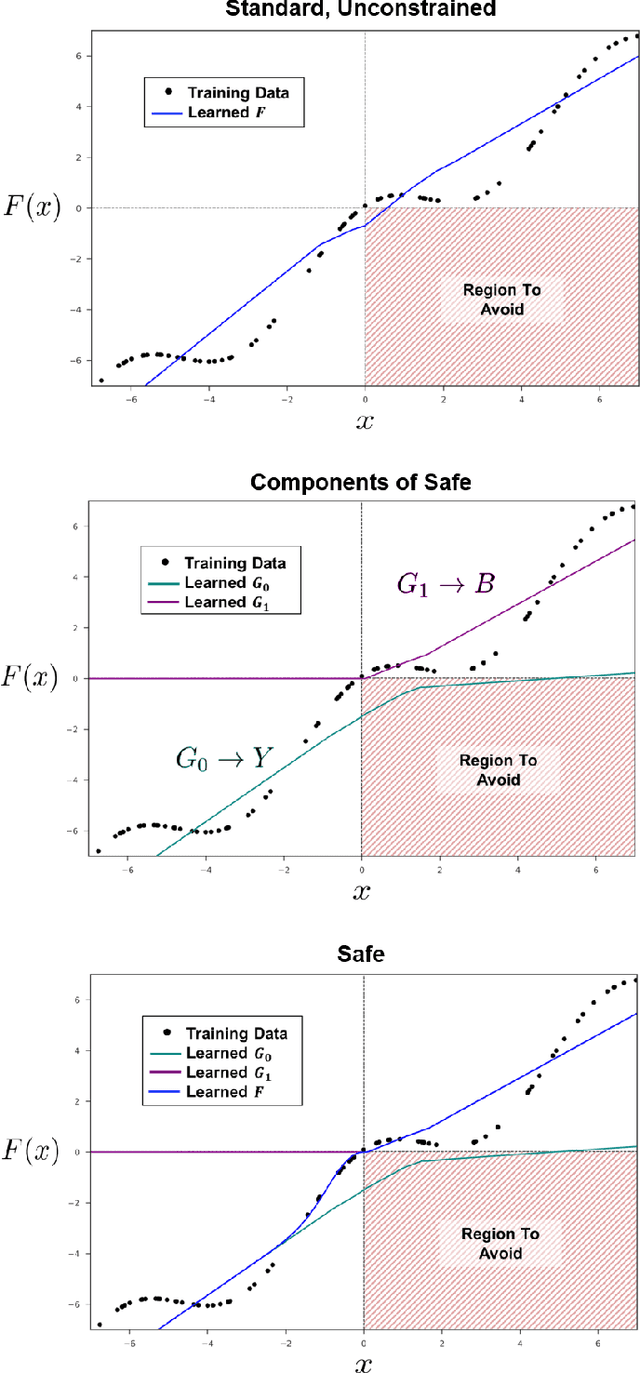

Abstract:We present an approach for designing correct-by-construction neural networks (and other machine learning models) that are guaranteed to be consistent with a collection of input-output specifications before, during, and after algorithm training. Our method involves designing a constrained predictor for each set of compatible constraints, and combining them safely via a convex combination of their predictions. We demonstrate our approach on synthetic datasets and an aircraft collision avoidance problem.
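The role of the convex combination can be illustrated with a deliberately simplified sketch. It assumes a single interval constraint on a scalar output, enforces it on each predictor by clipping, and mixes the results with softmax weights; because the interval is convex, any such mixture also satisfies the constraint, whatever the predictor or weight parameters are. The function names and the clipping construction are illustrative assumptions, not the paper's implementation.

```python
import math

def clip_to_interval(value, lower, upper):
    """Force a scalar prediction into [lower, upper]."""
    return max(lower, min(upper, value))

def softmax(scores):
    """Nonnegative weights that sum to one (up to floating-point rounding)."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def safe_combination(x, predictors, scores_fn, lower, upper):
    """Convex combination of individually constrained predictions."""
    constrained = [clip_to_interval(f(x), lower, upper) for f in predictors]
    weights = softmax(scores_fn(x))
    return sum(w * c for w, c in zip(weights, constrained))

# Hypothetical predictors and weighting function, for illustration only.
predictors = [lambda x: 10.0 * x, lambda x: -5.0 * x + 1.0]
scores_fn = lambda x: [x, -x]
outputs = [safe_combination(x, predictors, scores_fn, 0.0, 1.0)
           for x in (-2.0, -0.3, 0.0, 0.05, 0.4, 3.0)]
```

Every value in `outputs` stays within the interval regardless of how the unconstrained predictors behave, which is the sense in which the guarantee holds before, during, and after training.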

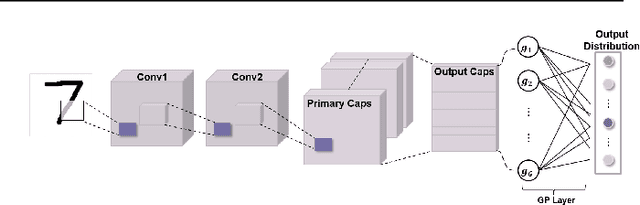

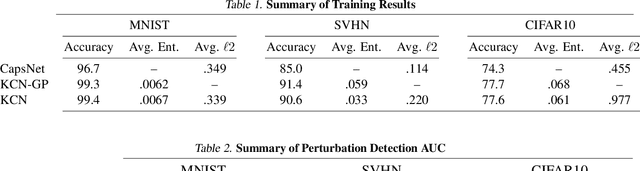

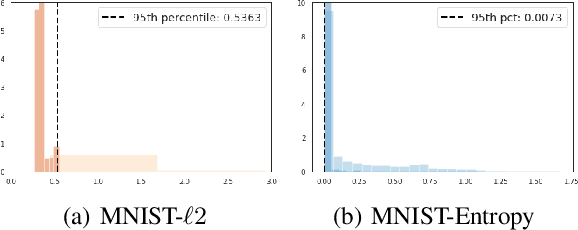

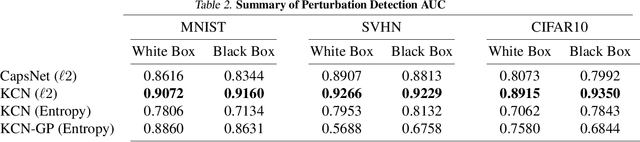

Kernelized Capsule Networks

Jun 07, 2019

Abstract:Capsule Networks attempt to represent patterns in images in a way that preserves hierarchical spatial relationships. Additionally, research has demonstrated that these techniques may be robust against adversarial perturbations. We present an improvement to training capsule networks with added robustness via non-parametric kernel methods. The representations learned through the capsule network are used to construct covariance kernels for Gaussian processes (GPs). We demonstrate that this approach achieves comparable prediction performance to Capsule Networks while improving robustness to adversarial perturbations and providing a meaningful measure of uncertainty that may aid in the detection of adversarial inputs.
