Michael Yee

Mahalanobis-Aware Training for Out-of-Distribution Detection

Nov 01, 2023

Abstract: While deep learning models have seen widespread success in controlled environments, there are still barriers to their adoption in open-world settings. One critical task for safe deployment is the detection of anomalous or out-of-distribution samples that may require human intervention. In this work, we present a novel loss function and recipe for training networks with improved density-based out-of-distribution sensitivity. We demonstrate the effectiveness of our method on CIFAR-10, notably reducing the false-positive rate of the relative Mahalanobis distance method on far-OOD tasks by over 50%.
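For readers unfamiliar with the relative Mahalanobis distance score mentioned in this abstract, the following is a minimal NumPy sketch of that scoring rule as it is commonly defined: the class-conditional Mahalanobis distance minus the distance under a single label-free "background" Gaussian, minimized over classes. It illustrates the baseline detector only, not the paper's loss function or training recipe. The function names and the synthetic 2-D features are assumptions made for illustration.

```python
import numpy as np

def fit_gaussians(features, labels):
    """Fit class means with a shared covariance, plus one background Gaussian."""
    classes = np.unique(labels)
    means = np.stack([features[labels == c].mean(axis=0) for c in classes])
    # Shared covariance: pool the class-centered features.
    centered = features - means[np.searchsorted(classes, labels)]
    shared_cov = centered.T @ centered / len(features)
    # The background Gaussian ignores the labels entirely.
    bg_mean = features.mean(axis=0)
    bg_cov = np.cov(features, rowvar=False, bias=True)
    return means, np.linalg.pinv(shared_cov), bg_mean, np.linalg.pinv(bg_cov)

def sq_mahalanobis(x, mean, precision):
    """Squared Mahalanobis distance of each row of x to a Gaussian."""
    diff = x - mean
    return np.einsum("...i,ij,...j->...", diff, precision, diff)

def rmd_score(x, means, shared_prec, bg_mean, bg_prec):
    """Relative Mahalanobis distance; larger means more likely OOD."""
    md_classes = np.stack(
        [sq_mahalanobis(x, m, shared_prec) for m in means], axis=-1
    )
    md_bg = sq_mahalanobis(x, bg_mean, bg_prec)
    return (md_classes - md_bg[..., None]).min(axis=-1)

# Synthetic stand-in for penultimate-layer features (hypothetical data).
rng = np.random.default_rng(0)
feats = np.concatenate([
    rng.normal([-3.0, 0.0], 1.0, size=(500, 2)),
    rng.normal([3.0, 0.0], 1.0, size=(500, 2)),
])
labels = np.repeat([0, 1], 500)
params = fit_gaussians(feats, labels)

# One point near a class mean, one point far from all training data.
queries = np.array([[-3.0, 0.0], [0.0, 20.0]])
scores = rmd_score(queries, *params)
```

In practice the features would come from a trained network, and a threshold on the score would be chosen on held-out in-distribution data.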

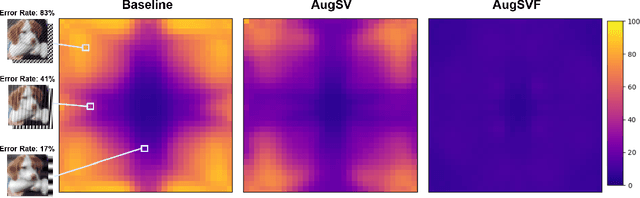

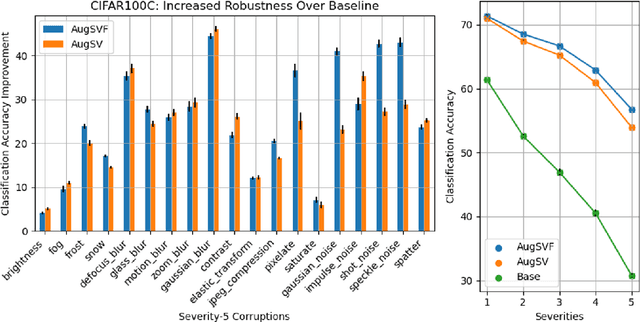

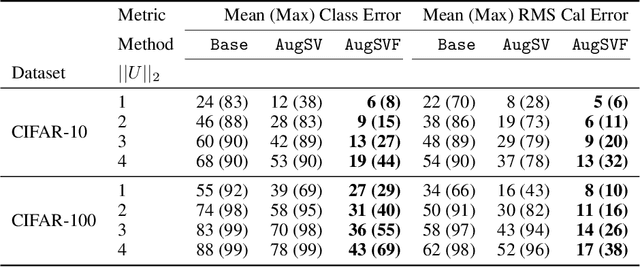

Fourier-Based Augmentations for Improved Robustness and Uncertainty Calibration

Feb 24, 2022

Abstract: Diverse data augmentation strategies are a natural approach to improving robustness in computer vision models against unforeseen shifts in data distribution. However, the ability to tailor such strategies to inoculate a model against specific classes of corruptions or attacks -- without incurring substantial losses in robustness against other classes of corruptions -- remains elusive. In this work, we successfully harden a model against Fourier-based attacks, while producing superior-to-AugMix accuracy and calibration results on both the CIFAR-10-C and CIFAR-100-C datasets; classification error is reduced by over ten percentage points for some high-severity noise and digital-type corruptions. We achieve this by incorporating Fourier-basis perturbations in the AugMix image-augmentation framework. Thus we demonstrate that the AugMix framework can be tailored to effectively target particular distribution shifts, while boosting overall model robustness.
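As a rough illustration of what a Fourier-basis perturbation looks like, the sketch below builds a real-valued, unit-norm image whose spectrum is concentrated at one spatial frequency and adds it to an input with a random sign per channel. This shows the perturbation alone; how the paper wires it into the AugMix mixing chains is not reproduced here. The function names, the `eps` scale, and the random test image are assumptions.

```python
import numpy as np

def fourier_basis(size, i, j):
    """Real, unit-norm image whose spectrum is concentrated at frequency (i, j)."""
    spectrum = np.zeros((size, size), dtype=complex)
    spectrum[i % size, j % size] = 1.0
    # The conjugate-symmetric partner keeps the inverse transform real.
    spectrum[-i % size, -j % size] = 1.0
    basis = np.fft.ifft2(spectrum).real
    return basis / np.linalg.norm(basis)

def fourier_perturb(image, i, j, eps, rng):
    """Add a scaled Fourier-basis image to a square H x W x C image in [0, 1]."""
    height, _, channels = image.shape
    basis = fourier_basis(height, i, j)
    # Independent random sign for each color channel.
    signs = rng.choice([-1.0, 1.0], size=channels)
    return np.clip(image + eps * basis[..., None] * signs, 0.0, 1.0)

# Hypothetical 32 x 32 RGB input, the same shape as a CIFAR image.
rng = np.random.default_rng(0)
image = rng.random((32, 32, 3))
basis = fourier_basis(32, 3, 5)
perturbed = fourier_perturb(image, 3, 5, eps=4.0, rng=rng)
```

Sweeping `(i, j)` over low and high frequencies gives a family of perturbations that an augmentation pipeline could sample from.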

Tools and Practices for Responsible AI Engineering

Jan 14, 2022

Abstract: Responsible Artificial Intelligence (AI) - the practice of developing, evaluating, and maintaining accurate AI systems that also exhibit essential properties such as robustness and explainability - represents a multifaceted challenge that often stretches standard machine learning tooling, frameworks, and testing methods beyond their limits. In this paper, we present two new software libraries - hydra-zen and the rAI-toolbox - that address critical needs for responsible AI engineering. hydra-zen dramatically simplifies the process of making complex AI applications configurable, and their behaviors reproducible. The rAI-toolbox is designed to enable methods for evaluating and enhancing the robustness of AI models in a way that is scalable and that composes naturally with other popular ML frameworks. We describe the design principles and methodologies that make these tools effective, including the use of property-based testing to bolster the reliability of the tools themselves. Finally, we demonstrate the composability and flexibility of the tools by showing how various use cases from adversarial robustness and explainable AI can be concisely implemented with familiar APIs.

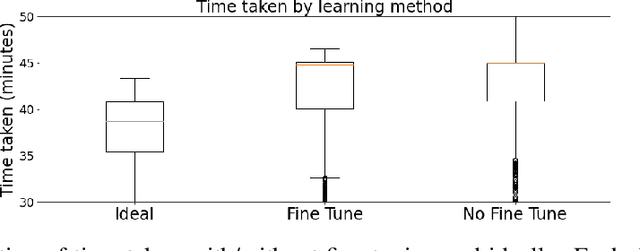

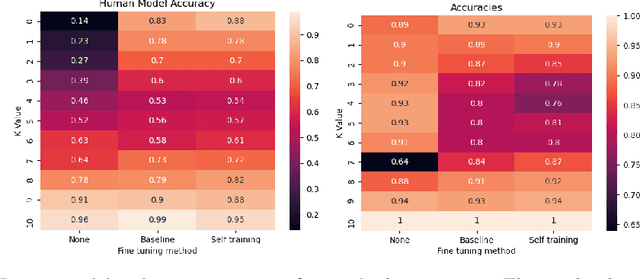

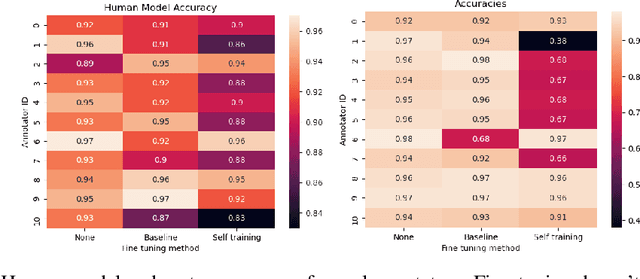

Improving Learning-to-Defer Algorithms Through Fine-Tuning

Dec 18, 2021

Abstract: The ubiquity of AI leads to situations where humans and AI work together, creating the need for learning-to-defer algorithms that determine how to partition tasks between AI and humans. We work to improve learning-to-defer algorithms when paired with specific individuals by incorporating two fine-tuning algorithms and testing their efficacy using both synthetic and image datasets. We find that fine-tuning can pick up on simple human skill patterns, but struggles with nuance, and we suggest future work that uses robust semi-supervised methods to improve learning.
