Okyaz Eminaga

Critical Evaluation of Artificial Intelligence as Digital Twin of Pathologist for Prostate Cancer Pathology

Aug 23, 2023

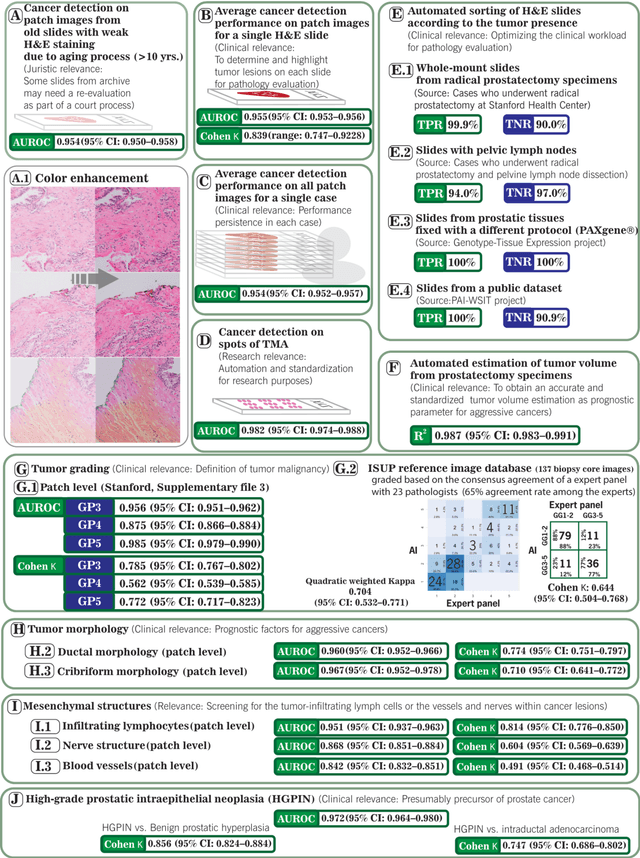

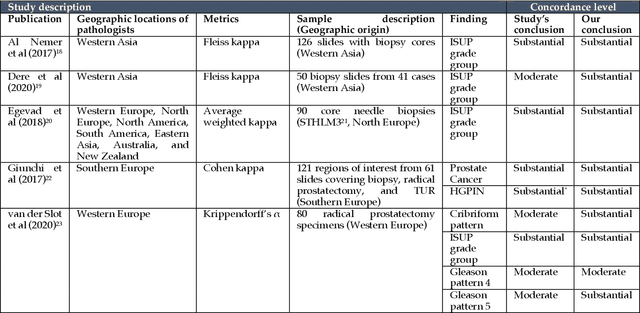

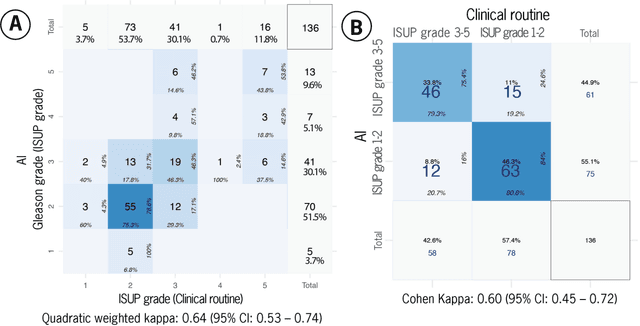

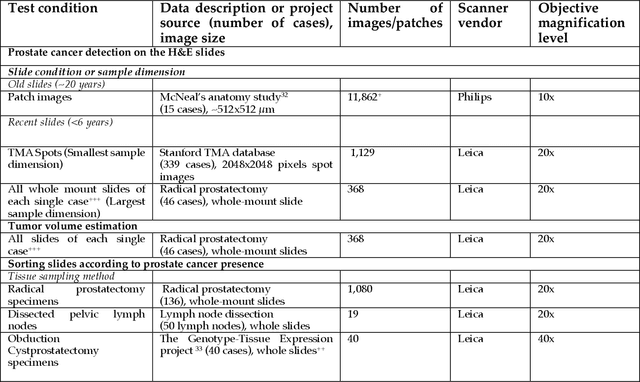

Abstract: Prostate cancer pathology plays a crucial role in clinical management but is time-consuming. Artificial intelligence (AI) shows promise in detecting prostate cancer and grading patterns. We tested an AI-based digital twin of a pathologist, vPatho, on 2,603 histology images of prostate tissue stained with hematoxylin and eosin. We analyzed various factors influencing tumor-grade disagreement between vPatho and six human pathologists. Our results demonstrated that vPatho achieved performance in prostate cancer detection and tumor volume estimation comparable to that reported in the literature. Concordance levels between vPatho and human pathologists were examined. Notably, moderate to substantial agreement was observed in identifying complementary histological features such as ductal and cribriform morphology, nerves, blood vessels, and lymphocyte infiltration. However, concordance in tumor grading was lower on prostatectomy specimens (kappa = 0.44) than on biopsy cores (kappa = 0.70). Adjusting the decision threshold for the secondary Gleason pattern from 5% to 10% improved the concordance level between pathologists and vPatho for tumor grading on prostatectomy specimens (kappa from 0.44 to 0.64). Potential causes of grade discordance included the vertical extent of tumors toward the prostate boundary and the proportions of slides with prostate cancer. Gleason pattern 4 was particularly associated with discordance. Notably, grade discordance with vPatho was not specific to any of the six pathologists involved in routine clinical grading. In conclusion, our study highlights the potential utility of AI in developing a digital twin of a pathologist. This approach can help uncover limitations in AI adoption and the current grading system for prostate cancer pathology.
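For readers unfamiliar with the kappa values quoted above, the following is a minimal sketch of unweighted Cohen's kappa for two raters. The abstract does not state whether the study used a weighted or unweighted variant, so the unweighted form is an assumption here, and the function name `cohen_kappa` and the example grade lists are hypothetical.

```python
from collections import Counter


def cohen_kappa(rater_a, rater_b):
    """Unweighted Cohen's kappa for two equal-length label sequences.

    kappa = (p_o - p_e) / (1 - p_e), where p_o is the observed agreement
    and p_e is the agreement expected by chance from the marginals.
    """
    if len(rater_a) != len(rater_b) or len(rater_a) == 0:
        raise ValueError("both raters need the same non-zero number of labels")

    n = len(rater_a)
    # Observed agreement: fraction of cases where both raters gave the same label.
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n

    # Chance agreement: product of the marginal label frequencies, summed over labels.
    count_a = Counter(rater_a)
    count_b = Counter(rater_b)
    expected = sum(count_a[label] * count_b[label] for label in count_a) / (n * n)

    if expected == 1.0:
        # Both raters used a single identical label; agreement is trivially perfect.
        return 1.0
    return (observed - expected) / (1.0 - expected)


# Hypothetical grade groups assigned by a pathologist and by an AI model.
pathologist = [1, 2, 2, 3, 4, 5, 2, 3]
model = [1, 2, 3, 3, 4, 5, 2, 2]
kappa = cohen_kappa(pathologist, model)
```

Grading comparisons on ordinal scales often use a weighted kappa instead, which penalizes large disagreements more than adjacent ones; this sketch treats all disagreements equally.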

Conceptual Framework and Documentation Standards of Cystoscopic Media Content for Artificial Intelligence

Jan 18, 2023

Abstract: Background: The clinical documentation of cystoscopy includes visual and textual materials. However, the secondary use of visual cystoscopic data for educational and research purposes remains limited due to inefficient data management in routine clinical practice. Methods: A conceptual framework was designed to document cystoscopy in a standardized manner with three major sections: data management, annotation management, and utilization management. A Swiss-cheese model was proposed for quality control and root cause analyses. We defined the infrastructure required to implement the framework with respect to FAIR (findable, accessible, interoperable, reusable) principles. We applied two scenarios exemplifying data sharing for research and educational projects to verify compliance with FAIR principles. Results: The framework was successfully implemented while following FAIR principles. The cystoscopy atlas produced from the framework could be presented in an educational web portal; a total of 68 full-length qualitative videos and corresponding annotation data were sharable for artificial intelligence projects covering frame classification and segmentation problems at case, lesion, and frame levels. Conclusion: Our study shows that the proposed framework facilitates the storage of the visual documentation in a standardized manner and enables FAIR data for education and artificial intelligence research.

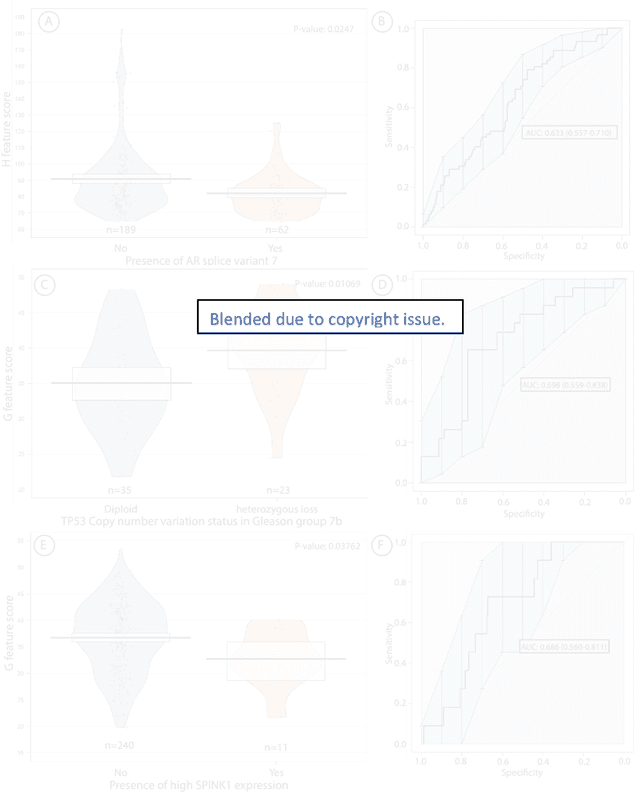

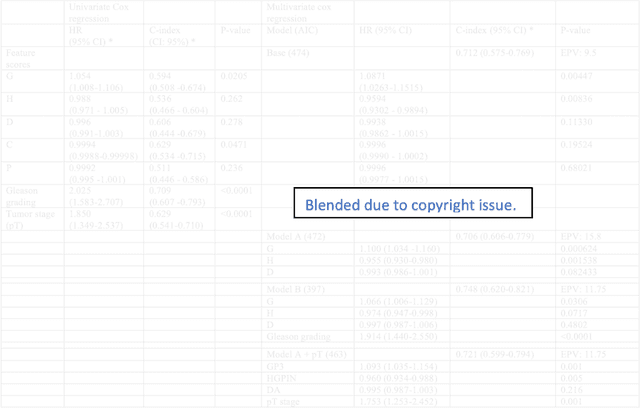

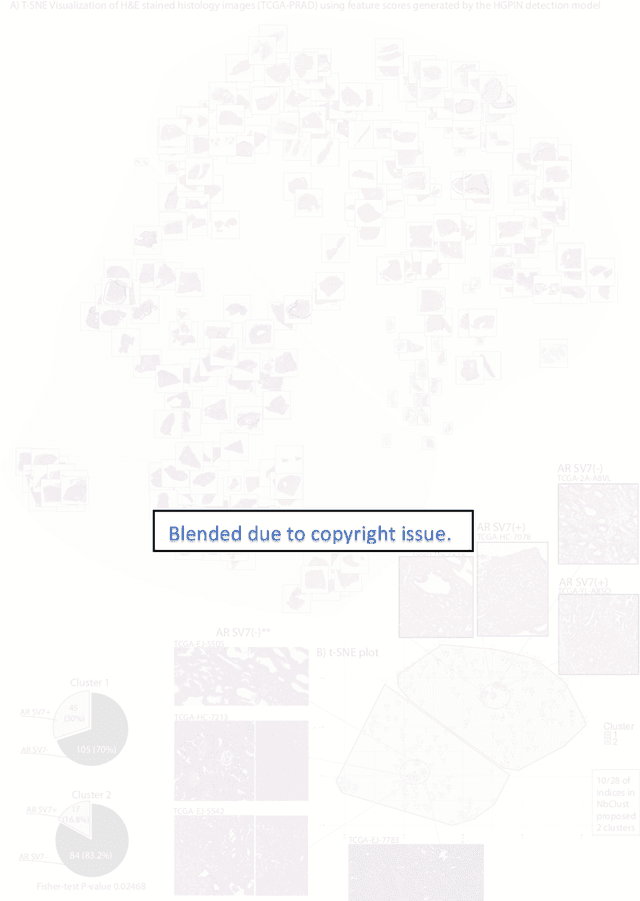

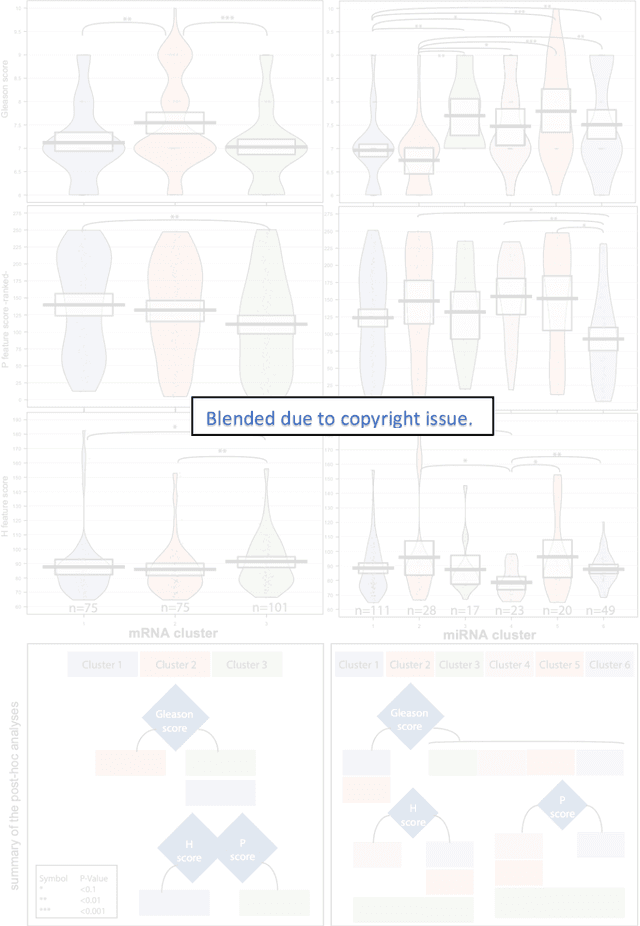

Biologic and Prognostic Feature Scores from Whole-Slide Histology Images Using Deep Learning

Oct 24, 2019

Abstract: Histopathology reflects molecular changes and provides prognostic phenotypes representing disease progression. In this study, we introduced feature scores generated from hematoxylin and eosin histology images based on deep learning (DL) models developed for prostate pathology. Using already trained DL models that were not previously exposed to the datasets of the current study, we demonstrated that these feature scores were significantly prognostic for time-to-event endpoints (biochemical recurrence and cancer-specific survival) and were simultaneously associated with relevant genomic alterations and molecular subtypes. Further, we discussed the potential of such feature scores to improve the current tumor grading system and the challenges that are associated with tumor heterogeneity and the development of prognostic models from histology images. Our findings uncover the potential of feature scores from histology images as digital biomarkers in precision medicine and as an expanding utility for digital pathology.

Deep Learning for Prostate Pathology

Oct 16, 2019

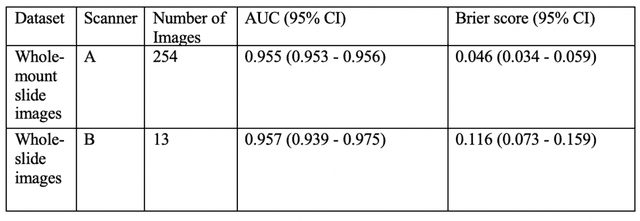

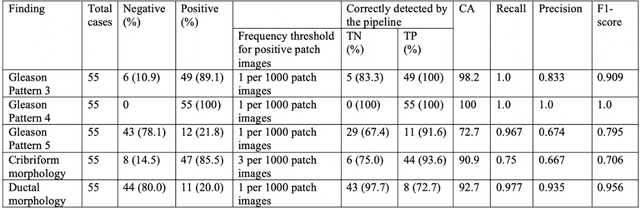

Abstract: The current study detects different morphologies related to prostate pathology using deep learning models; these models were evaluated on 2,121 hematoxylin and eosin (H&E) stain histology images captured using bright field microscopy, which spanned a variety of image qualities, origins (whole slide, tissue micro array, whole mount, Internet), scanning machines, timestamps, H&E staining protocols, and institutions. As a use case, these models were applied to the annotation tasks in clinician-oriented pathology reports for prostatectomy specimens. The true positive rate (TPR) for slides with prostate cancer was 99.7% at a false positive rate of 0.785%. The F1-scores of Gleason patterns reported in pathology reports ranged from 0.795 to 1.0 at the case level. TPR was 93.6% for the cribriform morphology and 72.6% for the ductal morphology. The correlation between the ground truth and the prediction for the relative tumor volume was 0.987. Our models cover the major components of prostate pathology and successfully accomplish the annotation tasks.
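The rates quoted in this abstract follow from a binary confusion matrix. The sketch below shows the standard definitions of TPR, FPR, and F1; the function name `detection_metrics` and the counts in the example are hypothetical and are not taken from the study.

```python
def detection_metrics(tp, fp, tn, fn):
    """Return (TPR, FPR, F1) from binary confusion-matrix counts.

    TPR = TP / (TP + FN)          sensitivity / recall
    FPR = FP / (FP + TN)          1 - specificity
    F1  = 2*TP / (2*TP + FP + FN) harmonic mean of precision and recall
    """
    if tp + fn == 0 or fp + tn == 0:
        raise ValueError("need at least one positive and one negative case")
    tpr = tp / (tp + fn)
    fpr = fp / (fp + tn)
    denominator = 2 * tp + fp + fn
    # With no true positives and no errors on positives, F1 is undefined; report 0.0.
    f1 = 2 * tp / denominator if denominator else 0.0
    return tpr, fpr, f1


# Hypothetical slide-level counts: 100 cancer slides, 100 benign slides.
tpr, fpr, f1 = detection_metrics(tp=90, fp=5, tn=95, fn=10)
```

Reporting TPR together with FPR, as the abstract does, fixes a single operating point of the classifier; a different decision threshold would trade one rate against the other.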

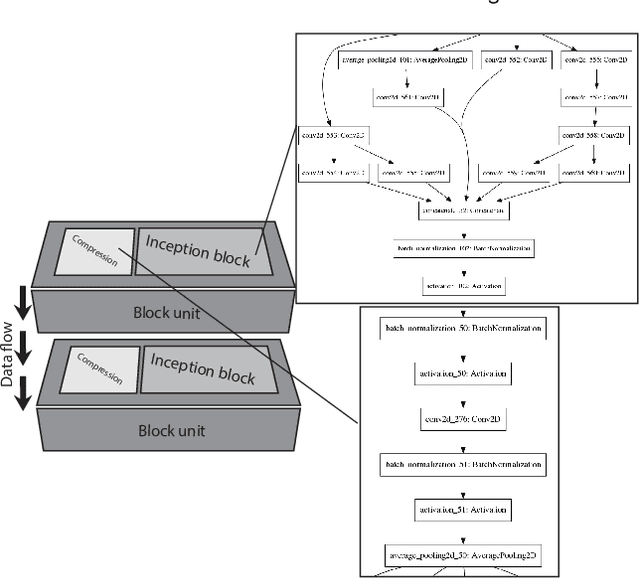

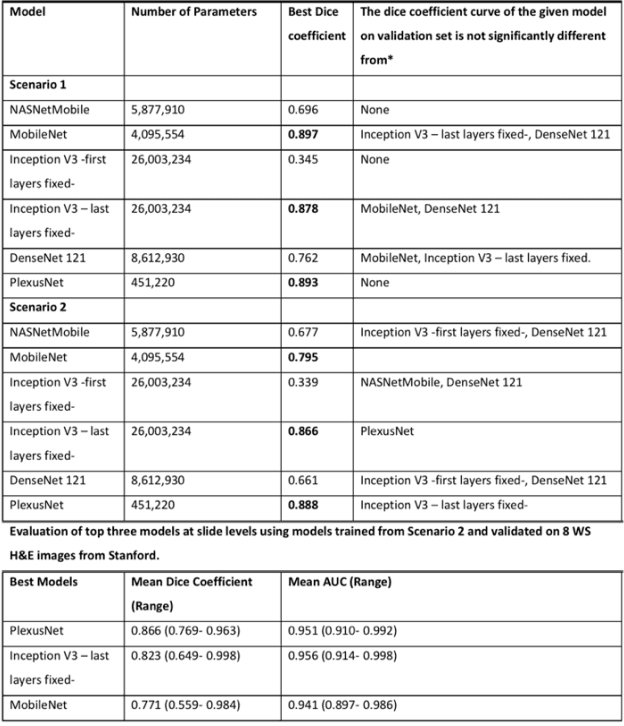

Plexus Convolutional Neural Network (PlexusNet): A novel neural network architecture for histologic image analysis

Aug 24, 2019

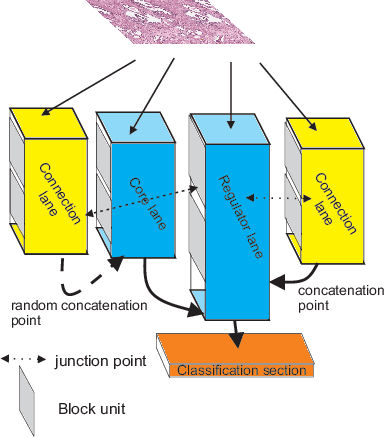

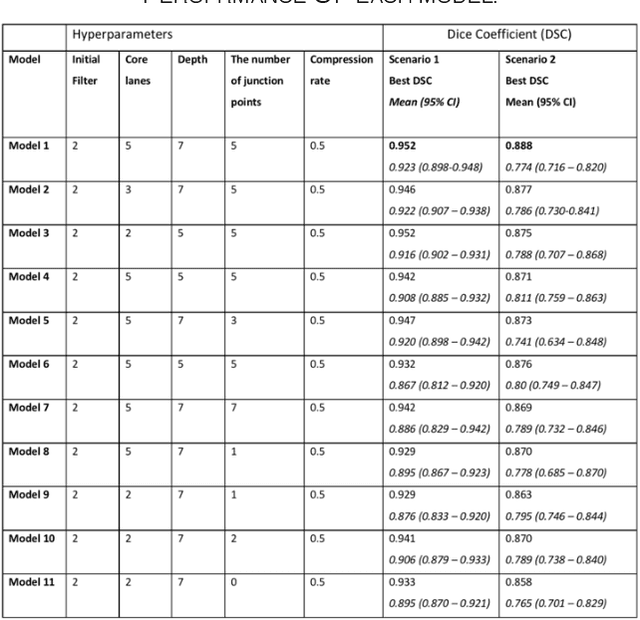

Abstract: Different convolutional neural network (CNN) models have been tested for their application in histologic imaging analyses. However, these models are prone to overfitting due to their large parameter capacity, requiring more data and expensive computational resources for model training. Given these limitations, we developed and tested PlexusNet for histologic evaluation using a single GPU with a batch dimension of 16x512x512x3. We utilized 62 hematoxylin and eosin (H&E) stained, annotated histological images of radical prostatectomy cases from TCGA-PRAD and Stanford University, and 24 H&E whole-slide images with hepatocellular carcinoma from TCGA-LIHC diagnostic histology images. The base models DenseNet, Inception V3, and MobileNet were compared with PlexusNet. The Dice coefficient (DSC) was evaluated for each model. PlexusNet delivered comparable classification performance (DSC at patch level: 0.89) for H&E whole-slide images in distinguishing prostate cancer from normal tissues. The parameter capacity of PlexusNet is 9 times smaller than that of MobileNet and 58 times smaller than that of Inception V3. Similar findings were observed in distinguishing hepatocellular carcinoma from non-cancerous liver histologies (DSC at patch level: 0.85). In conclusion, PlexusNet represents a novel model architecture for histological image analysis that achieves classification performance comparable to the base models while providing orders-of-magnitude memory savings.
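As a point of reference for the DSC values above, here is a minimal sketch of the Dice coefficient computed over binary patch labels. How the authors aggregated patches is not described in the abstract, so the flat-list input, the function name `dice_coefficient`, and the convention of returning 1.0 when both inputs contain no positives are assumptions.

```python
def dice_coefficient(predicted, truth):
    """Dice coefficient for two equal-length sequences of 0/1 labels.

    DSC = 2 * |P intersect T| / (|P| + |T|), where P and T are the sets of
    positions labeled positive in the prediction and the ground truth.
    """
    if len(predicted) != len(truth):
        raise ValueError("inputs must have the same length")

    # Positions where both the prediction and the ground truth are positive.
    intersection = sum(1 for p, t in zip(predicted, truth) if p == 1 and t == 1)
    total_positive = sum(predicted) + sum(truth)

    if total_positive == 0:
        # Assumed convention: no positives on either side counts as perfect overlap.
        return 1.0
    return 2 * intersection / total_positive


# Hypothetical patch-level labels (1 = cancer, 0 = normal tissue).
predicted_patches = [1, 1, 0, 0, 1, 0]
truth_patches = [1, 0, 0, 0, 1, 1]
dsc = dice_coefficient(predicted_patches, truth_patches)
```

For binary labels, this quantity is identical to the F1-score of the positive class.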
