Ningning Zhao

ForgeryNet -- Face Forgery Analysis Challenge 2021: Methods and Results

Dec 15, 2021

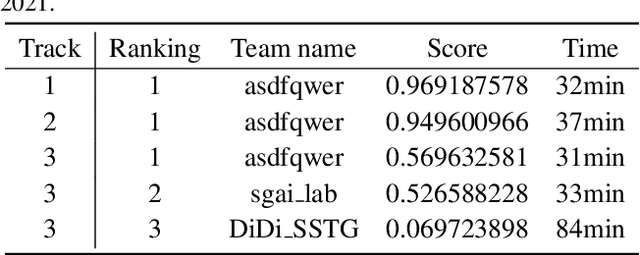

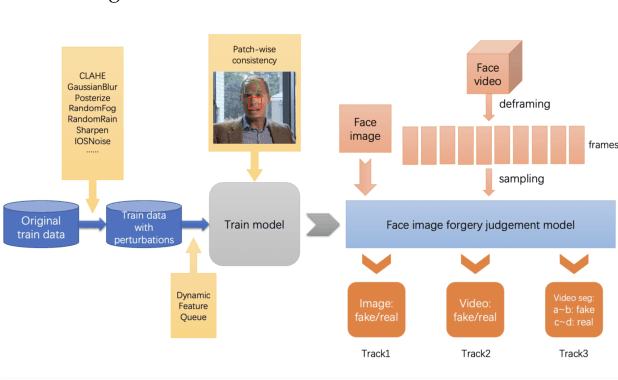

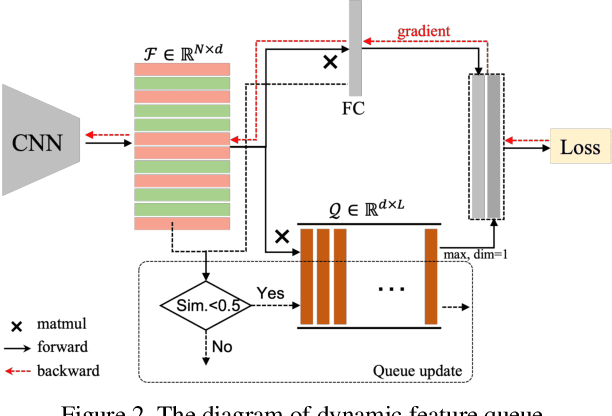

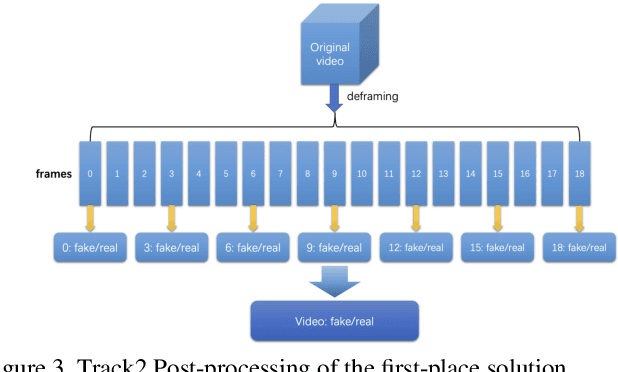

Abstract: The rapid progress of photorealistic synthesis techniques has reached a critical point where the boundary between real and manipulated images starts to blur. Recently, a mega-scale deep face forgery dataset, ForgeryNet, comprising 2.9 million images and 221,247 videos, has been released. It is by far the largest publicly available dataset in terms of data scale, manipulations (7 image-level approaches, 8 video-level approaches), perturbations (36 independent and more mixed perturbations), and annotations (6.3 million classification labels, 2.9 million manipulated area annotations, and 221,247 temporal forgery segment labels). This paper reports the methods and results of the ForgeryNet - Face Forgery Analysis Challenge 2021, which employs the ForgeryNet benchmark. Models are evaluated offline on the private test set. A total of 186 participants registered for the competition, and 11 teams made valid submissions. We analyze the top-ranked solutions and discuss directions for future work.

Fully Automated Pancreas Segmentation with Two-stage 3D Convolutional Neural Networks

Jun 05, 2019

Abstract: Because the pancreas is an abdominal organ with very large variations in shape and size, automatic and accurate pancreas segmentation is challenging for medical image analysis. In this work, we propose a fully automated two-stage framework for pancreas segmentation based on convolutional neural networks (CNNs). In the first stage, a U-Net is trained to segment the down-sampled 3D volume. A candidate region covering the pancreas is then extracted from the estimated labels. Motivated by the superior performance reported for the renowned region-based CNNs, in the second stage another 3D U-Net is trained on the candidate region generated in the first stage. We evaluated the proposed method on the NIH computed tomography (CT) dataset and verified its superiority over other state-of-the-art 2D and 3D pancreas segmentation approaches in terms of the Dice-Sørensen coefficient (DSC) on the test data. The proposed method achieves a mean DSC of 85.99%.
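The step linking the two stages (cropping a candidate region from the coarse labels) and the DSC used for evaluation can be sketched as below. This is a minimal illustration, not the authors' code: the names `dice` and `candidate_box`, the integer down-sampling factor `scale`, and the padding `margin` are hypothetical choices, since the abstract does not say how the candidate region is sized or padded.

```python
import numpy as np

def dice(pred, gt):
    """Dice-Sorensen coefficient 2|A n B| / (|A| + |B|) between two binary masks."""
    pred = np.asarray(pred, dtype=bool)
    gt = np.asarray(gt, dtype=bool)
    denom = pred.sum() + gt.sum()
    if denom == 0:
        return 1.0  # both masks empty: treated as perfect agreement (a convention)
    return 2.0 * np.logical_and(pred, gt).sum() / denom

def candidate_box(coarse_mask, scale, margin, full_shape):
    """Bounding box of the coarse (down-sampled) mask, mapped back to full resolution.

    Hypothetical helper: assumes the coarse grid is the full grid down-sampled by an
    integer factor `scale` along every axis, and pads the box by `margin` voxels.
    """
    idx = np.argwhere(coarse_mask)
    if idx.size == 0:
        return None  # the first stage found no pancreas voxels
    lo = idx.min(axis=0) * scale - margin
    hi = (idx.max(axis=0) + 1) * scale + margin
    lo = np.maximum(lo, 0)                 # clip to the volume
    hi = np.minimum(hi, full_shape)
    return tuple(slice(int(a), int(b)) for a, b in zip(lo, hi))
```

The returned slices index the full-resolution CT volume directly (e.g. `volume[box]`), giving the crop a second-stage network would be trained and evaluated on.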

Motion Compensated Dynamic MRI Reconstruction with Local Affine Optical Flow Estimation

Feb 19, 2018

Abstract: This paper proposes a novel framework for reconstructing dynamic magnetic resonance images (DMRI) with motion compensation (MC). Because of the inherent motion during DMRI acquisition, DMRI reconstruction using motion estimation/compensation (ME/MC) has been studied under a compressed sensing (CS) scheme. In this paper, by embedding an intensity-based optical flow (OF) constraint into the traditional CS scheme, we couple the DMRI reconstruction with motion field estimation. The resulting optimization problem is solved by a primal-dual algorithm with line search, chosen for its efficiency on non-differentiable problems. With the estimated motion field, the DMRI reconstruction is refined through MC. Using a multi-scale coarse-to-fine strategy, we alternately update the variables (temporal image sequences and motion vectors) and refine the image reconstruction. Moreover, the proposed framework can handle a wide class of prior information (regularizations) for DMRI reconstruction, such as sparsity, low rank and total variation. Experiments on various DMRI data, ranging from in vivo lung to cardiac datasets, show that the proposed scheme improves reconstruction quality compared with several state-of-the-art algorithms.
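In its common brightness-constancy form, an intensity-based optical flow constraint states $I_x u + I_y v + I_t \approx 0$ for motion field $(u, v)$. The NumPy sketch below computes that residual on an image sequence. The discretization (central spatial differences via `np.gradient`, forward temporal differences), the function names, and the $\ell_1$ penalty are assumptions for illustration; the abstract does not state how the paper discretizes or weights the constraint inside its CS objective.

```python
import numpy as np

def of_residual(frames, u, v):
    """Brightness-constancy residual I_x*u + I_y*v + I_t for each consecutive frame pair.

    frames: array of shape (T, H, W); u, v: horizontal/vertical motion, shape (T-1, H, W).
    """
    frames = np.asarray(frames, dtype=float)
    It = frames[1:] - frames[:-1]                   # forward temporal difference
    Iy, Ix = np.gradient(frames[:-1], axis=(1, 2))  # spatial gradients (rows = y, cols = x)
    return Ix * u + Iy * v + It

def of_penalty(frames, u, v):
    """Illustrative l1 penalty on the residual (the choice of norm is an assumption)."""
    return np.abs(of_residual(frames, u, v)).sum()
```

For a ramp pattern `frames[t, r, c] = c - t`, which moves one pixel per frame along x, the residual vanishes for `u = 1, v = 0`; in a joint formulation this penalty would be minimized over both the images and the motion field.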

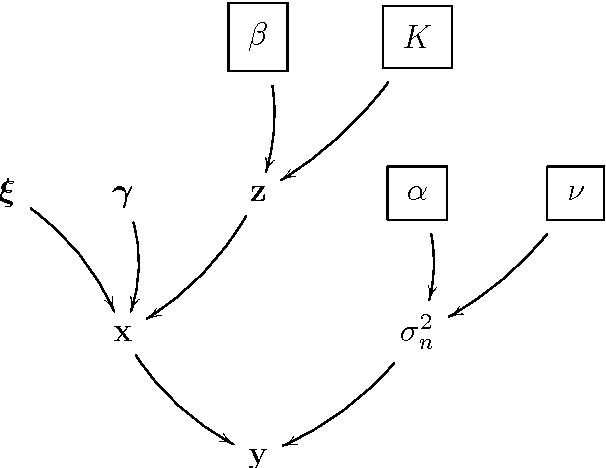

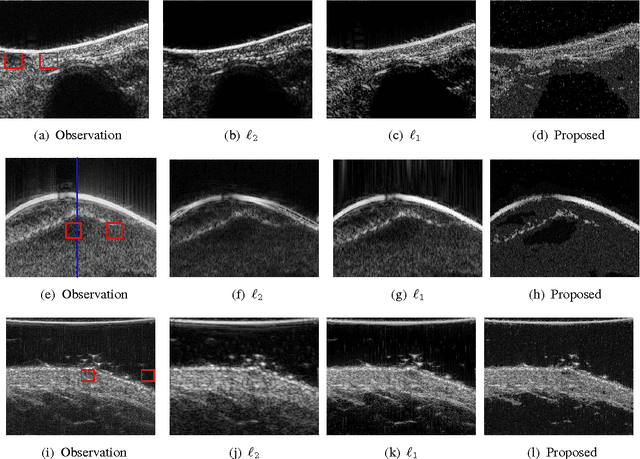

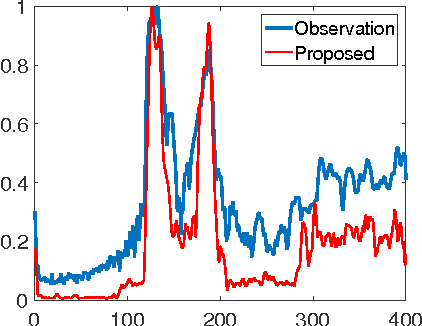

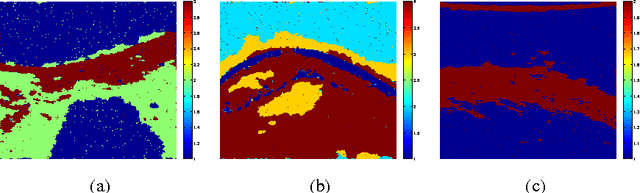

Joint Segmentation and Deconvolution of Ultrasound Images Using a Hierarchical Bayesian Model based on Generalized Gaussian Priors

May 02, 2016

Abstract: This paper proposes a joint segmentation and deconvolution Bayesian method for medical ultrasound (US) images. Unlike piecewise-homogeneous images, US images exhibit strong characteristic speckle patterns correlated with the tissue structures. The generalized Gaussian distribution (GGD) has been shown to be one of the most relevant distributions for characterizing speckle in US images. We therefore propose a GGD-Potts model defined by a label map that couples US image segmentation and deconvolution. The Bayesian estimators of the unknown model parameters, including the US image, the label map and all the hyperparameters, are difficult to express in closed form. We therefore investigate a Gibbs sampler to generate samples distributed according to the posterior of interest. These samples are then used to compute the Bayesian estimators of the unknown parameters. The performance of the proposed Bayesian model is compared with existing approaches in several experiments on realistic synthetic data and in vivo US images.
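One ingredient of such a sampler is updating the label map given the current image. The sketch below draws each label from its full conditional under a zero-mean GGD likelihood per class and a 4-neighbour Potts prior with granularity `beta`. It is a hedged illustration, not the paper's sampler: it assumes the image `x` and the per-class GGD scale/shape parameters are held fixed, whereas the paper also samples the image and the hyperparameters, and all names are hypothetical.

```python
import numpy as np
from math import lgamma, log

def ggd_logpdf(x, scale, shape):
    """Log-density of a zero-mean GGD: shape / (2*scale*Gamma(1/shape)) * exp(-(|x|/scale)**shape)."""
    return log(shape) - log(2.0 * scale) - lgamma(1.0 / shape) - (np.abs(x) / scale) ** shape

def gibbs_sweep_labels(x, z, scales, shapes, beta, rng):
    """One raster-scan Gibbs sweep over the label map z (modified in place and returned)."""
    H, W = x.shape
    K = len(scales)
    for i in range(H):
        for j in range(W):
            # Likelihood term: how well each class's GGD explains pixel x[i, j].
            logp = np.array([ggd_logpdf(x[i, j], scales[k], shapes[k]) for k in range(K)])
            # Potts prior: +beta for every 4-neighbour that currently has label k.
            for di, dj in ((-1, 0), (1, 0), (0, -1), (0, 1)):
                ni, nj = i + di, j + dj
                if 0 <= ni < H and 0 <= nj < W:
                    logp[z[ni, nj]] += beta
            p = np.exp(logp - logp.max())  # numerically stabilised, unnormalised
            z[i, j] = rng.choice(K, p=p / p.sum())
    return z
```

With shape 2 and scale $\sqrt{2}\sigma$ the GGD reduces to a Gaussian with standard deviation $\sigma$, while smaller shape values give the heavier tails used to model speckle.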

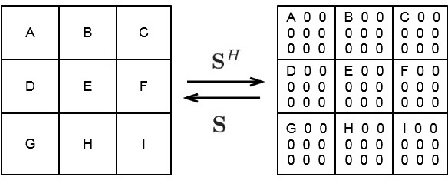

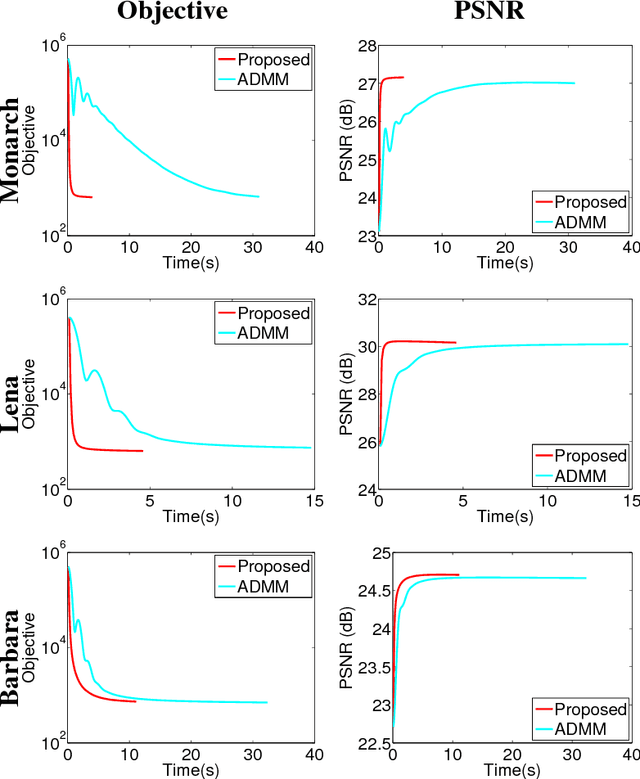

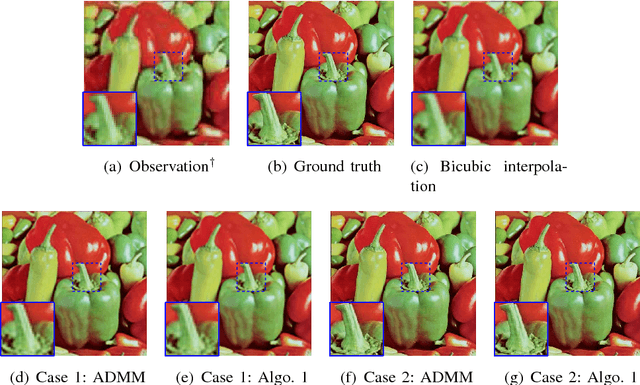

Fast Single Image Super-Resolution

May 02, 2016

Abstract: This paper addresses the problem of single image super-resolution (SR), which consists of recovering a high-resolution image from its blurred, decimated and noisy version. Existing single image SR algorithms use different strategies to handle the decimation and blurring operators. In addition to traditional first-order gradient methods, recent techniques investigate splitting-based methods that divide the SR problem into up-sampling and deconvolution steps that can be easily solved. Instead of following this splitting strategy, we propose to handle the decimation and blurring operators simultaneously by exploiting their particular properties in the frequency domain, leading to a new fast SR approach. Specifically, an analytical solution can be obtained and implemented efficiently for the Gaussian prior or any other regularization that can be formulated as an $\ell_2$-regularized quadratic model, i.e., an $\ell_2$-$\ell_2$ optimization problem. Furthermore, the flexibility of the proposed SR scheme is demonstrated with various priors/regularizations, ranging from generic image priors to learning-based approaches. For non-Gaussian priors, we show how the analytical solution derived in the Gaussian case can be embedded into traditional splitting frameworks, significantly reducing the computational cost of existing algorithms. Simulation results on several images with different priors illustrate the effectiveness of our fast SR approach compared with existing techniques.
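The frequency-domain idea can be illustrated on a 1-D analogue of the $\ell_2$-$\ell_2$ problem $\min_x \|y - SHx\|_2^2 + \tau\|x - \bar{x}\|_2^2$, where $H$ is a circular blur and $S$ keeps every $d$-th sample. Two facts make a closed form cheap: $H$ is diagonalized by the DFT, and zero-filled decimation $S^T S$ acts in the Fourier domain by averaging the $d$ spectral copies that alias onto each other, so the Woodbury identity reduces the $N \times N$ inverse to element-wise operations. This is a hedged 1-D sketch under those assumptions (circular boundary, integer factor `d`, Gaussian prior centred at `xbar`), not the paper's 2-D implementation; `fsr_1d` is a hypothetical name.

```python
import numpy as np

def fsr_1d(y, h, d, tau, xbar):
    """Closed-form minimiser of ||y - S H x||^2 + tau * ||x - xbar||^2 (1-D, circular blur).

    y: low-resolution signal of length M; h: blur kernel (origin at index 0);
    d: integer decimation factor, so the high-resolution length is N = d * M.
    """
    M = y.size
    N = d * M
    hh = np.fft.fft(h, N)                  # eigenvalues of the circulant blur H
    z = np.zeros(N)
    z[::d] = y                             # S^T y: zero-filled up-sampling
    # Fourier transform of the right-hand side r = H^T S^T y + tau * xbar
    R = np.conj(hh) * np.fft.fft(z) + tau * np.fft.fft(xbar)
    # Frequencies k, k + M, ..., k + (d-1)M alias together under decimation,
    # so group the spectrum into d blocks of length M.
    Hb = hh.reshape(d, M)
    Rb = R.reshape(d, M)
    num = np.sum(Hb * Rb, axis=0)                    # block-wise sum of H-hat * R
    den = tau * d + np.sum(np.abs(Hb) ** 2, axis=0)  # tau*d + block-wise sum of |H-hat|^2
    # Woodbury: (tau I + B^H B / d)^{-1} R = (R - B^H [(B R) / den]) / tau
    X = (R - np.conj(hh) * np.tile(num / den, d)) / tau
    return np.real(np.fft.ifft(X))
```

Every step is an FFT or an element-wise operation, so the cost is $O(N \log N)$ instead of the $O(N^3)$ of a dense solve of the normal equations; the result matches that dense solve up to floating-point error.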
