Nikolay Kutuzov

AdLoCo: adaptive batching significantly improves communications efficiency and convergence for Large Language Models

Aug 25, 2025Abstract:Scaling distributed training of Large Language Models (LLMs) requires not only algorithmic advances but also efficient utilization of heterogeneous hardware resources. While existing methods such as DiLoCo have demonstrated promising results, they often fail to fully exploit computational clusters under dynamic workloads. To address this limitation, we propose a three-stage method that combines Multi-Instance Training (MIT), Adaptive Batched DiLoCo, and switch mode mechanism. MIT allows individual nodes to run multiple lightweight training streams with different model instances in parallel and merge them to combine knowledge, increasing throughput and reducing idle time. Adaptive Batched DiLoCo dynamically adjusts local batch sizes to balance computation and communication, substantially lowering synchronization delays. Switch mode further stabilizes training by seamlessly introducing gradient accumulation once adaptive batch sizes grow beyond hardware-friendly limits. Together, these innovations improve both convergence speed and system efficiency. We also provide a theoretical estimate of the number of communications required for the full convergence of a model trained using our method.

Exploring Applications of State Space Models and Advanced Training Techniques in Sequential Recommendations: A Comparative Study on Efficiency and Performance

Aug 10, 2024

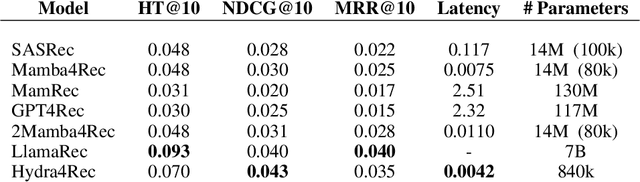

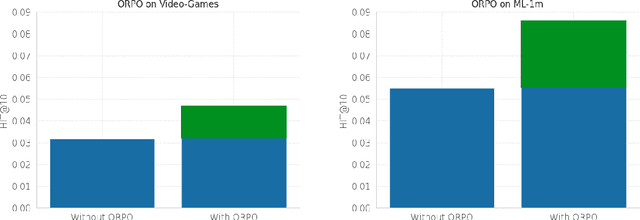

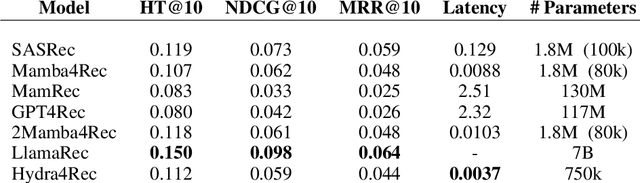

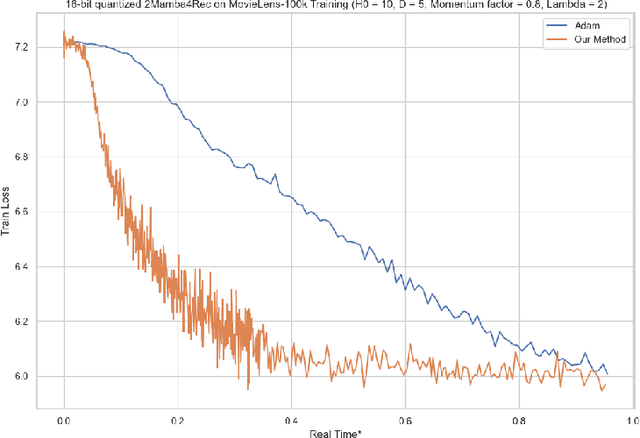

Abstract:Recommender systems aim to estimate the dynamically changing user preferences and sequential dependencies between historical user behaviour and metadata. Although transformer-based models have proven to be effective in sequential recommendations, their state growth is proportional to the length of the sequence that is being processed, which makes them expensive in terms of memory and inference costs. Our research focused on three promising directions in sequential recommendations: enhancing speed through the use of State Space Models (SSM), as they can achieve SOTA results in the sequential recommendations domain with lower latency, memory, and inference costs, as proposed by arXiv:2403.03900 improving the quality of recommendations with Large Language Models (LLMs) via Monolithic Preference Optimization without Reference Model (ORPO); and implementing adaptive batch- and step-size algorithms to reduce costs and accelerate training processes.

Breaking the Heavy-Tailed Noise Barrier in Stochastic Optimization Problems

Nov 07, 2023

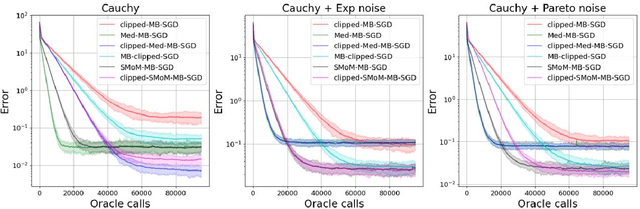

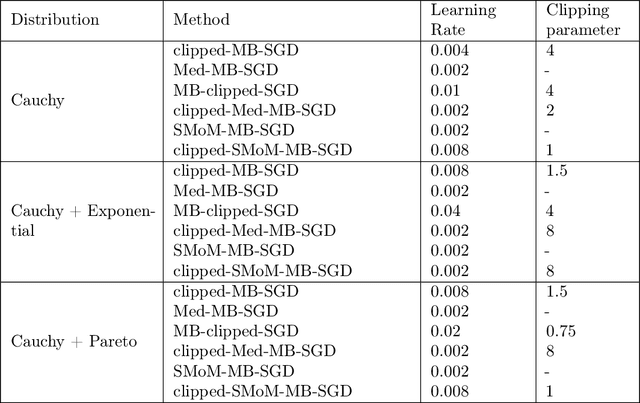

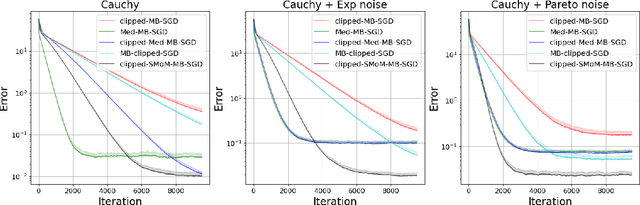

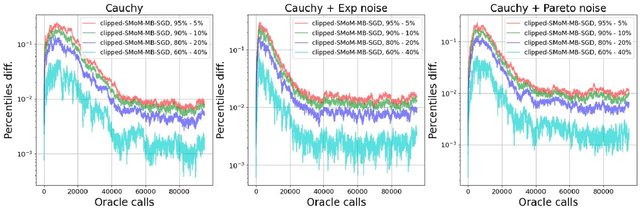

Abstract:We consider stochastic optimization problems with heavy-tailed noise with structured density. For such problems, we show that it is possible to get faster rates of convergence than $\mathcal{O}(K^{-2(\alpha - 1)/\alpha})$, when the stochastic gradients have finite moments of order $\alpha \in (1, 2]$. In particular, our analysis allows the noise norm to have an unbounded expectation. To achieve these results, we stabilize stochastic gradients, using smoothed medians of means. We prove that the resulting estimates have negligible bias and controllable variance. This allows us to carefully incorporate them into clipped-SGD and clipped-SSTM and derive new high-probability complexity bounds in the considered setup.

Implicitly normalized forecaster with clipping for linear and non-linear heavy-tailed multi-armed bandits

May 19, 2023

Abstract:The Implicitly Normalized Forecaster (INF) algorithm is considered to be an optimal solution for adversarial multi-armed bandit (MAB) problems. However, most of the existing complexity results for INF rely on restrictive assumptions, such as bounded rewards. Recently, a related algorithm was proposed that works for both adversarial and stochastic heavy-tailed MAB settings. However, this algorithm fails to fully exploit the available data. In this paper, we propose a new version of INF called the Implicitly Normalized Forecaster with clipping (INF-clip) for MAB problems with heavy-tailed reward distributions. We establish convergence results under mild assumptions on the rewards distribution and demonstrate that INF-clip is optimal for linear heavy-tailed stochastic MAB problems and works well for non-linear ones. Furthermore, we show that INF-clip outperforms the best-of-both-worlds algorithm in cases where it is difficult to distinguish between different arms.

Deep Learning-Assisted Localisation of Nanoparticles in synthetically generated two-photon microscopy images

Mar 17, 2023Abstract:Tracking single molecules is instrumental for quantifying the transport of molecules and nanoparticles in biological samples, e.g., in brain drug delivery studies. Existing intensity-based localisation methods are not developed for imaging with a scanning microscope, typically used for in vivo imaging. Low signal-to-noise ratios, movement of molecules out-of-focus, and high motion blur on images recorded with scanning two-photon microscopy (2PM) in vivo pose a challenge to the accurate localisation of molecules. Using data-driven models is challenging due to low data volumes, typical for in vivo experiments. We developed a 2PM image simulator to supplement scarce training data. The simulator mimics realistic motion blur, background fluorescence, and shot noise observed in vivo imaging. Training a data-driven model with simulated data improves localisation quality in simulated images and shows why intensity-based methods fail.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge