Nicolai Oetter

Few Shot Learning for the Classification of Confocal Laser Endomicroscopy Images of Head and Neck Tumors

Nov 13, 2023

Abstract: The surgical removal of head and neck tumors requires safe margins, which are usually confirmed intraoperatively by means of frozen sections. This method is, in itself, an oversampling procedure, which has a relatively low sensitivity compared to the definitive tissue analysis on paraffin-embedded sections. Confocal laser endomicroscopy (CLE) is an in vivo imaging technique that has shown its potential in the live optical biopsy of tissue. An automated analysis of this notoriously difficult-to-interpret modality would help surgeons. However, CLE images show a wide variability of patterns, caused both by individual factors and, most strongly, by the anatomical structures of the imaged tissue, which makes their classification a challenging pattern recognition task. In this work, we evaluate four popular few-shot learning (FSL) methods with respect to their capability of generalizing to unseen anatomical domains in CLE images. We evaluate this on images of sinonasal tumors (SNT) from five patients and on images of the vocal folds (VF) from 11 patients using a cross-validation scheme. The best respective approach reached a median accuracy of 79.6% on the rather homogeneous VF dataset, but only 61.6% on the highly diverse SNT dataset. Our results indicate that FSL on CLE images is viable, but strongly affected by the number of patients as well as the diversity of anatomical patterns.
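The abstract does not say which cross-validation scheme was used. With so few patients, one common choice is to split by patient so that no patient contributes images to both training and test sets. The following is a minimal sketch of such a leave-one-patient-out split; the function name and the toy patient IDs are hypothetical and not taken from the paper.

```python
from collections import defaultdict


def leave_one_patient_out(patient_ids):
    """Yield (held_out_patient, train_indices, test_indices), one fold per patient.

    patient_ids[i] is the patient that image i belongs to.
    """
    by_patient = defaultdict(list)
    for idx, pid in enumerate(patient_ids):
        by_patient[pid].append(idx)

    for held_out in sorted(by_patient):
        test_idx = by_patient[held_out]
        # All images from every other patient form the training set.
        train_idx = sorted(
            i for pid, idxs in by_patient.items() if pid != held_out for i in idxs
        )
        yield held_out, train_idx, test_idx


# Hypothetical toy data: six images from three patients.
patient_ids = ["P1", "P1", "P2", "P3", "P3", "P3"]
folds = list(leave_one_patient_out(patient_ids))
for held_out, train_idx, test_idx in folds:
    print(held_out, train_idx, test_idx)
```

Splitting by patient rather than by image avoids the leakage that arises when highly similar frames from one recording end up on both sides of the split.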

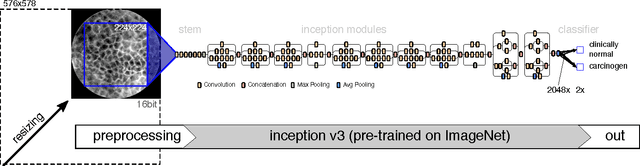

Transferability of Deep Learning Algorithms for Malignancy Detection in Confocal Laser Endomicroscopy Images from Different Anatomical Locations of the Upper Gastrointestinal Tract

Feb 24, 2019

Abstract: Squamous Cell Carcinoma (SCC) is the most common cancer type of the epithelium and is often detected at a late stage. Besides invasive diagnosis of SCC by means of biopsy and histopathologic assessment, Confocal Laser Endomicroscopy (CLE) has emerged as a noninvasive method that has been used successfully to diagnose SCC in vivo. Interpreting CLE images, however, requires extensive training, which limits the method's applicability and use in clinical practice. To aid diagnosis of SCC in a broader scope, automatic detection methods have been proposed. This work compares two methods with regard to their applicability in a transfer learning sense, i.e. training on one tissue type (from one clinical team) and applying the learnt classification system to another entity (different anatomy, different clinical team). Besides a previously proposed patch-based method based on convolutional neural networks, a novel image-level classification method (based on a pre-trained Inception V.3 network with dedicated preprocessing and interpretation of class activation maps) is proposed and evaluated. The newly presented approach improves recognition performance, yielding accuracies of 91.63% on the first data set (oral cavity) and 92.63% on a joint data set. The generalization from oral cavity to the second data set (vocal folds) led to area-under-the-ROC-curve values similar to those of direct training on the vocal folds data set, indicating good generalization.

Motion Artifact Detection in Confocal Laser Endomicroscopy Images

May 04, 2018

Abstract: Confocal Laser Endomicroscopy (CLE), an optical imaging technique allowing non-invasive examination of the mucosa on a (sub)cellular level, has proven to be a valuable diagnostic tool in gastroenterology and shows promising results in various anatomical regions including the oral cavity. Recently, the feasibility of automatic carcinoma detection for CLE images of sufficient quality was shown. However, in real-world data sets a large number of CLE images are corrupted by artifacts. Amongst the most prevalent artifact types are motion-induced image deteriorations. In the scope of this work, algorithmic approaches for the automatic detection of motion artifact-tainted image regions were developed. Hence, this work provides an important step towards clinical applicability of automatic carcinoma detection. Both conventional machine learning and novel deep learning-based approaches were assessed. The deep learning-based approach outperforms the conventional approaches, attaining an AUC of 0.90.
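Several of the abstracts on this page report an AUC. As a reminder of what that number measures, the sketch below computes the area under the ROC curve as the probability that a randomly chosen positive sample scores higher than a randomly chosen negative one (ties count half). The labels and scores are hypothetical toy values, not data from any of the papers.

```python
def roc_auc(labels, scores):
    """Area under the ROC curve via pairwise comparison of positives and negatives."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative sample")

    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0   # positive ranked above negative
            elif p == n:
                wins += 0.5   # tie counts as half
    return wins / (len(pos) * len(neg))


# Hypothetical toy data: 1 = artifact, 0 = clean.
labels = [1, 1, 0, 1, 0, 0]
scores = [0.9, 0.8, 0.7, 0.6, 0.4, 0.2]
auc = roc_auc(labels, scores)
print(round(auc, 3))
```

The pairwise form is quadratic in the number of samples, which is fine for illustration; rank-based implementations are preferable for large data sets.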

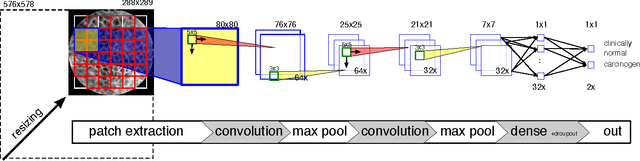

Patch-based Carcinoma Detection on Confocal Laser Endomicroscopy Images - A Cross-Site Robustness Assessment

Jul 25, 2017

Abstract: Deep learning technologies such as convolutional neural networks (CNN) provide powerful methods for image recognition and have recently been employed in the field of automated carcinoma detection in confocal laser endomicroscopy (CLE) images. CLE is a (sub-)surface microscopic imaging technique that reaches magnifications of up to 1000x and is thus suitable for in vivo structural tissue analysis. In this work, we evaluate how well a previously developed deep learning-based algorithm for identifying oral squamous cell carcinoma generalizes to squamous cell carcinomas at other anatomic locations in the head and neck area. We applied the algorithm to images acquired from the vocal fold area of five patients with histologically verified squamous cell carcinoma and to presumably healthy control images of the clinically normal contralateral vocal cord. We find that the network trained on the oral cavity data reaches an accuracy of 89.45% and an area-under-the-curve (AUC) value of 0.955 when applied to the vocal cords data. Compared to the state of the art, we achieve very similar results, yet with an algorithm that was trained on a completely disjoint data set. Concatenating both data sets yielded further improvements in cross-validation, with an accuracy of 90.81% and an AUC of 0.970. In this study, for the first time to our knowledge, a deep learning mechanism for the identification of oral carcinomas using CLE images could be applied to other disciplines in the area of head and neck. This study shows the prospect of the algorithmic approach to generalize well to other malignant entities of the head and neck, regardless of the anatomical location and, furthermore, in an examiner-independent manner.

* 8 pages, 8 figures, submitted to BIOIMAGING 2018

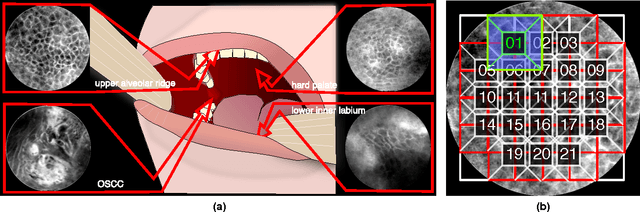

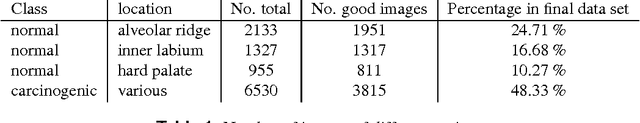

Automatic Classification of Cancerous Tissue in Laserendomicroscopy Images of the Oral Cavity using Deep Learning

Mar 10, 2017

Abstract: Oral Squamous Cell Carcinoma (OSCC) is a common type of cancer of the oral epithelium. Despite the high impact of OSCC on mortality, screening methods for its early diagnosis often lack accuracy, and thus OSCCs are mostly diagnosed at a late stage. Early detection and accurate outline estimation of OSCCs would lead to a better curative outcome and a reduction in recurrence rates after surgical treatment. Confocal Laser Endomicroscopy (CLE) records sub-surface micro-anatomical images for in vivo cell structure analysis. Recent CLE studies showed great prospects for reliable, real-time ultrastructural imaging of OSCC in situ. We present and evaluate a novel automatic approach for highly accurate OSCC diagnosis using deep learning technologies on CLE images. The method is compared against textural feature-based machine learning approaches that represent the current state of the art. For this work, CLE image sequences (7894 images) from patients diagnosed with OSCC were obtained from 4 specific locations in the oral cavity, including the OSCC lesion. The present approach is found to outperform the state of the art in CLE image recognition with an area under the curve (AUC) of 0.96 and a mean accuracy of 88.3% (sensitivity 86.6%, specificity 90%).
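For readers less familiar with the reported metrics, the following sketch shows how sensitivity, specificity, and accuracy are derived from the four cells of a binary confusion matrix. The counts are hypothetical and chosen only for easy arithmetic; they are not the paper's data, and the paper's exact averaging procedure is not described in the abstract.

```python
def binary_metrics(tp, fn, tn, fp):
    """Return sensitivity, specificity, and accuracy from confusion-matrix counts."""
    sensitivity = tp / (tp + fn)   # share of carcinoma images correctly detected
    specificity = tn / (tn + fp)   # share of healthy images correctly rejected
    accuracy = (tp + tn) / (tp + fn + tn + fp)
    return {
        "sensitivity": sensitivity,
        "specificity": specificity,
        "accuracy": accuracy,
    }


# Hypothetical counts: 100 carcinoma images and 100 healthy images.
metrics = binary_metrics(tp=90, fn=10, tn=80, fp=20)
for name, value in metrics.items():
    print(f"{name}: {value:.3f}")
```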

* 12 pages, 5 figures
