Nico Schmidt

A Self-Supervised Feature Map Augmentation (FMA) Loss and Combined Augmentations Finetuning to Efficiently Improve the Robustness of CNNs

Dec 02, 2020

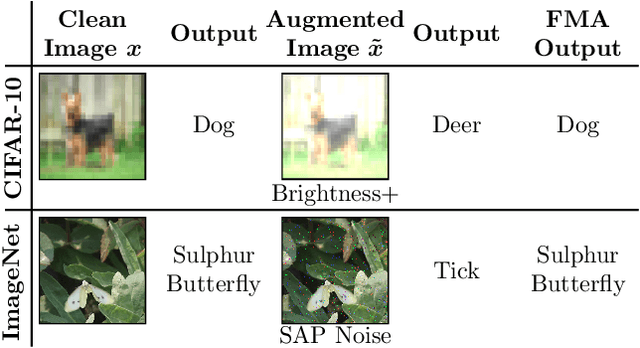

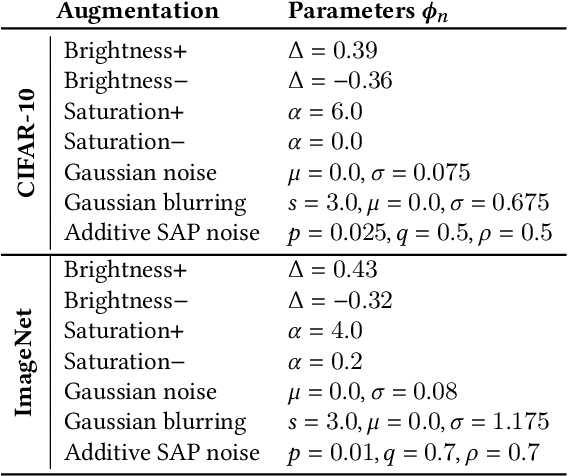

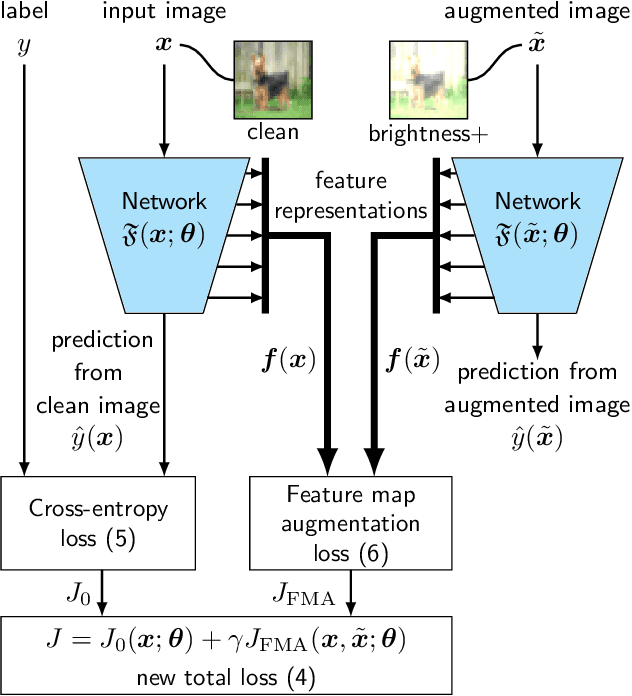

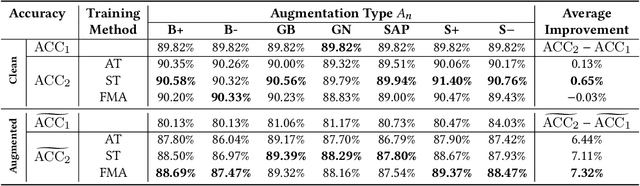

Abstract: Deep neural networks are often not robust to semantically irrelevant changes in the input. In this work we address the robustness of state-of-the-art deep convolutional neural networks (CNNs) against commonly occurring input distortions such as photometric changes or the addition of blur and noise. These changes in the input are often accounted for during training in the form of data augmentation. We make two major contributions. First, we propose a new regularization loss called feature-map augmentation (FMA) loss, which can be used during finetuning to make a model robust to several distortions in the input. Second, we propose a new combined augmentations (CA) finetuning strategy that results in a single model that is robust to several augmentation types at the same time in a data-efficient manner. We use the CA strategy to improve an existing state-of-the-art method called stability training (ST). Using CA on an image classification task with distorted images, we achieve an average absolute accuracy improvement of 8.94% with FMA and 8.86% with ST on CIFAR-10, and 8.04% with FMA and 8.27% with ST on ImageNet, compared to 1.98% and 2.12%, respectively, with the well-known data augmentation method, while keeping the clean baseline performance.

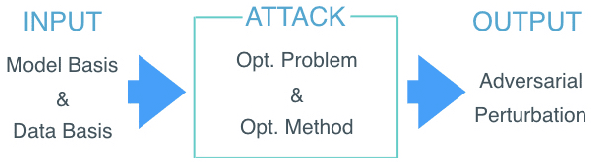

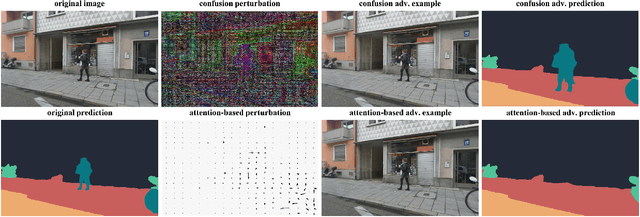

The Attack Generator: A Systematic Approach Towards Constructing Adversarial Attacks

Jun 17, 2019

Abstract: Most state-of-the-art machine learning (ML) classification systems are vulnerable to adversarial perturbations. As a consequence, adversarial robustness poses a significant challenge for the deployment of ML-based systems in safety- and security-critical environments such as autonomous driving, disease detection, or unmanned aerial vehicles. In recent years we have seen an impressive number of publications presenting more and more new adversarial attacks. However, attack research seems to be rather unstructured, and new attacks often appear to be random selections from the unlimited set of possible adversarial attacks. With this publication, we present a structured analysis of the adversarial attack creation process. By identifying the different building blocks of adversarial attacks, we outline the road to new sets of adversarial attacks. We call this the "attack generator". In pursuit of this objective, we summarize and extend existing adversarial perturbation taxonomies. The resulting taxonomy is then linked to the application context of computer vision systems for autonomous vehicles, i.e. semantic segmentation and object detection. Finally, to demonstrate the usefulness of the attack generator, we investigate existing semantic segmentation attacks with respect to the identified defining components of adversarial attacks.

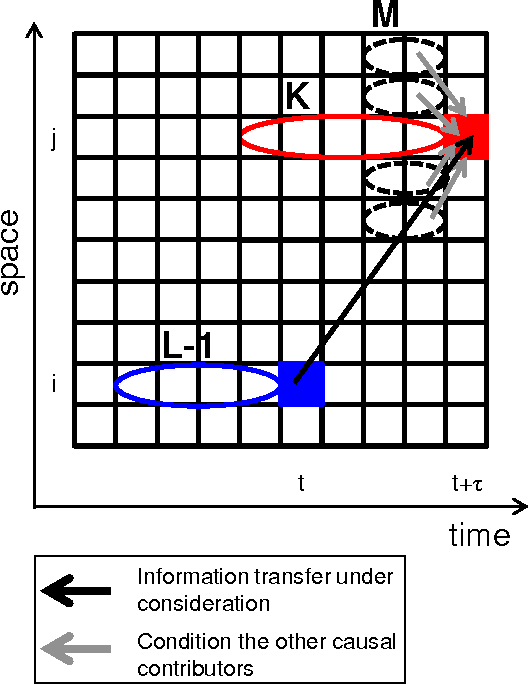

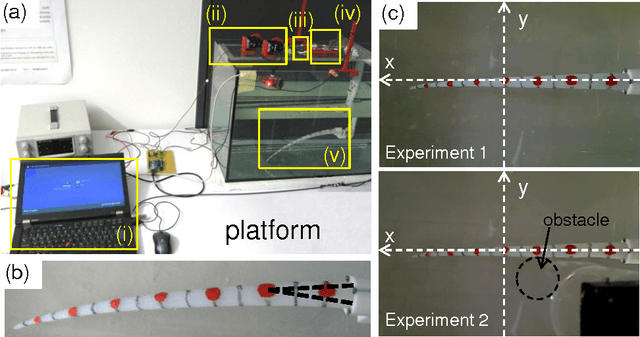

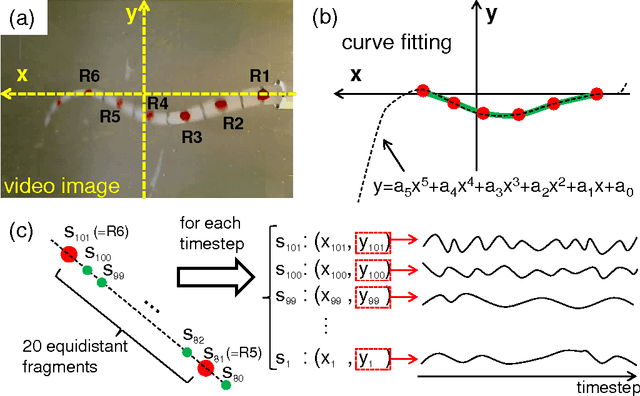

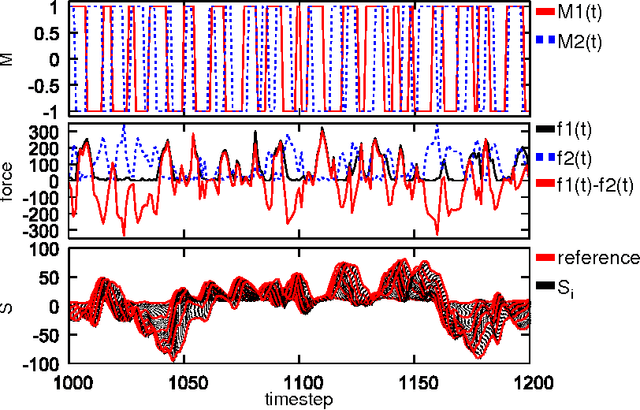

Measuring information transfer in a soft robotic arm

Jul 15, 2014

Abstract: Soft robots can exhibit diverse behaviors with simple types of actuation by partially outsourcing control to the morphological and material properties of their soft bodies, which is made possible by the tight coupling between control, body, and environment. In this paper, we present a method that quantitatively characterizes these diverse spatiotemporal dynamics of a soft body using an information-theoretic approach. In particular, soft bodies can propagate the effect of actuation through the entire body, with a certain time delay, due to their elasticity. Our goal is to capture this delayed interaction quantitatively using a measure called momentary information transfer. We extend this measure to soft robotic applications and demonstrate its power using a physical soft robotic platform inspired by the octopus. Our approach is illustrated in two ways. First, we statistically characterize the delayed actuation propagation through the body as a strength of information transfer. Second, we capture this information propagation directly as local information dynamics. As a result, we show that our approach can successfully characterize the spatiotemporal dynamics of the soft robotic platform, explicitly visualizing how information transfers through the entire body with delays. Further extensions of our approach are discussed for soft robotic applications in general.
